Port-channel load-balance bug in 3164Q in case of VXLAN-bridged source

09-18-2021 02:38 AM - edited 09-18-2021 02:40 AM

Hello,

I believe I have found a port-channel load-balancing bug. Unfortunately I don't have a TAC account, so I can't reach out to them for an official diagnosis.

However, I think it's important and severe, so it would be good if it could be reproduced and dealt with if possible...

The issue can be seen on the Nexus 3164Q when load balancing towards switchport port-channels. If the outbound traffic towards the port-channel first ingressed the switch through a VXLAN-bridged VLAN, the switch seems to treat it all as broadcast and egresses it through just one of the port-channel member interfaces. If the same traffic ingressed the switch through a switchport interface, the issue does not occur.

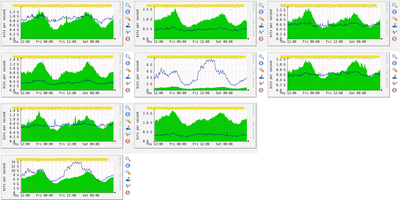

Here is a snippet from our Cacti graphs. It shows the utilization of 8x10G interfaces bundled in a port-channel between a 3164Q and an N7000. As you can see, ingress from the N7K is evenly balanced, with each port carrying about 1.4G to 1.5G. However, the 3164 egresses quite different traffic volumes on each port towards the N7K. The reason for the difference is that approx. 60% of the traffic towards the N7K ingressed through a VXLAN-bridged VLAN, while the other 40% ingressed through other switchports on the same 3164. In other words, traffic from VXLAN-bridged VLANs never seems to be split across the members of the port-channel it egresses through.

We have reproduced this on multiple 3164Q units running 7.0.3.I7.8, 7.0.3.I7.9, 9.3.6 and 9.3.7, and the result has always been the same.

3164Q# show port-channel traffic interface port-channel 71

NOTE: Clear the port-channel member counters to get accurate statistics

| ChanId | Port | Rx-Ucst | Tx-Ucst | Rx-Mcst | Tx-Mcst | Rx-Bcst | Tx-Bcst |
|---|---|---|---|---|---|---|---|
| 71 | Eth1/27/1 | 12.36% | 41.05% | 11.97% | 0.02% | 31.53% | 17.12% |
| 71 | Eth1/27/2 | 12.79% | 4.99% | 11.03% | 0.00% | 0.37% | 0.00% |
| 71 | Eth1/37/1 | 12.29% | 3.44% | 14.31% | 23.67% | 0.97% | 80.50% |
| 71 | Eth1/37/2 | 12.63% | 14.22% | 13.48% | 24.62% | 2.09% | 0.34% |
| 71 | Eth1/57/1 | 12.29% | 25.91% | 13.19% | 27.70% | 7.77% | 0.54% |
| 71 | Eth1/57/2 | 12.63% | 3.80% | 11.96% | 23.96% | 6.33% | 0.41% |
| 71 | Eth1/47/1 | 12.56% | 3.22% | 12.93% | 0.00% | 0.17% | 0.52% |
| 71 | Eth1/47/2 | 12.42% | 3.32% | 11.09% | 0.00% | 50.74% | 0.54% |

However, if I run the same command on the N7K at the other side of port-channel 71, I don't see any large Tx-Bcst percentages like the one we see on Eth1/37/1.

Nexus7000# show port-channel traffic interface port-channel 71

NOTE: Clear the port-channel member counters to get accurate statistics

| ChanId | Port | Rx-Ucst | Tx-Ucst | Rx-Mcst | Tx-Mcst | Rx-Bcst | Tx-Bcst |
|---|---|---|---|---|---|---|---|
| 71 | Eth1/1 | 17.33% | 6.17% | 0.00% | 5.71% | 2.81% | 13.00% |
| 71 | Eth1/2 | 2.56% | 6.42% | 1.75% | 6.07% | 6.51% | 9.88% |
| 71 | Eth2/7 | 1.93% | 6.14% | 5.68% | 6.75% | 16.31% | 1.52% |
| 71 | Eth2/8 | 6.33% | 6.27% | 3.94% | 6.34% | 0.51% | 1.14% |
| 71 | Eth3/13 | 1.85% | 6.25% | 0.00% | 7.51% | 1.50% | 0.24% |
| 71 | Eth3/14 | 5.19% | 6.18% | 0.00% | 6.03% | 0.27% | 16.01% |
| 71 | Eth4/19 | 11.09% | 6.15% | 6.23% | 6.42% | 1.39% | 3.14% |
| 71 | Eth4/20 | 3.22% | 6.27% | 5.52% | 6.02% | 5.40% | 2.72% |
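In case it helps anyone compare the two sides quickly, here is a small Python sketch that takes the member rows from the two `show port-channel traffic` outputs in this post and reports the lowest and highest share for a given column. The helper names (`parse_rows`, `spread`) are my own, and the parser assumes the whitespace-separated row layout that the CLI prints.

```python
# Member rows copied from the two "show port-channel traffic" outputs in this post.
SHOW_3164Q = """\
71 Eth1/27/1 12.36% 41.05% 11.97% 0.02% 31.53% 17.12%
71 Eth1/27/2 12.79% 4.99% 11.03% 0.00% 0.37% 0.00%
71 Eth1/37/1 12.29% 3.44% 14.31% 23.67% 0.97% 80.50%
71 Eth1/37/2 12.63% 14.22% 13.48% 24.62% 2.09% 0.34%
71 Eth1/57/1 12.29% 25.91% 13.19% 27.70% 7.77% 0.54%
71 Eth1/57/2 12.63% 3.80% 11.96% 23.96% 6.33% 0.41%
71 Eth1/47/1 12.56% 3.22% 12.93% 0.00% 0.17% 0.52%
71 Eth1/47/2 12.42% 3.32% 11.09% 0.00% 50.74% 0.54%
"""

SHOW_N7K = """\
71 Eth1/1 17.33% 6.17% 0.00% 5.71% 2.81% 13.00%
71 Eth1/2 2.56% 6.42% 1.75% 6.07% 6.51% 9.88%
71 Eth2/7 1.93% 6.14% 5.68% 6.75% 16.31% 1.52%
71 Eth2/8 6.33% 6.27% 3.94% 6.34% 0.51% 1.14%
71 Eth3/13 1.85% 6.25% 0.00% 7.51% 1.50% 0.24%
71 Eth3/14 5.19% 6.18% 0.00% 6.03% 0.27% 16.01%
71 Eth4/19 11.09% 6.15% 6.23% 6.42% 1.39% 3.14%
71 Eth4/20 3.22% 6.27% 5.52% 6.02% 5.40% 2.72%
"""

COLUMNS = ["Rx-Ucst", "Tx-Ucst", "Rx-Mcst", "Tx-Mcst", "Rx-Bcst", "Tx-Bcst"]


def parse_rows(text):
    """Return {port: [six percentages]} for every member row in the text."""
    rows = {}
    for line in text.splitlines():
        parts = line.split()
        # A member row is: ChanId, Port, then six percentage columns.
        if len(parts) != 8 or not parts[0].isdigit():
            continue
        rows[parts[1]] = [float(p.rstrip("%")) for p in parts[2:]]
    return rows


def spread(rows, column):
    """Return (lowest, highest) share across members for one column."""
    idx = COLUMNS.index(column)
    values = [shares[idx] for shares in rows.values()]
    return min(values), max(values)


if __name__ == "__main__":
    for name, text in (("3164Q", SHOW_3164Q), ("N7K", SHOW_N7K)):
        low, high = spread(parse_rows(text), "Tx-Ucst")
        print(f"{name}: Tx-Ucst ranges from {low}% to {high}% per member")
```

On the 3164Q the Tx-Ucst share per member ranges from 3.22% to 41.05%, while on the N7K it stays between 6.14% and 6.42%.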

The port-channel balance algorithm in effect is:

3164Q# show port-channel load-balance

System config:

Non-IP: src-dst mac

IP: src-dst ip-l4port-vlan rotate 0

Port Channel Load-Balancing Configuration for all modules:

Module 1:

Non-IP: src-dst mac

IP: src-dst ip-l4port-vlan rotate 0
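For readers less familiar with port-channel hashing, here is a rough Python sketch of why the hash inputs matter. It is not the 3164Q's real algorithm (that is ASIC-specific and I don't know it); `zlib.crc32` is only a stand-in hash, and `pick_member`, `flow_key` and `simulate` are made-up names. It just illustrates that hashing on per-flow header fields spreads flows over 8 members, while a key that is the same for every frame (which is what the symptom looks like from the outside) pins everything to one member.

```python
import random
import zlib
from collections import Counter

MEMBERS = 8  # port-channel 71 has 8 member links


def pick_member(key: bytes, members: int = MEMBERS) -> int:
    """Map a hash key to a member index. crc32 is a stand-in, not the ASIC hash."""
    return zlib.crc32(key) % members


def flow_key(src_ip: str, dst_ip: str, src_port: int, dst_port: int) -> bytes:
    """Build a key from the src-dst IP and L4 port fields of a flow."""
    return f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()


def simulate(flows, use_headers: bool) -> Counter:
    """Count flows per member, hashing either real header fields or a constant."""
    counts = Counter()
    for flow in flows:
        # Hypothetical failure mode: the hash never sees the inner headers,
        # so every frame ends up with the same key.
        key = flow_key(*flow) if use_headers else b"same-key-for-every-frame"
        counts[pick_member(key)] += 1
    return counts


# Deterministic synthetic flows, purely for illustration.
_rng = random.Random(0)
FLOWS = [
    (
        f"10.0.{_rng.randrange(256)}.{_rng.randrange(256)}",
        f"10.1.{_rng.randrange(256)}.{_rng.randrange(256)}",
        _rng.randrange(1024, 65536),
        443,
    )
    for _ in range(2000)
]

if __name__ == "__main__":
    print("hash on headers :", sorted(simulate(FLOWS, True).items()))
    print("constant key    :", sorted(simulate(FLOWS, False).items()))
```

If something like the second case is what happens to the decapsulated traffic, changing `rotate` or the seed would only move the traffic to a different single member, not spread it.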

Any help would be highly appreciated...

Thank you!


10-17-2021 10:38 AM

Hi again,

I have tried quite a few suggestions found on the internet without any result; the balance is still just as wrong.

I tried setting a custom universal-id and a different rotate value on each device in our network, but that didn't resolve it.

It looks weird to me, because I was setting different rotates for 'ip load-sharing', for example:

ip load-sharing address source-destination port source-destination rotate 7 universal-id 896058003

but it seems this also re-calculates the hashing for egress towards port-channels, even though concatenation is disabled.

Let's take a look:

3164Q# show interface Eth1/27/1, Eth1/27/2, Eth1/37/1, Eth1/37/2, Eth1/47/1, Eth1/47/2, Eth1/57/1, Eth1/57/2 | i "30 seconds output rate"

30 seconds output rate 1016407200 bits/sec, 287989 packets/sec

30 seconds output rate 4473775144 bits/sec, 571420 packets/sec

30 seconds output rate 1311316888 bits/sec, 553373 packets/sec

30 seconds output rate 509183944 bits/sec, 73487 packets/sec

30 seconds output rate 816816800 bits/sec, 103288 packets/sec

30 seconds output rate 929551904 bits/sec, 140435 packets/sec

30 seconds output rate 638808376 bits/sec, 112065 packets/sec

30 seconds output rate 563301872 bits/sec, 96828 packets/sec

3164Q# show ip load-sharing

IPv4/IPv6 ECMP load sharing:

Universal-id (Random Seed): 896058003

Load-share mode : address source-destination port source-destination

GRE-Outer hash is disabled

Concatenation is disabled

Rotate: 7

3164Q# show port-channel load-balance

System config:

Non-IP: src-dst mac

IP: src-dst ip-l4port rotate 7

Port Channel Load-Balancing Configuration for all modules:

Module 1:

Non-IP: src-dst mac

IP: src-dst ip-l4port rotate 7
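To put numbers on the imbalance, here is a short Python sketch that turns the eight `30 seconds output rate` values from this post into a share per member. The values are listed in the order the CLI printed them; I am deliberately not mapping them to interface names, and `member_shares` is just a name I picked.

```python
# bits/sec values copied from the "30 seconds output rate" lines in this post,
# in the order they were printed.
OUTPUT_RATES_BPS = [
    1016407200,
    4473775144,
    1311316888,
    509183944,
    816816800,
    929551904,
    638808376,
    563301872,
]


def member_shares(rates):
    """Return each member's share of the total output rate, in percent."""
    total = sum(rates)
    return [100.0 * rate / total for rate in rates]


if __name__ == "__main__":
    total = sum(OUTPUT_RATES_BPS)
    print(f"total: {total / 1e9:.2f} Gbit/s")
    for position, share in enumerate(member_shares(OUTPUT_RATES_BPS), start=1):
        print(f"member {position}: {share:5.1f}%")
    # With 8 members, a perfectly even split would be 12.5% each.
```

One member carries well over 40% of the roughly 10.26 Gbit/s total, while several members carry around 5% to 6% each.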

Any ideas?

11-08-2021 03:36 AM

Hi @ss1

Interesting behavior.

My first thought was to suggest a different rotate value, but then I noticed your earlier comment saying that modifying it made no difference. I would have expected that change to give positive results.

Have you tried enabling the "symmetric" option as well?

Cheers,

Sergiu

11-08-2021 03:50 AM

Dear Sergiu,

Yes, that's right. Pretty interesting indeed.

I think I tested symmetric hashing as well, but I'm not 100% sure (only about 90%). I tried quite a lot of combinations of rotates etc. and even enabled rtag7 as the hashing algorithm, but that didn't help. Maybe I could run a monitor session on the port and tcpdump it on my Linux server to see what the port is actually egressing, what do you think? Is there anything useful we might see if I inspect just one of the ports that way? For example, a wrong broadcast source MAC address on the decapsulated traffic, or something like that? I don't know.

Thank you.

01-23-2022 02:29 AM

Good day to everybody.

As this issue still persists, I have connected a monitoring server to run tcpdump and check the traffic before and after it reaches the final host.

So the setup is as follows.

An end host with the following switch port config:

interface port-channel22

switchport

switchport mode trunk

switchport trunk allowed vlan 126,173-174,178,181,184,186-187,196,210,212,214,219,221,226-227,450,1000,1031,1362,1387

mtu 9216

vpc 22

Another host connected to a monitor session like this:

monitor session 1

source interface port-channel22 tx

destination interface Ethernet1/31/2

no shut

Four of the switched VLANs arrive on the 3164 through other switched ports (hence no VXLAN bridging for them). All the others arrive VXLAN-bridged. Tcpdump is running on both hosts.

The host connected to port-channel22 captures the VLAN tags on all VLANs as intended, and the traffic works without any trouble.

The host connected to the monitor session does capture the VLAN tags for all VLANs that arrived on the 3164 as switched VLANs. However, there are no tags on the VXLAN-bridged traffic. In other words, the traffic is properly sent to the end host as trunk traffic with all tags, but the same traffic is sent untagged, as if on an access port, to the monitoring server that is supposed to capture it on Tx.
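If anyone wants to repeat the tagged-versus-untagged check on their own capture, here is a minimal Python sketch using only the standard library. It assumes a classic little-endian pcap file (not pcapng) with Ethernet frames, and it only looks at the outer 802.1Q tag (TPID 0x8100). The function names are mine, not from any tool.

```python
import struct
from collections import Counter
from typing import Iterator, Optional

PCAP_MAGIC = 0xA1B2C3D4  # classic pcap, microsecond timestamps
TPID_8021Q = 0x8100      # EtherType value that marks an 802.1Q VLAN tag


def iter_frames(pcap: bytes) -> Iterator[bytes]:
    """Yield the raw frames stored in a classic little-endian pcap byte string."""
    if len(pcap) < 24 or struct.unpack_from("<I", pcap, 0)[0] != PCAP_MAGIC:
        raise ValueError("this sketch only handles little-endian classic pcap")
    offset = 24  # skip the 24-byte global header
    while offset + 16 <= len(pcap):
        # Per-record header: ts_sec, ts_usec, captured length, original length.
        _sec, _usec, incl_len, _orig_len = struct.unpack_from("<IIII", pcap, offset)
        offset += 16
        yield pcap[offset:offset + incl_len]
        offset += incl_len


def vlan_id(frame: bytes) -> Optional[int]:
    """Return the 802.1Q VLAN ID of an Ethernet frame, or None if untagged."""
    if len(frame) < 16:
        return None
    # Bytes 12-13 hold the EtherType/TPID, bytes 14-15 the tag control info.
    tpid, tci = struct.unpack_from("!HH", frame, 12)
    if tpid != TPID_8021Q:
        return None
    return tci & 0x0FFF  # the low 12 bits are the VLAN ID


def count_vlans(pcap: bytes) -> Counter:
    """Count frames per VLAN ID; untagged frames are counted under None."""
    return Counter(vlan_id(frame) for frame in iter_frames(pcap))
```

For a file written with `tcpdump -w capture.pcap`, something like `count_vlans(open("capture.pcap", "rb").read())` should give the frame count per VLAN, with the untagged frames under the `None` key. The file name here is only an example.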

I think it is related to the issue discussed here. Perhaps the traffic coming over the nve interface is treated as BUM for some reason and hence not balanced well. I can't be sure, but it might serve as a good starting point for investigation.

The weirdest thing is yet to come, though.

I tried resetting the nve1 interface of the encapsulating switch, and then all traffic arrived over the monitor session tagged, just the way it's supposed to. However, that only lasted 2 or 3 seconds, and then all tags were removed again.

I'm looking forward to trying out some ideas regarding this.
