Intermittent multicast forwarding from the PIM LHR NX-OS switch to the IGMP joined receiver host

11-15-2018 05:33 AM

Hello, we are experiencing intermittent interruptions in the reception of multicast traffic. A multicast sender sends one packet per second to 239.1.2.3, but after a variable interval on the order of 2-5 minutes, the multicast receiver stops receiving the traffic for 4-5 minutes and then begins receiving it again.

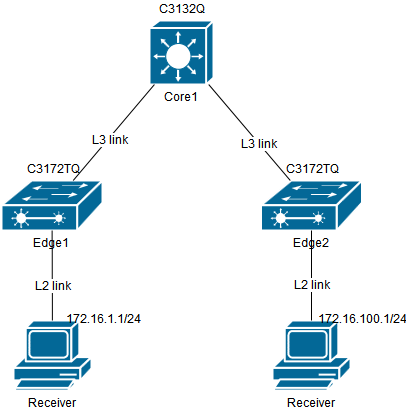

We have this topology:

The sender and receiver are connected to L2 ports in their own VLAN.

PIM seems to work properly: when the receiver stops receiving the packets, I can still see them arriving at Edge1 with ethanalyzer. However, I can make the multicast traffic resume by restarting PIM, sometimes on Core1 and other times on Edge2, whereas restarting PIM on Edge1 has no effect on multicast delivery.

The multicast FIB on Edge1 also looks good, since I see the port that connects to Receiver in the OIF list for both the (*, 239.1.2.3) and (172.16.100.1/32, 239.1.2.3/32) entries.

IGMP also looks good to me: I can see the group address in the VLAN that connects to the Receiver, and its port in the port list. Even on the Receiver I can see the IGMPv3 queries from Edge1 and the corresponding report responses while it is not receiving the multicast traffic.

The IGMP debug output shows some messages two seconds after the traffic has resumed:

debug ip igmp internal

debug ip igmp mtrace

2018 Nov 15 13:05:55.957072 igmp: Received Message from MRIB minor 8

2018 Nov 15 13:05:55.957097 igmp: Received Notification from MRIB for 1 routes [xid: 0xeeee0630]

2018 Nov 15 13:05:55.957149 igmp: Processing clear route for igmp mpib, for VRF default (172.16.100.1/32, 239.1.2.3/32), inform_mrib due to MRIB delete-route request

2018 Nov 15 13:05:55.957176 igmp: Processing clear route for static mpib, for VRF default (172.16.100.1/32, 239.1.2.3/32), inform_mrib due to MRIB delete-route request

2018 Nov 15 13:05:56.376430 igmp: Received Message from MRIB minor 8

2018 Nov 15 13:05:56.376452 igmp: Received Notification from MRIB for 1 routes [xid: 0xeeee0633]

2018 Nov 15 13:05:56.377385 igmp: Received Message from MRIB minor 8

2018 Nov 15 13:05:56.377407 igmp: Received Notification from MRIB for 1 routes [xid: 0xeeee0636]

With debug ip pim internal I also see some related messages two seconds after the multicast traffic has resumed:

Nov 15 13:25:17.093539 pim: [8605] (default-base) pim_process_periodic_for_context: xid: 0xffff0004

2018 Nov 15 13:25:17.093768 pim: [8605] (default-base) Rcvd route del ack xid ffff0004

2018 Nov 15 13:25:17.093857 igmp: Received Message from MRIB minor 8

2018 Nov 15 13:25:17.093882 igmp: Received Notification from MRIB for 1 routes [xid: 0xeeee063b]

2018 Nov 15 13:25:17.093920 igmp: Processing clear route for igmp mpib, for VRF default (172.16.100.1/32, 239.1.2.3/32), inform_mrib due to MRIB delete-route request

2018 Nov 15 13:25:17.093925 pim: Received a notify message from MRIB xid: 0xeeee063d for 1 mroutes

2018 Nov 15 13:25:17.093944 igmp: Processing clear route for static mpib, for VRF default (172.16.100.1/32, 239.1.2.3/32), inform_mrib due to MRIB delete-route request

2018 Nov 15 13:25:17.093957 pim: [8605] (default-base) MRIB Prune notify for (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 15 13:25:17.094027 pim: [8605] (default-base) For RPF Source 172.16.100.1 RPF neighbor 10.0.1.1 and RPF interface Ethernet1/50 Route (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 15 13:25:17.094069 pim: [8605] (default-base) Add to PT (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 15 13:25:17.094095 pim: [8605] (default-base) Copied the flags from MRIB for route (172.16.100.1/32, 239.1.2.3/32), (before/after): att F/F, sta F/F, z-oifs T/T, ext F/F, otv_de_rt F/F, otv_de_md F/F, vxlan_de F/F, vxlan_en F/F, dat

2018 Nov 15 13:25:17.094390 pim: [8605] (default-base) MRIB delete notify for (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 15 13:25:17.094428 pim: [8605] (default-base) pim_process_mrib_delete_notify: xid: 0xffff0005

2018 Nov 15 13:25:17.094476 pim: [8605] (default-base) MRIB zero-oif notify for (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 15 13:25:17.094502 pim: [8605] (default-base) Sending ack: xid: 0xeeee063d

2018 Nov 15 13:25:17.094567 pim: [8605] (default-base) Rcvd route del ack xid ffff0005

2018 Nov 15 13:25:17.094632 igmp: Received Message from MRIB minor 8

2018 Nov 15 13:25:17.094649 igmp: Received Notification from MRIB for 1 routes [xid: 0xeeee063e]

2018 Nov 15 13:25:17.094706 pim: Received a notify message from MRIB xid: 0xeeee0640 for 1 mroutes

2018 Nov 15 13:25:17.094709 igmp: Processing clear route for igmp mpib, for VRF default (172.16.100.1/32, 239.1.2.3/32), inform_mrib due to MRIB delete-route request

2018 Nov 15 13:25:17.094730 igmp: Processing clear route for static mpib, for VRF default (172.16.100.1/32, 239.1.2.3/32), inform_mrib due to MRIB delete-route request

2018 Nov 15 13:25:17.094735 pim: [8605] (default-base) MRIB delete notify for (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 15 13:25:17.094755 pim: [8605] (default-base) Sending ack: xid: 0xeeee0640

2018 Nov 15 13:25:17.094839 mrib: [12408] (default-base) mrib_setup_mfdm_route: vrf default Insert delete-op (172.16.100.1/32, 239.1.2.3/32) into MFDM buffer

2018 Nov 15 13:25:17.094869 mrib: [12408] (default-base) RPF-iod: 62,iif:62(v) rpf_s:ac210407(a) oif-list: 00000000 (0), number of GLT intf 0, Bidir-RP Ordinal: 0, mfdm-flags: dpgbrufOvnl3r3

2018 Nov 15 13:25:17.094911 mrib: default: Moving MFDM txlist member marker to version 2417, routes skipped 0

2018 Nov 15 13:25:17.102917 mrib: Received update-ack from MFDM route-count: 1, xid: 0xaca6

2018 Nov 15 13:25:17.103016 mrib: [12408] (default-base) default: Received update-ack from MFDM, route-buffer 0xe9582684, route-count 1, xid 0x0, Used buffer queue count:0, Free buffer queue count:10

2018 Nov 15 13:25:17.513479 mrib: mrib_update_oifs: Updated the OIF Vlan33 to route (172.16.100.1, 239.1.2.3), vpc-svi FALSEwas_vpc_svi FALSE, is_vpc_svi FALSE, remove ? TRUE

2018 Nov 15 13:25:17.513657 igmp: Received Message from MRIB minor 8

2018 Nov 15 13:25:17.513679 igmp: Received Notification from MRIB for 1 routes [xid: 0xeeee0641]

2018 Nov 15 13:25:17.513710 pim: Received a notify message from MRIB xid: 0xeeee0643 for 1 mroutes

2018 Nov 15 13:25:17.513709 mrib: [12408] (default-base) ^IOIF interface: Vlan33

2018 Nov 15 13:25:17.513748 pim: [8605] (default-base) MRIB Join notify for (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 15 13:25:17.513760 mrib: [12408] (default-base) mrib_setup_mfdm_route: vrf default Insert add-op (172.16.100.1/32, 239.1.2.3/32) into MFDM buffer

2018 Nov 15 13:25:17.513779 mrib: [12408] (default-base) MRIB-MFDM-IF:Non-vpc mode. Invoked mfdm_mrib_add_reg_oif for non-oiflist oif Vlan33

2018 Nov 15 13:25:17.513810 mrib: [12408] (default-base) RPF-iod: 62,iif:62(v) rpf_s:ac210407(a) oif-list: 00000010 (1), number of GLT intf 0, Bidir-RP Ordinal: 0, mfdm-flags: dpgbrufovnl3r3

2018 Nov 15 13:25:17.513814 pim: [8605] (default-base) For RPF Source 172.16.100.1 RPF neighbor 10.0.1.1 and RPF interface Ethernet1/50 Route (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 15 13:25:17.513829 mrib: [12408] (default-base) ^IOIF interface: Vlan33

2018 Nov 15 13:25:17.513847 mrib: [12408] (default-base) ^IOIF interface: Vlan33

2018 Nov 15 13:25:17.513859 pim: [8605] (default-base) Add to PT (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 15 13:25:17.513880 mrib: default: Moving MFDM txlist member marker to version 2422, routes skipped 0

2018 Nov 15 13:25:17.513893 pim: [8605] (default-base) Copied the flags from MRIB for route (172.16.100.1/32, 239.1.2.3/32), (before/after): att F/F, sta F/F, z-oifs T/F, ext F/F, otv_de_rt F/F, otv_de_md F/F, vxlan_de F/F, vxlan_en F/F, dat

2018 Nov 15 13:25:17.513928 pim: [8605] (default-base) Add (172.16.100.1/32, 239.1.2.3/32) to MRIB add-buffer, add_count: 1

2018 Nov 15 13:25:17.514011 pim: [8605] (default-base) pim_process_mrib_join_notify: xid: 0xffff0006

2018 Nov 15 13:25:17.514309 pim: [8605] (default-base) pim_process_mrib_rpf_notify: MRIB RPF notify for (172.16.100.1/32, 239.1.2.3/32), old RPF info: 10.0.1.1 (Ethernet1/50), new RPF info: 10.0.1.1 (Ethernet1/50). LISP source RLOC address:

2018 Nov 15 13:25:17.514342 pim: [8605] (default-base) Sending ack: xid: 0xeeee0643

2018 Nov 15 13:25:17.514582 igmp: Received Message from MRIB minor 8

2018 Nov 15 13:25:17.514600 igmp: Received Notification from MRIB for 1 routes [xid: 0xeeee0644]

2018 Nov 15 13:25:17.514999 pim: Received a notify message from MRIB xid: 0xeeee0646 for 1 mroutes

2018 Nov 15 13:25:17.515026 pim: [8605] (default-base) MRIB Join notify for (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 15 13:25:17.515421 pim: [8605] (default-base) Sending ack: xid: 0xeeee0646

2018 Nov 15 13:25:17.515543 mrib: Received update-ack from MFDM route-count: 1, xid: 0xaca7

2018 Nov 15 13:25:17.515646 mrib: [12408] (default-base) default: Received update-ack from MFDM, route-buffer 0xe959b6c4, route-count 1, xid 0x0, Used buffer queue count:0, Free buffer queue count:10
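In the debug output above, the mrib process logs a delete-op for the (S,G) route followed by an add-op for the same route a moment later. The following Python sketch pulls those two kinds of lines out of a saved debug log and measures the time between them. It is only an illustration: the function names `mfdm_ops` and `reprogram_gaps` are hypothetical, the regular expression assumes the exact line layout shown in this post, and reading the delete/add pair as one "reprogramming" of the route is an interpretation, not something the log states.

```python
import re
from datetime import datetime

# Assumed line layout (taken from the debug output in this post):
# "2018 Nov 15 13:25:17.094839 mrib: ... Insert delete-op (S, G) into MFDM buffer"
MFDM_OP = re.compile(
    r"(?P<ts>\d{4} \w{3} +\d{1,2} \d{2}:\d{2}:\d{2}\.\d{6}) mrib: .*?"
    r"Insert (?P<op>add|delete)-op \((?P<src>[^,]+), (?P<grp>[^)]+)\) into MFDM buffer"
)


def mfdm_ops(lines):
    """Yield (timestamp, op, source, group) for each MFDM add-op/delete-op line."""
    for line in lines:
        m = MFDM_OP.search(line)
        if m:
            ts = datetime.strptime(m["ts"], "%Y %b %d %H:%M:%S.%f")
            yield ts, m["op"], m["src"], m["grp"]


def reprogram_gaps(lines):
    """Return ((source, group), seconds) for each delete-op followed by an add-op."""
    pending, gaps = {}, []
    for ts, op, src, grp in mfdm_ops(lines):
        key = (src, grp)
        if op == "delete":
            pending[key] = ts  # remember when the route was removed
        elif key in pending:
            gaps.append((key, (ts - pending.pop(key)).total_seconds()))
    return gaps


sample = [
    "2018 Nov 15 13:25:17.094839 mrib: [12408] (default-base) mrib_setup_mfdm_route: "
    "vrf default Insert delete-op (172.16.100.1/32, 239.1.2.3/32) into MFDM buffer",
    "2018 Nov 15 13:25:17.513760 mrib: [12408] (default-base) mrib_setup_mfdm_route: "
    "vrf default Insert add-op (172.16.100.1/32, 239.1.2.3/32) into MFDM buffer",
]
print(reprogram_gaps(sample))  # about 0.42 s between delete-op and add-op here
```

Run against a log that spans several outages, the same function would show whether each resumption of traffic coincides with such a delete/add pair.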

I have exhausted all the diagnostic resources I could find without seeing any trace that leads me to the root cause of this problem. Any ideas would be welcome.

11-15-2018 06:26 AM

Hello

What PIM mode are you using?

sh ip pim interface xxx

sh ip pim neighbor

sh ip igmp groups

debug ip pim 239.1.2.3

Please rate and mark as an accepted solution if you have found any of the information provided useful.

This then could assist others on these forums to find a valuable answer and broadens the community’s global network.

Kind Regards

Paul

11-15-2018 11:04 PM - edited 11-15-2018 11:34 PM

Hello Paul, thanks for asking. We are using PIM sparse mode with Anycast RP on two switches, but for testing we have shut down the other RP switch to remove any distracting variables, so Core1 is currently the only RP.

sh ip pim group-range 239.1.2.3:

PIM Group-Range Configuration for VRF "default"

Group-range Action Mode RP-address Shared-tree-only range

224.0.0.0/4 - ASM 10.0.0.224 -

10.0.0.224 is the IP of the Loopback10 interface in Core1, which is the Anycast RP.

Also we are using MTU 9216.

Here is the output of the commands you requested:

sh ip pim interface:

Vlan33, Interface status: protocol-up/link-up/admin-up

IP address: 172.16.1.254, IP subnet: 172.16.1.0/24

PIM DR: 172.16.1.254, DR's priority: 1

PIM neighbor count: 0

PIM hello interval: 30 secs, next hello sent in: 00:00:04

PIM neighbor holdtime: 105 secs

PIM configured DR priority: 1

PIM configured DR delay: 0 secs

PIM border interface: yes

PIM GenID sent in Hellos: 0x1a93c1b4

PIM Hello MD5-AH Authentication: disabled

PIM Neighbor policy: none configured

PIM Join-Prune inbound policy: none configured

PIM Join-Prune outbound policy: none configured

PIM Join-Prune interval: 1 minutes

PIM Join-Prune next sending: 1 minutes

PIM BFD enabled: no

PIM passive interface: no

PIM VPC SVI: no

PIM Auto Enabled: no

PIM Interface Statistics, last reset: never

General (sent/received):

Hellos: 2211/0 (early: 1), JPs: 0/0, Asserts: 0/0

Grafts: 0/0, Graft-Acks: 0/0

DF-Offers: 0/0, DF-Winners: 0/0, DF-Backoffs: 0/0, DF-Passes: 0/0

Errors:

Checksum errors: 0, Invalid packet types/DF subtypes: 0/0

Authentication failed: 0

Packet length errors: 0, Bad version packets: 0, Packets from self: 0

Packets from non-neighbors: 0

Packets received on passiveinterface: 0

JPs received on RPF-interface: 0

(*,G) Joins received with no/wrong RP: 0/0

(*,G)/(S,G) JPs received for SSM/Bidir groups: 0/0

JPs filtered by inbound policy: 0

JPs filtered by outbound policy: 0

loopback0, Interface status: protocol-up/link-up/admin-up

IP address: 10.0.11.1, IP subnet: 10.0.11.1/32

PIM DR: 10.0.11.1, DR's priority: 1

PIM neighbor count: 0

PIM hello interval: 30 secs, next hello sent in: 00:00:06

PIM neighbor holdtime: 105 secs

PIM configured DR priority: 1

PIM configured DR delay: 0 secs

PIM border interface: no

PIM GenID sent in Hellos: 0x19903113

PIM Hello MD5-AH Authentication: disabled

PIM Neighbor policy: none configured

PIM Join-Prune inbound policy: none configured

PIM Join-Prune outbound policy: none configured

PIM Join-Prune interval: 1 minutes

PIM Join-Prune next sending: 1 minutes

PIM BFD enabled: no

PIM passive interface: no

PIM VPC SVI: no

PIM Auto Enabled: no

PIM Interface Statistics, last reset: never

General (sent/received):

Hellos: 2212/0 (early: 1), JPs: 0/0, Asserts: 0/0

Grafts: 0/0, Graft-Acks: 0/0

DF-Offers: 0/0, DF-Winners: 0/0, DF-Backoffs: 0/0, DF-Passes: 0/0

Errors:

Checksum errors: 0, Invalid packet types/DF subtypes: 0/0

Authentication failed: 0

Packet length errors: 0, Bad version packets: 0, Packets from self: 0

Packets from non-neighbors: 0

Packets received on passiveinterface: 0

JPs received on RPF-interface: 0

(*,G) Joins received with no/wrong RP: 0/0

(*,G)/(S,G) JPs received for SSM/Bidir groups: 0/0

JPs filtered by inbound policy: 0

JPs filtered by outbound policy: 0

Ethernet1/49, Interface status: protocol-up/link-up/admin-up

IP unnumbered interface (loopback0)

PIM DR: 10.0.11.1, DR's priority: 1

PIM neighbor count: 1

PIM hello interval: 30 secs, next hello sent in: 00:00:11

PIM neighbor holdtime: 105 secs

PIM configured DR priority: 1

PIM configured DR delay: 0 secs

PIM border interface: no

PIM GenID sent in Hellos: 0x19b3ac73

PIM Hello MD5-AH Authentication: disabled

PIM Neighbor policy: none configured

PIM Join-Prune inbound policy: none configured

PIM Join-Prune outbound policy: none configured

PIM Join-Prune interval: 1 minutes

PIM Join-Prune next sending: 1 minutes

PIM BFD enabled: no

PIM passive interface: no

PIM VPC SVI: no

PIM Auto Enabled: no

PIM Interface Statistics, last reset: never

General (sent/received):

Hellos: 2211/2218 (early: 1), JPs: 49/0, Asserts: 0/0

Grafts: 0/0, Graft-Acks: 0/0

DF-Offers: 0/0, DF-Winners: 0/0, DF-Backoffs: 0/0, DF-Passes: 0/0

Errors:

Checksum errors: 0, Invalid packet types/DF subtypes: 0/0

Authentication failed: 0

Packet length errors: 0, Bad version packets: 0, Packets from self: 0

Packets from non-neighbors: 0

Packets received on passiveinterface: 0

JPs received on RPF-interface: 0

(*,G) Joins received with no/wrong RP: 0/0

(*,G)/(S,G) JPs received for SSM/Bidir groups: 0/0

JPs filtered by inbound policy: 0

JPs filtered by outbound policy: 0

Ethernet1/50, Interface status: protocol-up/link-up/admin-up

IP unnumbered interface (loopback0)

PIM DR: 10.0.11.1, DR's priority: 1

PIM neighbor count: 1

PIM hello interval: 30 secs, next hello sent in: 00:00:23

PIM neighbor holdtime: 105 secs

PIM configured DR priority: 1

PIM configured DR delay: 0 secs

PIM border interface: no

PIM GenID sent in Hellos: 0x19c5c7a3

PIM Hello MD5-AH Authentication: disabled

PIM Neighbor policy: none configured

PIM Join-Prune inbound policy: none configured

PIM Join-Prune outbound policy: none configured

PIM Join-Prune interval: 1 minutes

PIM Join-Prune next sending: 1 minutes

PIM BFD enabled: no

PIM passive interface: no

PIM VPC SVI: no

PIM Auto Enabled: no

PIM Interface Statistics, last reset: never

General (sent/received):

Hellos: 2215/2215 (early: 1), JPs: 967/1001, Asserts: 0/0

Grafts: 0/0, Graft-Acks: 0/0

DF-Offers: 0/0, DF-Winners: 0/0, DF-Backoffs: 0/0, DF-Passes: 0/0

Errors:

Checksum errors: 0, Invalid packet types/DF subtypes: 0/0

Authentication failed: 0

Packet length errors: 0, Bad version packets: 0, Packets from self: 0

Packets from non-neighbors: 0

Packets received on passiveinterface: 0

JPs received on RPF-interface: 0

(*,G) Joins received with no/wrong RP: 0/0

(*,G)/(S,G) JPs received for SSM/Bidir groups: 0/0

JPs filtered by inbound policy: 0

JPs filtered by outbound policy: 0

sh ip pim neighbour:

PIM Neighbor Status for VRF "default"

Neighbor Interface Uptime Expires DR Bidir- BFD

Priority Capable State

10.0.1.1 Ethernet1/49 17:34:45 00:01:31 1 no n/a

10.0.1.1 Ethernet1/50 17:34:45 00:01:35 1 no n/a

10.0.1.1 is the IP address of the Loopback0 interface in Core1.

sh ip igmp groups:

IGMP Connected Group Membership for VRF "default" - 2 total entries

Type: S - Static, D - Dynamic, L - Local, T - SSM Translated

Group Address Type Interface Uptime Expires Last Reporter

239.1.2.3 D Vlan33 00:00:02 00:04:17 172.16.1.1

debug ip pim does not accept a group address on our switches:

# debug ip pim ?

assert PIM Assert packet events

data-register PIM data register packet events

ha PIM system HA events

hello PIM Hello packet events

internal PIM system internal events

join-prune PIM Join-Prune packet events

null-register PIM Null Register packet events

packet PIM header debugs

policy PIM policy information

rp PIM RP related events

vpc VPC related events

vrf PIM VRF creation/deletion events

debug ip pim join-prune shows these records both when multicast is being received at the Receiver host and when it isn't:

When multicast is being received:

2018 Nov 16 06:57:53.026006 pim: Periodic timer expired, aging routes and building JP messages

2018 Nov 16 06:57:53.026037 pim: [8605] (default-base) Walk routes for VRF default, VRF-id: 1, table-id: 0x00000001

2018 Nov 16 06:57:53.026131 pim: [8605] (default-base) (*, 239.1.2.3/32) entry timer updated due to non-empty oif-list or ssm group or attached/static route

2018 Nov 16 06:57:53.026151 pim: [8605] (default-base) Stats Previous/Current 0/0

2018 Nov 16 06:57:53.026187 pim: [8605] (default-base) wc_bit = TRUE, rp_bit = TRUE

2018 Nov 16 06:57:53.026203 pim: [8605] (default-base) Put (*, 239.1.2.3/32), WRS in join-list for nbr 10.0.1.1

2018 Nov 16 06:57:53.026245 pim: [8605] (default-base) Stats Previous/Current 16/20

2018 Nov 16 06:57:53.026263 pim: [8605] (default-base) (172.16.100.1/32, 239.1.2.3/32) expiration timer updated due to data activity

2018 Nov 16 06:57:53.026278 pim: [8605] (default-base) Stats Previous/Current 16/20

2018 Nov 16 06:57:53.026291 pim: [8605] (default-base) For Group 239.1.2.3

2018 Nov 16 06:57:53.026313 pim: [8605] (default-base) wc_bit = FALSE, rp_bit = FALSE

2018 Nov 16 06:57:53.026327 pim: [8605] (default-base) Put (172.16.100.1/32, 239.1.2.3/32), S in join-list for nbr 10.0.1.1

2018 Nov 16 06:57:53.026369 pim: [8605] (default-base) Stats Previous/Current 10/10

2018 Nov 16 06:57:53.026385 pim: [8605] (default-base) For Group 239.255.0.1 source count is 3

2018 Nov 16 06:57:53.026408 pim: [8605] (default-base) wc_bit = TRUE, rp_bit = TRUE

2018 Nov 16 06:57:53.026421 pim: [8605] (default-base) Put (*, 239.255.0.1/32), WRS in join-list for nbr 10.0.1.1

When multicast is not being received:

2018 Nov 16 06:58:54.112672 pim: Periodic timer expired, aging routes and building JP messages

2018 Nov 16 06:58:54.112705 pim: [8605] (default-base) Walk routes for VRF default, VRF-id: 1, table-id: 0x00000001

2018 Nov 16 06:58:54.112819 pim: [8605] (default-base) Stats Previous/Current 0/0

2018 Nov 16 06:58:54.112866 pim: [8605] (default-base) wc_bit = TRUE, rp_bit = TRUE

2018 Nov 16 06:58:54.112888 pim: [8605] (default-base) Put (*, 239.1.2.3/32), WRS in join-list for nbr 10.0.1.1

2018 Nov 16 06:58:54.112944 pim: [8605] (default-base) Stats Previous/Current 20/24

2018 Nov 16 06:58:54.112969 pim: [8605] (default-base) (172.16.100.1/32, 239.1.2.3/32) expiration timer updated due to data activity

2018 Nov 16 06:58:54.112995 pim: [8605] (default-base) Stats Previous/Current 20/24

2018 Nov 16 06:58:54.113014 pim: [8605] (default-base) For Group 239.1.2.3

2018 Nov 16 06:58:54.113050 pim: [8605] (default-base) wc_bit = FALSE, rp_bit = FALSE

2018 Nov 16 06:58:54.113063 pim: [8605] (default-base) Put (172.16.100.1/32, 239.1.2.3/32), S in join-list for nbr 10.0.1.1

2018 Nov 16 06:58:54.113106 pim: [8605] (default-base) Stats Previous/Current 10/10

2018 Nov 16 06:58:54.113120 pim: [8605] (default-base) For Group 239.255.0.1 source count is 3

2018 Nov 16 06:58:54.113141 pim: [8605] (default-base) wc_bit = TRUE, rp_bit = TRUE

2018 Nov 16 06:58:54.113152 pim: [8605] (default-base) Put (*, 239.255.0.1/32), WRS in join-list for nbr 10.0.1.1

Three seconds after the multicast traffic has resumed I get this:

2018 Nov 16 07:31:36.906738 pim: [8605] (default-base) pim_process_periodic_for_context: xid: 0xffff0023

2018 Nov 16 07:31:36.906901 pim: [8605] (default-base) Rcvd route del ack xid ffff0023

2018 Nov 16 07:31:36.907083 pim: Received a notify message from MRIB xid: 0xeeee069e for 1 mroutes

2018 Nov 16 07:31:36.907106 pim: [8605] (default-base) MRIB Prune notify for (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 07:31:36.907167 pim: [8605] (default-base) For RPF Source 172.16.100.1 RPF neighbor 10.0.1.1 and RPF interface Ethernet1/50 Route (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 07:31:36.907195 pim: [8605] (default-base) Add to PT (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 07:31:36.907227 pim: [8605] (default-base) Copied the flags from MRIB for route (172.16.100.1/32, 239.1.2.3/32), (before/after): att F/F, sta F/F, z-oifs T/T, ext F/F, otv_de_rt F/F, otv_de_md F/F, vxlan_de F/F, vxlan_en F/F, dat

2018 Nov 16 07:31:36.907532 pim: [8605] (default-base) MRIB delete notify for (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 07:31:36.907569 pim: [8605] (default-base) pim_process_mrib_delete_notify: xid: 0xffff0024

2018 Nov 16 07:31:36.907603 pim: [8605] (default-base) MRIB zero-oif notify for (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 07:31:36.907624 pim: [8605] (default-base) Sending ack: xid: 0xeeee069e

2018 Nov 16 07:31:36.907752 pim: [8605] (default-base) Rcvd route del ack xid ffff0024

2018 Nov 16 07:31:37.436611 pim: Received a notify message from MRIB xid: 0xeeee06a1 for 1 mroutes

2018 Nov 16 07:31:37.436652 pim: [8605] (default-base) MRIB Join notify for (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 07:31:37.436709 pim: [8605] (default-base) For RPF Source 172.16.100.1 RPF neighbor 10.0.1.1 and RPF interface Ethernet1/50 Route (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 07:31:37.436739 pim: [8605] (default-base) Add to PT (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 07:31:37.437295 pim: [8605] (default-base) pim_process_mrib_rpf_notify: MRIB RPF notify for (172.16.100.1/32, 239.1.2.3/32), old RPF info: 10.0.1.1 (Ethernet1/50), new RPF info: 10.0.1.1 (Ethernet1/50). LISP source RLOC address:

2018 Nov 16 07:31:37.437356 pim: [8605] (default-base) Sending ack: xid: 0xeeee06a1

2018 Nov 16 07:31:37.437806 pim: Received a notify message from MRIB xid: 0xeeee06a4 for 1 mroutes

2018 Nov 16 07:31:37.437835 pim: [8605] (default-base) MRIB Join notify for (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 07:31:37.438164 pim: [8605] (default-base) Sending ack: xid: 0xeeee06a4

11-15-2018 11:44 PM

When multicast traffic is not being delivered to Receiver, I can see that the packets are still arriving at the LHR, Edge1:

ethanalyzer local interface inband capture-filter "host 239.1.2.3":

Capturing on inband

2018-11-16 07:39:32.494698 172.16.100.1 -> 239.1.2.3 UDP Source port: 37513 Destination port: 12345

2018-11-16 07:39:33.494850 172.16.100.1 -> 239.1.2.3 UDP Source port: 37513 Destination port: 12345

2018-11-16 07:39:34.494964 172.16.100.1 -> 239.1.2.3 UDP Source port: 37513 Destination port: 12345
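The capture above shows one packet per second reaching Edge1. To check a longer capture for interruptions, the timestamps can be compared pairwise. The sketch below is a hypothetical helper, not part of any Cisco tooling: `capture_times` and `find_gaps` are made-up names, the parser assumes the text layout printed above (date, time, source, `->`, destination), and the 2.5-second threshold is an arbitrary choice for a one-packet-per-second stream.

```python
from datetime import datetime


def capture_times(lines, group="239.1.2.3"):
    """Extract packet timestamps for one group from ethanalyzer-style text lines."""
    times = []
    for line in lines:
        fields = line.split()
        # Assumed layout: <date> <time> <src> -> <dst> ...
        if len(fields) >= 5 and fields[3] == "->" and fields[4] == group:
            stamp = f"{fields[0]} {fields[1]}"
            times.append(datetime.strptime(stamp, "%Y-%m-%d %H:%M:%S.%f"))
    return times


def find_gaps(times, threshold=2.5):
    """Return (previous, current, seconds) wherever packets are further apart than threshold."""
    gaps = []
    for prev, cur in zip(times, times[1:]):
        delta = (cur - prev).total_seconds()
        if delta > threshold:
            gaps.append((prev, cur, delta))
    return gaps


capture = [
    "Capturing on inband",
    "2018-11-16 07:39:32.494698 172.16.100.1 -> 239.1.2.3 UDP Source port: 37513 Destination port: 12345",
    "2018-11-16 07:39:33.494850 172.16.100.1 -> 239.1.2.3 UDP Source port: 37513 Destination port: 12345",
    "2018-11-16 07:39:34.494964 172.16.100.1 -> 239.1.2.3 UDP Source port: 37513 Destination port: 12345",
]
print(find_gaps(capture_times(capture)))  # no gaps in this three-packet excerpt
```

An empty result on Edge1 together with gaps in a capture taken at the Receiver would support the observation that the traffic reaches the switch but is not forwarded out of the receiver port.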

And the FIB looks good to me:

sh forwarding distribution ip multicast route group 239.1.2.3:

(*, 239.1.2.3/32), RPF Interface: Ethernet1/49, flags: G

Received Packets: 0 Bytes: 0

Number of Outgoing Interfaces: 1

Outgoing Interface List Index: 19

Vlan33

( Mem L2 Ports: Ethernet1/29 )

l2_oiflist_index: 9

(172.16.100.1/32, 239.1.2.3/32), RPF Interface: Ethernet1/50, flags:

Received Packets: 6 Bytes: 390

Number of Outgoing Interfaces: 1

Outgoing Interface List Index: 19

Vlan33

( Mem L2 Ports: Ethernet1/29 )

l2_oiflist_index: 9

sh ip fib distribution multicast outgoing-interface-list L3 19:

Outgoing Interface List Index: 19

Reference Count: 2

Platform Index: 0xb00013

Number of Outgoing Interfaces: 1

Vlan33

( Mem L2 Ports: Ethernet1/29 )

l2_oiflist_index: 9

sh ip fib distribution multicast outgoing-interface-list L2 9:

Outgoing Interface List Index: 9

[ipg (pim_en) OR macg (pim_dis)]

Reference Count: 2

Num L3 usages: 1

Platform Index: 0xa00009

Number of Outgoing Interfaces: 1

Ethernet1/29

Eth1/29 is the port where Receiver is connected.

11-16-2018 12:24 AM

Right now the only thing I see that correlates with this issue is that, when the Edge1 switch resumes delivering multicast to Receiver, the following PIM debug events are shown:

debug ip pim internal

2018 Nov 16 08:08:12.469929 pim: [8605] (default-base) pim_process_periodic_for_context: xid: 0xffff002f

2018 Nov 16 08:08:12.470099 pim: [8605] (default-base) Rcvd route del ack xid ffff002f

2018 Nov 16 08:08:12.470238 pim: Received a notify message from MRIB xid: 0xeeee06c2 for 1 mroutes

2018 Nov 16 08:08:12.470271 pim: [8605] (default-base) MRIB Prune notify for (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 08:08:12.470327 pim: [8605] (default-base) For RPF Source 172.16.100.1 RPF neighbor 10.0.1.1 and RPF interface Ethernet1/50 Route (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 08:08:12.470354 pim: [8605] (default-base) Add to PT (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 08:08:12.470376 pim: [8605] (default-base) Copied the flags from MRIB for route (172.16.100.1/32, 239.1.2.3/32), (before/after): att F/F, sta F/F, z-oifs T/T, ext F/F, otv_de_rt F/F, otv_de_md F/F, vxlan_de F/F, vxlan_en F/F, dat

2018 Nov 16 08:08:12.470668 pim: [8605] (default-base) MRIB delete notify for (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 08:08:12.470703 pim: [8605] (default-base) pim_process_mrib_delete_notify: xid: 0xffff0030

2018 Nov 16 08:08:12.470750 pim: [8605] (default-base) MRIB zero-oif notify for (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 08:08:12.470774 pim: [8605] (default-base) Sending ack: xid: 0xeeee06c2

2018 Nov 16 08:08:12.470894 pim: [8605] (default-base) Rcvd route del ack xid ffff0030

2018 Nov 16 08:08:12.700855 pim: Received a notify message from MRIB xid: 0xeeee06c5 for 1 mroutes

2018 Nov 16 08:08:12.700895 pim: [8605] (default-base) MRIB Join notify for (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 08:08:12.700958 pim: [8605] (default-base) For RPF Source 172.16.100.1 RPF neighbor 10.0.1.1 and RPF interface Ethernet1/50 Route (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 08:08:12.701000 pim: [8605] (default-base) Add to PT (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 08:08:12.701032 pim: [8605] (default-base) Copied the flags from MRIB for route (172.16.100.1/32, 239.1.2.3/32), (before/after): att F/F, sta F/F, z-oifs T/F, ext F/F, otv_de_rt F/F, otv_de_md F/F, vxlan_de F/F, vxlan_en F/F, dat

2018 Nov 16 08:08:12.701118 pim: [8605] (default-base) Add (172.16.100.1/32, 239.1.2.3/32) to MRIB add-buffer, add_count: 1

2018 Nov 16 08:08:12.701191 pim: [8605] (default-base) pim_process_mrib_join_notify: xid: 0xffff0031

2018 Nov 16 08:08:12.701511 pim: [8605] (default-base) pim_process_mrib_rpf_notify: MRIB RPF notify for (172.16.100.1/32, 239.1.2.3/32), old RPF info: 10.0.1.1 (Ethernet1/50), new RPF info: 10.0.1.1 (Ethernet1/50). LISP source RLOC address:

2018 Nov 16 08:08:12.701547 pim: [8605] (default-base) Sending ack: xid: 0xeeee06c5

2018 Nov 16 08:08:12.701951 pim: Received a notify message from MRIB xid: 0xeeee06c8 for 1 mroutes

2018 Nov 16 08:08:12.701977 pim: [8605] (default-base) MRIB Join notify for (172.16.100.1/32, 239.1.2.3/32)

2018 Nov 16 08:08:12.702309 pim: [8605] (default-base) Sending ack: xid: 0xeeee06c8

Reception of first packet after interruption:

08:08:09.157867 172.16.100.1.37513 12 bytes

11-16-2018 03:29 AM

Hello

I'm not sure I am following this correctly, so apologies.

Your topology shows a single core switch, two access switches and two receivers, and you say you're applying Anycast RP, so why not just use a static RP instead of Anycast?

Would you be able to post the configuration of the core and access switches, please?

Kind Regards

Paul

11-16-2018 03:49 AM

The production topology that we intend to deploy has two cores. We are now testing with only one to remove any unnecessary variables from the equation.

The current PIM and PIM interface configuration of Core1 is shown below; there is now no Anycast-RP:

ip pim rp-address 10.0.0.224 group-list 224.0.0.0/4

ip pim log-neighbor-changes

ip pim ssm range 232.0.0.0/8

interface loopback0

  ip address 10.0.1.1/32

  ip router ospf 1 area 0.0.0.0

  ip pim sparse-mode

interface loopback10

  ip address 10.0.0.224/32

  ip router ospf 1 area 0.0.0.0

  ip pim sparse-mode

interface Ethernet1/1

  description L3 link to Edge1

  no switchport

  link debounce time 0

  mtu 9216

  medium p2p

  no ip redirects

  ip unnumbered loopback0

  no ipv6 redirects

  ip router ospf 1 area 0.0.0.0

  ip pim sparse-mode

  ip igmp version 3

  no shutdown

interface Ethernet1/3

  description L3 link to Edge2

  no switchport

  link debounce time 0

  mtu 9216

  medium p2p

  no ip redirects

  ip unnumbered loopback0

  no ipv6 redirects

  ip router ospf 1 area 0.0.0.0

  ip pim sparse-mode

  ip igmp version 3

  no shutdown

11-16-2018 04:40 AM

I have found that RPF is at the root of the problem, but I don't yet know how to fix it. Each connection between an edge and a core has two L3 links: Eth1/49 and Eth1/50 on Edge1 connect to Eth1/1 and Eth1/2 on Core1.

sh ip mroute 239.1.2.3 shows this:

(*, 239.1.2.3/32), uptime: 01:22:35, ip pim igmp

Incoming interface: Ethernet1/49, RPF nbr: 10.0.1.1, uptime: 01:22:35

Outgoing interface list: (count: 1)

Vlan33, uptime: 00:24:26, igmp

(172.16.100.1/32, 239.1.2.3/32), uptime: 00:34:02, ip mrib pim

Incoming interface: Ethernet1/50, RPF nbr: 10.0.1.1, uptime: 00:34:02

Outgoing interface list: (count: 1)

Vlan33, uptime: 00:24:26, mrib

That is, Eth1/49 for (*, 239.1.2.3) and Eth1/50 for (172.16.100.1/32, 239.1.2.3/32). Apparently this is causing problems with RPF: with debug ip mfwd packet enabled, the Edge1 switch logs the following message every second, regardless of whether the multicast traffic is being delivered to Receiver or not:

2018 Nov 16 08:43:51.953 Edge1 mcastfwd: <{mcastfwd}>RPF check for route (172.16.100.1/32, 239.1.2.3/32): Ethernet1/50, failed

If one of the uplinks is disabled, then the mroute for 239.1.2.3 becomes this:

(*, 239.1.2.3/32), uptime: 01:42:05, ip pim igmp

Incoming interface: Ethernet1/49, RPF nbr: 10.0.1.1, uptime: 01:42:05

Outgoing interface list: (count: 1)

Vlan33, uptime: 00:43:56, igmp

(172.16.100.1/32, 239.1.2.3/32), uptime: 00:12:49, ip mrib pim

Incoming interface: Ethernet1/49, RPF nbr: 10.0.1.1, uptime: 00:12:49

Outgoing interface list: (count: 1)

Vlan33, uptime: 00:12:49, mrib

Now both mroutes use the same and only Eth1/49 port that connects with Core1 and the RPF check for (172.16.100.1/32, 239.1.2.3/32) stops failing.

So the problem is that RPF fails when using two ECMP links between Edge1 and Core1.

But, how can I fix it?
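The comparison made by hand above (which uplink each mroute entry uses as its incoming interface) can be scripted when many groups are involved. The following Python sketch parses text in the `sh ip mroute` layout shown in this post and lists groups whose (*,G) and (S,G) entries point at different incoming interfaces. The function names are hypothetical and the regular expressions assume exactly the output format pasted here. Note that differing incoming interfaces are not an error in PIM sparse mode in general; the check only surfaces the condition that coincided with the RPF failures in this setup.

```python
import re

# Assumed formats, copied from the `sh ip mroute` output in this thread.
ROUTE = re.compile(r"^\((?P<src>[^,]+), (?P<grp>[^)]+)\), uptime:")
IIF = re.compile(r"^Incoming interface: (?P<iif>[^,]+), RPF nbr:")


def incoming_interfaces(text):
    """Map (source, group) -> incoming interface from `sh ip mroute` text."""
    routes, current = {}, None
    for raw in text.splitlines():
        line = raw.strip()
        m = ROUTE.match(line)
        if m:
            current = (m["src"], m["grp"])
            continue
        m = IIF.match(line)
        if m and current:
            routes[current] = m["iif"]
            current = None
    return routes


def split_rpf_entries(routes):
    """List (group, shared-tree iif, source, source-tree iif) where the two differ."""
    shared = {grp: iif for (src, grp), iif in routes.items() if src == "*"}
    return [
        (grp, shared[grp], src, iif)
        for (src, grp), iif in routes.items()
        if src != "*" and grp in shared and shared[grp] != iif
    ]


mroute_text = """
(*, 239.1.2.3/32), uptime: 01:22:35, ip pim igmp
Incoming interface: Ethernet1/49, RPF nbr: 10.0.1.1, uptime: 01:22:35
Outgoing interface list: (count: 1)
Vlan33, uptime: 00:24:26, igmp
(172.16.100.1/32, 239.1.2.3/32), uptime: 00:34:02, ip mrib pim
Incoming interface: Ethernet1/50, RPF nbr: 10.0.1.1, uptime: 00:34:02
Outgoing interface list: (count: 1)
Vlan33, uptime: 00:24:26, mrib
"""
print(split_rpf_entries(incoming_interfaces(mroute_text)))
```

With the output captured after one uplink was disabled, both entries use Ethernet1/49 and the list comes back empty.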

11-16-2018 06:56 AM - edited 11-16-2018 07:09 AM

Hello

You could use a static mroute entry to resolve an RPF failure.

I see you have enabled SSM but have no SSM source applied. Also, you have defined an RP, which isn’t required.

Kind Regards

Paul
