
Shaping, Nested policies and wrong queue-limit size


11-29-2022 08:11 AM

Hi,

I recently came across an issue with the default calculation of the queue limit. I am seeing very high queue-limit values that do not match the theoretical formula in the Cisco documentation.

For context, the company is a public entity with a lot of sites and home-made configuration templates.

Interfaces are configured as follows:

```
!# Physical interface going to "WAN SWITCH"
interface GigabitEthernet0/0/1
 no ip address
 no ip redirects
 no ip unreachables
 no ip proxy-arp
 negotiation auto
end

!# Sub-interface going to ISP router
interface GigabitEthernet0/0/1.50
 bandwidth 8888
 encapsulation dot1Q 122
 ip address XXX.XXX.XXX.XXX XXX.XXX.XXX.XXX
 no ip redirects
 no ip unreachables
 no ip proxy-arp
 ip mtu 1366
 service-policy output POLICY-ROOT
end
```

We are using three levels of policy-map (nested in each other), configured as follows:

```
policy-map POLICY-ROOT
 class class-default
  shape average 8888000
  service-policy POLICY-CHILD-1

policy-map POLICY-CHILD-1
 class ISP-SPEED
  shape average 160000000
  service-policy POLICY-CHILD-2

policy-map POLICY-CHILD-2
 class REAL-TIME
  priority percent 10
 class SRV-RX
  bandwidth percent 10
  random-detect dscp-based
 class PREF-DATA
  bandwidth percent 5
  random-detect dscp-based
 class INTERACTIVE
  bandwidth percent 35
  random-detect dscp-based
 class STREAM
  bandwidth percent 15
  random-detect dscp-based
 class VISIO
  bandwidth percent 15
  random-detect dscp-based
 class BULK-DATA
  bandwidth percent 5
  random-detect dscp-based
 class class-default
  bandwidth percent 5
  random-detect dscp-based

class-map match-any REAL-TIME
 match dscp ef
class-map match-any SRV-RX
 match dscp cs6
class-map match-any PREF-DATA
 match dscp cs4
class-map match-any INTERACTIVE
 match dscp af22
class-map match-any STREAM
 match dscp af32
class-map match-any VISIO
 match dscp af42
class-map match-any BULK-DATA
 match dscp af12
```

- POLICY-ROOT's goal is to set a virtual limit for the WAN link.

- POLICY-CHILD-1's goal is to limit the WAN link bandwidth to the ISP link bandwidth.

- POLICY-CHILD-2's goal is to share the bandwidth among the different QoS classes.

The virtual WAN limit changes over time; when that happens, two lines are edited:

- the bandwidth in the sub-interface configuration

- the shape rate in the POLICY-ROOT policy-map

Therefore, the POLICY-ROOT shape rate can be lower than the POLICY-CHILD-1 shape rate. The Cisco documentation "Queue Limits and WRED" (IOS XE 17, ISR 4000) gives the following formula for the default queue limit:

queue-limit = (visible bandwidth / 8 bits) * 50 ms / MTU, with a minimum value of 64 packets.

where MTU is the Ethernet MTU shown by show interface (1500 in our case).

So in theory:

At the POLICY-ROOT level, I should have:

- Queue-limit = 8 888 000 / 8 * 0.05 / 1500 = 37, 37 < 64, so queue-limit should be 64 packets.

At the POLICY-CHILD-1 level, I should have:

- Queue-limit = 160 000 000 / 8 * 0.05 / 1500 = 666, 666 > 64, so queue-limit should be 666 packets.

For POLICY-CHILD-2, the Cisco documentation is unclear, but as I interpret it, the visible bandwidth of a class is its percentage of the parent shape rate (10% for SRV-RX):

- Queue-limit = (160 000 000 * 0.10) / 8 * 0.05 / 1500 = 66, 66 > 64, so queue-limit should be 66 packets.
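The three calculations above can be reproduced with a small Python sketch of the documented formula. It is only an illustration under the same assumptions as the post (1500-byte MTU, 50 ms, 64-packet floor, result truncated to whole packets); `default_queue_limit` is a hypothetical helper, not a Cisco API, and it models the documentation, not what IOS XE actually computes.

```python
def default_queue_limit(rate_bps: float, mtu_bytes: int = 1500,
                        target_s: float = 0.05, floor: int = 64) -> int:
    """Documented default: (rate / 8) * 50 ms / MTU, with a 64-packet minimum."""
    # Bytes sendable during the target time, divided by the MTU,
    # truncated to whole packets.
    packets = int(rate_bps / 8 * target_s / mtu_bytes)
    return max(floor, packets)


# Rates from the configuration above; the CHILD-2 rate is SRV-RX's 10% share
# of the 160 Mbps CHILD-1 shaper (the interpretation stated in the post).
for name, rate in [("POLICY-ROOT", 8_888_000),
                   ("POLICY-CHILD-1", 160_000_000),
                   ("POLICY-CHILD-2 / SRV-RX", 160_000_000 * 0.10)]:
    print(f"{name}: {default_queue_limit(rate)} packets")
```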

However, in practice, this is what the router shows:

```
HOST#show policy-map int Gi0/0/X.50 output
 GigabitEthernet0/0/X.50

  Service-policy output: POLICY-ROOT

    Class-map: class-default (match-any)
      13041400 packets, 1266523272 bytes
      5 minute offered rate 6000 bps, drop rate 0000 bps
      Match: any
      Queueing
      queue limit 64 packets
      (queue depth/total drops/no-buffer drops) 0/0/0
      (pkts output/bytes output) 620518/98117702
      shape (average) cir 8888000, bc 35552, be 35552
      target shape rate 8888000

      Service-policy : POLICY-CHILD-1

        Class-map: ISP-SPEED (match-any)
          13038453 packets, 1266334664 bytes
          5 minute offered rate 6000 bps, drop rate 0000 bps
          Match: access-group name ISP-SPEED
          Queueing
          queue limit 1152 packets
          (queue depth/total drops/no-buffer drops) 0/0/0
          (pkts output/bytes output) 617571/97929094
          shape (average) cir 160000000, bc 640000, be 640000
          target shape rate 160000000

          Service-policy : POLICY-CHILD-2

          !# . . . Some output omitted

            Class-map: SRV-RX (match-any)
              12914735 packets, 1203996146 bytes
              5 minute offered rate 5000 bps, drop rate 0000 bps
              Match: dscp cs6 (48)
              Queueing
              queue limit 115 packets
              (queue depth/total drops/no-buffer drops) 0/0/0
              (pkts output/bytes output) 494074/35611258
              bandwidth 10% (16000 kbps)
              Exp-weight-constant: 9 (1/512)
              Mean queue depth: 0 packets
```

For POLICY-ROOT, I get 64 packets, no issue there.

For POLICY-CHILD-1, I get 1152 packets, well above the theoretical value of 666.

Likewise, for POLICY-CHILD-2 (class SRV-RX), I get 115 packets instead of 66.
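Putting the observed and theoretical values side by side makes the discrepancy easier to see. This quick Python check uses only the numbers quoted above; the dictionary names are illustrative, and a ratio computed from two data points describes the pattern rather than explaining it.

```python
# Theoretical values from the documented formula vs. the values reported by
# "show policy-map interface" above.
theoretical = {"POLICY-ROOT": 64, "POLICY-CHILD-1": 666, "POLICY-CHILD-2 (SRV-RX)": 66}
observed = {"POLICY-ROOT": 64, "POLICY-CHILD-1": 1152, "POLICY-CHILD-2 (SRV-RX)": 115}

ratios = {name: observed[name] / theoretical[name] for name in theoretical}
for name, ratio in ratios.items():
    print(f"{name}: observed/theoretical = {ratio:.2f}")

# Share of the CHILD-1 limit that the SRV-RX class actually received.
share = observed["POLICY-CHILD-2 (SRV-RX)"] / observed["POLICY-CHILD-1"]
print(f"SRV-RX / ISP-SPEED observed = {share:.3f}")
```

Both inflated levels come out roughly 1.73 times the theoretical value, and SRV-RX still gets about 10% of the ISP-SPEED limit, matching its 10% bandwidth share.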

Moreover, it gets stranger:

If I increase the ‘WAN virtual limit’ (both the bandwidth on the sub-interface and the shape rate in POLICY-ROOT), the queue limits shown by the router tend towards the theoretical values. But if I decrease the ‘WAN virtual limit’, the queue limits shown by the router tend towards the maximum queue limit of the physical interface.

The maximum queue limit can be displayed with the following command:

```
show platform hardware qfp active infrastructure bqs interface Gi0/0/X | inc limit
```

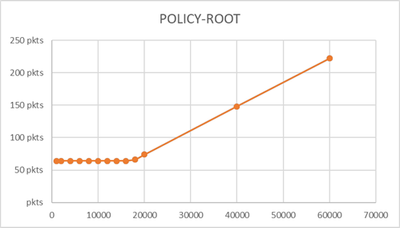

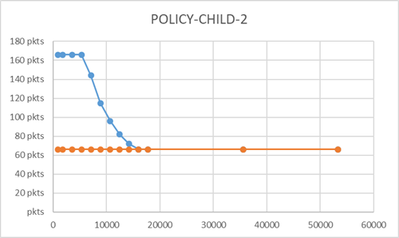

I extracted the queue limit for several WAN speed limits and plotted the results:

In orange is the theoretical queue limit, and in blue the queue limit reported by the router (speed is in kbps).

Neither a reload nor a factory reset changes this behavior.

I’ve observed the same behavior on different ISR 4000 routers and on an ASR 1001, with both IOS XE 17.3.4a and 16.6.4.

I am wondering whether anyone has seen something similar and/or has an explanation before I open a case with Cisco.

Best regards,

Clément.

- Labels: ASR 1000 Series, ISR 4000 Series


11-29-2022 03:44 PM - edited 11-29-2022 05:43 PM

Hmm, I cannot say I've seen something similar, nor do I have an explanation.

From your work, perhaps you've indeed found a software defect.

Is it worth opening a case on?

I would say no, because for getting optimal default queue limits, deriving queue limit settings from just bandwidth is often very suboptimal.

BTW, I'm very curious about your reasoning behind the root shaper, i.e. "goal is to set a virtual limit for the WAN link".


11-30-2022 01:48 AM

Well, my earlier explanation was a bit oversimplified.

Actually, there are one or more encryption devices (depending on the bandwidth) between our router and the ISP router.

The real purpose of POLICY-CHILD-1 is to limit bandwidth to the maximum capacity of the encryption device (a fixed value for a given model).

The goal of POLICY-ROOT is to limit WAN bandwidth to the bandwidth of the ISP link (or less, depending on circumstances).

Could you elaborate on what you mean when you say: “deriving queue limit settings from just bandwidth is often very suboptimal.”

I tend to agree, if only because the Cisco formula is based on the Ethernet MTU of 1500 bytes, while the average packet size is under 1000 bytes (or at least it was the last time I checked).

However, I preferred to avoid having to set the queue-limit size “manually”, both because of the large number of devices and because of contractual obligations.
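Since the default limit is counted in packets but sized with a 1500-byte MTU, the amount of time a full queue represents depends on the real average packet size. A rough Python sketch, assuming the queue drains at exactly the shaped rate and ignoring L2 overhead; `buffer_time_ms` is a hypothetical helper:

```python
def buffer_time_ms(queue_limit_pkts: int, avg_pkt_bytes: float, rate_bps: float) -> float:
    """Time needed to drain a full queue of queue_limit_pkts packets at rate_bps."""
    return queue_limit_pkts * avg_pkt_bytes * 8 / rate_bps * 1000


# 666 packets at 160 Mbps: sized for 1500-byte packets vs. a ~1000-byte average.
print(buffer_time_ms(666, 1500, 160_000_000))
print(buffer_time_ms(666, 1000, 160_000_000))
```

With 666 packets at 160 Mbps, the queue holds about 50 ms of 1500-byte packets but only about 33 ms if the average packet is 1000 bytes.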


11-30-2022 08:32 AM

"Actually, there are one or more encryption devices (depending on the bandwidth) between our router and the ISP router."

Ah, I had also been wondering about the "ip mtu 1366". It makes sense if all your interface egress traffic will be encrypted. However, 1366 seems smaller than what's usually needed (even 1400 is often a bit lower than necessary, at least when doing encryption on Cisco routers). BTW, are you familiar with the "ip tcp adjust-mss" interface command?
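For reference, the usual rule of thumb for ip tcp adjust-mss is the IP MTU minus the IPv4 and TCP headers. This Python sketch assumes 20-byte IPv4 and TCP headers with no options; `clamped_mss` is a hypothetical helper, and the right value on a real network also depends on whatever encapsulation happens downstream:

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
TCP_HEADER = 20   # bytes, assuming no TCP options


def clamped_mss(ip_mtu: int) -> int:
    """Largest TCP MSS whose segments fit in ip_mtu without fragmentation."""
    return ip_mtu - IPV4_HEADER - TCP_HEADER


print(clamped_mss(1366))  # 1326
print(clamped_mss(1400))  # 1360
```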

Your root and child-1 shapers also make (logical) sense. (Personally, I would recommend against using two shapers and the extra policy level, as they are not actually needed [the lower of the two shape rates is the overall bandwidth limiter].)
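The point about the two shapers comes down to one line: shapers in series never let traffic exceed the slowest of them. A minimal Python sketch with a hypothetical helper; the second call uses made-up rates for a site whose ISP link would be faster than its encryption device:

```python
def effective_rate_bps(root_shape_bps: int, child_shape_bps: int) -> int:
    """Two shapers in series: traffic can never exceed the slower one."""
    return min(root_shape_bps, child_shape_bps)


# Current site: the ISP-facing root shaper is the real limit.
print(effective_rate_bps(8_888_000, 160_000_000))    # 8888000
# Hypothetical site where the ISP link is faster than the encryption device.
print(effective_rate_bps(200_000_000, 160_000_000))  # 160000000
```

A single shaper configured with that minimum would give the same overall limit with one less policy level.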

"I tend to agree, if only because the Cisco formula is based on the Ethernet MTU of 1500 bytes, while the average packet size is under 1000 bytes (or at least it was the last time I checked)."

Actually, I suspect it has more to do with counting queued packets being simpler. BTW, newer IOS versions sometimes offer queue limits in bytes or ms, to address the fact that packets do vary in size. (Some newer shapers [and policers?] now appear to include L2 overhead in their bandwidth computations, either automatically or by configuration [of course, L2 overhead, as a percentage, is much impacted by packet size].)
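To put a number on how much L2 overhead depends on packet size, here is a Python sketch. It assumes 22 bytes of overhead per frame (14-byte Ethernet header, 4-byte 802.1Q tag, 4-byte FCS) and ignores preamble and inter-frame gap; the constant and helper names are hypothetical:

```python
L2_OVERHEAD = 14 + 4 + 4  # Ethernet header + 802.1Q tag + FCS, in bytes (assumption)


def l2_overhead_share(ip_packet_bytes: int, overhead: int = L2_OVERHEAD) -> float:
    """Fraction of the frame's bytes that is L2 overhead rather than IP packet."""
    return overhead / (ip_packet_bytes + overhead)


for size in (64, 512, 1500):
    print(f"{size:>5} B packet: {l2_overhead_share(size):.1%} L2 overhead")
```

Small packets carry a much larger relative L2 cost (roughly a quarter of the frame for a 64-byte packet) than full-size ones (under 2%), so a shaper counting only IP bytes can under-count what is actually put on the wire for small-packet traffic.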

"Could you elaborate on what you mean when you say: “deriving queue limit settings from just bandwidth is often very suboptimal.”"

Laugh, it depends how "elaborate" you would like me to get. To me, queue limits are just as integral to QoS as bandwidth allocations or traffic classes. (Even simplistic queue-length management often suggests either larger queues for higher-priority traffic, to avoid drops of that traffic, or larger queues for lower-priority traffic, as such traffic is more likely to be queued and needs the additional queue capacity. [Note, of course, these two approaches are opposites, but I did write they are simplistic.])

I'm willing to elaborate further, but that rabbit hole goes mighty deep (Alice in Wonderland). Or do you want the red pill or the blue pill (The Matrix)?

"However, I preferred to avoid having to set the queue-limit size “manually”, both because of the large number of devices and because of contractual obligations."

Yeah, that would be great, but really effective QoS needs you to understand your QoS goals for your traffic and create configurations that accomplish the result you want. Cisco's default configurations are often "one size that fits, ideally, almost no one".

BTW, I don't intend to imply that everything Cisco does as part of some auto-generation or auto-monitoring is bad or won't continue to improve (far from it). It's just that, for QoS, doing it "well" currently needs "hands-on" work and some network engineering expertise. (BTW, although QoS often needs to be "tailored" for you, that doesn't mean every device runs its own unique QoS configuration.)

Again, I'm willing to go to pretty much any depth explaining QoS. I have a good bit of experience making it work well in the "real world".


12-01-2022 01:43 AM

“BTW, are you familiar with the "ip tcp adjust-mss" interface command?”

Those interfaces only carry GRE tunneling traffic (with MPLS on top of it), hence the fixed MTU.

“BTW, newer IOS versions sometimes offer queue limits in bytes or ms, to address the fact that packets do vary in size.”

The hardware only supports packets and bytes, so ms values get converted to bytes. Nevertheless, yes, byte mode does address the issue. We're using WRED, and I suppose byte mode + WRED would be more efficient than packet mode + WRED (but also less readable).
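A sketch of the ms-to-bytes conversion mentioned above, in Python. It assumes the conversion uses the class's configured or shaped rate; which rate the platform actually uses is an assumption here, and `ms_to_bytes` is a hypothetical helper:

```python
def ms_to_bytes(limit_ms: float, rate_bps: float) -> int:
    """Byte limit equivalent to limit_ms of traffic at rate_bps (truncated)."""
    return int(rate_bps / 8 * limit_ms / 1000)


print(ms_to_bytes(50, 160_000_000))  # 1000000
print(ms_to_bytes(50, 8_888_000))    # 55550
```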

“It's just that, for QoS, doing it "well" currently needs "hands-on" work and some network engineering expertise”

That's kind of what we are trying to avoid: having to engineer every queue limit. Not because of the technical part, but because of the cost (software development, testing, production rollout, maintenance). I'm not sure the benefit is worth the cost, but we have opened a case with Cisco and, depending on their answer, we will have to.


12-01-2022 09:52 AM

"Those interfaces only carry GRE tunneling traffic (with MPLS on top of it), hence the fixed MTU."

Well, of course, GRE alone doesn't need an IP MTU of 1366, and MPLS generally extends frame size rather than reducing it, so I would guess there's much more to how this works. No need to go further into that, but using Cisco's ip tcp adjust-mss further upstream, assuming you're not already doing so, might be worth considering.
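The MTU arithmetic behind that remark, as a Python sketch. It assumes plain GRE over IPv4 (20-byte outer IPv4 header plus the 4-byte base GRE header, with no key, checksum or sequence fields); the names are hypothetical:

```python
OUTER_IPV4 = 20  # outer IPv4 header, bytes (no options)
GRE_BASE = 4     # base GRE header without optional fields, bytes


def ip_mtu_inside_gre(link_ip_mtu: int = 1500) -> int:
    """IP MTU left for the inner packet after plain GRE-over-IPv4 encapsulation."""
    return link_ip_mtu - OUTER_IPV4 - GRE_BASE


print(ip_mtu_inside_gre())  # 1476
print(1500 - 1366)          # 134 bytes of headroom reserved by "ip mtu 1366"
```

Plain GRE would leave an IP MTU of 1476, whereas ip mtu 1366 reserves 134 bytes below 1500, so most of that headroom must be for something other than GRE (for example, the encryption devices mentioned earlier).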

"The hardware only supports packets and bytes, so ms values get converted to bytes. Nevertheless, yes, byte mode does address the issue. We're using WRED, and I suppose byte mode + WRED would be more efficient than packet mode + WRED (but also less readable)."

Laugh, yeah, don't get me started on the issues with WRED. I generally recommend it only be used by real/true QoS experts. Without going into the issues of RED, as I often mention, search the Internet on the subject and you'll find lots of "improved" RED variants. Even Dr. Floyd revised her (the) original RED, and Cisco has its own FRED variant (which tries to address one of the major issues of RED).
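For readers unfamiliar with how (W)RED decides to drop, here is a Python sketch of classic RED as it is commonly described: an exponentially weighted average queue depth with weight 1/2^n (the Exp-weight-constant: 9 (1/512) in the output of the first post), and a drop probability that ramps linearly from 0 at the minimum threshold to 1/mark-probability-denominator at the maximum threshold. The thresholds in the example are made up, and IOS XE's hardware implementation may differ in detail:

```python
def ewma_avg(prev_avg: float, current_depth: int, exp_weight_constant: int = 9) -> float:
    """Average queue depth update with weight 1 / 2**n (n = 9 gives 1/512)."""
    w = 1 / 2 ** exp_weight_constant
    return (1 - w) * prev_avg + w * current_depth


def red_drop_probability(avg: float, min_th: float, max_th: float,
                         mark_prob_denom: int) -> float:
    """Classic RED: no drops below min_th, a linear ramp up to 1/denom
    approaching max_th, and every packet dropped at or above max_th."""
    if avg < min_th:
        return 0.0
    if avg >= max_th:
        return 1.0
    return (avg - min_th) / (max_th - min_th) / mark_prob_denom


print(ewma_avg(0.0, 512))                    # 1.0
print(red_drop_probability(30, 20, 40, 10))  # 0.05 (hypothetical thresholds)
```

With a weight of 1/512, one sample of a 512-packet-deep queue moves the average from 0 to only 1 packet, so the average reacts slowly to bursts; that slow reaction is arguably part of what makes RED tuning tricky.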

"That's kind of what we are trying to avoid: having to engineer every queue limit. Not because of the technical part, but because of the cost (software development, testing, production rollout, maintenance). I'm not sure the benefit is worth the cost, but we have opened a case with Cisco and, depending on their answer, we will have to."

Yeah, again, I fully understand. In fact, I often recommend considering the "care and feeding" when deciding to implement technologies. Ditto for a cost vs. benefit analysis. Heck, even with QoS, I've never suggested it must be everywhere, as often the most "bang for the buck" comes from implementing it only at congestion points where congestion adversely affects the traffic. Even for problematic congestion points, sometimes the "best" solution is acquiring more bandwidth.

On the other hand, the benefits of "good" QoS are often overlooked, and "good" QoS often doesn't need to be "complex".

Unfortunately, "by-the-book" QoS models, like the RFC/Cisco 12-class (or so) models, are overall not much better than a global FIFO queue, except for high-priority real-time traffic such as VoIP.

On Cisco routers that support FQ, moving from a single global FIFO queue to FQ often yields wonderful results. Combine it with LLQ for real-time traffic, and you likely have a simple QoS policy that covers 90 to 95% of all QoS needs. Adding two more classes, ideally also using FQ, one with higher priority than BE and one with lower priority than BE, might cover about 99% of all QoS needs.

I.e., my 99% QoS policy:

```
policy-map 99percent
 class real-time
  priority percent 33
 class hi-priority
  bandwidth remaining percent 81
  fair-queue
 class lo-priority
  bandwidth remaining percent 1
  fair-queue
 class class-default
  bandwidth remaining percent 9
  fair-queue
```
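To show why per-flow fair queuing helps compared with a single FIFO, here is a deficit round robin (DRR) sketch in Python. DRR is a swapped-in, well-known fair-queuing algorithm used purely for illustration; it is not necessarily what Cisco's fair-queue implements, and the flow names and packet sizes are hypothetical:

```python
from collections import deque


def drr_schedule(flows: dict, quantum: int) -> list:
    """Deficit round robin over per-flow queues.

    flows maps a flow name to its queued packet sizes in bytes (head first).
    Returns the transmit order as (flow, size) tuples.
    """
    queues = {name: deque(sizes) for name, sizes in flows.items()}
    deficit = {name: 0 for name in flows}
    active = deque(name for name in flows if queues[name])
    order = []
    while active:
        name = active.popleft()
        deficit[name] += quantum          # each visit earns one quantum of credit
        q = queues[name]
        while q and q[0] <= deficit[name]:
            size = q.popleft()            # send head packet while credit allows
            deficit[name] -= size
            order.append((name, size))
        if q:
            active.append(name)           # still backlogged: back of the round
        else:
            deficit[name] = 0             # idle flows do not bank credit
    return order


order = drr_schedule({"bulk": [1500] * 3, "small": [300] * 3}, quantum=1500)
print([name for name, _ in order])
# ['bulk', 'small', 'small', 'small', 'bulk', 'bulk']
```

The small flow's packets go out right after the first bulk packet instead of waiting behind all three, as they would in a FIFO where the bulk packets arrived first.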


12-19-2022 01:42 AM

Hi,

I wanted to make a quick update after having discussed the matter with Cisco.

From their perspective, the way we implement QoS (child policy shape rate > parent policy shape rate) does not follow good practices. Therefore, it is not a bug.

Moreover, they told me that, for the same reason, the queue-limit values are unpredictable.

I don't think the way we do QoS explicitly goes against good practices, but we certainly aren't doing it the logical/classic way. However, from my experiments, the queue-limit values clearly follow a pattern.

Anyway, it is what it is, so I'm going to look into setting the queue-limit values explicitly in the configuration of each policy-map.
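If the templating system is extended to set explicit limits, a generator along these lines could emit them per class. This is a hypothetical Python sketch: it reuses the documented formula from the first post, takes the reference rate as an input (the CHILD-1 shape rate per the interpretation in the first post, or the effective root rate if preferred), and only covers bandwidth-percent classes. queue-limit <n> packets is the IOS XE policy-map class syntax, but the sizing policy itself is a design choice:

```python
def default_queue_limit(rate_bps: float, mtu: int = 1500,
                        target_s: float = 0.05, floor: int = 64) -> int:
    """Documented default: (rate / 8) * 50 ms / MTU, at least 64 packets."""
    return max(floor, int(rate_bps / 8 * target_s / mtu))


def queue_limit_lines(policy: str, classes: dict, parent_rate_bps: float) -> list:
    """Emit explicit queue-limit lines; classes maps class name -> bandwidth %."""
    lines = [f"policy-map {policy}"]
    for cls, percent in classes.items():
        limit = default_queue_limit(parent_rate_bps * percent / 100)
        lines += [f" class {cls}", f"  queue-limit {limit} packets"]
    return lines


config = queue_limit_lines("POLICY-CHILD-2", {"SRV-RX": 10, "INTERACTIVE": 35}, 160_000_000)
print("\n".join(config))
```

Pinning the values this way also makes the behavior independent of any future change to the automatic calculation.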

Thanks for the exchange @Joseph W. Doherty, it was quite enlightening.

Best regards,

Clément.


12-19-2022 07:19 AM

@Clement L thank you for the "update".

I don't see anything wrong with parent and child shapers, but I assume what Cisco is calling not good practice is the parent shaper being more restrictive than a child shaper, correct? If so, I will say it's a bit "unusual".

It sort of makes sense, though, that when queue limits aren't explicitly defined, Cisco sets them based on shaping values, as those indicate bandwidth limits (much as they might do for physical interfaces of different bandwidths).

I can appreciate why you don't want to set queue sizes explicitly, but it's nice that you can. Furthermore, setting them, if truly important to your QoS goals, avoids situations where Cisco decides to revise its automatic queue-size calculation in some subsequent IOS version, changing how your QoS "works".
