BFD over Logical Bundle (BLB) implementation on NCS5500 platforms

Before going further ...

This document describes "BFD over Logical Bundle" (BLB) implementation on NCS5500 platforms.

BLB over IPv4 is supported on these platforms since IOS XR 6.3.2.

BLB over IPv6 is supported on these platforms since IOS XR 6.6.1 (limited availability release).

For older platforms (like ASR9K or CRS) running IOS XR, BLB has been supported since 4.3.0.

Unless noted, details in this document are applicable across all platforms running IOS XR.

A lot of documentation already exists about the XR implementation of BLB and of BFD in general. This document draws on some of it while adding new data specific to the NCS5500 implementation.

This is also a living document; it will be updated as new information becomes available.

Update 4/15/2021: this article will no longer be updated.

The information in this document is accurate as of IOS XR 7.0.2.

Newer versions of IOS XR might have new BFD features or enhancements that are not covered in this document.

Whenever in doubt, please reach out to your friendly TAC team for assistance.

A particularly good general reference is as follows:

https://supportforums.cisco.com/t5/service-providers-documents/bfd-support-on-cisco-asr9000/ta-p/3153191

A very high level overview of BLB

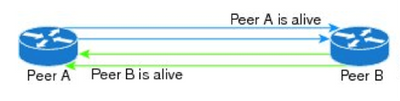

In the context of routing, the purpose of BFD is to detect a communication failure between two routers faster than the routing protocols' own detection timers allow.

BFD detects the failure by monitoring incoming BFD control packets from the neighbor router.

If a number of packets are lost in transmission for whatever reason and thus not received by the monitoring router, the monitoring router will bring down the routing session to the neighbor router.

Keep in mind that BFD will bring down the routing session, but it is up to the routing protocol to bring the routing session up again (i.e. BFD is only responsible for bringing routing sessions down, not for bringing them up).

So it is possible that the following scenario might happen:

- BFD misses BFD control packets from a specific neighbor.

- The BFD session goes down, which in turn brings down the routing session (say, OSPF) to that neighbor.

- After a while, OSPF for some reason manages to recover on its own (maybe the problem only affects BFD packets and not OSPF packets).

- OSPF session to the neighbor will recover.

- But BFD session will still be down since it still experiences missing BFD packets.

So now we have OSPF up and BFD down.

This outcome is counter-intuitive but expected.
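The scenario above can be sketched as a toy model. It only illustrates the one-way relationship described here and says nothing about how IOS XR is implemented internally; the class and method names are made up for this example.

```python
class RoutingSession:
    """Toy routing adjacency (e.g. OSPF) that can recover on its own."""

    def __init__(self, name):
        self.name = name
        self.up = True

    def bfd_down(self):
        # BFD tells its client that the neighbor is unreachable.
        self.up = False

    def protocol_recovers(self):
        # The protocol's own hellos succeed again; BFD is not involved.
        self.up = True


class BfdSession:
    """Toy BFD session: it can tear its client down, never bring it up."""

    def __init__(self, client):
        self.client = client
        self.up = True

    def control_packets_missed(self):
        self.up = False
        self.client.bfd_down()


ospf = RoutingSession("ospf")
bfd = BfdSession(ospf)

bfd.control_packets_missed()   # BFD goes down and takes OSPF with it
ospf.protocol_recovers()       # OSPF comes back through its own hellos

print("OSPF up:", ospf.up, "BFD up:", bfd.up)
```

Nothing in the model lets the routing session pull the BFD session back up, which is why the end state is OSPF up with BFD still down.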

BLB is a BFD implementation on a bundle interface.

Since a bundle interface has multiple member links, BFD needs a somewhat more complex implementation when it runs on a bundle interface than when it runs on a regular physical interface.

BFD implementation on bundle interface:

BVLAN vs BoB vs BLB

There are three different BFD over bundle interface implementations on IOS XR platform:

- BVLAN ("BFD over VLAN over bundle")

Note:

Supported since IOS-XR 3.3, withdrawn and then supported again on 3.8.2.

Now has reached end of development and thus not advised to be implemented anymore.

BFD session can run only on VLAN subinterfaces (e.g. be1.1), not on main bundle interface (e.g. be1).

- BoB ("BFD over Bundle")

Also known as:

MicroBFD

Note:

Supported since IOS-XR 4.0.1 for ASR9K.

Operation:

Each bundle member link runs its own BFD session. In the above figure, we will have 4 total BFD sessions running (1 session per member link).

BFD Client:

bundlemgr, i.e. a down BFD session on a specific member link can potentially bring down the whole bundle interface (say, when the down member link makes the number of available links fall below the required minimum).

The down bundle interface will in turn bring down the routing session.

- BLB ("BFD over Logical Bundle")

Also known as:

Logical bundle

Note:

Supported since IOS-XR 4.3.0 for ASR9K, since 6.3.2 for NCS5500.

Replaces BVLAN implementation.

Does not support echo mode (since BLB relies on bfd multipath implementation).

BFD session can run on main bundle interface (e.g. be1) as well as on VLAN subinterface (e.g. be1.1).

For ASR9K, BoB and BLB coexistence can be configured via "bfd bundle coexistence bob-blb <>" config, but this is not supported yet for NCS5500.

Operation:

On NCS5500 platforms, BFD is hardware offloaded, meaning processing of the BFD packets will mostly be done in LC NPU.

LC CPU will process BFD packets only during BFD initialization process.

No BFD packets are ever sent to RP.

This is different from the default operation of the ASR9K platform, in which RP and LC work together to support BFD sessions.

User will configure a specific LC to host BFD sessions, which doesn't need to be the same LC on which bundle member links reside.

So for example, it's possible that bundle member links are on LC slot 2 and slot 3, while the BFD sessions are actually hosted by LC on slot 5.

This is different from the way BFD on a non-bundle interface works.

For non-bundle BFD, the BFD sessions will always be hosted on the LC where the port resides.

So for example, say we configure OSPF BFD over Hu0/6/0/32.147 interface, then this BFD session will always be hosted by LC on slot 6.

In case of BLB, the host LC will:

- Send BFD packets into bundle by querying FIB for the list of next hops for the BFD session destination address.

This is done according to load-balance algorithm, thus different sessions may use different member links of the bundle.

- Receive BFD packets from bundle via internal path.

At any time, Tx packets for a particular BFD session will be on one single bundle member link.

Rx packets, on the other hand, can be received on any bundle member link.

When LC NPU doesn't receive BFD packets in timely manner, it will generate protection packet towards LC CPU.

LC CPU will then bring BFD sessions down and notify routing protocol clients.
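As a rough illustration of "not received in a timely manner", the sketch below applies the usual async detection rule (interval multiplied by the detect multiplier) to a list of packet arrival times. It is a simplified model run in plain Python, not the NPU logic; the function names are made up for this example, and it only looks at gaps between packets that did arrive.

```python
def detection_time_ms(interval_ms, multiplier):
    """Async detection time: negotiated interval times detect multiplier."""
    return interval_ms * multiplier


def first_timeout_ms(rx_times_ms, interval_ms, multiplier):
    """Return the time at which the session would expire, or None.

    rx_times_ms: sorted arrival times of BFD control packets, in ms.
    The session expires when the gap after a received packet exceeds
    one detection time.
    """
    limit = detection_time_ms(interval_ms, multiplier)
    for prev, cur in zip(rx_times_ms, rx_times_ms[1:]):
        if cur - prev > limit:
            return prev + limit
    return None


# 300 ms * 3: a 1200 ms gap after t=600 exceeds the 900 ms detection time.
print(first_timeout_ms([0, 300, 600, 1800], 300, 3))
# Packets arriving every 300 ms never trip the timer.
print(first_timeout_ms([0, 300, 600, 900], 300, 3))
```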

Same as other XR platforms, BFD packets will use UDP with destination port of 3784. Source port might differ though.
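For readers who want to see what travels over that UDP port, the following sketch packs the 24-byte mandatory section of a BFD control packet as laid out in RFC 5880. It only builds bytes in memory and sends nothing. The discriminators and timers are borrowed from the sample output later in this document; the function name and its parameters are made up for this example, and authentication and echo are left out.

```python
import struct

BFD_SINGLE_HOP_PORT = 3784  # UDP destination port, as stated above
STATE_UP = 3                # RFC 5880 state value for "Up"


def build_bfd_control(my_discr, your_discr, tx_ms, rx_ms, multiplier,
                      state=STATE_UP, cpi=True):
    """Pack the 24-byte mandatory section of a BFD control packet."""
    version, diag = 1, 0
    byte0 = (version << 5) | diag
    # Second byte: Sta(2 bits) P F C A D M; only C is set in this sketch.
    byte1 = (state << 6) | (int(cpi) << 3)
    length = 24
    return struct.pack(
        "!BBBBIIIII",
        byte0, byte1, multiplier, length,
        my_discr, your_discr,
        tx_ms * 1000,   # Desired Min TX Interval, in microseconds
        rx_ms * 1000,   # Required Min RX Interval, in microseconds
        0,              # Required Min Echo RX Interval: 0 means no echo
    )


pkt = build_bfd_control(845, 12584150, 300, 300, 3)
print(len(pkt), pkt[:4].hex())
```

The intervals are carried in microseconds on the wire, which is why the 300 ms timers are multiplied by 1000 before packing.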

Here's an example of BLB operation:

In the figure above, be1 has 2 member links: link1 on LC1 and link2 on LC2.

LC1 is configured as BFD host LC.

We configure 4 VLAN subinterfaces: be1.1, be1.2, be1.3, and be1.4.

BFD session X is running for be1.1 (say, to serve OSPF on be1.1).

BFD session Y is running for be1.2 (say, to serve ISIS on be1.2).

Based on load-balance algorithm for the next hops of the BFD session destination address:

- BFD packets for BFD session X will be transmitted on link1

- BFD packets for BFD session Y will be transmitted on link2

Incoming BFD packets for any session can be received on any link (link1 or link2).

BFD Client:

Routing protocols (single-hop BGP, ISIS, OSPF, static as of IOS-XR 6.3.2), i.e. down BFD brings down routing session.

Detection of physical bundle member link failure is done via ifmgr and/or LACP informing bundlemgr, which doesn't have anything to do with BFD.

In case of member link failure (that happens to host a specific BLB session), bundlemgr will update the load-balance tables and transmit the BFD packets using different member link, which means a failure of member link will NOT bring down the BLB session.

When it comes to BLB implementation, the only time bundle will bring down BLB sessions is when the whole bundle goes down.

This is because BLB then won't be able to transmit BFD packets on any bundle member link.
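The behavior described above can be sketched as follows. The real load-balance hash is not described in this document, so `zlib.crc32` stands in for it purely as an assumption, and the session key string is hypothetical.

```python
import zlib


def pick_tx_link(session_key, active_links):
    """Pick one member link for a session's Tx packets.

    crc32 is a stand-in for the platform's load-balance hash.
    Returns None when no member link is active.
    """
    if not active_links:
        return None
    index = zlib.crc32(session_key.encode()) % len(active_links)
    return active_links[index]


links = ["link1", "link2"]
key = "be1.1->202.158.1.2"   # hypothetical session key

first = pick_tx_link(key, links)

# Member link failure: the link is removed and Tx moves to a survivor,
# so the BLB session itself stays up.
survivors = [link for link in links if link != first]
second = pick_tx_link(key, survivors)

# Whole bundle down: nothing left to transmit on, so the session drops.
third = pick_tx_link(key, [])

print(first, second, third)
```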

Sample Configurations

BVLAN

Configure BFD under each desired protocol.

-----------------------------------------------------------------------------
interface Bundle-Ether2.1
 ipv4 address 200.1.1.1 255.255.255.0
 encapsulation dot1q 1
!
router static
 address-family ipv4 unicast
  202.158.3.13/32 200.1.1.2 bfd fast-detect minimum-interval 300 multiplier 3
 !
!
router isis cybi
 interface Bundle-Ether2.1
  bfd minimum-interval 300
  bfd multiplier 3
  bfd fast-detect ipv4
  address-family ipv4 unicast
  !
 !
!
router ospf cybi
 area 0
  interface Bundle-Ether2.1
   bfd fast-detect
   bfd minimum-interval 300
   bfd multiplier 3
  !
 !
!
router bgp 4787
 neighbor 202.158.1.1
  remote-as 4787
  address-family ipv4 unicast
   route-policy PASS-ALL in
   route-policy PASS-ALL out
  !
  bfd fast-detect
  bfd multiplier 3
  bfd minimum-interval 300
 !
!
-----------------------------------------------------------------------------

BoB

No need for BFD config under protocol since routing protocol is NOT client to BoB.

BoB is configured on main bundle interface (i.e. be1), NOT on VLAN subinterface (i.e. be1.1).

Only ietf mode is supported for NCS5500 platform.

-----------------------------------------------------------------------------
interface be1
 bfd mode ietf
 bfd address-family ipv4 destination 202.158.2.1
 bfd address-family ipv4 fast-detect
-----------------------------------------------------------------------------

BLB

Configure BFD under each desired protocol (exactly the same as for BVLAN).

Configure multipath capability under BFD since BFD needs to use multiple paths (i.e. bundle member links) to reach BFD neighbor.

Assuming we pick the LC in slot 6 to host BFD:

-----------------------------------------------------------------------------
bfd
 multipath include location 0/6/CPU0
-----------------------------------------------------------------------------

Multiple LCs can be configured for load sharing / redundancy purpose:

-----------------------------------------------------------------------------
bfd
 multipath include location 0/6/CPU0
 multipath include location 0/2/CPU0
 ...
-----------------------------------------------------------------------------

There is no specific algorithm to pick the host LC for a particular BLB session.

At any point in time, any configured LC that has sufficient resources (in terms of PPS, etc.) can host any new BLB session.

Whenever a host LC can no longer support this session type (in terms of PPS, etc.), any new BLB session will be created on the next LC in the list.

Whenever a host LC restarts, its hosted BLB sessions will be brought down and recreated in next host LC in list.
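One way to picture the "next LC in list" behavior is a first-fit placement over the configured host LCs. This is only a sketch under that assumption: the text above says there is no specific algorithm, resource limits are reduced here to a single per-LC session count, and all function names are made up for the example.

```python
def first_lc_with_room(placement, host_lcs, capacity):
    """Return the first LC in list order with spare capacity, else None."""
    for lc in host_lcs:
        if len(placement[lc]) < capacity:
            return lc
    return None


def place_sessions(session_ids, host_lcs, capacity):
    """Assign each session to the first configured LC with room left."""
    placement = {lc: [] for lc in host_lcs}
    for sid in session_ids:
        lc = first_lc_with_room(placement, host_lcs, capacity)
        if lc is None:
            raise RuntimeError(f"no host LC has room for session {sid}")
        placement[lc].append(sid)
    return placement


def rehost(placement, restarted_lc, capacity):
    """Recreate the sessions of a restarted LC on the remaining LCs."""
    survivors = [lc for lc in placement if lc != restarted_lc]
    new = {lc: list(placement[lc]) for lc in survivors}
    for sid in placement[restarted_lc]:
        lc = first_lc_with_room(new, survivors, capacity)
        if lc is None:
            raise RuntimeError(f"no host LC has room for session {sid}")
        new[lc].append(sid)
    return new


lcs = ["0/6/CPU0", "0/2/CPU0"]
spill = place_sessions(["s1", "s2", "s3"], lcs, capacity=2)
moved = rehost(place_sessions(["s1", "s2", "s3"], lcs, capacity=4),
               "0/6/CPU0", capacity=4)
print(spill)
print(moved)
```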

Next, configure the following to ensure that an adjacency entry is created for the BFD peer IP address. Without this configuration, hardware session creation might fail.

This configuration is mandatory for IOS XR 6.6.2 and above.

cef proactive-arp-nd enable

Supported Scale and Timers

How many BFD sessions can run at one time depends on multiple factors, as follows:

- How many clients are served by the BFD sessions.

The fewer BFD clients to support, the more BFD sessions can be run.

For example:

We can run more BFD sessions if they serve only OSPF clients, instead of OSPF, ISIS, BGP, and static route clients all together.

- How aggressive the BFD timers are.

The less aggressive the timers are, the more BFD sessions can be run.

For example:

We can run more BFD sessions if they're configured with 300ms*3 timers, instead of 150ms*3.
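The effect of the timers on scale is simple arithmetic: each async session sends one control packet per interval, so halving the interval doubles the packet rate. A small sketch follows; the 1000 pps budget is an arbitrary figure chosen for the example, not a platform limit, and the function names are made up.

```python
def tx_pps(sessions, interval_ms):
    """Control packets per second sent for `sessions` async sessions."""
    return sessions * 1000 / interval_ms


def max_sessions(pps_budget, interval_ms):
    """Sessions that fit in a packets-per-second budget at this interval."""
    return int(pps_budget * interval_ms // 1000)


# Same hypothetical 1000 pps budget, two different timers.
print(max_sessions(1000, 300))   # 300 ms interval
print(max_sessions(1000, 150))   # 150 ms interval
print(round(tx_pps(250, 300), 1))
```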

On NCS5500 platforms, supported scale is as follows:

Quick note about LC:

Modular NCS-5500 platforms like NCS-5508 support multiple LCs, while a "pizza box" platform like NCS-5501 is considered a single LC as a whole (LC 0/0/CPU0).

Per LC:

500 mixed BFD sessions (multihop, singlehop on regular interface, BLB, BoB).

( BLB + BFD multihop ) per LC:

Modular platforms (NCS-5508, NCS-5516 etc) : 250 sessions

"Pizza boxes" platforms with Jericho or better ASIC (NCS-55A2-MOD, NCS-55A1-24H, etc) : 250 sessions

"Pizza boxes" platforms with Qumran ASIC (NCS-5501-SE, NCS-5501) : 125 sessions

Per chassis:

Modular platforms (NCS-5508, NCS-5516 etc) : 4000 mixed BFD sessions

"Pizza boxes" platforms (NCS-5501 etc) : 500 mixed BFD sessions

During testing, each BLB session had OSPF, ISIS, and BGP as clients.
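The limits above can be turned into a quick planning check. This is a sketch only: the numbers are copied from this section, while the platform keys (`modular`, `fixed_jericho`, `fixed_qumran`) and function names are labels invented for the example. The authoritative figures for a given router come from "show bfd summary".

```python
# Limits quoted in this section, keyed by a made-up platform label.
BLB_MULTIHOP_PER_LC = {"modular": 250, "fixed_jericho": 250, "fixed_qumran": 125}
MIXED_PER_LC = 500
MIXED_PER_CHASSIS = {"modular": 4000, "fixed_jericho": 500, "fixed_qumran": 500}


def fits_on_lc(platform, blb, multihop, other):
    """True if the planned session mix stays within the per-LC limits."""
    if blb + multihop > BLB_MULTIHOP_PER_LC[platform]:
        return False
    return blb + multihop + other <= MIXED_PER_LC


def fits_on_chassis(platform, total_sessions):
    """True if the total session count stays within the chassis limit."""
    return total_sessions <= MIXED_PER_CHASSIS[platform]


print(fits_on_lc("modular", blb=200, multihop=50, other=250))
print(fits_on_lc("fixed_qumran", blb=100, multihop=50, other=0))
print(fits_on_chassis("modular", 4000))
```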

On NCS5500 platforms, recommended timer is as follows:

Minimum of 300ms with multiplier 3.

A value more aggressive (i.e. lower) than 300ms can be configured but is not advised.

This is because the BLB implementation (BFD multipath) depends on FIB to get BFD updates, and this FIB part is still done in software.

Intervals of less than 300ms are likely to produce false failures and thus can bring down the BFD session.

A multiplier of less than 3 is also not supported.

"show bfd summary" command will give you the info about supported BFD PPS and sessions per chassis.

BLB and NSR

RP switch over will not tear down existing BLB sessions when NSR is configured under each desired routing protocols.

Caveats

As with ASR9K, only BFD async mode is supported for BLB; echo mode is not supported.

In fact, echo mode is not supported at all on NCS5500 as of the 6.3.2 release.

NCS5500 does not support MPLS protocols like RSVP-TE and LDP as BFD client.

Since BFD processing is hardware offloaded, BFD packet counters will not increment when we issue certain show bfd commands like "show bfd session detail".

This is expected since regular BFD CLI command will derive the counter from LC CPU, not from LC NPU.

Sample Scenario

Topology

NCS-5508 "potat"

|

|

|Bundle-Ether2.1 : 1 BLB session with OSPF and static route as client

|Bundle-Ether2.2 : 1 BLB session with ISIS and BGP as client

|

|

NCS-5501 "birin"

Router configurations

(only relevant config is shown)

NCS-5508 "potat"

-----------------------------------------------------------------------------
bfd
 multipath include location 0/6/CPU0
!
! mandatory starting with IOS XR 6.6.2
cef proactive-arp-nd enable
interface Bundle-Ether2.1
 ipv4 address 202.158.1.1 255.255.255.0
 encapsulation dot1q 1
!
interface Bundle-Ether2.2
 ipv4 address 202.158.2.1 255.255.255.0
 encapsulation dot1q 2
!
router static
 address-family ipv4 unicast
  202.158.3.13/32 202.158.1.2 bfd fast-detect minimum-interval 300 multiplier 3
 !
!
router isis cybi
 nsr
 interface Bundle-Ether2.2
  bfd minimum-interval 300
  bfd multiplier 3
  bfd fast-detect ipv4
  address-family ipv4 unicast
  !
 !
!
router ospf cybi
 nsr
 area 0
  interface Bundle-Ether2.1
   bfd fast-detect
   bfd minimum-interval 300
   bfd multiplier 3
  !
 !
!
router bgp 4787
 nsr
 neighbor 202.158.2.2
  remote-as 4787
  address-family ipv4 unicast
   route-policy PASS-ALL in
   route-policy PASS-ALL out
  !
  update-source Bundle-Ether2.2
  bfd fast-detect
  bfd multiplier 3
  bfd minimum-interval 300
 !
!
-----------------------------------------------------------------------------

NCS-5501 "birin"

-----------------------------------------------------------------------------
bfd
 multipath include location 0/0/CPU0
!
! mandatory starting with IOS XR 6.6.2
cef proactive-arp-nd enable
interface Bundle-Ether2.1
 ipv4 address 202.158.1.2 255.255.255.0
 encapsulation dot1q 1
!
interface Bundle-Ether2.2
 ipv4 address 202.158.2.2 255.255.255.0
 encapsulation dot1q 2
!
router static
 address-family ipv4 unicast
  202.158.3.14/32 202.158.1.1 bfd fast-detect minimum-interval 300 multiplier 3
 !
!
router isis cybi
 interface Bundle-Ether2.2
  bfd minimum-interval 300
  bfd multiplier 3
  bfd fast-detect ipv4
  address-family ipv4 unicast
  !
 !
!
router ospf cybi
 area 0
  interface Bundle-Ether2.1
   bfd fast-detect
   bfd minimum-interval 300
   bfd multiplier 3
  !
 !
!
router bgp 4787
 neighbor 202.158.2.1
  remote-as 4787
  address-family ipv4 unicast
   route-policy PASS-ALL in
   route-policy PASS-ALL out
  !
  update-source Bundle-Ether2.2
  bfd fast-detect
  bfd multiplier 3
  bfd minimum-interval 300
 !
!
-----------------------------------------------------------------------------

Show commands

(from NCS-5508 "potat")

RP/0/RP0/CPU0:potat#sh bfd session
Interface           Dest Addr           Local det time(int*mult)      State
                                    Echo             Async   H/W   NPU
------------------- --------------- ---------------- ---------------- ----------
BE2.1               202.158.1.2     0s(0s*0)         900ms(300ms*3)   UP
                                                             Yes   0/6/CPU0
BE2.2               202.158.2.2     0s(0s*0)         900ms(300ms*3)   UP
                                                             Yes   0/6/CPU0

RP/0/RP0/CPU0:potat#sh bfd session detail interface be2.1

I/f: Bundle-Ether2.1, Location: 0/6/CPU0

Dest: 202.158.1.2

Src: 202.158.1.1

State: UP for 0d:21h:35m:54s, number of times UP: 1

Session type: SW/V4/SH/BL

Received parameters:

Version: 1, desired tx interval: 300 ms, required rx interval: 300 ms

Required echo rx interval: 0 ms, multiplier: 3, diag: None

My discr: 12584150, your discr: 845, state UP, D/F/P/C/A: 0/0/0/1/0

Transmitted parameters:

Version: 1, desired tx interval: 300 ms, required rx interval: 300 ms

Required echo rx interval: 0 ms, multiplier: 3, diag: None

My discr: 845, your discr: 12584150, state UP, D/F/P/C/A: 0/1/0/1/0

Timer Values:

Local negotiated async tx interval: 300 ms

Remote negotiated async tx interval: 300 ms

Desired echo tx interval: 0 s, local negotiated echo tx interval: 0 ms

Echo detection time: 0 ms(0 ms*3), async detection time: 900 ms(300 ms*3)

Label:

Internal label: 64119/0xfa77

Local Stats:

Intervals between async packets:

Tx: Number of intervals=3, min=160 ms, max=726 ms, avg=385 ms

Last packet transmitted 77754 s ago

Rx: Number of intervals=4, min=100 ms, max=270 ms, avg=183 ms

Last packet received 77753 s ago

Intervals between echo packets:

Tx: Number of intervals=0, min=0 s, max=0 s, avg=0 s

Last packet transmitted 0 s ago

Rx: Number of intervals=0, min=0 s, max=0 s, avg=0 s

Last packet received 0 s ago

Latency of echo packets (time between tx and rx):

Number of packets: 0, min=0 ms, max=0 ms, avg=0 ms

MP download state: BFD_MP_DOWNLOAD_ACK

State change time: Dec 14 18:38:06.721

Session owner information:

Desired Adjusted

Client Interval Multiplier Interval Multiplier

-------------------- --------------------- ---------------------

ospf-cybi 300 ms 3 300 ms 3

ipv4_static 300 ms 3 300 ms 3

H/W Offload Info:

H/W Offload capability : Y, Hosted NPU : 0/6/CPU0

Async Offloaded : Y, Echo Offloaded : N

Async rx/tx : 5/4

Platform Info:

NPU ID: 0

Async RTC ID : 1 Echo RTC ID : 0

Async Feature Mask : 0x0 Echo Feature Mask : 0x0

Async Session ID : 0x34d Echo Session ID : 0x0

Async Tx Key : 0x34d Echo Tx Key : 0x0

Async Tx Stats addr : 0x0 Echo Tx Stats addr : 0x0

Async Rx Stats addr : 0x0 Echo Rx Stats addr : 0x0

RP/0/RP0/CPU0:potat#sh bfd session detail destination 202.158.2.2

I/f: Bundle-Ether2.2, Location: 0/6/CPU0

Dest: 202.158.2.2

Src: 202.158.2.1

State: UP for 0d:21h:39m:36s, number of times UP: 1

Session type: SW/V4/SH/BL

Received parameters:

Version: 1, desired tx interval: 300 ms, required rx interval: 300 ms

Required echo rx interval: 0 ms, multiplier: 3, diag: None

My discr: 12584129, your discr: 824, state UP, D/F/P/C/A: 0/0/0/1/0

Transmitted parameters:

Version: 1, desired tx interval: 300 ms, required rx interval: 300 ms

Required echo rx interval: 0 ms, multiplier: 3, diag: None

My discr: 824, your discr: 12584129, state UP, D/F/P/C/A: 0/1/0/1/0

Timer Values:

Local negotiated async tx interval: 300 ms

Remote negotiated async tx interval: 300 ms

Desired echo tx interval: 0 s, local negotiated echo tx interval: 0 ms

Echo detection time: 0 ms(0 ms*3), async detection time: 900 ms(300 ms*3)

Label:

Internal label: 64098/0xfa62

Local Stats:

Intervals between async packets:

Tx: Number of intervals=3, min=160 ms, max=616 ms, avg=383 ms

Last packet transmitted 77975 s ago

Rx: Number of intervals=4, min=100 ms, max=374 ms, avg=209 ms

Last packet received 77975 s ago

Intervals between echo packets:

Tx: Number of intervals=0, min=0 s, max=0 s, avg=0 s

Last packet transmitted 0 s ago

Rx: Number of intervals=0, min=0 s, max=0 s, avg=0 s

Last packet received 0 s ago

Latency of echo packets (time between tx and rx):

Number of packets: 0, min=0 ms, max=0 ms, avg=0 ms

MP download state: BFD_MP_DOWNLOAD_ACK

State change time: Dec 14 18:38:06.721

Session owner information:

Desired Adjusted

Client Interval Multiplier Interval Multiplier

-------------------- --------------------- ---------------------

isis-cybi 300 ms 3 300 ms 3

bgp-default 300 ms 3 300 ms 3

H/W Offload Info:

H/W Offload capability : Y, Hosted NPU : 0/6/CPU0

Async Offloaded : Y, Echo Offloaded : N

Async rx/tx : 5/4

Platform Info:

NPU ID: 0

Async RTC ID : 1 Echo RTC ID : 0

Async Feature Mask : 0x0 Echo Feature Mask : 0x0

Async Session ID : 0x338 Echo Session ID : 0x0

Async Tx Key : 0x338 Echo Tx Key : 0x0

Async Tx Stats addr : 0x0 Echo Tx Stats addr : 0x0

Async Rx Stats addr : 0x0 Echo Rx Stats addr : 0x0
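When checking many sessions, it can help to pull the negotiated timers out of the detail output with a script. The sketch below is a minimal example that assumes the exact "async detection time" line format shown in the captures above; other releases may print it differently, and the function name is made up.

```python
import re

# Matches e.g. "async detection time: 900 ms(300 ms*3)"
DETECT_RE = re.compile(r"async detection time: (\d+) ms\((\d+) ms\*(\d+)\)")


def async_detection(detail_text):
    """Return (detection_ms, interval_ms, multiplier), or None if absent."""
    match = DETECT_RE.search(detail_text)
    if match is None:
        return None
    return tuple(int(group) for group in match.groups())


sample = ("Echo detection time: 0 ms(0 ms*3), "
          "async detection time: 900 ms(300 ms*3)")
detection, interval, mult = async_detection(sample)
print(detection, interval, mult)
```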

Logs to provide to Cisco TAC for BLB related issues on NCS5500 platform

As BLB runs between 2 routers, we need to provide logs from both routers.

Gather the following set of logs from each router:

- Replace "NAME_OF_ROUTER" with the name of your router.

- Replace "HOSTLC" with the location of the LC, e.g. 00cpu0 for 0/0/CPU0.

- Timestamp when the problem occurs (e.g. 16:25:15.095 GMT-7 Fri Dec 15 2017), the more exact, the better.

It's best if the timestamp can be copied from a specific line of "show log" output.

- show tech routing bfd file harddisk:/NAME_OF_ROUTER_sh_tech_routing_bfd

- show tech bfdhwoff location <host LC> file harddisk:/NAME_OF_ROUTER_sh_tech_bfdhwoff_loca_HOSTLC

(if you have multiple host LCs, grab show tech from all of them)

- show dpa trace location <host LC> | file harddisk:/NAME_OF_ROUTER_show_dpa_trace_location_HOSTLC.txt

(if you have multiple host LCs, grab output from all of them)

- show controller fia trace all location <host LC> | file harddisk:/NAME_OF_ROUTER_show_controller_fia_trace_all_location_HOSTLC.txt

(if you have multiple host LCs, grab output from all of them)

- show bfd session detail | file harddisk:/NAME_OF_ROUTER_show_bfd_sess_det.txt

- show bfd summary | file harddisk:/NAME_OF_ROUTER_show_bfd_sum.txt

- show log | file harddisk:/NAME_OF_ROUTER_show_log.txt

(showing the events when the problem occurs)
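If you collect these logs often, the substitutions can be scripted. The sketch below only builds the command strings listed above for one router and its host LCs; it does not connect to any device, and the function names are made up for this example.

```python
def hostlc_tag(location):
    """Turn an LC location such as 0/0/CPU0 into a filename tag (00cpu0)."""
    return location.replace("/", "").lower()


def tac_commands(router, host_lcs):
    """Build the show commands listed above for one router."""
    disk = f"harddisk:/{router}"
    cmds = [f"show tech routing bfd file {disk}_sh_tech_routing_bfd"]
    for lc in host_lcs:
        tag = hostlc_tag(lc)
        cmds += [
            f"show tech bfdhwoff location {lc} file "
            f"{disk}_sh_tech_bfdhwoff_loca_{tag}",
            f"show dpa trace location {lc} | file "
            f"{disk}_show_dpa_trace_location_{tag}.txt",
            f"show controller fia trace all location {lc} | file "
            f"{disk}_show_controller_fia_trace_all_location_{tag}.txt",
        ]
    cmds += [
        f"show bfd session detail | file {disk}_show_bfd_sess_det.txt",
        f"show bfd summary | file {disk}_show_bfd_sum.txt",
        f"show log | file {disk}_show_log.txt",
    ]
    return cmds


for command in tac_commands("potat", ["0/6/CPU0"]):
    print(command)
```

With more than one host LC, pass all of them in the list and the per-LC commands are repeated for each.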
