ASR9000/XR: Load-balancing architecture and characteristics


Posted on 08-28-2012 07:08 AM; edited on 11-22-2022 08:23 AM by nkarpysh


Introduction

This document discusses how the ASR9000 decides which of multiple paths to take when it can load-balance. It covers IPv4 and IPv6, and both ECMP and Bundle/LAG/EtherChannel scenarios, in both L2 and L3 environments.

Core Issue

The load-balancing architecture of the ASR9000 can be somewhat complex because of the platform's 2-stage forwarding. The scenarios in this article explain how a load-balancing decision is made, so you can architect your network around it.

This document assumes you are running at least XR 4.1 (XR 3.9.x is not discussed). XR 4.2 enhancements are called out where applicable.

Load-balancing Architecture and Characteristics

Characteristics

ASR9000 has the following load-balancing characteristics:

- ECMP:
  - Non-recursive or IGP paths: 32-way
  - Recursive or BGP paths:
    - 8-way for Trident
    - 32-way for Typhoon
    - 64-way for Typhoon in XR 5.1+
    - 64-way for Tomahawk in XR 5.3+ (Tomahawk is only supported from XR 5.3.0 onwards)
- Bundle:
  - 64 members per bundle

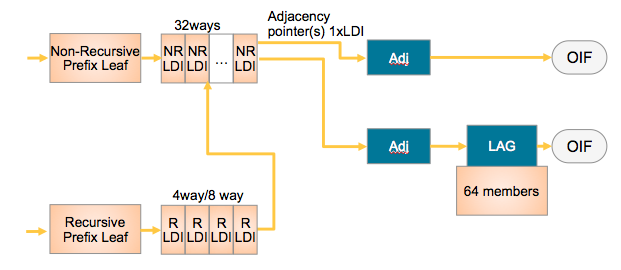

The way they tie together is shown in this simplified L3 forwarding model:

- NRLDI = Non Recursive Load Distribution Index
- RLDI = Recursive Load Distribution Index
- ADJ = Adjacency (forwarding information)
- LAG = Link Aggregation, e.g. EtherChannel or Bundle-Ether interface
- OIF = Outgoing InterFace, e.g. a physical interface like G0/0/0/0 or Te0/1/0/3

What this picture shows is that a recursive BGP route can have 8 different paths, each pointing to up to 32 potential IGP paths to reach that BGP next hop. EACH of those 32 IGP paths can in turn be a bundle of up to 64 members!
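Multiplying out this fan-out gives a quick worked check. Using the 8-way recursive figure from the picture, this is the theoretical number of distinct (BGP path, IGP path, bundle member) combinations the ingress hash could select between:

$$
8\ (\text{RLDI}) \times 32\ (\text{NRLDI}) \times 64\ (\text{LAG members}) = 16\,384
$$

This is an upper bound on selector combinations, not a count of physical ports.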

Architecture

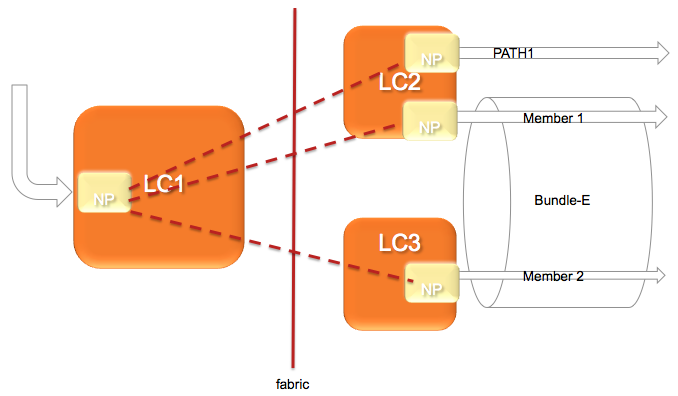

The ASR9000 load-balancing implementation revolves around the fact that the load-balancing decision is made on the INGRESS linecard.

This ensures that we ONLY send the traffic to that LC, path or member that is actually going to forward the traffic.

The following picture shows that:

In this diagram, let's assume there are 2 paths: PATH-1 via LC2, and PATH-2 via a bundle with 2 members on different linecards.

(Note that this example is a bit extraordinary, because a 2-member bundle and a single physical interface can't mathematically form equal-cost paths.)

The ingress NPU on LC1 computes the hash. If it determines that PATH-1 is going to forward the traffic, the traffic is sent to LC2 only.

If the ingress NPU determines that PATH-2 (the Bundle-Ether) is to be chosen, the LAG (link aggregation) selector points directly to the member. Traffic is then sent only to the NP on the linecard of the member that is going to forward it.

The forwarding architecture shows that the ADJ points to a bundle, which can have multiple members.

With this model, LAG table updates (members appearing or disappearing) do NOT require a FIB update at all!
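The following minimal Python sketch illustrates why member changes stay out of the FIB. It assumes a simplified object model: the `Bundle` and `Adjacency` classes, the member interface names and the modulo member selection are all hypothetical. They only stand in for the real LAG table and FIB structures.

```python
from dataclasses import dataclass, field

@dataclass
class Bundle:
    """LAG object: the member list lives here, not in the FIB."""
    name: str
    members: list = field(default_factory=list)

    def select(self, hash_value: int) -> str:
        # Modulo member selection is an assumption for illustration only.
        return self.members[hash_value % len(self.members)]

@dataclass
class Adjacency:
    """FIB adjacency that references the bundle object."""
    next_hop: str
    bundle: Bundle

# Hypothetical FIB: prefix -> adjacency, both prefixes resolving via one bundle.
be200 = Bundle("Bundle-Ether200", ["Te0/1/0/0", "Te0/2/0/0"])
adj = Adjacency("200.200.1.2", be200)
fib = {"49.1.1.0/24": adj, "49.1.2.0/24": adj}
fib_snapshot = {prefix: id(entry) for prefix, entry in fib.items()}

# A member joins the bundle: only the LAG table changes.
be200.members.append("Te0/3/0/0")

# The FIB entries are untouched, yet selection already sees the new member.
print(all(id(fib[p]) == fib_snapshot[p] for p in fib))  # True
print(fib["49.1.1.0/24"].bundle.select(2))               # Te0/3/0/0
```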

What is a HASH and how is it computed

In order to determine which path (ECMP) or member (LAG) to choose, the system computes a hash. Certain bits out of this hash are used to identify member or path to be taken.

- Pre 4.0.x Trident used a folded XOR methodology resulting in an 8 bit hash from which bits were selected

- Post 4.0.x Trident uses a checksum based calculation resulting in a 16 bit hash value

- Post 4.2.x Trident uses a checksum based calculation resulting in a 32 bit hash value

- Typhoon 4.2.0 uses a CRC based calculation of the L3/L4 info and computes a 32 bit hash

8-way recursive means that we are using 3 bits out of that hash result

32-way non recursive means that we are using 5 bits

64 members means that we are looking at 6 bits out of that hash result

Which bits are used for the load-balancing decision is system defined, per load-balancing type (recursive, non-recursive or bundle member selection).
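The bit arithmetic above can be sketched in Python. This is a hypothetical illustration. The document only states how many bits each stage uses, so the bit offsets chosen here (0, 3 and 8) and the helper names are made up. Bucket counts are assumed to be powers of two.

```python
def bits_needed(ways: int) -> int:
    """Hash bits needed to index `ways` buckets (assumes a power of two)."""
    return (ways - 1).bit_length()

def pick_bucket(hash_value: int, ways: int, offset: int) -> int:
    """Take bits_needed(ways) bits of the hash, starting at bit `offset`."""
    width = bits_needed(ways)
    return (hash_value >> offset) & ((1 << width) - 1)

h = 0xDEADBEEF  # an example 32-bit hash result

# Hypothetical bit offsets per stage; the real ones are system defined.
rldi = pick_bucket(h, ways=8, offset=0)     # recursive (BGP) path, 3 bits
nrldi = pick_bucket(h, ways=32, offset=3)   # non-recursive (IGP) path, 5 bits
member = pick_bucket(h, ways=64, offset=8)  # bundle member, 6 bits

print(bits_needed(8), bits_needed(32), bits_needed(64))  # 3 5 6
print(rldi, nrldi, member)                               # 7 29 62
```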

Fields used in ECMP HASH

What is fed into the HASH depends on the scenario:

| Incoming Traffic Type | Load-balancing Parameters |
|---|---|
| IPv4 | Source IP, Destination IP, Source port (TCP/UDP only), Destination port (TCP/UDP only), Router ID |
| IPv6 | Source IP, Destination IP, Source port (TCP/UDP only), Destination port (TCP/UDP only), Router ID |
| MPLS - IP Payload, with < 4 labels | Source IP, Destination IP, Source port (TCP/UDP only), Destination port (TCP/UDP only), Router ID |
| From 6.2.3 onwards, for Tomahawk and later ASR9K LCs: MPLS - IP Payload, with < 8 labels | Source IP, Destination IP, Source port (TCP/UDP only), Destination port (TCP/UDP only), Router ID. Typhoon LCs retain the original behaviour of supporting IP hashing for only up to 4 labels. |
| MPLS - IP Payload, with > 9 labels | If 9 or more labels are present, MPLS hashing is performed on labels 3, 4, and 5 (labels 7, 8, and 9 from 7.1.2 onwards). Typhoon LCs retain the original behaviour of supporting IP hashing for only up to 4 labels. |
| MPLS - IP Payload, with > 4 labels | 4th MPLS label (or innermost) and Router ID |
| MPLS - Non-IP Payload* | Innermost MPLS label and Router ID |

* Non-IP payload includes Ethernet interworking, generally seen on Ethernet attachment circuits running VPLS/VPWS.

These have the following construction:

EtherHeader-MPLS(next hop label)-MPLS(pseudowire label)-EtherHeader-InnerIP

In those scenarios the system uses the MPLS based case with non-IP payload.

IP payload in MPLS is the common case for IP based MPLS switching on LSRs, where an IP header is found directly after the inner label.
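The table and notes above can be summarised as a small decision function. This is a simplified Python sketch, not the NP microcode. The `Packet` type, the `hash_inputs` helper and its `ip_label_limit` parameter are hypothetical. The Tomahawk 8-label and 9-or-more-label rows are not modelled. The sketch treats up to 4 labels as IP-hashed, matching the "<= 4 labels" wording in the exact-route section, because the table does not spell out the exactly-4-label case.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Packet:
    kind: str                       # "ipv4", "ipv6" or "mpls"
    labels: tuple = ()              # MPLS label stack, top label first
    ip_payload: bool = True         # IP header directly after the bottom label?
    src_ip: Optional[str] = None
    dst_ip: Optional[str] = None
    src_port: Optional[int] = None  # TCP/UDP only
    dst_port: Optional[int] = None  # TCP/UDP only

def hash_inputs(pkt: Packet, router_id: str, ip_label_limit: int = 4) -> tuple:
    """Fields fed into the ECMP hash, per the table above (simplified)."""
    l3_l4 = (pkt.src_ip, pkt.dst_ip, pkt.src_port, pkt.dst_port, router_id)
    if pkt.kind in ("ipv4", "ipv6"):
        return l3_l4
    if pkt.ip_payload and len(pkt.labels) <= ip_label_limit:
        return l3_l4                       # IP behind a short label stack
    if pkt.ip_payload:
        return (pkt.labels[3], router_id)  # more than 4 labels: 4th label
    return (pkt.labels[-1], router_id)     # non-IP payload: innermost label

pw_frame = Packet("mpls", labels=(24001, 652), ip_payload=False)
print(hash_inputs(pw_frame, "8.8.8.8"))  # (652, '8.8.8.8')
```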

Router ID

The router ID is a value taken from an interface address in the system, in order to provide some per-node variation.

This value is determined at boot time only. You can check which value the system uses with:

sh arm router-ids

Example:

RP/0/RSP0/CPU0:A9K-BNG#show arm router-id

Tue Aug 28 11:51:50.291 EDT

Router-ID Interface

8.8.8.8 Loopback0

RP/0/RSP0/CPU0:A9K-BNG#

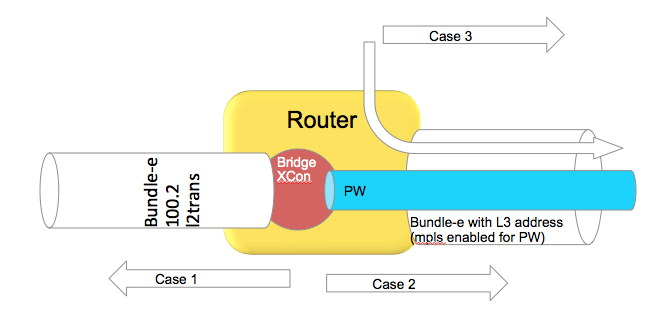

Bundle in L2 vs L3 scenarios

This section is specific to bundles. A bundle can either be an attachment circuit (AC), or it can be used to route over.

Depending on how the bundle ether is used, different hash field calculations may apply.

When the bundle ether interface has an IP address configured, then we follow the ECMP load-balancing scheme provided above.

When the bundle ether is used as an attachment circuit, that means it has the "l2transport" keyword associated with it and is used in an xconnect or bridge-domain configuration, by default L2 based balancing is used. That is Source and Destination MAC with Router ID.

If you have 2 routers on either end of the ACs, the MACs barely vary, if at all. In that case you may want to revert to L3 based balancing, which can be configured in the l2vpn configuration:

RP/0/RSP0/CPU0:A9K-BNG#configure

RP/0/RSP0/CPU0:A9K-BNG(config)#l2vpn

RP/0/RSP0/CPU0:A9K-BNG(config-l2vpn)#load-balancing flow ?

src-dst-ip Use source and destination IP addresses for hashing

src-dst-mac Use source and destination MAC addresses for hashing
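The following Python sketch shows why fixed MACs hurt. It compares MAC-based and IP-based member selection for traffic carried between just two routers. CRC32 is an assumed stand-in for the NP hash, the router ID is left out, and the MAC and IP values are hypothetical.

```python
import zlib

def bundle_member(fields: tuple, members: int) -> int:
    """Map hash input fields to a member index (CRC32 stands in for the NP hash)."""
    return zlib.crc32(repr(fields).encode()) % members

# Hypothetical MACs of the two routers at either end of the attachment circuit.
ROUTER_A_MAC = "00:11:22:33:44:55"
ROUTER_B_MAC = "00:aa:bb:cc:dd:ee"

# 1000 different IP flows, all carried between the same two routers.
flows = [(f"10.0.{i // 256}.{i % 256}", "192.0.2.1") for i in range(1000)]

# src-dst-mac: every frame carries the same MAC pair, so every flow hashes alike.
by_mac = {bundle_member((ROUTER_A_MAC, ROUTER_B_MAC), 4) for _ in flows}
# src-dst-ip: the IP addresses vary per flow, so the flows spread out.
by_ip = {bundle_member(flow, 4) for flow in flows}

print(len(by_mac), len(by_ip))
```

With src-dst-mac, `by_mac` always contains a single member index. With src-dst-ip, the same flows spread across several members of the bundle.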

Use case scenarios

Case 1 Bundle Ether Attachment circuit (downstream)

In this case the bundle ether has a configuration similar to:

interface Bundle-Ether100.2 l2transport
 encapsulation dot1q 2
 rewrite ingress tag pop 1 symmetric

And the associated L2VPN configuration, such as:

l2vpn
 bridge group BG
  bridge-domain BD
   interface Bundle-Ether100.2

In the downstream direction, we load-balance on the L2 information by default, unless load-balancing flow src-dst-ip is configured.

Case 2 Pseudowire over Bundle Ether interface (upstream)

The attachment circuit in this case doesn't really matter, whether it is bundle or single interface.

The associated configuration for this in the L2VPN is:

l2vpn
 bridge group BG
  bridge-domain BD
   interface Bundle-Ether100.2
   vfi MY_VFI
    neighbor 1.1.1.1 pw-id 2

interface Bundle-Ether200
 ipv4 address 192.168.1.1 255.255.255.0

router static
 address-family ipv4 unicast
  1.1.1.1/32 192.168.1.2

In this case neighbor 1.1.1.1 is found via routing, which happens to egress out of our Bundle-Ether interface.

This is MPLS encapped (PW) and therefore we will use MPLS based load-balancing.

Case 3 Routing through a Bundle Ether interface

In this scenario we are just routing out the bundle Ethernet interface because our ADJ tells us so (as defined by the routing).

Config:

interface Bundle-Ether200
 ipv4 address 200.200.1.1 255.255.255.0

show route (OSPF inter area route)

O IA 49.1.1.0/24 [110/2] via 200.200.1.2, 2w4d, Bundle-Ether200

Even if this bundle-ether is MPLS enabled, and we impose a label to reach the next hop or do label swapping, the Ethernet header followed by the MPLS header has IP directly behind it in this case.

We are therefore able to do L3 load-balancing, as per the chart above.

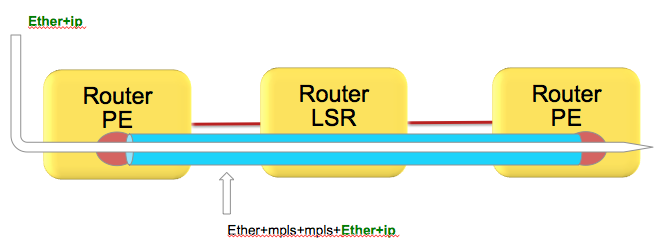

(Layer 3) Load-balancing in MPLS scenarios

As highlighted throughout this technote, load-balancing in MPLS scenarios, whether based on the MPLS label or on IP, depends on the inner encapsulation.

The diagram below depicts an Ethernet frame with IP going into a pseudowire, switched through the LSR (P router) down to the remote PE.

In this case the pseudowire encapsulates the complete frame (with its Ethernet header) into MPLS. An outer Ethernet header is added for the next hop, from the left PE router to the LSR in the middle.

Although the number of labels is LESS than 4 AND there is IP available, the system can't skip beyond the inner Ethernet header to read the IP. It therefore falls back to MPLS label based load-balancing.

How the system differentiates between an IP header after the innermost label and a non-IP payload is explained here:

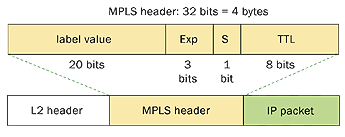

Just to recap, the MPLS header looks like this:

The important part of this picture is that it shows MPLS-IP. In the VPLS/VPWS case, this "GREEN" field is likely to start with an Ethernet header.

Hardware forwarding devices are limited in the number of packets per second (PPS) they can handle, and this relates directly to the number of instructions needed to process a packet. We therefore want to process a packet in the LEAST number of instructions possible.

In line with that thought process, we check the first nibble following the MPLS header. If it is a 4 (IPv4) or a 6 (IPv6), we ASSUME that this is an IP header, interpret the data that follows as an IP header, and derive the L3 source and destination from it.

This works great in the majority of scenarios because, let's be honest, for the longest time MAC addresses started with 00-0...

In other words, they did not start with a 4 or 6, and we'd default to MPLS based balancing, which is what we wanted for VPLS/VPWS.

However, these days we see MAC addresses that no longer start with zeros, and in fact 4s or 6s are seen!

This fools the system into believing that the inner packet is IP, while in reality it is an Ethernet header.

There is no good way to classify an IP header with a limited number of instruction cycles without affecting performance.

In an ideal world you'd use an MD5 hash and every possible check to make the perfect decision.

Reality is different: nobody wants to pay what it would cost to design ASICs that run such a comprehensive set of checks without affecting the PPS rate.

Bottom line is that if your DMAC starts with a 4 or 6 you have a situation.

Solution

Use the MPLS control word.

The control word is negotiated end to end. It inserts a special 4-byte word whose first nibble is 0, specifically for this purpose.

The system will now read a 0 instead of a 4 or 6 and default to MPLS based balancing.
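The first-nibble heuristic and the effect of the control word can be reproduced in a few lines of Python. The byte strings below are hypothetical examples. The control word is modelled as 4 zero bytes for simplicity, because only its first nibble matters for this check.

```python
def classify_after_bottom_label(payload: bytes) -> str:
    """First-nibble check on the bytes that follow the bottom MPLS label."""
    first_nibble = payload[0] >> 4
    if first_nibble == 4:
        return "ipv4"
    if first_nibble == 6:
        return "ipv6"
    return "non-ip"  # fall back to MPLS label based hashing

ipv4_header = bytes([0x45]) + bytes(19)  # version 4, IHL 5
dmac_00 = bytes.fromhex("001122334455")  # "classic" DMAC starting with 0
dmac_4x = bytes.fromhex("4c5e0c112233")  # hypothetical DMAC starting with 4
control_word = bytes(4)                  # first nibble 0; other bits zeroed here

print(classify_after_bottom_label(ipv4_header))             # ipv4
print(classify_after_bottom_label(dmac_00))                 # non-ip
print(classify_after_bottom_label(dmac_4x))                 # ipv4 (wrong: it is Ethernet)
print(classify_after_bottom_label(control_word + dmac_4x))  # non-ip
```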

Configuration

To enable the control word, use the following template:

l2vpn
 pw-class CW
  encapsulation mpls
   control-word
  !
 !
 xconnect group TEST
  p2p TEST_PW
   interface GigabitEthernet0/0/0/0
   neighbor 1.1.1.1 pw-id 100
    pw-class CW
   !
  !
 !
!

Alternative solutions: Fat Pseudowire

You might have little control over the inner label (the PW label), and you probably want to ensure some level of load-balancing. This matters especially on P routers, which have no knowledge of the offered service or of the MPLS packets they transport. For this case another solution is available: the FAT Pseudowire.

A FAT PW inserts a "flow label" whose value is computed like a hash, to provide hop-by-hop variation and more granular load-balancing. Care is taken that the values vary (based on the l2vpn command, see below), that no reserved label values are generated, and that the values don't collide with allocated label values.
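The following Python sketch gives a rough idea of what "computed like a hash" could mean. It derives a 20-bit flow label from the source and destination IP addresses and avoids the reserved label range 0-15. CRC32, the folding step and the `flow_label` helper are assumptions. The sketch does not model the check against allocated label values.

```python
import ipaddress
import zlib

LABEL_MASK = (1 << 20) - 1   # MPLS labels are 20 bits wide
RESERVED_LABELS = range(16)  # labels 0-15 are reserved

def flow_label(src_ip: str, dst_ip: str) -> int:
    """Hypothetical flow label for 'load-balancing flow src-dst-ip'."""
    key = ipaddress.ip_address(src_ip).packed + ipaddress.ip_address(dst_ip).packed
    h = zlib.crc32(key)
    label = (h ^ (h >> 20)) & LABEL_MASK  # fold the 32-bit hash into 20 bits
    if label in RESERVED_LABELS:
        label += 16                       # move out of the reserved range
    return label

print(flow_label("10.0.0.1", "10.0.0.2") == flow_label("10.0.0.1", "10.0.0.2"))  # True
```

Because the label depends only on the flow's addresses, every packet of a flow carries the same label, which avoids reordering. Different flows get different labels that P routers can hash on.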

Fat PW is supported starting XR 4.2.1 on both Trident and Typhoon based linecards. From 6.5.1 onward we support FAT label over PWHE.

Packet transformation with a Flow Label

Configuration of FAT Pseudowire

The following is a configuration example:

l2vpn
 load-balancing flow src-dst-ip
 pw-class test
  encapsulation mpls
   load-balancing
    flow-label both static
   !
  !

You can also affect the way that the flow label is computed:

Under L2VPN configuration, use the “load-balancing flow” configuration command to determine how the flow label is generated:

l2vpn
 load-balancing flow src-dst-mac

This is the default configuration, and will cause the NP to build the flow label from the source and destination MAC addresses in each frame.

l2vpn
 load-balancing flow src-dst-ip

This is the recommended configuration, and will cause the NP to build the flow label from the source and destination IP addresses in each frame.

FAT Pseudowire TLV

The Flow-Aware Transport (FAT) PW signalled sub-TLV ID currently carries the value 0x11, as originally specified in draft-ietf-pwe3-fat-pw. This value has recently been corrected in the draft to 0x17, the flow label sub-TLV identifier assigned by IANA.

This matters when interoperating between XR 4.3.1 and earlier and XR 4.3.2 and later. All XR releases 4.3.1 and prior that support FAT PW default to value 0x11. All XR releases 4.3.2 and later default to value 0x17.

Solution:

Use the following config on XR 4.3.2 and later to configure the sub-TLV ID:

pw-class <pw-name>
 encapsulation mpls
  load-balancing
   flow-label both
   flow-label code 17

NOTE: I got a lot of questions about the apparent contradiction between the 0x11 to 0x17 change (as driven by IANA) and the config requirement for the number 17 in this example.

The crux is that the flow label code is configured in DECIMAL, while the IANA/draft numbers mentioned are HEX.

So 0x11, the old value, is 17 decimal, which indeed looks very similar to 0x17, the new IANA-assigned number. Very annoying, thank IANA.

(Or we could have made the knob hex, I guess.)
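The following quick Python check confirms the hex/decimal arithmetic in the note above. The constant names are hypothetical.

```python
OLD_DRAFT_SUBTLV = 0x11  # value from the original draft-ietf-pwe3-fat-pw
IANA_SUBTLV = 0x17       # IANA-assigned flow label sub-TLV

print(OLD_DRAFT_SUBTLV, IANA_SUBTLV)  # 17 23

# "flow-label code 17" is read as decimal, so it selects the old draft value:
assert int("17", 10) == OLD_DRAFT_SUBTLV
# The same digits read as hex would be the new IANA value:
assert int("17", 16) == IANA_SUBTLV
```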

Load-balancing and priority configurations

For VPWS or VPLS, on the ingress PE you can change the load-balancing upstream towards the MPLS core in three different ways:

1. At the L2VPN sub-configuration mode with “load-balancing flow” command with the following options:

RP/0/RSP1/CPU0:ASR9000(config-l2vpn)# load-balancing flow ?

src-dst-ip

src-dst-mac [default]

2. At the pw-class sub-configuration mode with “load-balancing” command with the following options:

RP/0/RSP1/CPU0:ASR9000(config-l2vpn-pwc-mpls-load-bal)#?

flow-label [see FAT Pseudowire section]

pw-label [per-VC load balance]

3. At the Bundle interface sub-configuration mode with “bundle load-balancing hash” command with the following options:

RP/0/RSP1/CPU0:ASR9000(config-if)#bundle load-balancing hash ? [For default, see previous sections]

dst-ip

src-ip

It’s important to not only understand these commands but also that: 1 is weaker than 2 which is weaker than 3.

Example:

l2vpn
 load-balancing flow src-dst-ip
 pw-class FAT
  encapsulation mpls
   control-word
   transport-mode ethernet
   load-balancing
    pw-label
    flow-label both static

interface Bundle-Ether1
 (...)
 bundle load-balancing hash dst-ip

Because of the priorities, on the egress side of the ingress PE (to the MPLS Core), we will do per-dst-ip load-balance (3).

If the bundle-specific configuration is removed, we will do per-VC load-balance (2).

If the pw-class load-balance configuration is removed, we will do per-src-dst-ip load-balance (1).

with thanks to Bruno Oliveira for this priority section
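The precedence rule (1 is weaker than 2, which is weaker than 3) can be written as a small resolver. This Python sketch only models the behaviour described above. The function name and string values are hypothetical, and it ignores any interaction not covered in this section.

```python
from typing import Optional

def effective_l2vpn_lb(l2vpn_flow: str = "src-dst-mac",
                       pw_class_lb: Optional[str] = None,
                       bundle_hash: Optional[str] = None) -> str:
    """Resolve which knob wins: bundle hash (3) > pw-class (2) > l2vpn flow (1)."""
    if bundle_hash is not None:
        return f"bundle hash {bundle_hash}"
    if pw_class_lb is not None:
        return f"pw-class {pw_class_lb}"
    return f"l2vpn flow {l2vpn_flow}"

# The example above, then removing the knobs one at a time:
print(effective_l2vpn_lb("src-dst-ip", "pw-label", "dst-ip"))  # bundle hash dst-ip
print(effective_l2vpn_lb("src-dst-ip", "pw-label"))            # pw-class pw-label
print(effective_l2vpn_lb("src-dst-ip"))                        # l2vpn flow src-dst-ip
```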

P2MP MPLS TE Tunnels

Only one bundle member will be selected to forward traffic on the P2MP MPLS TE mid-point node.

Possible alternatives that would achieve better load balancing are: a) increase the number of tunnels or b) switch to mLDP.

IPv6

In releases before 4.2.0, the IPv6 hash calculation uses only the last 64 bits of each address. These bits are folded and fed into the hash, together with the regular router ID and L4 info.

In 4.2.0 this was enhanced so that the full IPv6 address is taken into consideration, together with the L4 info and router ID.
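The difference between the two behaviours can be illustrated with a Python sketch. XOR folding down to 32 bits is an assumption, since the text only says the bits are folded. The addresses are documentation examples that differ only in their upper 64 bits.

```python
import ipaddress

def fold_to_32(value: int, width: int) -> int:
    """XOR-fold an integer of `width` bits down to 32 bits."""
    folded = 0
    for shift in range(0, width, 32):
        folded ^= (value >> shift) & 0xFFFFFFFF
    return folded

def v6_hash_input(addr: str, full_address: bool) -> int:
    a = int(ipaddress.IPv6Address(addr))
    if full_address:                            # 4.2.0 and later: all 128 bits
        return fold_to_32(a, 128)
    return fold_to_32(a & ((1 << 64) - 1), 64)  # before 4.2.0: low 64 bits only

x, y = "2001:db8:0:1::10", "2001:db8:0:2::10"  # differ only in the upper 64 bits
print(v6_hash_input(x, False) == v6_hash_input(y, False))  # True
print(v6_hash_input(x, True) == v6_hash_input(y, True))    # False
```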

Determining load-balancing

You can determine the load-balancing on the router by using the following commands:

L3/ECMP

For IP :

RP/0/RSP0/CPU0:A9K-BNG#show cef exact-route 1.1.1.1 2.2.2.2 protocol udp ?

source-port Set source port

You have the ability to only specify L3 info, or include L4 info by protocol with source and destination ports.

It is important to understand that the 9k does FLOW based hashing, that is, all packets belonging to the same flow will take the same path.

If one flow is more active, or requires more bandwidth than another flow, path utilization may not be perfectly equal.

This problem can't be alleviated UNLESS there is enough variation (randomness) in the L3/L4 fields. It is generally seen in lab tests because of the limited number of flows.
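A small Python simulation illustrates flow-based hashing. CRC32 over the 5-tuple is an assumed stand-in for the real hash, and the flows are randomly generated. The simulation counts flows per path, not bytes, so a single elephant flow would still saturate whichever path it lands on.

```python
import random
import zlib
from collections import Counter

def pick_path(flow: tuple, paths: int) -> int:
    """Flow-based hashing: the same 5-tuple always maps to the same path."""
    return zlib.crc32(repr(flow).encode()) % paths

def flows_per_path(flows: list, paths: int) -> Counter:
    return Counter(pick_path(f, paths) for f in flows)

rng = random.Random(1)  # fixed seed so the run is repeatable

def random_udp_flow() -> tuple:
    # (src IP, dst IP, protocol, src port, dst port); values are made up
    src = f"10.{rng.randrange(256)}.{rng.randrange(256)}.{rng.randrange(256)}"
    return (src, "192.0.2.1", 17, rng.randrange(1024, 65536), 53)

few = [random_udp_flow() for _ in range(4)]        # a typical small lab test
many = [random_udp_flow() for _ in range(10_000)]  # closer to production

print(flows_per_path(few, 4))   # often lopsided; some paths may get no flow
print(flows_per_path(many, 4))  # roughly 2,500 flows per path
```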

For MPLS based hashing :

RP/0/RSP0/CPU0:A9K-BNG#sh mpls forwarding exact-route label 1234 bottom-label 16000 ... location 0/1/cpu0

This command gives the output interface chosen as a result of hashing with MPLS label 16000. The bottom-label (in this case '16000') is either the VC label (for PW L2 traffic) or the bottom label of the MPLS stack (for MPLS-encapsulated L3 traffic with more than 4 labels). Note that for regular MPLS packets (with <= 4 labels) encapsulating an L3 packet, only IP based hashing is performed on the underlying IP packet.

Also note that the MPLS hash algorithm differs between Trident and Typhoon. The more varied the labels, the better the distribution. However, Trident has a known MPLS hash behavior on bundle interfaces: if a bundle interface has an even number of member links, the MPLS hash causes only half of these links to be utilized. To work around this, you may have to configure the "cef load-balancing algorithm adjust 3" command on the router, or use an odd number of member links in the bundle interface. This limitation applies only to Trident line cards, not to Typhoon.

Bundle member selection

RP/0/RSP0/CPU0:A9K-BNG#bundle-hash bundle-e 100 loc 0/0/cPU0

Calculate Bundle-Hash for L2 or L3 or sub-int based: 2/3/4 [3]: 3

Enter traffic type (1.IPv4-inbound, 2.MPLS-inbound, 3:IPv6-inbound): [1]: 1

Single SA/DA pair or range: S/R [S]:

Enter source IPv4 address [255.255.255.255]:

Enter destination IPv4 address [255.255.255.255]:

Compute destination address set for all members? [y/n]: y

Enter subnet prefix for destination address set: [32]:

Enter bundle IPv4 address [255.255.255.255]:

Enter L4 protocol ID. (Enter 0 to skip L4 data) [0]:

Invalid protocol. L4 data skipped.

Link hashed [hash_val:1] to is GigabitEthernet0/0/0/19 LON 1 ifh 0x4000580

The hash type, L2 or L3, depends on how the bundle Ethernet interface is used. It is L2 when the bundle is an attachment circuit in a bridge-domain or VPWS cross-connect, and L3 when the bundle ether is used to route over (e.g. has an IP address configured).

Polarization

Polarization pertains mostly to ECMP scenarios and is the effect of routers in a chain making the same load-balancing decision.

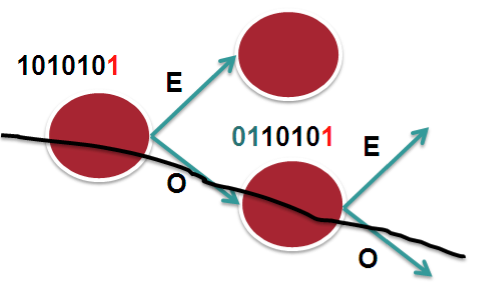

The following picture tries to explain that.

In this scenario we assume 2 buckets, that is, 1 bit of a 7-bit hash result. Let's say that we only look at bit 0, so it becomes an "EVEN" or "ODD" type of decision. The routers in the chain have access to the same L3 and L4 fields; the only varying factor between them is the router ID.

RIDs are often similar or close, and in that case the hash result may not vary enough. Subsequent routers then compute the same hash and therefore polarize to the "Southern" (in the example above) or "Northern" path.

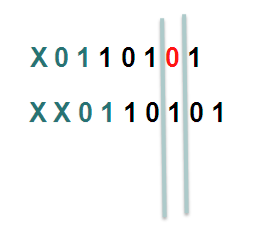

In XR 4.2.1 via a SMU or in XR 4.2.3 in the baseline code, we provide a knob that allows for shifting the hash result. By choosing a different "shift" value per node, we can make the system look at a different bit (for this example), or bits.

In this example the first line shifts the hash by 1, the second one shifts it by 2.

Considering that we have more buckets in the real implementation and more bits that we look at, the member or path selection can alter significantly based on the same hash but with the shifting, which is what we ultimately want.
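The polarization effect and the shift fix can be simulated with a two-router chain in Python. This is a simplified model under stated assumptions. The router ID is folded into the hash with XOR, which is not how the NP mixes it in. The two router IDs are hypothetical values that share bit 0. Each router makes a 2-way decision on a single hash bit.

```python
import zlib

def node_hash(flow: str, router_id: int) -> int:
    # Simplification: XOR the router ID into a CRC32 of the flow fields.
    return zlib.crc32(flow.encode()) ^ router_id

def choose_path(flow: str, router_id: int, shift: int = 0) -> int:
    """2-way ECMP: use a single hash bit, optionally shifted (the adjust knob)."""
    return (node_hash(flow, router_id) >> shift) & 1

# Hypothetical "close" router IDs: 10.0.0.1 and 10.0.0.3 share bit 0.
RID_R1, RID_R2 = 0x0A000001, 0x0A000003
flows = [f"flow-{i}" for i in range(1000)]

# R1 forwards the flows that hashed to path 0 towards R2.
to_r2 = [f for f in flows if choose_path(f, RID_R1) == 0]

without_shift = {choose_path(f, RID_R2) for f in to_r2}
with_shift = {choose_path(f, RID_R2, shift=1) for f in to_r2}
print(without_shift)  # {0}: R2 repeats R1's decision, one path stays idle
print(with_shift)     # {0, 1}: a different bit spreads the flows again
```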

HASH result Shifting

- Trident allows for a shift of maximum of 4 (performance reasons)

- Typhoon allows for a shift of maximum of 32.

Command

cef load-balancing algorithm adjust <value>

The command accepts values larger than 4 on Trident. If you configure a value larger than 4 on Trident, a modulo is effectively applied, so a shift of 1 is the same as a shift of 5.

Fragmentation and Load-balancing

When the system detects fragmented packets, it no longer uses L4 information. The initial fragment carries the L4 header, but subsequent fragments have the L3 header only! If L4 info were used, the two would produce different hash results, could take different paths, and could arrive out of order.

Regardless of release, regardless of hardware (ASR9K or CRS), when fragmentation is detected we only use L3 information for the hash computation.
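The following is a minimal Python sketch of this rule for IPv4. It assumes a simplified packet model: the `IPv4Packet` type and `lb_hash` helper are hypothetical, CRC32 stands in for the real hash, and the router ID is omitted.

```python
import zlib
from dataclasses import dataclass
from typing import Optional

@dataclass
class IPv4Packet:
    src: str
    dst: str
    more_fragments: bool = False    # MF flag
    frag_offset: int = 0            # in 8-byte units
    src_port: Optional[int] = None  # L4 header is only in the first fragment
    dst_port: Optional[int] = None

def is_fragment(p: IPv4Packet) -> bool:
    # First fragment: MF set and offset 0. Later fragments: offset > 0.
    return p.more_fragments or p.frag_offset > 0

def lb_hash(p: IPv4Packet) -> int:
    fields: tuple = (p.src, p.dst)
    if not is_fragment(p):
        fields += (p.src_port, p.dst_port)  # use L4 only for unfragmented packets
    return zlib.crc32(repr(fields).encode())

first = IPv4Packet("10.0.0.1", "10.0.0.2", more_fragments=True, src_port=5000, dst_port=80)
later = IPv4Packet("10.0.0.1", "10.0.0.2", frag_offset=185)
print(lb_hash(first) == lb_hash(later))  # True: all fragments follow one path
```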

Hashing updates

- Starting with release 6.4.2, when a Layer 2 interface (EFP) receives MPLS-encapsulated IP packets and hashing is configured for src-dst-ip, the IP addresses of the ingress packet are used to create the hash. Before 6.4.2 the hash was based on MAC addresses.

- Starting with XR 6.5, Layer 2 interfaces receiving GTP-encapsulated packets automatically use the TEID to generate the hash when src-dst-ip is configured.

Related Information

Xander Thuijs, CCIE #6775

Sr Tech Lead ASR9000


Hi Aleks,

these tunnels are used for internet traffic only (IGW to PE over four 10G links). I will try to disable the first tunnel, the one carrying double the traffic.

The weird part is that the other tunnels to another next-hop are working fine.

Only this group of four tunnels (Te2021 to 2024) is not load balancing properly.

On the second IGW it's all OK!

Maybe I have to do a shut/no shut.


Yes, I will try to change the value if shut/no shut does not help. What is the default value?

All other tunnels are working fine.

I have this Cisco Live presentation and that's where I have found out that I have to use next-hop and interface for proper LB when we are using static routes.

Thanks for that presentation Xander!


Yeah, I will do that as soon as possible. The static route is for the BGP next-hop to the ISP for outgoing traffic. I have used this only for an example.

It's weird because all other tunnel groups are working fine. Even on second IGW LB is working great to next-hop 10.100.96.202.


Is it possible to manipulate CEF for an MPLS prefix that sees 2 equal paths, and determine the exact outgoing interface on an ASR 9k (9010)?

say 1.1.1.1/30 in VRF abcd and next-hop is the Loopback of the originating PE but learnt via RR and CEF resolves that the outgoing interfaces is say te0/0/0/1 and te0/4/0/1.


Hi, Xander

is there support for MPLS Entropy label (RFC6790) in ASR9k?

Best regards

Michael


Hello,

I'm looking for ways to improve load balancing to deal with some elephant flows causing peak egress congestion on one interface when the other members of my LAGs are relatively low utilization. These are straightforward ipv4 10G Bundle-Ether LAGs.

I have a few questions that online documentation and the above don't seem to answer. If anyone can provide links to other documentation or know the answers it would be awesome.

1: From the above article and other online documentation it seems that only IP/MAC based hashes are supported for egress on bundle-ether LAGs instead of 5-tuple. Is my understanding correct?

2: Does anyone know if the 9Ks support per-packet load balancing. I know this has some major negatives but just exploring all options for this specific scenario.

3: I see in the show command "show bundle load-balancing" the line "Avoid rebalancing?: False". I can't find any documentation to support the idea that 9Ks re-balance traffic dynamically but this show command leads me to believe that it does. Any ideas/links that talk about this feature?

Thanks!


Hi Xander, excellent!

The concept of FAT PW is working pretty well in my customer and now we are getting more efficiency in L2VPN domain.

Thanks for your explanation!

Best regards,


Hi,

I'm trying to figure out the outgoing interface (multiple outgoing interfaces using ECMP) for a PW, but I'm a little bit confused:

RP/0/RSP0/CPU0:asr9k#sh l2vpn bridge-domain pw-id 3507030501 det

Legend: pp = Partially Programmed.

Bridge group: TEST-BG, bridge-domain: TEST-BD, id: 271, state: up, ShgId: 0, MSTi: 0

Coupled state: disabled

VINE state: Default

MAC learning: enabled

MAC withdraw: enabled

MAC withdraw for Access PW: enabled

MAC withdraw sent on: bridge port up

MAC withdraw relaying (access to access): disabled

Flooding:

Broadcast & Multicast: enabled

Unknown unicast: enabled

MAC aging time: 300 s, Type: inactivity

MAC limit: 10000, Action: none, Notification: syslog, trap

MAC limit reached: no, threshold: 75%

MAC port down flush: enabled

MAC Secure: disabled, Logging: disabled

Split Horizon Group: none

Dynamic ARP Inspection: disabled, Logging: disabled

IP Source Guard: disabled, Logging: disabled

DHCPv4 Snooping: disabled

DHCPv4 Snooping profile: none

IGMP Snooping: disabled

IGMP Snooping profile: none

MLD Snooping profile: none

Storm Control: disabled

Bridge MTU: 9200

MIB cvplsConfigIndex: 272

Filter MAC addresses:

Load Balance Hashing: src-dst-ip

P2MP PW: disabled

Create time: 20/03/2018 16:16:49 (41w2d ago)

No status change since creation

ACs: 4 (4 up), VFIs: 1, PWs: 1 (1 up), PBBs: 0 (0 up), VNIs: 0 (0 up)

List of Access PWs:

List of VFIs:

VFI TEST-VFI (up)

PW: neighbor 10.201.203.18, PW ID 3507030501, state is up ( established )

PW class TEST-PW-CLASS, XC ID 0xa0000295

Encapsulation MPLS, protocol LDP

Source address 10.201.201.242

PW type Ethernet, control word enabled, interworking none

Sequencing not set

Load Balance Hashing: src-dst-ip

Flow Label flags configured (Tx=1,Rx=1), negotiated (Tx=0,Rx=0)

PW Status TLV in use

MPLS Local Remote

------------ ------------------------------ -------------------------

Label 25410 652

Group ID 0x10f 0x0

Interface TEST-VFI unknown

MTU 9200 9200

Control word enabled enabled

PW type Ethernet Ethernet

VCCV CV type 0x2 0x2

(LSP ping verification) (LSP ping verification)

VCCV CC type 0x7 0x3

(control word) (control word)

(router alert label) (router alert label)

(TTL expiry)

------------ ------------------------------ -------------------------

Incoming Status (PW Status TLV):

Status code: 0x0 (Up) in Notification message

MIB cpwVcIndex: 2684355221

Create time: 20/03/2018 16:16:49 (41w2d ago)

Last time status changed: 20/03/2018 16:17:29 (41w2d ago)

MAC withdraw messages: sent 3, received 0

Forward-class: 0

Static MAC addresses:

Statistics:

packets: received 596507371998 (unicast 596506236047), sent 1204991775809

bytes: received 212684441078216 (unicast 212684298555321), sent 1517943198135613

MAC move: 0

Storm control drop counters:

packets: broadcast 0, multicast 0, unknown unicast 0

bytes: broadcast 0, multicast 0, unknown unicast 0

DHCPv4 Snooping: disabled

DHCPv4 Snooping profile: none

IGMP Snooping: disabled

IGMP Snooping profile: none

MLD Snooping profile: none

VFI Statistics:

drops: illegal VLAN 0, illegal length 0

List of Access VFIs:

I'm using the following commands, but it's not clear what the physical interface is:

RP/0/RSP0/CPU0:asr9k#sh mpls forwarding labels 25410 det

Local Outgoing Prefix Outgoing Next Hop Bytes

Label Label or ID Interface Switched

------ ----------- ------------------ ------------ --------------- ------------

25410 Pop PW(10.201.203.18:3507030501) \

BD=271 point2point 215072303046700

Updated: Mar 20 16:17:29.855

Path Flags: 0x8 [ ]

Label Stack (Top -> Bottom): { }

MAC/Encaps: 0/0, MTU: 0

Packets Switched: 596512568592

RP/0/RSP0/CPU0:asr9k#sh mpls forwarding exact-route label 25410 bottom-label 652

Local Outgoing Prefix Outgoing Next Hop Bytes

Label Label or ID Interface Switched

------ ----------- ------------------ ------------ --------------- ------------

25410 Pop PW(10.201.203.18:3507030501) \

BD=271 point2point N/A

Via: NULLIFHNDL, Next Hop: special

Label Stack (Top -> Bottom): { 1048611 }

NHID: 0x0, Encap-ID: N/A, Path idx: 0, Backup path idx: 0, Weight: 0

Hash idx: 0

MAC/Encaps: 0/0, MTU: 0

Am I doing something wrong? Should I use another command?


Hi, Xander

I have a problem with an L3 Bundle-Interface using src-ip based load balancing. We are testing a setup for Cisco SCE with an ASR9010 (RSP440, Typhoon linecard). It is a nearly identical setup, with the same configuration recommended in the document https://www.cisco.com/c/en/us/td/docs/routers/asr9000/software/asr9k_r4-2/interfaces/configuration/guide/hc42asr9kbook/hc42lbun.html#12448

The only difference is that we are using a single SCE device. For testing, we currently have only one user's traffic in the subscriber VRF. Yet the traffic towards the SCE is not using a single interface, even though it comes from a single subscriber/source IP. After a little digging in the documents I am a little bit confused.

When I look with the command

sho cef vrf SCE_INTERNET_SUBS_VRF summary

it says L4. I am not sure whether this affects the bundle interface load-balancing that has the src-ip command configured.

So do I have to configure cef load-balancing fields l3?

UPDATE: We have confirmed that for labelled packets, src-ip/dst-ip based load-balancing does not work. TAC Case#: SR 686188279. These algorithms are specifically designed for MGCP setups where customers are directly connected to the ASR9K (ASR9K as BNG).

@xthuijs wrote:

Introduction

This document discusses how the ASR9000 decides which path to take when multiple paths are available for load-balancing. This includes IPv4 and IPv6, and both ECMP and Bundle/LAG/EtherChannel scenarios, in both L2 and L3 environments.

Core Issue

The load-balancing architecture of the ASR9000 can be somewhat complex due to the platform's two-stage forwarding. The scenarios in this article explain how a load-balancing decision is made so you can architect your network around it.

This document assumes you are running XR 4.1 at minimum (XR 3.9.x is not discussed); where applicable, XR 4.2 enhancements are called out.

Load-balancing Architecture and Characteristics

Characteristics

ASR9000 has the following load-balancing characteristics:

- ECMP:

  - Non-recursive or IGP paths: 32-way

  - Recursive or BGP paths:

    - 8-way for Trident

    - 32-way for Typhoon

    - 64-way for Typhoon in XR 5.1+

    - 64-way for Tomahawk in XR 5.3+ (Tomahawk is only supported from XR 5.3.0 onwards)

- Bundle:

  - 64 members per bundle

The way they tie together is shown in this simplified L3 forwarding model:

NRLDI = Non Recursive Load Distribution Index

RLDI = Recursive Load Distribution Index

ADJ = Adjacency (forwarding information)

LAG = Link Aggregation, e.g. EtherChannel or Bundle-Ether interface

OIF = Outgoing InterFace, e.g. a physical interface like G0/0/0/0 or Te0/1/0/3

What this picture shows is that a recursive BGP route can have 8 different paths, each pointing to up to 32 IGP paths to that BGP next hop, and EACH of those 32 IGP paths can be a bundle of up to 64 members!

Architecture

The ASR9000 load-balancing implementation is built around the fact that the load-balancing decision is made on the INGRESS linecard.

This ensures that we ONLY send the traffic to the LC, path or member that is actually going to forward it.

The following picture shows that:

In this diagram, let's assume there are 2 paths: PATH-1 via LC2, and PATH-2 via a bundle with 2 members on different linecards.

(Note that this is a somewhat contrived example, since a 2-member bundle and a single physical interface cannot normally form equal-cost paths.)

If the ingress NPU on LC1 determines, based on the hash computation, that PATH-1 is going to forward the traffic, the traffic is sent to LC2 only.

If the ingress NPU determines that PATH-2 (the bundle-ether) is to be chosen, the LAG (link aggregation) selector points directly to the member, and traffic is sent only to the NP on the linecard of the member that is going to forward it.

In this forwarding architecture, the adjacency points to a bundle, which can have multiple members.

With this model, LAG table updates (members appearing or disappearing) do NOT require a FIB update at all!
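To make the indirection concrete, here is a minimal Python sketch, not the platform's implementation: the FIB entry holds an adjacency, the adjacency holds a reference to a bundle, and only the bundle's member list changes when a member goes down. The class names, member names and the modulo-based member selection are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Bundle:
    """LAG table entry: the member list the LAG selector chooses from."""
    name: str
    members: list = field(default_factory=list)


@dataclass
class Adjacency:
    """Adjacency (ADJ): points at the bundle, never at an individual member."""
    next_hop: str
    bundle: Bundle


def select_member(adj: Adjacency, flow_hash: int) -> str:
    # The member is resolved at forwarding time from the current LAG table.
    # Using a modulo here is an assumption of this sketch.
    members = adj.bundle.members
    return members[flow_hash % len(members)]


# FIB: prefix -> adjacency (hypothetical names and addresses).
be200 = Bundle("Bundle-Ether200", ["Te0/1/0/0", "Te0/2/0/0"])
fib = {"49.1.1.0/24": Adjacency("200.200.1.2", be200)}
adj_before = fib["49.1.1.0/24"]

# A member goes down: only the LAG table (the bundle's member list) changes.
be200.members.remove("Te0/2/0/0")

# The FIB still holds the very same adjacency object; no FIB update happened.
assert fib["49.1.1.0/24"] is adj_before
print(select_member(fib["49.1.1.0/24"], flow_hash=7))  # Te0/1/0/0
```

The design point being illustrated is that member churn is absorbed one level below the FIB, so the prefix table is never rewritten.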

What is a HASH and how is it computed

In order to determine which path (ECMP) or member (LAG) to choose, the system computes a hash. Certain bits of this hash are used to identify the member or path to be taken.

- Pre-4.0.x Trident used a folded XOR methodology, resulting in an 8-bit hash from which bits were selected

- Post-4.0.x Trident uses a checksum-based calculation resulting in a 16-bit hash value

- Post-4.2.x Trident uses a checksum-based calculation resulting in a 32-bit hash value

- Typhoon in 4.2.0 uses a CRC-based calculation of the L3/L4 info and computes a 32-bit hash

8-way recursive means that we are using 3 bits out of that hash result

32-way non recursive means that we are using 5 bits

64 members means that we are looking at 6 bits out of that hash result

Which bits are used for the load-balancing decision is system-defined, per load-balancing type (recursive, non-recursive or bundle member selection).
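As an illustration of "using N bits out of the hash", the following Python sketch computes a 32-bit CRC over the L3/L4 tuple and router ID, then extracts 3, 5 or 6 bits depending on the load-balancing type. zlib.crc32 is only a stand-in for the NP's CRC, and the bit offsets in BIT_FIELDS are hypothetical, since the text only states that the choice of bits is system-defined. Mapping a bucket onto fewer paths with a modulo is also an assumption of this sketch.

```python
import ipaddress
import zlib

# Hypothetical (offset, width) of the hash bits per load-balancing type.
BIT_FIELDS = {
    "recursive": (0, 3),      # 8-way recursive      -> 3 bits
    "non_recursive": (3, 5),  # 32-way non-recursive -> 5 bits
    "bundle": (8, 6),         # 64 bundle members    -> 6 bits
}


def flow_hash(src: str, dst: str, sport: int, dport: int, router_id: str) -> int:
    """32-bit hash over L3/L4 fields plus router ID (zlib.crc32 as a stand-in CRC)."""
    data = (
        ipaddress.ip_address(src).packed
        + ipaddress.ip_address(dst).packed
        + sport.to_bytes(2, "big")
        + dport.to_bytes(2, "big")
        + ipaddress.ip_address(router_id).packed
    )
    return zlib.crc32(data)


def bucket(h: int, lb_type: str) -> int:
    """Extract the bits used for this load-balancing type."""
    offset, width = BIT_FIELDS[lb_type]
    return (h >> offset) & ((1 << width) - 1)


def pick(h: int, lb_type: str, n_paths: int) -> int:
    # Folding the bucket onto the available paths with a modulo is an assumption.
    return bucket(h, lb_type) % n_paths


h = flow_hash("10.0.0.1", "192.0.2.10", 40000, 443, "8.8.8.8")
print(pick(h, "recursive", 8), pick(h, "non_recursive", 4), pick(h, "bundle", 64))
```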

Fields used in ECMP HASH

What is fed into the HASH depends on the scenario:

Incoming traffic type: load-balancing parameters

- IPv4: Source IP, Destination IP, Source port (TCP/UDP only), Destination port (TCP/UDP only), Router ID

- IPv6: Source IP, Destination IP, Source port (TCP/UDP only), Destination port (TCP/UDP only), Router ID

- MPLS, IP payload with < 4 labels: Source IP, Destination IP, Source port (TCP/UDP only), Destination port (TCP/UDP only), Router ID

- MPLS, IP payload with > 4 labels: 4th MPLS label (or innermost) and Router ID

- MPLS, non-IP payload*: Innermost MPLS label and Router ID

* Non-IP payload includes Ethernet interworking, generally seen on Ethernet attachment circuits running VPLS/VPWS.

These have a construction of

EtherHeader-Mpls(next hop label)-Mpls(pseudowire label)-etherheader-InnerIP

In those scenarios the system uses the MPLS-based case with non-IP payload.

IP payload in MPLS is the common case for IP-based MPLS switching on LSRs, where an IP header directly follows the innermost label.
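The table above can be read as a small decision function. The Python sketch below returns the names of the fields that would be fed into the hash for a given packet; the Packet type is hypothetical. The table says "< 4 labels" while the MPLS exact-route section later says "<= 4 labels"; this sketch assumes a stack of up to 4 labels is still parsed for IP.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    kind: str                  # "ipv4", "ipv6" or "mpls"
    labels: tuple = ()         # MPLS label stack, top label first
    ip_payload: bool = False   # MPLS only: does an IP header follow the bottom label?
    l4: bool = False           # TCP/UDP ports available?


def hash_fields(p: Packet) -> list:
    """Return the fields fed into the ECMP hash, following the table above."""
    ip_fields = ["src_ip", "dst_ip"] + (["src_port", "dst_port"] if p.l4 else [])
    if p.kind in ("ipv4", "ipv6"):
        return ip_fields + ["router_id"]
    if p.kind == "mpls":
        if not p.ip_payload:
            return ["innermost_label", "router_id"]
        if len(p.labels) <= 4:  # assumption: up to 4 labels are parsed for IP
            return ip_fields + ["router_id"]
        return ["label_4", "router_id"]  # deeper stacks: 4th label
    raise ValueError(f"unknown traffic type: {p.kind}")


print(hash_fields(Packet("mpls", labels=(24005, 16001), ip_payload=False)))
# ['innermost_label', 'router_id']
```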

Router ID

The router ID is a value taken from an interface address in the system, in an attempt to provide some per-node variation.

This value is determined at boot time only, and you can see which value the system uses with:

sh arm router-ids

Example:

RP/0/RSP0/CPU0:A9K-BNG#show arm router-id

Tue Aug 28 11:51:50.291 EDT

Router-ID Interface

8.8.8.8 Loopback0

RP/0/RSP0/CPU0:A9K-BNG#

Bundle in L2 vs L3 scenarios

This section is specific to bundles. A bundle can either be an attachment circuit (AC), or it can be used to route over.

Depending on how the bundle ether is used, different hash field calculations may apply.

When the bundle ether interface has an IP address configured, then we follow the ECMP load-balancing scheme provided above.

When the bundle ether is used as an attachment circuit (that is, it has the "l2transport" keyword and is used in an xconnect or bridge-domain configuration), L2-based balancing is used by default: source and destination MAC, with router ID.

If you have a router on each end of the ACs, the MACs hardly vary (in fact not at all), so you may want to switch to L3-based balancing, which can be configured under l2vpn:

RP/0/RSP0/CPU0:A9K-BNG#configure

RP/0/RSP0/CPU0:A9K-BNG(config)#l2vpn

RP/0/RSP0/CPU0:A9K-BNG(config-l2vpn)#load-balancing flow ?

src-dst-ip Use source and destination IP addresses for hashing

src-dst-mac Use source and destination MAC addresses for hashing
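Why src-dst-ip helps on an AC between two routers can be sketched in Python: with src-dst-mac, every frame between the two routers carries the same MAC pair, so every flow hashes to the same member, while src-dst-ip spreads flows by their IP addresses. The SHA-256-based hash, the member names and the modulo selection are stand-ins, not the NP's algorithm.

```python
import hashlib


def h32(*fields: str) -> int:
    """Stand-in 32-bit hash (truncated SHA-256), not the NP's actual function."""
    digest = hashlib.sha256("|".join(fields).encode()).digest()
    return int.from_bytes(digest[:4], "big")


MEMBERS = ["Te0/0/0/0", "Te0/0/0/1"]   # hypothetical bundle members
ROUTER_ID = "8.8.8.8"
# One router on each end of the AC: every frame carries the same MAC pair.
SMAC, DMAC = "0011.2233.4455", "00aa.bbcc.ddee"

# 100 IP flows behind those routers.
flows = [(f"10.0.0.{i}", "192.0.2.1") for i in range(1, 101)]

# load-balancing flow src-dst-mac: the hash input is identical for every flow.
by_mac = {MEMBERS[h32(SMAC, DMAC, ROUTER_ID) % len(MEMBERS)] for _ in flows}
# load-balancing flow src-dst-ip: the hash input varies per flow.
by_ip = {MEMBERS[h32(src, dst, ROUTER_ID) % len(MEMBERS)] for src, dst in flows}

print("src-dst-mac members used:", len(by_mac))  # 1
print("src-dst-ip members used:", len(by_ip))
```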

Use case scenarios

Case 1 Bundle Ether Attachment circuit (downstream)

In this case the bundle ether has a configuration similar to:

interface bundle-ether 100.2 l2transport
 encap dot1q 2
 rewrite ingress tag pop 1 symmetric

And the associated L2VPN configuration, such as:

l2vpn
 bridge group BG
  bridge-domain BD
   interface bundle-e100.2

In the downstream direction we load-balance with the L2 information by default, unless load-balancing flow src-dst-ip is configured.

Case 2 Pseudowire over Bundle Ether interface (upstream)

In this case it doesn't really matter whether the attachment circuit is a bundle or a single interface.

The associated configuration for this in the L2VPN is:

l2vpn
 bridge group BG
  bridge-domain BD
   interface bundle-e100.2
   vfi MY_VFI
    neighbor 1.1.1.1 pw-id 2

interface bundle-ether 200
 ipv4 add 192.168.1.1 255.255.255.0

router static
 address-family ipv4 unicast
  1.1.1.1/32 192.168.1.2

In this case neighbor 1.1.1.1 is found via routing, which happens to egress out of our Bundle-Ether interface.

This traffic is MPLS-encapsulated (PW), and therefore MPLS-based load-balancing is used.

Case 3 Routing through a Bundle Ether interface

In this scenario we are just routing out the bundle Ethernet interface because our ADJ tells us so (as defined by the routing).

Config:

interface bundle-ether 200
 ipv4 add 200.200.1.1 255.255.255.0

show route (OSPF inter area route)

O IA 49.1.1.0/24 [110/2] via 200.200.1.2, 2w4d, Bundle-Ether200

Even if this bundle-ether is MPLS-enabled and we impose a label to get to the next hop or do label swapping, in this case the Ethernet header followed by the MPLS header has IP directly behind it.

We are therefore able to do L3 load-balancing, as per the chart above.

(Layer 3) Load-balancing in MPLS scenarios

As highlighted throughout this technote, whether load-balancing in MPLS scenarios is based on the MPLS label or on IP depends on the inner encapsulation.

In the diagram below, an Ethernet frame carrying IP enters a pseudowire, is switched through the LSR (P router), and continues to the remote PE.

In this case the pseudowire encapsulates the complete frame (including its Ethernet header) into MPLS, with an outer Ethernet header for the next hop from the left PE router to the LSR in the middle.

Although the number of labels is LESS than 4 AND there is IP available, the system can't skip past the inner Ethernet header to read the IP, and therefore falls back to MPLS label-based load-balancing.

How the system differentiates between an IP header and a non-IP payload after the innermost label is explained here:

Just to recap, the MPLS header looks like this:

The important part of this picture is that it shows MPLS-IP. In the VPLS/VPWS case, this "GREEN" field is likely to start with an Ethernet header.

Because hardware forwarding devices are limited in the number of PPS they can handle, and the PPS rate is directly tied to the number of instructions needed to process a packet, we want to process a packet in the LEAST number of instructions possible.

Following that reasoning, we check the first nibble following the MPLS header: if it is a 4 (IPv4) or a 6 (IPv6), we ASSUME that this is an IP header and interpret the data that follows as such, deriving the L3 source and destination.

This works great in the majority of scenarios because, let's be honest, MAC addresses for the longest time started with 00-0...

In other words, not a 4 or 6, so we'd default to MPLS-based balancing, which is what we want for VPLS/VPWS.

However, these days we see MAC addresses that no longer start with zeros, and in fact 4s and 6s are seen!

This fools the system into believing that the inner packet is IP, while in reality it is an Ethernet header.

There is no good way to classify an IP header within a limited number of instruction cycles without affecting performance.

In an ideal world you'd want to use an MD5 hash and all possible checks to make the perfect decision.

Reality is different: no one wants to pay what it would cost to design ASICs that run a very comprehensive set of checks at high performance without affecting the PPS rate.

Bottom line: if your DMAC starts with a 4 or 6, you have a situation.

Solution

Use the MPLS control word.

The control word is negotiated end to end and inserts a special 4-byte word, starting with zeros, specifically for this purpose.

The system will now read a 0 instead of a 4 or 6 and default to MPLS based balancing.
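The first-nibble heuristic and the effect of the control word can be sketched in a few lines of Python. This is a simplified model, assuming the check looks only at the high nibble of the first byte after the bottom-of-stack label; the helper names, MAC addresses and the all-zero control word are illustrative.

```python
def classify_after_bos(payload: bytes) -> str:
    """First-nibble check on the bytes following the bottom-of-stack label."""
    nibble = payload[0] >> 4
    if nibble == 4:
        return "ipv4"   # assumed IPv4: L3 fields are hashed
    if nibble == 6:
        return "ipv6"   # assumed IPv6: L3 fields are hashed
    return "mpls"       # anything else: label-based hashing


def eth_header(dmac: str, smac: str) -> bytes:
    """Inner Ethernet header (DMAC, SMAC, EtherType 0x0800) from dotted MACs."""
    return (bytes.fromhex(dmac.replace(".", ""))
            + bytes.fromhex(smac.replace(".", ""))
            + b"\x08\x00")


CONTROL_WORD = bytes(4)  # simplified: first nibble is 0 (all-zero word here)

ip_packet = bytes([0x45, 0x00])                            # IPv4 header start
old_mac = eth_header("0011.2233.4455", "0066.7788.99aa")   # DMAC starts with 0
new_mac = eth_header("4c11.2233.4455", "0066.7788.99aa")   # DMAC starts with 4

print(classify_after_bos(ip_packet))               # ipv4 (correct, LSR case)
print(classify_after_bos(old_mac))                 # mpls (correct, VPLS/VPWS)
print(classify_after_bos(new_mac))                 # ipv4 (wrong: Ethernet header)
print(classify_after_bos(CONTROL_WORD + new_mac))  # mpls (control word fixes it)
```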

Configuration

To enable the control word, use the following template:

l2vpn
 pw-class CW
  encapsulation mpls
   control-word
  !
 !
 xconnect group TEST
  p2p TEST_PW
   interface GigabitEthernet0/0/0/0
   neighbor 1.1.1.1 pw-id 100
    pw-class CW
   !
  !
 !
!

Alternative solutions: Fat Pseudowire

Since you might have little control over the inner label (the PW label), and you probably want to ensure some sort of load-balancing, especially on P routers that have no knowledge of the offered service or of the MPLS packets they transport, another solution is available, known as FAT Pseudowire.

FAT PW inserts a "flow label" whose value is computed like a hash, to provide hop-by-hop variation and more granular load-balancing. Special care is taken that there is variation (based on the l2vpn command, see below), that no reserved values are generated, and that the values don't collide with allocated label values.
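A minimal Python sketch of how a flow label could be derived for load-balancing flow src-dst-ip, assuming a 32-bit stand-in hash (truncated SHA-256, not the NP's function). It maps the hash into the 20-bit label space while skipping the reserved values 0 to 15; avoiding collisions with allocated labels is not modeled. The function name flow_label is hypothetical.

```python
import hashlib

MAX_LABEL = (1 << 20) - 1   # MPLS labels are 20-bit values
RESERVED = range(0, 16)     # labels 0-15 are reserved


def flow_label(src_ip: str, dst_ip: str) -> int:
    """Hypothetical flow-label derivation for 'load-balancing flow src-dst-ip'."""
    digest = hashlib.sha256(f"{src_ip}|{dst_ip}".encode()).digest()
    h = int.from_bytes(digest[:4], "big")      # stand-in 32-bit hash
    # Map into 16 .. 2^20 - 1 so a reserved value can never be produced.
    return len(RESERVED) + h % (MAX_LABEL + 1 - len(RESERVED))


labels = [flow_label(f"10.1.1.{i}", "198.51.100.7") for i in range(1, 51)]
assert all(label not in RESERVED and label <= MAX_LABEL for label in labels)
print(len(set(labels)), "distinct flow labels for 50 flows")
```

Because the label is a pure function of the flow's addresses, every packet of a flow carries the same label and follows the same path, while different flows get different labels that P routers can hash on.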

FAT PW is supported starting with XR 4.2.1 on both Trident- and Typhoon-based linecards.

Note that with the relatively new PseudoWire Headend (PWHE) functionality, FAT labels cannot be used. You can configure them, but forwarding will break, especially downstream traffic entering the PWHE interface.

PWHE requires a pin-down list to identify the possible ingress/egress interfaces the PW can come in on (all features are programmed there). The system load-balances based on the PW ID, both on the A9K originating the PWHE and on intermediate P nodes. The reason is that the egress interface selection is already made when the PW encapsulation is built. It isn't a simple change in the current forwarding architecture to "rehash" based on something else without incurring a heavy PPS reduction (and possibly recirculation).

Packet transformation with a Flow Label

Configuration of FAT Pseudowire

The following is a configuration example:

l2vpn
 load-balancing flow src-dst-ip
 pw-class test
  encapsulation mpls
   load-balancing
    flow-label both static
   !
  !

You can also affect the way that the flow label is computed:

Under L2VPN configuration, use the “load-balancing flow” configuration command to determine how the flow label is generated:

l2vpn
 load-balancing flow src-dst-mac

This is the default configuration, and will cause the NP to build the flow label from the source and destination MAC addresses in each frame.

l2vpn
 load-balancing flow src-dst-ip

This is the recommended configuration, and will cause the NP to build the flow label from the source and destination IP addresses in each frame.

• Note that IPv6 hashing is not supported in the first release.

FAT Pseudowire TLV

The Flow-Aware Transport (FAT) PW signalled sub-TLV ID currently carries the value 0x11, as originally specified in draft-ietf-pwe3-fat-pw. This value has recently been corrected in the draft to 0x17, the flow label sub-TLV identifier assigned by IANA.

This matters when interoperating between XR 4.3.1 and earlier and XR 4.3.2 and later: all XR releases 4.3.1 and prior that support FAT PW default to value 0x11, while all XR releases 4.3.2 and later default to value 0x17.

Solution:

Use the following configuration on XR 4.3.2 and later to set the sub-TLV ID (here to the old value 0x11, which is 17 decimal, to match 4.3.1 and earlier):

pw-class <pw-name>
 encapsulation mpls
  load-balancing
   flow-label both
   flow-label code 17

NOTE: I have received a lot of questions about the 0x11 to 0x17 change (as driven by IANA) versus the value 17 configured in this example.

The crux is that the flow label code is configured in DECIMAL, while the IANA/draft numbers mentioned are HEX.

So 0x11, the old value, is 17 decimal, which looks confusingly similar to 0x17, the new IANA-assigned number (23 decimal). Very annoying, thank IANA.

(Or we could have made the knob hex, I guess.)
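The hex/decimal mix-up is easy to check in Python: the two sub-TLV values quoted in hex map to the decimal values you would enter for flow-label code. The dictionary keys are just labels for this example; code 23 for the IANA value follows from the arithmetic, while the text itself only shows code 17.

```python
# Sub-TLV IDs as quoted (in hex) by the draft and by IANA.
subtlv_ids = {
    "draft, XR 4.3.1 and earlier": 0x11,
    "IANA, XR 4.3.2 and later": 0x17,
}

for origin, value in subtlv_ids.items():
    # 'flow-label code' is entered in decimal, so print the decimal form.
    print(f"{origin}: 0x{value:02x} -> flow-label code {value}")
# draft, XR 4.3.1 and earlier: 0x11 -> flow-label code 17
# IANA, XR 4.3.2 and later: 0x17 -> flow-label code 23
```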

Load-balancing and priority configurations

In the case of VPWS or VPLS, at the ingress PE side, it’s possible to change the load-balancing upstream toward the MPLS core in three different ways:

1. In L2VPN sub-configuration mode, with the “load-balancing flow” command and the following options:

RP/0/RSP1/CPU0:ASR9000(config-l2vpn)# load-balancing flow ?

src-dst-ip

src-dst-mac [default]

2. In pw-class sub-configuration mode, with the “load-balancing” command and the following options:

RP/0/RSP1/CPU0:ASR9000(config-l2vpn-pwc-mpls-load-bal)#?

flow-label [see FAT Pseudowire section]

pw-label [per-VC load balance]

3. In bundle interface sub-configuration mode, with the “bundle load-balancing hash” command and the following options:

RP/0/RSP1/CPU0:ASR9000(config-if)#bundle load-balancing hash ? [For default, see previous sections]

dst-ip

src-ip

It’s important not only to understand these commands but also to know that 1 is weaker than 2, which is weaker than 3.

Example:

l2vpn
 load-balancing flow src-dst-ip
 pw-class FAT
  encapsulation mpls
   control-word
   transport-mode ethernet
   load-balancing
    pw-label
    flow-label both static

interface Bundle-Ether1
 (...)
 bundle load-balancing hash dst-ip

Because of these priorities, on the egress side of the ingress PE (toward the MPLS core), we do per-dst-ip load-balancing (3).

If the bundle-specific configuration is removed, we do per-VC load-balancing (2).

If the pw-class load-balancing configuration is also removed, we do per-src-dst-ip load-balancing (1).

with thanks to Bruno Oliveira for this priority section
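The precedence rule can be expressed as a tiny Python function. The function name and the string values are hypothetical; it only encodes the ordering described above (bundle hash over pw-class load-balancing over l2vpn load-balancing flow), with src-dst-mac as the l2vpn default.

```python
from typing import Optional


def effective_pw_load_balancing(l2vpn_flow: str = "src-dst-mac",
                                pw_class_lb: Optional[str] = None,
                                bundle_hash: Optional[str] = None) -> str:
    """Which knob wins on the ingress PE toward the MPLS core (3 > 2 > 1)."""
    if bundle_hash is not None:          # (3) bundle load-balancing hash
        return f"bundle hash {bundle_hash}"
    if pw_class_lb is not None:          # (2) pw-class load-balancing
        return f"pw-class {pw_class_lb}"
    return f"l2vpn flow {l2vpn_flow}"    # (1) l2vpn load-balancing flow


# The example configuration above, then removing knobs one at a time.
print(effective_pw_load_balancing("src-dst-ip", "pw-label", "dst-ip"))  # bundle hash dst-ip
print(effective_pw_load_balancing("src-dst-ip", "pw-label"))            # pw-class pw-label
print(effective_pw_load_balancing("src-dst-ip"))                        # l2vpn flow src-dst-ip
```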

P2MP MPLS TE Tunnels

Only one bundle member will be selected to forward traffic on the P2MP MPLS TE mid-point node.

Possible alternatives that would achieve better load balancing are: a) increase the number of tunnels or b) switch to mLDP.

IPv6

In releases before 4.2.0, the IPv6 hash calculation only uses the last 64 bits of the address, folded and fed into the hash together with the regular router ID and L4 info.

In 4.2.0 we enhanced this so that the full IPv6 address is taken into consideration, along with the L4 info and router ID.
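The practical effect of the pre-4.2.0 behavior can be shown in Python: two addresses that differ only in their upper 64 bits produce identical hash input. How the bits are folded is not documented here; XOR-folding into 32 bits is an assumption of this sketch.

```python
import ipaddress

MASK32 = 0xFFFFFFFF


def fold32(value: int, bits: int) -> int:
    """XOR-fold an integer of the given width into 32 bits (assumed folding method)."""
    folded = 0
    for shift in range(0, bits, 32):
        folded ^= (value >> shift) & MASK32
    return folded


def v6_input_pre_420(addr: str) -> int:
    """Before 4.2.0: only the last 64 bits of the address are used."""
    low64 = int(ipaddress.IPv6Address(addr)) & ((1 << 64) - 1)
    return fold32(low64, 64)


def v6_input_420(addr: str) -> int:
    """4.2.0 and later: the full 128-bit address is taken into consideration."""
    return fold32(int(ipaddress.IPv6Address(addr)), 128)


a, b = "2001:db8:1::1", "2001:db8:2::1"  # differ only in the upper 64 bits
print(v6_input_pre_420(a) == v6_input_pre_420(b))  # True: same hash input
print(v6_input_420(a) == v6_input_420(b))          # False
```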

Determining load-balancing

You can determine the load-balancing on the router by using the following commands:

L3/ECMP

For IP :

RP/0/RSP0/CPU0:A9K-BNG#show cef exact-route 1.1.1.1 2.2.2.2 protocol udp ?

source-port Set source port

You have the ability to only specify L3 info, or include L4 info by protocol with source and destination ports.

It is important to understand that the 9K does FLOW-based hashing: all packets belonging to the same flow take the same path.

If one flow is more active or requires more bandwidth than another flow, path utilization may not be perfectly equal.

UNLESS you provide enough variation in the L3/L4 fields, this can't be alleviated; it is generally seen in lab tests due to the limited number of flows.
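A short Python simulation illustrates flow-based hashing: a single large flow always lands on one link, while thousands of small flows spread out. The hash is a SHA-256 stand-in, and the link names and modulo selection are hypothetical.

```python
import hashlib
from collections import Counter

LINKS = ["Te0/0/0/0", "Te0/0/0/1", "Te0/0/0/2", "Te0/0/0/3"]  # hypothetical


def link_for(flow: tuple) -> str:
    """Every packet of a flow has the same 5-tuple, hence the same hash and link."""
    h = int.from_bytes(hashlib.sha256(repr(flow).encode()).digest()[:4], "big")
    return LINKS[h % len(LINKS)]


def load_per_link(flows: dict) -> Counter:
    """flows maps a 5-tuple to its rate in Mb/s; returns the Mb/s carried per link."""
    load = Counter()
    for flow, mbps in flows.items():
        load[link_for(flow)] += mbps
    return load


# Lab test: one big UDP flow. Production: 2000 small TCP flows.
lab = {("10.0.0.1", "10.0.0.2", 17, 5000, 5000): 900}
prod = {(f"10.0.{i // 250}.{i % 250}", "203.0.113.9", 6, 1024 + i, 443): 1
        for i in range(2000)}

print(load_per_link(lab))   # all 900 Mb/s on a single link
print(load_per_link(prod))  # roughly even across the four links
```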

For MPLS based hashing :

RP/0/RSP0/CPU0:A9K-BNG#sh mpls forwarding exact-route label 1234 bottom-label 16000 ... location 0/1/cpu0

This command gives us the output interface chosen as a result of hashing with MPLS label 16000. The bottom-label (in this case '16000') is either the VC label (in case of PW L2 traffic) or the bottom label of the MPLS stack (in case of MPLS-encapsulated L3 traffic with more than 4 labels). Please note that for regular MPLS packets (with <= 4 labels) encapsulating an L3 packet, only IP-based hashing is performed on the underlying IP packet.

Also note that the MPLS hash algorithm is different for Trident and Typhoon. The more varied the labels, the better the distribution. However, on Trident there is a known behavior of the MPLS hash on bundle interfaces: if a bundle interface has an even number of member links, the MPLS hash causes only half of these links to be utilized. To get around this, you may have to configure the "cef load-balancing algorithm adjust 3" command on the router, or use an odd number of member links in the bundle interface. Note that this limitation applies only to Trident line cards, not Typhoon.

Bundle member selection

RP/0/RSP0/CPU0:A9K-BNG#bundle-hash bundle-e 100 loc 0/0/cPU0

Calculate Bundle-Hash for L2 or L3 or sub-int based: 2/3/4 [3]: 3

Enter traffic type (1.IPv4-inbound, 2.MPLS-inbound, 3:IPv6-inbound): [1]: 1

Single SA/DA pair or range: S/R [S]:

Enter source IPv4 address [255.255.255.255]:

Enter destination IPv4 address [255.255.255.255]:

Compute destination address set for all members? [y/n]: y

Enter subnet prefix for destination address set: [32]:

Enter bundle IPv4 address [255.255.255.255]:

Enter L4 protocol ID. (Enter 0 to skip L4 data) [0]:

Invalid protocol. L4 data skipped.

Link hashed [hash_val:1] to is GigabitEthernet0/0/0/19 LON 1 ifh 0x4000580

Whether the hash type is L2 or L3 depends on whether you are using the bundle Ethernet interface as an attachment circuit in a bridge domain or VPWS cross-connect, or whether the bundle ether is used to route over (e.g. it has an IP address configured).

Polarization

Polarization pertains mostly to ECMP scenarios and is the effect of routers in a chain making the same load-balancing decision.

The following picture tries to explain that.

In this scenario we assume 2 buckets, that is 1 bit of a 7-bit hash result. Let's say that in this case we only look at bit 0, so it becomes an "EVEN" or "ODD" type decision. The routers in the chain have access to the same L3 and L4 fields; the only varying factor between them is the router ID.

If the RIDs are similar or close (which is not uncommon), the system may not provide enough variation in the hash result, which eventually leads subsequent routers to compute the same hash and therefore polarize to a "Southern" (in the example above) or "Northern" path.

In XR 4.2.1 via a SMU or in XR 4.2.3 in the baseline code, we provide a knob that allows for shifting the hash result. By choosing a different "shift" value per node, we can make the system look at a different bit (for this example), or bits.

In this example the first line shifts the hash by 1, the second one shifts it by 2.

Considering that the real implementation has more buckets and looks at more bits, the member or path selection can change significantly for the same hash once it is shifted, which is what we ultimately want.
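The polarization effect and the shift knob can be simulated in Python. This sketch assumes both routers compute the identical 7-bit hash (a SHA-256 stand-in), as happens when the router IDs add no variation, and uses a single bit for a 2-bucket decision as in the example above. The flow strings are illustrative.

```python
import hashlib


def h7(flow: str) -> int:
    """Stand-in 7-bit hash; both routers see the same fields, so the same value."""
    return hashlib.sha256(flow.encode()).digest()[0] & 0x7F


def path(flow: str, shift: int = 0) -> int:
    # 2 buckets: look at a single bit of the (shifted) hash -> EVEN/ODD decision.
    return (h7(flow) >> shift) & 1


flows = [f"10.0.0.{i}->192.0.2.{i}" for i in range(1, 129)]

# Router 1 uses bit 0; only the flows it sends on path 0 reach router 2.
to_router2 = [f for f in flows if path(f) == 0]

no_shift = {path(f) for f in to_router2}
with_shift = {path(f, shift=1) for f in to_router2}
print("router 2, no shift:", no_shift)    # {0}: polarized onto one path
print("router 2, shift 1:", with_shift)   # both paths used
```

With no shift, router 2 re-evaluates the very bit that router 1 already fixed to 0, so it can only ever pick one path; shifting by 1 makes it look at an independent bit.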

HASH result Shifting

- Trident allows a maximum shift of 4 (for performance reasons)

- Typhoon allows a maximum shift of 32

Command

cef load-balancing algorithm adjust <value>

The command accepts values larger than 4 on Trident; if you configure such a value on Trident, it is effectively applied as a modulo, so a shift of 1 is the same as a shift of 5.

Fragmentation and Load-balancing

When the system detects fragmented packets, it no longer uses L4 information. The reason is that if L4 info were used, subsequent fragments, which no longer contain the L4 info (they have an L3 header only!), would produce a different hash result than the initial fragment and could take different paths, resulting in out-of-order delivery.

Regardless of release, regardless of hardware (ASR9K or CRS), when fragmentation is detected we only use L3 information for the hash computation.
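The rule can be sketched in Python as a function that builds the hash key: any packet with the More Fragments flag set or a non-zero fragment offset contributes only its L3 addresses. The IPv4Packet type is hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class IPv4Packet:
    src: str
    dst: str
    more_fragments: bool = False   # MF flag
    frag_offset: int = 0           # in 8-byte units
    sport: Optional[int] = None    # TCP/UDP ports, absent in non-first fragments
    dport: Optional[int] = None


def hash_key(p: IPv4Packet) -> tuple:
    """Fields fed into the hash: L4 ports are ignored for any fragment."""
    is_fragment = p.more_fragments or p.frag_offset > 0
    if is_fragment or p.sport is None:
        return (p.src, p.dst)
    return (p.src, p.dst, p.sport, p.dport)


first = IPv4Packet("10.0.0.1", "10.0.0.2", more_fragments=True, sport=5000, dport=53)
second = IPv4Packet("10.0.0.1", "10.0.0.2", frag_offset=185)  # 1480 bytes in
assert hash_key(first) == hash_key(second)  # same key -> same path, no reordering
```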

Related Information

Xander Thuijs, CCIE #6775

Sr Tech Lead ASR9000

interface Bundle-Ether300
 description ### SCE INTERNET SUBS-INTERFACE
 vrf SCE_INTERNET_SUBS_VRF
 ipv4 address 172.16.251.65 255.255.255.252
 lacp cisco enable link-order signaled
 bundle load-balancing hash src-ip
 bundle maximum-active links 2
 load-interval 30
!
interface TenGigE0/5/0/6
 description ## Cisco SCE-1 SUBSCRIBER- Port0
 bundle id 300 mode active
 bundle port-priority 1
 load-interval 30
!

RP/0/RSP0/CPU0:Nethouse-BGP-Router#sh run int tenGigE 0/5/0/7

Fri Feb 22 08:50:22.480 GMT

interface TenGigE0/5/0/7
 description ## Cisco SCE-1 SUBSCRIBER- Port2
 bundle id 300 mode active
 bundle port-priority 1
 load-interval 30
!

RP/0/RSP0/CPU0:Nethouse-BGP-Router#sh int tenGigE 0/5/0/6

Thu Feb 21 13:35:42.087 GMT

TenGigE0/5/0/6 is up, line protocol is up

Interface state transitions: 3

Hardware is TenGigE, address is 5087.8961.9c16 (bia 5087.8961.9c16)

Layer 1 Transport Mode is LAN

Description: ## Cisco SCE-1 SUBSCRIBER- Port0

Internet address is Unknown

MTU 1514 bytes, BW 10000000 Kbit (Max: 10000000 Kbit)

reliability 255/255, txload 0/255, rxload 0/255

Encapsulation ARPA,

Full-duplex, 10000Mb/s, link type is force-up

output flow control is off, input flow control is off

Carrier delay (up) is 10 msec

loopback not set,

Last link flapped 01:00:26

Last input 00:00:00, output 00:00:00

Last clearing of "show interface" counters 00:39:28

30 second input rate 5082000 bits/sec, 434 packets/sec

30 second output rate 3332000 bits/sec, 483 packets/sec

1188230 packets input, 1664573001 bytes, 0 total input drops

0 drops for unrecognized upper-level protocol

Received 0 broadcast packets, 80 multicast packets

0 runts, 0 giants, 0 throttles, 0 parity

0 input errors, 0 CRC, 0 frame, 0 overrun, 0 ignored, 0 abort

821347 packets output, 486531603 bytes, 0 total output drops

Output 0 broadcast packets, 78 multicast packets

0 output errors, 0 underruns, 0 applique, 0 resets

0 output buffer failures, 0 output buffers swapped out

0 carrier transitions

RP/0/RSP0/CPU0:Nethouse-BGP-Router#sh int tenGigE 0/5/0/7

Thu Feb 21 13:35:44.726 GMT

TenGigE0/5/0/7 is up, line protocol is up

Interface state transitions: 7

Hardware is TenGigE, address is 5087.8961.9c17 (bia 5087.8961.9c17)

Layer 1 Transport Mode is LAN

Description: ## Cisco SCE-1 SUBSCRIBER- Port2

Internet address is Unknown

MTU 1514 bytes, BW 10000000 Kbit (Max: 10000000 Kbit)

reliability 255/255, txload 0/255, rxload 0/255

Encapsulation ARPA,

Full-duplex, 10000Mb/s, link type is force-up

output flow control is off, input flow control is off

Carrier delay (up) is 10 msec

loopback not set,

Last link flapped 00:13:12

Last input 00:00:02, output 00:00:00

Last clearing of "show interface" counters 00:39:29

30 second input rate 0 bits/sec, 0 packets/sec

30 second output rate 891000 bits/sec, 130 packets/sec

89 packets input, 11036 bytes, 0 total input drops

0 drops for unrecognized upper-level protocol

Received 0 broadcast packets, 89 multicast packets

0 runts, 0 giants, 0 throttles, 0 parity

0 input errors, 0 CRC, 0 frame, 0 overrun, 0 ignored, 0 abort

223302 packets output, 139316935 bytes, 0 total output drops

Output 0 broadcast packets, 90 multicast packets

0 output errors, 0 underruns, 0 applique, 0 resets

0 output buffer failures, 0 output buffers swapped out

4 carrier transitions

RP/0/RSP0/CPU0:Nethouse-BGP-Router#sh int bundle-ether 300

Thu Feb 21 13:35:50.370 GMT

Bundle-Ether300 is up, line protocol is up

Interface state transitions: 3

Hardware is Aggregated Ethernet interface(s), address is 8478.ac7b.05f0

Description: ### SCE INTERNET SUBS-INTERFACE

Internet address is 172.16.251.65/30

MTU 1514 bytes, BW 20000000 Kbit (Max: 20000000 Kbit)

reliability 255/255, txload 0/255, rxload 0/255

Encapsulation ARPA,

Full-duplex, 20000Mb/s

loopback not set,

Last link flapped 00:59:49

ARP type ARPA, ARP timeout 04:00:00

No. of members in this bundle: 2

TenGigE0/5/0/6 Full-duplex 10000Mb/s Active

TenGigE0/5/0/7 Full-duplex 10000Mb/s Active

Last input 00:00:00, output 00:00:00

Last clearing of "show interface" counters 00:39:32

30 second input rate 5031000 bits/sec, 430 packets/sec

30 second output rate 4220000 bits/sec, 612 packets/sec

1191815 packets input, 1669681154 bytes, 0 total input drops

0 drops for unrecognized upper-level protocol

Received 0 broadcast packets, 169 multicast packets

0 runts, 0 giants, 0 throttles, 0 parity

0 input errors, 0 CRC, 0 frame, 0 overrun, 0 ignored, 0 abort

1049383 packets output, 629933247 bytes, 0 total output drops

Output 0 broadcast packets, 168 multicast packets

0 output errors, 0 underruns, 0 applique, 0 resets

0 output buffer failures, 0 output buffers swapped out

0 carrier transitions

sho cef vrf SCE_INTERNET_SUBS_VRF summary

Fri Feb 22 08:52:24.327 GMT

Router ID is 172.31.8.27

IP CEF with switching (Table Version 0) for node0_RSP0_CPU0

Load balancing: L4

Tableid 0xe0000019 (0x7146ef64), Vrfid 0x6000000a, Vrid 0x20000000, Flags 0x1001

Vrfname SCE_INTERNET_SUBS_VRF, Refcount 25

11 routes, 0 protected, 0 reresolve, 0 unresolved (0 old, 0 new), 880 bytes

4 rib, 0 lsd, 0:0 aib, 1 internal, 2 interface, 4 special, 1 default routes

10 load sharing elements, 2987 bytes, 0 references

0 shared load sharing elements, 0 bytes

10 exclusive load sharing elements, 2987 bytes

0 route delete cache elements

13 local route bufs received, 83 remote route bufs received, 0 mix bufs received

3 local routes, 1 remote routes

14 total local route updates processed

83 total remote route updates processed

0 pkts pre-routed to cust card

0 pkts pre-routed to rp card

0 pkts received from core card

0 CEF route update drops, 5 revisions of existing leaves

0 CEF route update drops due to version mis-match

Resolution Timer: 15s

0 prefixes modified in place

0 deleted stale prefixes

1 prefix with label imposition, 1 prefix with label information

0 LISP EID prefixes, 0 merged, via 0 rlocs

2 next hops

1 incomplete next hop

0 PD backwalks on LDIs with backup path

RP/0/RSP0/CPU0:Nethouse-BGP-Router#sho cef vrf SCE_INTERNET_SUBS_VRF detail

Fri Feb 22 08:52:25.785 GMT

0.0.0.0/0, version 71, proxy default, internal 0x1000011 0x0 (ptr 0x71f40670) [1], 0x0 (0x0), 0x0 (0x0)

Updated Feb 21 12:36:01.800

Prefix Len 0, traffic index 0, precedence n/a, priority 3

via 172.16.251.66/32, 3 dependencies, recursive [flags 0x0]

path-idx 0 NHID 0x0 [0x72c51a38 0x0]

next hop 172.16.251.66/32 via 172.16.251.66/32

via 172.16.251.66/32, Bundle-Ether300, 6 dependencies, weight 0, class 0 [flags 0x0]

path-idx 1 NHID 0x0 [0x71712790 0x0]

next hop 172.16.251.66/32

local adjacency

0.0.0.0/32, version 0, broadcast

Updated Feb 21 08:54:51.052

Prefix Len 32

93.182.72.63/32, version 99, internal 0x5000001 0x0 (ptr 0x72ccee20) [1], 0x0 (0x0), 0x208 (0x7202be60)

Updated Feb 22 08:31:54.789

Prefix Len 32, traffic index 0, precedence n/a, priority 3

via 172.31.8.18/32, 3 dependencies, recursive [flags 0x6000]

path-idx 0 NHID 0x0 [0x720f38fc 0x0]

recursion-via-/32

next hop VRF - 'default', table - 0xe0000000

next hop 172.31.8.18/32 via 24011/0/21

next hop 172.16.251.70/32 BE101 labels imposed {ImplNull 19171}

172.16.251.64/30, version 68, attached, connected, glean adjacency, internal 0x3000061 0x0 (ptr 0x72c0d760) [1], 0x0 (0x71de4b50), 0x0 (0x0)

Updated Feb 21 12:36:01.796

Prefix Len 30, traffic index 0, precedence n/a, priority 0

via Bundle-Ether300, 2 dependencies, weight 0, class 0 [flags 0x8]

path-idx 0 NHID 0x0 [0x713a48ec 0x0]

glean adjacency

172.16.251.64/32, version 0, broadcast

Updated Feb 21 12:36:01.797

Prefix Len 32

172.16.251.65/32, version 69, attached, receive

Updated Feb 21 12:36:01.796

Prefix Len 32

internal 0x3004041 (ptr 0x72c0c490) [3], 0x0 (0x71de06b8), 0x0 (0x0)

172.16.251.66/32, version 0, internal 0x1020001 0x0 (ptr 0x72c51a38) [3], 0x0 (0x71de4bc8), 0x0 (0x0)

Updated Feb 21 12:36:01.800

Prefix Len 32, traffic index 0, Adjacency-prefix, precedence n/a, priority 15

via 172.16.251.66/32, Bundle-Ether300, 6 dependencies, weight 0, class 0 [flags 0x0]

path-idx 0 NHID 0x0 [0x71712790 0x0], Internal 0x71246d08

next hop 172.16.251.66/32

local adjacency

172.16.251.67/32, version 0, broadcast

Updated Feb 21 12:36:01.796

Prefix Len 32

224.0.0.0/4, version 0, external adjacency, internal 0x1040001 0x0 (ptr 0x71f40830) [1], 0x0 (0x71de0a78), 0x0 (0x0)

Updated Feb 21 08:54:51.052

Prefix Len 4, traffic index 0, precedence n/a, priority 15

via 0.0.0.0/32, 25 dependencies, weight 0, class 0 [flags 0x0]

path-idx 0 NHID 0x0 [0x713a42b0 0x0]

next hop 0.0.0.0/32

external adjacency

224.0.0.0/24, version 0, receive

Updated Feb 21 08:54:51.052

Prefix Len 24

internal 0x1004001 (ptr 0x71f407c0) [1], 0x0 (0x71de0a50), 0x0 (0x0)

255.255.255.255/32, version 0, broadcast

Updated Feb 21 08:54:51.051

Prefix Len 32

RP/0/RSP0/CPU0:Nethouse-BGP-Router#


Hi Xander,

I have a problem with my boxes (Cisco NCS 5001, IOS XR 6.5.2): a bundle with two TenGigE members does not load-balance between its members in the egress direction towards the CE customer device. This platform does not support the command bundle load-balancing hash dst-ip under the bundle interface.

Hence, I applied the command bundle load-balancing hash auto under the sub-interface (l2transport interface), but nothing changed. I also tried to pin traffic to a member manually with bundle load-balancing hash 1. Again nothing changed. The egress traffic still flows mainly on port 2, while almost no traffic egresses port 1.

The issue is that my customer payload is non-IP (MPLS traffic); maybe that is why the hashing algorithm does not work properly.

Could you please advise? Thanks.

Sincerely,

Mustafa


Hi Xander,

Hope you are doing well!

I have a question that is not related to load-balancing but to LAG, and since I couldn't find any other relevant document, I decided to ask you here :) .

It seems it is not allowed to configure the bandwidth command on physical interfaces that are also configured as bundle members. What I want to achieve is the following (at a high level):

interface TenGigE0/0/0/0

description ** Physical - LAG member **

bandwidth 2500000

bundle id 99 mode active

!

interface TenGigE0/0/0/1

description ** Physical - LAG member **

bandwidth 2500000

bundle id 99 mode active

!

interface Bundle-Ether99

description ** LAG member **

With the above config, we would like to get the following output (also in SNMP):

- When both physical interfaces are up and running:

RP/0/RSP0/CPU0:ASR9000#sh int Bundle-Ether99

Bundle-Ether99 is up, line protocol is up

<...>

MTU 1600 bytes, BW 5000000 Kbit (Max: 20000000 Kbit)

- When only one physical interface is up and running:

RP/0/RSP0/CPU0:ASR9000#sh int Bundle-Ether99

Bundle-Ether99 is up, line protocol is up

<...>

MTU 1600 bytes, BW 2500000 Kbit (Max: 10000000 Kbit)

This is very helpful for operations/troubleshooting and capacity planning, and it is supported in IOS/IOS-XE.

Is this behavior expected in IOS-XR, or do we need to open a DDTS?

Thank you in advance,

Dimitris
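The arithmetic behind the two desired outputs above can be sketched in Python. This is a minimal sketch assuming the behavior the poster describes for IOS/IOS-XE: the bundle's BW is the sum of the configured bandwidth of the members that are up, and Max is the sum of those members' line rates. It is not IOS-XR code and does not claim that IOS-XR computes the values this way; the `Member` class and the `bundle_bw` function are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Member:
    """A bundle member as described in the post (hypothetical model)."""
    name: str
    configured_bw_kbit: int  # value of the "bandwidth" command on the member
    line_rate_kbit: int      # physical speed of the member (10G = 10000000 Kbit)
    up: bool


def bundle_bw(members: List[Member]) -> Tuple[int, int]:
    """Return (BW, Max) in Kbit, counting only members that are up.

    Assumption: BW sums the configured bandwidth, Max sums the line rates.
    """
    active = [m for m in members if m.up]
    bw = sum(m.configured_bw_kbit for m in active)
    max_bw = sum(m.line_rate_kbit for m in active)
    return bw, max_bw


both_up = [
    Member("TenGigE0/0/0/0", 2500000, 10000000, True),
    Member("TenGigE0/0/0/1", 2500000, 10000000, True),
]
one_up = [
    Member("TenGigE0/0/0/0", 2500000, 10000000, True),
    Member("TenGigE0/0/0/1", 2500000, 10000000, False),
]

print(bundle_bw(both_up))  # (5000000, 20000000)
print(bundle_bw(one_up))   # (2500000, 10000000)
```

Both results match the BW and Max values in the outputs the poster would like to see.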


Hi,

Can somebody explain what exactly "HASH result Shifting" does? In my case, I have a P router with a bundle interface to another router, and I see unequal load across the two members. The traffic is MPLS carrying IP, so I assume "Source IP, Destination IP, Source port (TCP/UDP only), Destination port (TCP/UDP only), Router ID" is used for hashing. Apparently, all my flows produce similar hash results. Could shifting help in my case?

Cheers

Michael
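One common reason to shift a hash result is to avoid hash polarization, which a short Python sketch can illustrate. The sketch assumes that shifting means taking the member-selection bits from a different position in the hash result. CRC32 from Python's zlib stands in for the real hash and is NOT the ASR9000 algorithm; the flows, the two-member bundles, and the shift of 1 are all hypothetical. When an upstream router and a downstream router use the same hash and the same bits, every flow the downstream router receives on a link already shares those bits, so all of them select the same member.

```python
import zlib
from typing import List, Set


def flow_hash(src_ip: str, dst_ip: str, dst_port: int, src_port: int) -> int:
    """Stand-in 32-bit flow hash (CRC32), NOT the hash used by the ASR9000."""
    key = f"{src_ip}|{dst_ip}|{dst_port}|{src_port}".encode()
    return zlib.crc32(key)


def pick_member(h: int, members: int, shift: int = 0) -> int:
    """Choose a member index from the hash after dropping `shift` low-order bits."""
    return (h >> shift) % members


# 1000 hypothetical flows between the same two hosts, differing only in source port.
hashes: List[int] = [flow_hash("10.0.0.1", "10.0.0.2", 80, port) for port in range(1024, 2024)]

# Upstream router: two-member bundle, no shift. Keep the flows it put on member 0;
# these are the flows a downstream router receives on that link.
arriving = [h for h in hashes if pick_member(h, 2) == 0]

# Downstream router with the same hash and the same bits: all flows pick member 0.
no_shift: Set[int] = {pick_member(h, 2) for h in arriving}

# Downstream router using shifted bits: the same flows spread over both members.
with_shift: Set[int] = {pick_member(h, 2, shift=1) for h in arriving}

print(len(arriving), sorted(no_shift), sorted(with_shift))
```

Here `no_shift` contains only member 0, while `with_shift` contains both members. Whether shifting helps on a single P router depends on why its flows produce similar hash results, which this sketch does not model.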


Hashing updates:

- Starting with release 6.4.2, when a Layer 2 interface (EFP) receives MPLS-encapsulated IP packets and hashing is configured for src-dest-ip, the hashing algorithm picks up the IP addresses from the ingress packet to create the hash. Before 6.4.2, the hash was based on MAC addresses.

- Starting with XR 6.5, Layer 2 interfaces receiving GTP-encapsulated packets automatically pick up the TEID to generate the hash when src-dest-ip is configured.

I wish Layer 2 interfaces receiving PPPoE-encapsulated packets would likewise pick up the Session_ID to generate the hash when src-dest-ip is configured.
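The release behavior listed above can be restated as a small lookup in Python. This is a minimal sketch that encodes only what the comment states for a Layer 2 interface (EFP) configured for src-dest-ip. The function name, the payload labels, and the field names are hypothetical, and the function returns None wherever the comment is silent (for example GTP before 6.5, or PPPoE, which is only a wish).

```python
from typing import Optional, Tuple

Release = Tuple[int, ...]  # e.g. (6, 4, 2) for XR 6.4.2


def l2_hash_fields(payload: str, release: Release) -> Optional[Tuple[str, ...]]:
    """Fields used for the hash on an EFP configured for src-dest-ip.

    Encodes only what the comment states; returns None where it is silent.
    """
    if payload == "mpls-ip":
        # 6.4.2 and later: IP addresses are taken from the MPLS-encapsulated packet.
        # Before 6.4.2: the hash was based on MAC addresses.
        if release >= (6, 4, 2):
            return ("src_ip", "dst_ip")
        return ("src_mac", "dst_mac")
    if payload == "gtp":
        # XR 6.5 and later: the GTP TEID is picked up automatically.
        # The comment does not say whether IP addresses are also included.
        return ("gtp_teid",) if release >= (6, 5) else None
    # PPPoE Session_ID hashing is only a wish; other payloads are not covered.
    return None


print(l2_hash_fields("mpls-ip", (6, 4, 2)))  # ('src_ip', 'dst_ip')
print(l2_hash_fields("mpls-ip", (6, 3, 3)))  # ('src_mac', 'dst_mac')
print(l2_hash_fields("gtp", (6, 5)))         # ('gtp_teid',)
```

Releases are compared as integer tuples, so (6, 5) correctly sorts after (6, 4, 2).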


Thanks Xander!

Is it possible to load-balance an L2TPv3 tunnel based on the session ID? If not, is it on the roadmap? I'm working on a remote-phy implementation, and L2TPv3 tunnels are a problem for load balancing on the CIN network (ASR9K). Maybe adding UDP headers to the packets could solve the problem. The same problem exists with multicast, since the remote-phy specification only permits up to 16 multicast groups.
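The poster's idea that a UDP header could help can be illustrated with a short Python sketch. It assumes a hash that only looks at IP addresses, the IP protocol, and TCP/UDP ports, with CRC32 from Python's zlib as a stand-in (NOT the ASR9000 hash). L2TPv3 carried directly over IP uses IP protocol 115 and has no ports, so all sessions between the same two endpoints produce one hash value. The UDP variant assumes a hypothetical encapsulation in which the source port is derived from the session ID and the destination port is 1701, the registered L2TP port; the addresses, session count, and bundle size are also hypothetical.

```python
import zlib
from typing import Set

L2TPV3_OVER_IP = 115  # IP protocol number for L2TPv3 carried directly over IP
UDP = 17
L2TP_UDP_PORT = 1701


def tuple_hash(src_ip: str, dst_ip: str, proto: int, dst_port: int = 0, src_port: int = 0) -> int:
    """Stand-in hash over addresses, protocol and ports (CRC32, NOT the ASR9000 hash)."""
    key = f"{src_ip}|{dst_ip}|{proto}|{dst_port}|{src_port}".encode()
    return zlib.crc32(key)


SESSIONS = range(1, 17)  # 16 hypothetical L2TPv3 sessions between the same endpoints
MEMBERS = 4              # hypothetical number of bundle members

# Over IP: the session ID is not among the hashed fields, so every session hashes alike.
over_ip: Set[int] = {tuple_hash("192.0.2.1", "198.51.100.1", L2TPV3_OVER_IP) for _ in SESSIONS}

# Over UDP with a per-session source port: each session gets its own hash value.
over_udp: Set[int] = {
    tuple_hash("192.0.2.1", "198.51.100.1", UDP, L2TP_UDP_PORT, 49152 + sid) for sid in SESSIONS
}

members_used = {h % MEMBERS for h in over_udp}
print(len(over_ip), len(over_udp), sorted(members_used))
```

Whether an ASR9K can hash on the L2TPv3 session ID itself is the poster's open question; the sketch only shows why extra header entropy, such as a varying UDP source port, gives a port-based hash something to spread on.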


Hi, how can I check which member link a specific flow takes? I have 6 fibers in a bundle on an ASR9k running IOS-XR, but when I look at exact-route, the output shows me the bundle interface and not the physical link within that bundle...

RP/0/RP0/CPU0:<router hostname>#sho cef vrf AAA exact-route 10.10.10.10 20.20.20.20 internal

Tue Nov 12 10:48:03.943 CET

10.10.10.0/26, version 138848, attached, connected, glean adjacency, internal 0x3000061 0x0 (ptr 0x9781729c) [1], 0x400 (0x786876d8), 0x0 (0x0)

Updated Nov 5 01:37:54.095

Prefix Len 26, traffic index 0, precedence n/a, priority 0

via Bundle-Ether3.3483

via Bundle-Ether3.3483, 4 dependencies, weight 0, class 0 [flags 0x8]

path-idx 0 NHID 0x0 [0x7833aec8 0x0]

glean adjacency
