ASR9000/XR: Understanding QOS, default marking behavior and troubleshooting

03-07-2011 01:43 PM - edited 12-18-2018 05:19 AM

Introduction

This document provides details on how QoS is implemented in the ASR9000 and how to interpret and troubleshoot QoS-related issues.

Core Issue

QoS is always a complex topic, and with this article I'll try to describe the QoS architecture and provide some tips for troubleshooting.

Based on feedback on this document, I'll keep enhancing it to document more things.

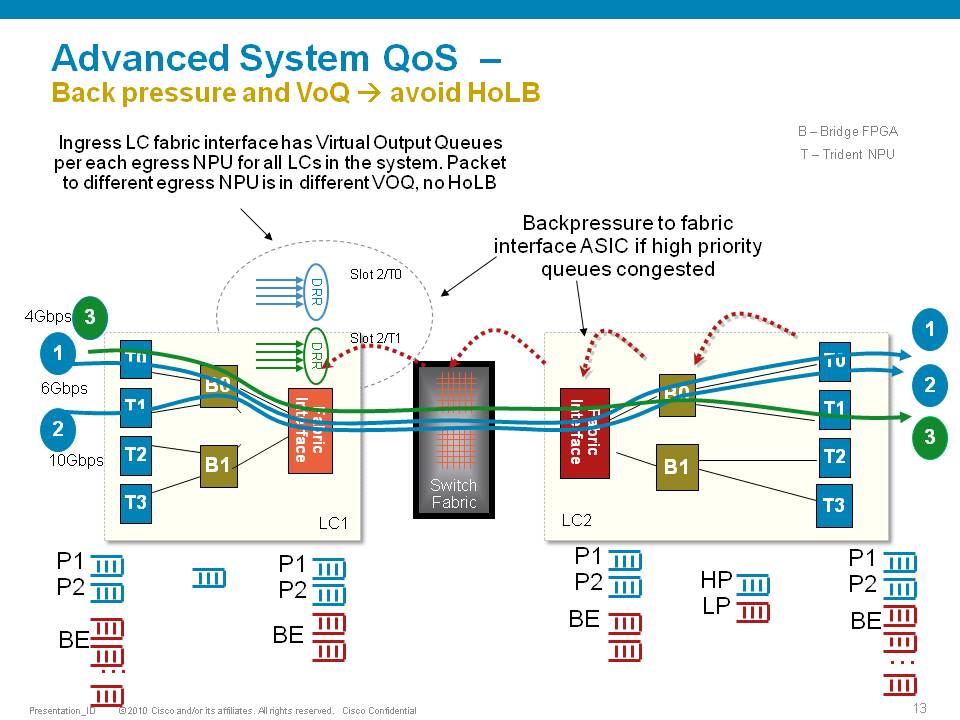

The ASR9000 employs an end-to-end QoS architecture throughout the whole system, meaning that priority is propagated throughout the system's forwarding ASICs. This is done via backpressure between the different forwarding ASICs.

One key aspect of the A9K's QoS implementation is the concept of VOQs (virtual output queues). Each network processor, or in fact every 10G entity in the system, is represented in the Fabric Interfacing ASIC (FIA) by a VOQ on each linecard.

That means in a fully loaded system with, say, 24x10G linecards in 8 slots, each linecard having 8 NPUs and 4 FIAs, a total of 192 VOQs (24 x 10G entities times 8 slots) are represented at each FIA of each linecard.
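The count above can be reproduced with a few lines of arithmetic. This is only a sketch, assuming one VOQ per 10G entity and identical linecards in every slot; the function name `voqs_per_fia` is illustrative and not a platform API.

```python
def voqs_per_fia(slots: int, ten_gig_entities_per_card: int) -> int:
    """VOQs held at each FIA: one per 10G entity in the whole system (assumption)."""
    return slots * ten_gig_entities_per_card


# 8 slots, each populated with a 24x10G linecard, as in the example above
print(voqs_per_fia(slots=8, ten_gig_entities_per_card=24))  # 192
```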

The VOQs have 4 different priority levels: Priority 1, Priority 2, Default priority and multicast.

The priority level is carried in the packet's fabric headers (internal headers) and can be set via QoS policy-maps (MQC; modular QoS configuration).

When you define a policy-map and apply it to a (sub)interface, and in that policy-map certain traffic is marked as priority level 1 or 2, the fabric headers will reflect that as well, so that this traffic is put in the higher priority queues of the forwarding ASICs as it traverses the FIA and fabric components.

If you don't apply any QoS configuration, all traffic is considered to be "default" in the fabric queues. In order to leverage the strength of the ASR9000's ASIC priority levels, you will need to configure (ingress) QoS at the ports to apply the desired priority level.

In this example T0 and T1 are receiving a total of 16G of traffic destined for T0 on the egress linecard. For a 10G port that is obviously too much.

The egress T0 will flow off (backpressure) some of the traffic, depending on the queue, and this is eventually signaled back to the ingress linecard. T0 on the ingress linecard also has some traffic for T1 on the egress LC (green); this traffic is not affected and continues to be sent to the destination port.

Resolution

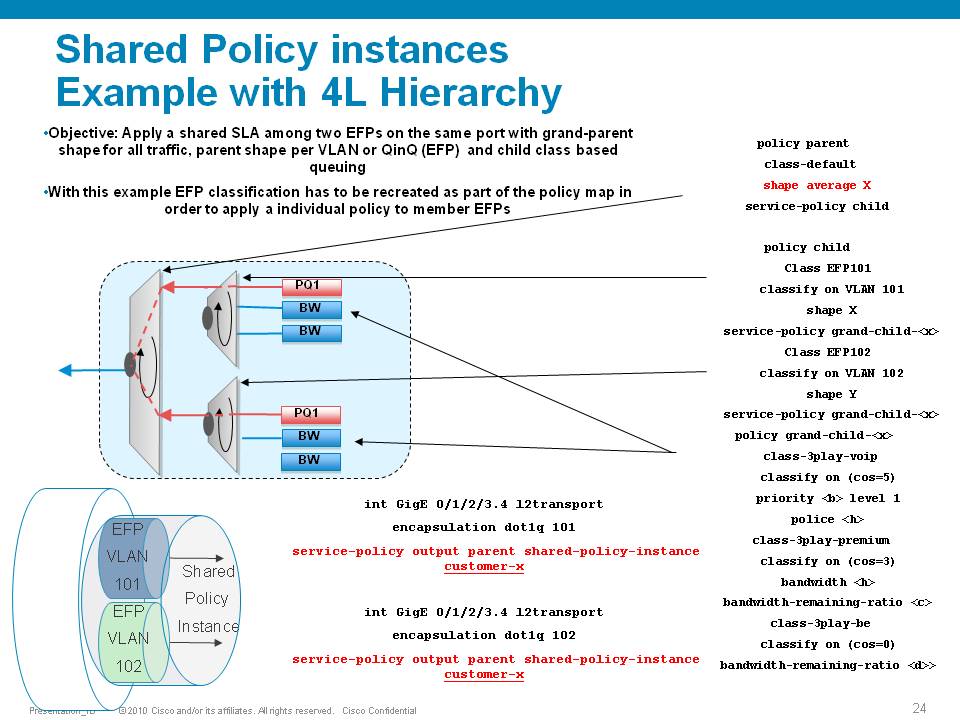

The ASR9000 supports 4 levels of QoS; a sample configuration and implementation detail are presented in this picture:

Policer has exceed drops and does not reach the configured rate

Set Bc = CIR (bps) * (1 byte / 8 bits) * 1.5 seconds

and

Be = 2 x Bc

Default burst values are not optimal.

To visualize the problem: say you are allowing 1 pps, and for one second you don't send anything, but in the next second you want to send 2 packets. Without enough burst allowance, you'll see an exceed in that second.
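As a worked example of the burst formula above, the following sketch computes Bc and Be in bytes from a CIR in bits per second. The function name and the 100 Mbps input are illustrative assumptions, not values from any particular configuration.

```python
from typing import Tuple


def recommended_bursts(cir_bps: int) -> Tuple[int, int]:
    """Return (Bc, Be) in bytes for a policer with the given CIR in bits per second."""
    bc_bytes = cir_bps * 3 // 16  # CIR / 8 bits per byte * 1.5 seconds
    be_bytes = 2 * bc_bytes       # Be = 2 x Bc
    return bc_bytes, be_bytes


bc, be = recommended_bursts(100_000_000)  # 100 Mbps CIR, an arbitrary example value
print(bc, be)  # 18750000 37500000
```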

Alternatively, Bc and Be can be configured in time units, e.g.:

policy-map OUT
 class EF
  police rate percent 25 burst 250 ms peak-burst 500 ms

To view the Bc and Be applied in hardware, run "show qos interface <interface> [input|output]".
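To get a feel for what a time-based burst means in bytes, the sketch below assumes the burst is simply the police rate multiplied by the configured time, and assumes a 1G interface for the "percent 25" rate. Both are assumptions for illustration; the value actually programmed in hardware may be rounded, so the show qos interface output remains the reference.

```python
def burst_bytes(rate_bps: int, burst_ms: int) -> int:
    """Bytes that can be sent at rate_bps during burst_ms milliseconds."""
    return rate_bps * burst_ms // (8 * 1000)


link_bps = 1_000_000_000            # assumed 1G interface
rate_bps = link_bps * 25 // 100     # police rate percent 25
print(burst_bytes(rate_bps, 250))   # burst 250 ms      -> 7812500
print(burst_bytes(rate_bps, 500))   # peak-burst 500 ms -> 15625000
```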

Why do I see non-zero values for Queue(conform) and Queue(exceed) in show policy-map commands?

On the ASR9k, every HW queue has a configured CIR and PIR value. These correspond to the "guaranteed" bandwidth for the queue, and the "maximum" bandwidth (aka shape rate) for the queue.

In some cases the user-defined QoS policy does NOT explicitly use both of these. However, depending on the exact QoS config, the queueing hardware may require some nonzero value for these fields. In that case the system chooses a default value for the queue CIR. The "conform" counter in show policy-map is the number of packets/bytes that were transmitted within this CIR value, and the "exceed" counter is the number of packets/bytes that were transmitted above the CIR but within the PIR value.

Note that "exceed" in this case does NOT equate to a packet drop, but rather a packet that is above the CIR rate on that queue.

You could change this behavior by explicitly configuring a bandwidth and/or a shape rate on each queue, but in general it's just easier to recognize that these counters don't apply to your specific situation and ignore them.

What is counted in QOS policers and shapers?

When we define a shaper in a QoS policy-map, the shaper takes the L2 header into consideration.

A shape rate of, say, 1 Mbps means that without dot1q or QinQ tags I can technically send more IP traffic than with QinQ, which has more L2 overhead. The same applies when I define a bandwidth statement in a class: L2 is also taken into consideration.

When defining a policer, it looks at L2 also.

On ingress, for both policer and shaper, we use the incoming packet size (including the L2 header).

To account for the L2 header in the ingress shaper case, we have to use a TM overhead accounting feature that only lets us add overhead in 4-byte granularity, which can cause a little inaccuracy.

On egress, for both policer and shaper, we use the outgoing packet size (including the L2 header).

The ASR9K policer implementation supports 64 kbps granularity. When the specified rate is not a multiple of 64 kbps, the rate is rounded down to the next lower multiple of 64 kbps.
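The rounding rule can be illustrated as follows. This is a sketch of the arithmetic only; the helper name `programmed_policer_rate` is hypothetical and the example rates are arbitrary.

```python
POLICER_GRANULARITY_KBPS = 64


def programmed_policer_rate(configured_kbps: int) -> int:
    """Round a configured policer rate down to a multiple of 64 kbps."""
    return (configured_kbps // POLICER_GRANULARITY_KBPS) * POLICER_GRANULARITY_KBPS


for kbps in (128, 1000, 2048):
    print(kbps, "->", programmed_policer_rate(kbps))
# 128 -> 128
# 1000 -> 960
# 2048 -> 2048
```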

For the police, shape and bandwidth commands, in both the ingress and egress direction, the following fields are included in the accounting:

| MAC DA | MAC SA | EtherType | VLANs.. | L3 headers/payload | CRC |
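To see how the accounted fields affect the usable IP rate, the sketch below adds up the standard Ethernet field sizes (6-byte MAC addresses, 2-byte EtherType, 4 bytes per VLAN tag, 4-byte CRC) and compares untagged, dot1q and QinQ frames under a 1 Mbps shaper. The function names and the 1500-byte L3 packet size are assumptions chosen for illustration.

```python
ETH_HEADER_BYTES = 6 + 6 + 2  # MAC DA + MAC SA + EtherType
VLAN_TAG_BYTES = 4            # per 802.1Q / 802.1ad tag
CRC_BYTES = 4


def accounted_bytes(l3_bytes: int, vlan_tags: int = 0) -> int:
    """Bytes counted per frame: L2 header, VLAN tags, L3 packet and CRC."""
    return ETH_HEADER_BYTES + vlan_tags * VLAN_TAG_BYTES + l3_bytes + CRC_BYTES


def l3_throughput_bps(shape_bps: int, l3_bytes: int, vlan_tags: int = 0) -> float:
    """Share of the shape rate left for L3 traffic at a fixed packet size."""
    return shape_bps * l3_bytes / accounted_bytes(l3_bytes, vlan_tags)


for tags, label in ((0, "untagged"), (1, "dot1q"), (2, "QinQ")):
    size = accounted_bytes(1500, tags)
    rate = l3_throughput_bps(1_000_000, 1500, tags)
    print(f"{label}: {size} bytes accounted, about {rate:.0f} bps of IP traffic")
```

The preamble, start of frame delimiter and inter-packet gap are deliberately left out, since they are not among the accounted fields listed above.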

Port level shaping

A shaping action requires a queue on which the shaping is applied. This queue must be created by a child-level policy. Typically the shaper is applied at the parent or grandparent level, to allow for differentiation between traffic classes within the shaper. If there is a need to apply a flat port-level shaper, a child policy should be configured with 100% bandwidth explicitly allocated to class-default.

Understanding show policy-map counters

QOS counters and show interface drops:

Policer counts are directly against the (sub)interface and will get reported in the "show interface" drops count.

The drop counts you see are an aggregate of what the NP has dropped (in most cases) as well as policer drops.

Packets that get dropped before the policer is aware of them are not accounted for by the policy-map policer drops, but may show under the show interface drops and can be seen via the show controllers np count command.

Policy-map queue drops are not reported on the subinterface drop counts. The reason is that subinterfaces may share queues with each other or with the main interface, and therefore we don't have subinterface granularity for queue-related drops.

The counters below come from the show policy-map interface command:

| Description | Output line |
| --- | --- |
| Class name as per configuration | Class precedence6 |
| Statistics for this class | Classification statistics (packets/bytes) (rate - kbps) |
| Packets that were matched | Matched : 31583572/2021348608 764652 |
| Packets that were sent to the wire | Transmitted : Un-determined |
| Packets that were dropped for any reason in this class | Total Dropped : Un-determined |
| Policing stats | Policing statistics (packets/bytes) (rate - kbps) |
| Packets that were below the CIR rate | Policed(conform) : 31583572/2021348608 764652 |
| Packets that fell into the 2nd bucket above CIR but < PIR | Policed(exceed) : 0/0 0 |
| Packets that fell into the 3rd bucket above PIR | Policed(violate) : 0/0 0 |
| Total packets that the policer dropped | Policed and dropped : 0/0 |
| Statistics for queueing | Queueing statistics |
| Internal unique queue reference | Queue ID : 136 |
| How many packets were queued/held at most at one time (value not supported by HW) | High watermark (Unknown) |
| Number of 512-byte particles which are currently waiting in the queue | Inst-queue-len (packets) : 4096 |
| How many packets on average we have to buffer (value not supported by HW) | Avg-queue-len (Unknown) |
| Packets that could not be buffered because we held more than the max length | Taildropped(packets/bytes) : 31581615/2021223360 |
| See description above (queue exceed section) | Queue(conform) : 31581358/2021206912 764652 |
| See description above (queue exceed section) | Queue(exceed) : 0/0 0 |
| Packets that were subject to Random Early Detection and were dropped | RED random drops(packets/bytes) : 0/0 |

Understanding the hardware qos output

RP/0/RSP0/CPU0:A9K-TOP#show qos interface g0/0/0/0 output

This command verifies the actual hardware programming of the QoS policy on the interface (the output below is not related to the previous example).

Tue Mar 8 16:46:21.167 UTC

Interface: GigabitEthernet0_0_0_0 output

Bandwidth configured: 1000000 kbps Bandwidth programed: 1000000

ANCP user configured: 0 kbps ANCP programed in HW: 0 kbps

Port Shaper programed in HW: 0 kbps

Policy: Egress102 Total number of classes: 2

----------------------------------------------------------------------

Level: 0 Policy: Egress102 Class: Qos-Group7

QueueID: 2 (Port Default)

Policer Profile: 31 (Single)

Conform: 100000 kbps (10 percent) Burst: 1248460 bytes (0 Default)

Child Policer Conform: TX

Child Policer Exceed: DROP

Child Policer Violate: DROP

----------------------------------------------------------------------

Level: 0 Policy: Egress102 Class: class-default

QueueID: 2 (Port Default)

----------------------------------------------------------------------

Default Marking behavior of the ASR9000

If you don't configure any service policies for QoS, the ASR9000 sets an internal CoS value based on the IP precedence, the 802.1p priority field or the MPLS EXP bits.

Depending on the routing or switching scenario, this internal CoS value is used to mark newly imposed headers on egress.

Scenario 1

Scenario 2

Scenario 3

Scenario 4

Scenario 5

Scenario 6

Special consideration:

If the node is L3 forwarding, then there is no L2 CoS propagation or preservation as the L2 domain stops at the incoming interface and restarts at the outgoing interface.

Default marking PHB on L3 retains no L2 CoS information even if the incoming interface happened to be an 802.1q or 802.1ad/q-in-q sub interface.

CoS may appear to be propagated, if the corresponding L3 field (prec/dscp) used for default marking matches the incoming CoS value and so, is used as is for imposed L2 headers at egress.

If the node is L2 switching, then the incoming L2 header will be preserved unless the node has ingress or egress rewrites configured on the EFPs.

If an L2 rewrite results in new header imposition, then the default marking derived from the 3-bit PCP (as specified in 802.1p) on the incoming EFP is used to mark the new headers.

An exception to the above is that the DEI bit value from incoming 802.1ad / 802.1ah headers is propagated to imposed or topmost 802.1ad / 802.1ah headers for both L3 and L2 forwarding.

Related Information

ASR9000 Quality of Service configuration guide

Xander Thuijs, CCIE #6775

Hi

I would like to ask about the policer on L2 interfaces (E-LINE).

When I configure x bytes in the policer, what exactly will be counted?

- L3 header + data

- L2 header + L3 header + data (what about the VLAN tag on a trunk?)

- L2 header + L3 header + data + (the 20 bytes from the inter-frame gap and preamble)

I cannot find any good source to check this.

Any ideas?

Hi Konrad, see the above document section "What is counted in QOS policers and shapers?"

Thanks a lot

Just to be sure

I understand that the preamble, start of frame delimiter and interpacket gap are not counted by the policer?

Konrad

That's correct, Preamble, Start of frame delimiter and IPG are NOT counted.

The following fields are counted:

MAC DA | MAC SA | EtherType | VLANs.. | L3 headers/payload | CRC |

Eddie.

Konrad,

If you would like some additional overhead to be counted (for example the 12-byte IFG and the 8-byte preamble/SFD), then you can apply the policy as below:

interface x/y/z

service-policy input/output <policy> user-defined <number of bytes>

With <number of bytes> set to 20, the above adds 20 bytes per packet to the calculations.

Thanks,

-Phadke

Hi Xander,

We have an egress policy-map towards the remote PE with RED enabled on class-default on our PE. Given that the egress traffic gets MPLS labels (like a pseudowire) and only labelled traffic with an EXP value is visible to the router (we set EXP to zero manually), could UDP packets get affected too? Or is the router able to look further into the packet to find something about the flow (like the IP protocol number for TCP)? Also, is the command below valid to increase the thresholds in my scenario? (We have a bundle interface comprising 2 x 1G, so I am expecting the configuration to be repeated on each member.)

random-detect exp 0 800mb 900mb.

Thanks

MEH-D

hi MEH-D,

QoS on bundles is replicated to the members, correct.

It is also true that WRED serves TCP much better, since TCP is "congestion aware". UDP by itself doesn't have the retransmit capability or loss detection that TCP does.

So while WRED is by nature not L4 aware, only prec/dscp aware, you can reduce WRED drops in some cases by adjusting the prec/dscp, or the exp in this case.

So have your voice, for instance, marked with the right exp/prec so that WRED doesn't consider it for dropping.

xander

Hi Alexander,

Let me reword my problem, as the issue was apparently not with WRED. We were having egress queue drops in class-default on a 1 Gig link, even though RED/WRED had been removed from class-default, where all our customer traffic falls. However, the traffic was at almost 300 Mbps in total during peak time. We then changed the queue-limit to 8000 kbytes for class-default and the issue has disappeared since then. Is it right to say the queue should only start filling up when we get close to 1 Gbps of traffic? Why did the queue start filling up already? Could it be that our customer traffic is so bursty? Could changing the queue size to a large value also cause traffic delay in our case, where we only do 300 Mbps on the link?

The default value of the queue-limit was initially 66 kbytes, as per the output below.

Level: 0 Policy: MPLS_OUTPUT_QOS Class: class-default

QueueID: 65614 (Priority Normal)

Queue Limit: 66 kbytes Abs-Index: 19 Template: 7 Curve: 4

Shape CIR Profile: INVALID

WFQ Profile: 1/19 Committed Weight: 20 Excess Weight: 20

Bandwidth: 0 kbps, BW sum for Level 0: 0 kbps, Excess Ratio: 10

WRED Type: RED Default Table: 0 Curves: 1

Default RED Curve Profile: 19/7/4 Thresholds Min : 32 (31) kbytes Max: 66 (62) kbytes

we applied the command below and the issue was fixed.

policy-map MPLS_OUTPUT_QOS

class class-default

queue-limit 8000 kbytes

Thanks,

MEH-D

Hi MEH-D,

On the asr9k, the default queue limit is always set to 100 ms of the visible bandwidth of the class. Google the session BRKSPG-2904 from this year's Cisco Live in San Diego and read the "Queue Limits/Occupancy" section for more information on what the visible bandwidth of a class is.

If your solution requires a longer queue, you can change it manually, but you should pay attention to some aspects mentioned in the same presentation.

hth,

Aleksandar

Thanks Aleksandar for your quick response. I went through the slides.

We know that when defining WFQ without shaping or policing, bandwidth gets allocated dynamically to classes, each of which can go above its limit if bandwidth is available.

However, we are hindered here by the queue-limit not being adjusted dynamically too. Don't you think that is a big issue? In my case I am getting drops on a 1G physical link even though the actual traffic is only about 300M.

Do you have any recommendation, or do I just need to keep bumping up the queue-limit as traffic grows in that class? The slides didn't say anything about potential issues with increasing the queue-limit.

Thanks,

MEH-D

Hi MEH-D,

The 66 kbyte queue limit seems rather low for a 1G interface. Can you share the configuration of the interface, the original config of the policy-map and the 'sh qos interface <interface> out'?

Aleksandar

Hi Aleksandar,

Original configuration before applying "queue-limit 8000 kbytes":

policy-map MPLS_OUTPUT_QOS

class exp5

priority level 1

!

class exp3

bandwidth remaining percent 30

random-detect default

!

class exp2

bandwidth remaining percent 15

random-detect default

!

class exp1

bandwidth remaining percent 5

random-detect default

!

class exp4

bandwidth remaining percent 40

random-detect default

!

class class-default

random-detect default

!

end-policy-map

!

—————

interface Bundle-Ether3

mtu 9216

service-policy output MPLS_OUTPUT_QOS

ipv4 mtu 9142

ipv4 address 10.196.4.5 255.255.255.252

lacp system mac 0001.0001.0003

lacp switchover suppress-flaps 15000

mpls

mtu 9162

!

mac-address a80c.d29.d682

load-interval 30

--- Current output with "queue-limit 8000 kbytes" applied ---

show qos interface bundle-Ether 3 output member gigabitEthernet 100/0/0/0

Interface: GigabitEthernet100_0_0_0 output

Bandwidth configured: 1000000 kbps Bandwidth programed: 1000000 kbps

ANCP user configured: 0 kbps ANCP programed in HW: 0 kbps

Port Shaper programed in HW: 0 kbps

Policy: MPLS_OUTPUT_QOS Total number of classes: 6

----------------------------------------------------------------------

Level: 0 Policy: MPLS_OUTPUT_QOS Class: exp5

QueueID: 65608 (Priority 1)

Queue Limit: 12224 kbytes Abs-Index: 126 Template: 0 Curve: 6

Shape CIR Profile: INVALID

----------------------------------------------------------------------

Level: 0 Policy: MPLS_OUTPUT_QOS Class: exp3

QueueID: 65610 (Priority Normal)

Queue Limit: 190 kbytes Abs-Index: 36 Template: 7 Curve: 4

Shape CIR Profile: INVALID

WFQ Profile: 1/59 Committed Weight: 60 Excess Weight: 60

Bandwidth: 0 kbps, BW sum for Level 0: 0 kbps, Excess Ratio: 30

WRED Type: RED Default Table: 0 Curves: 1

Default RED Curve Profile: 36/7/4 Thresholds Min : 94 (93) kbytes Max: 190 (187) kbytes

----------------------------------------------------------------------

Level: 0 Policy: MPLS_OUTPUT_QOS Class: exp2

QueueID: 65611 (Priority Normal)

Queue Limit: 96 kbytes Abs-Index: 24 Template: 7 Curve: 4

Shape CIR Profile: INVALID

WFQ Profile: 1/29 Committed Weight: 30 Excess Weight: 30

Bandwidth: 0 kbps, BW sum for Level 0: 0 kbps, Excess Ratio: 15

WRED Type: RED Default Table: 0 Curves: 1

Default RED Curve Profile: 24/7/4 Thresholds Min : 47 (46) kbytes Max: 96 (93) kbytes

----------------------------------------------------------------------

Level: 0 Policy: MPLS_OUTPUT_QOS Class: exp1

QueueID: 65612 (Priority Normal)

Queue Limit: 32 kbytes Abs-Index: 12 Template: 7 Curve: 4

Shape CIR Profile: INVALID

WFQ Profile: 1/9 Committed Weight: 10 Excess Weight: 10

Bandwidth: 0 kbps, BW sum for Level 0: 0 kbps, Excess Ratio: 5

WRED Type: RED Default Table: 0 Curves: 1

Default RED Curve Profile: 12/7/4 Thresholds Min : 15 (15) kbytes Max: 32 (31) kbytes

----------------------------------------------------------------------

Level: 0 Policy: MPLS_OUTPUT_QOS Class: exp4

QueueID: 65613 (Priority Normal)

Queue Limit: 254 kbytes Abs-Index: 41 Template: 7 Curve: 4

Shape CIR Profile: INVALID

WFQ Profile: 1/71 Committed Weight: 81 Excess Weight: 81

Bandwidth: 0 kbps, BW sum for Level 0: 0 kbps, Excess Ratio: 40

WRED Type: RED Default Table: 0 Curves: 1

Default RED Curve Profile: 41/7/4 Thresholds Min : 126 (125) kbytes Max: 254 (250) kbytes

----------------------------------------------------------------------

Level: 0 Policy: MPLS_OUTPUT_QOS Class: class-default

QueueID: 65614 (Priority Normal)

Queue Limit: 8128 kbytes (8000 kbytes) Abs-Index: 116 Template: 0 Curve: 0

Shape CIR Profile: INVALID

WFQ Profile: 1/19 Committed Weight: 20 Excess Weight: 20

Bandwidth: 0 kbps, BW sum for Level 0: 0 kbps, Excess Ratio: 10

----------------------------------------------------------------------

Thanks,

MEH-D

Hi MEH-D,

When a class is designated as a priority class and doesn't have a policer configured, we set the visible BW of that class to 95% of the physical interface BW (that's why the priority level 1 queue used by class exp5 was allocated 12224 kbytes). The sum of the visible BW for all other classes was 5% of the physical interface BW. This is why they all got such small queues by default. Our recommendation is to always configure a policer in a priority class. If 95% of the traffic is high priority, then nothing is high priority, as they say.
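As a rough check of these numbers, the sketch below applies the 100 ms rule to the policy shown earlier in this thread. It assumes that the remaining 5% is split between the non-priority classes in proportion to their Excess Ratio and that 1 kbyte is 1000 bytes; both are assumptions made for illustration, and the helper name is hypothetical.

```python
def queue_limit_kbytes(visible_bw_bps: int, ms: int = 100) -> int:
    """Queue limit as `ms` milliseconds of visible bandwidth, in kbytes (1000 bytes)."""
    return visible_bw_bps * ms // (8 * 1000) // 1000


link_bps = 1_000_000_000                 # 1G bundle member
priority_bw = link_bps * 95 // 100       # unpoliced priority class sees 95%
remaining_bw = link_bps - priority_bw    # 5% left for all other classes

# Excess Ratio values taken from the show qos interface output in this thread
excess_ratio = {"exp3": 30, "exp2": 15, "exp1": 5, "exp4": 40, "class-default": 10}
total_ratio = sum(excess_ratio.values())

print("exp5", queue_limit_kbytes(priority_bw))
for name, ratio in excess_ratio.items():
    print(name, queue_limit_kbytes(remaining_bw * ratio // total_ratio))
```

Under these assumptions the non-priority results (187, 93, 31, 250 and 62 kbytes) line up with the values shown in parentheses in the RED thresholds of the outputs above, which suggests that the hardware then rounds them up to the programmed sizes (190, 96, 32, 254 and 66 kbytes).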

hth,

Aleksandar

Thanks- your response helped a lot.

Hi Xander ,

Many thanks for the docs.

What about ingress (untagged, exp 4, ipp 1) going to egress dot1q (routed IP only), similar to scenario 6? What I'm seeing in the lab is that the internal cos = 4 (based on exp) is not written into the dot1q header of the outgoing packet. Instead IPP is used.

I was expecting the dot1q cos value to match the internal one for the dot1q interfaces.

Regards,

Catalin
