
11-20-2018 11:34 PM - edited 03-01-2019 02:01 PM

Symptoms

When the services part of a VSM fails, the failure is not propagated to ABF. As a result, traffic is black-holed instead of being switched to the backup VSM, if one exists.

Solution

Track the reachability between the ingress ServiceApp interface and the egress traffic port using IPSLA, and trigger the ABF switchover when this reachability breaks.

Details

Consider a router with two VSM cards, VSM1 and VSM2, with CGN services running on both of them. An ABF is applied on the ingress port, with the first next hop pointing to the ingress ServiceApp on VSM1 and the second next hop pointing to the ingress ServiceApp on VSM2. Whenever next hop 1 becomes unreachable, ABF starts diverting the traffic to the ServiceApp on VSM2, so black-holing of traffic is avoided.

Each VSM has a services part and an infrastructure (infra) part. In some situations, a failure of the services part of the VSM is not propagated to the infra part, so ABF is not notified of the next-hop failure. ABF therefore does not switch the traffic to next hop 2. Instead, it keeps forwarding traffic to VSM1, where the traffic is dropped because the services part is no longer running to process it.

To mitigate this scenario, one approach is to implement IPSLA to track the path from the ingress ServiceApp to the egress traffic port. IPSLA uses ICMP to probe the path. If the services part fails, this path breaks and IP reachability goes down, so IPSLA detects the failure. The failure is then propagated to ABF, which acts on it by switching the traffic from next hop 1 to next hop 2. IPSLA keeps tracking the path after the failure, and once reachability is restored, ABF switches the traffic back to next hop 1. This mitigates traffic black-holing caused by a services part failure.
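The failover and failback behavior described above can be modeled in a few lines. The Python sketch below is a simplified, hypothetical model (not Cisco code): each ICMP echo result directly sets the track state, and the ABF rule picks nexthop1 while the track is up and nexthop2 otherwise. The VRFs and addresses are copied from ACE 10 of the VNAT1_ABF configuration in this article; track delay timers are deliberately ignored.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class NextHop:
    vrf: str
    address: str


# Next hops copied from ACE 10 of VNAT1_ABF in this article.
NEXTHOP1 = NextHop("SVSM-1", "10.22.241.13")    # primary path into VSM1
NEXTHOP2 = NextHop("SVSM-33", "10.22.241.141")  # backup path into VSM2


def select_next_hop(track_up: bool) -> NextHop:
    """Simplified ABF decision: nexthop1 while its track is up, else nexthop2."""
    return NEXTHOP1 if track_up else NEXTHOP2


def forwarding_timeline(probe_ok: Iterable[bool]) -> List[NextHop]:
    """Map each ICMP echo result (True = reply received) to the chosen next hop.

    Each result updates the track state immediately (no delay up/down),
    and the track state drives the ABF decision.
    """
    return [select_next_hop(ok) for ok in probe_ok]


if __name__ == "__main__":
    # VSM1 services part fails after two probes and recovers two probes later.
    for step, hop in enumerate(forwarding_timeline([True, True, False, False, True])):
        print(step, hop.vrf, hop.address)
```

With these sample results, traffic moves to SVSM-33 while the probes fail and returns to SVSM-1 once they succeed again, matching the switchover and switch-back described above.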

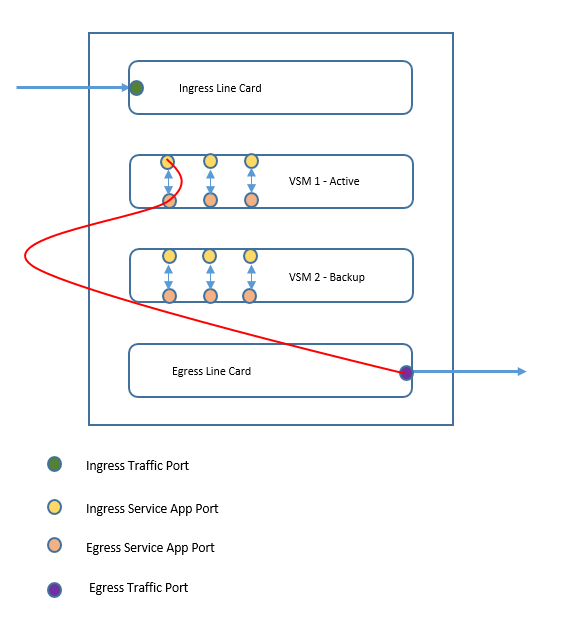

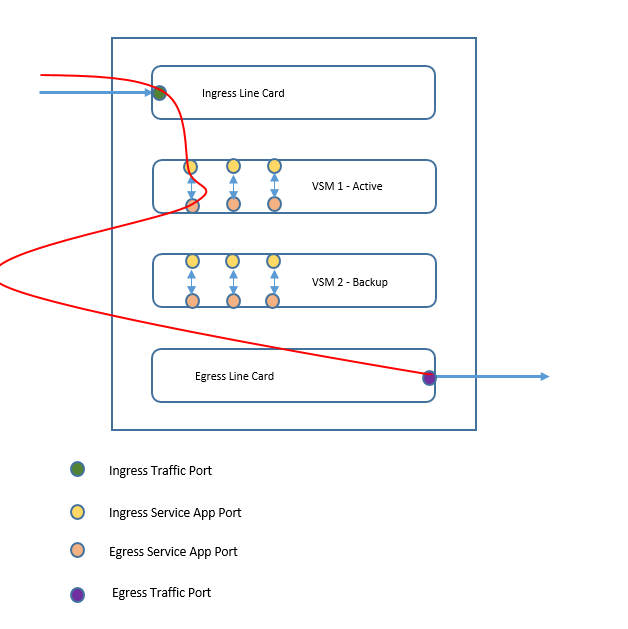

The figures show the packet flow when VSM1 is active and when VSM1 fails, and where IPSLA is triggered. The configuration examples that follow the figures will help you implement the solution without any hiccups.

This solution has been verified on ASR9K routers with VSMs running CGN services. Define one IPSLA track per VSM card, using any one ServiceApp on that card. The same track can then be used by all ABF rules associated with that card.

Note: Please refer to the following Cisco support forum page for a detailed explanation of CGN on VSM with HA scenarios.

IPSLA Tracking Enabled Between the Ingress Service Port and the Egress Traffic Port:

- IPSLA runs between the ingress ServiceApp port of VSM1 and the egress traffic port.

- IPSLA uses ICMP for tracking.

- On failure, ABF is notified, and traffic is switched over according to the defined ABF rule.

Traffic Path When VSM1 Is Active:

- Traffic enters the ingress traffic port.

- Based on the ABF applied on the ingress traffic port, whose next hop is the ingress ServiceApp IP, and because IPSLA reports no failure, traffic enters ServiceApp1 on VSM1.

- Traffic then passes to the egress ServiceApp on VSM1, with NAT applied.

- Traffic then exits through the egress traffic port based on the available routing information.

Example configuration:

Ingress traffic port configuration:

RP/0/RSP0/CPU0:Hulk#sh running-config interface bundle-ether 400.101

interface Bundle-Ether400.101

ipv4 address 10.22.231.2 255.255.255.252

encapsulation dot1q 101

ipv4 access-group VNAT1_ABF ingress

!

Egress traffic port configuration:

interface TenGigE0/3/0/4

ipv4 address 22.1.1.1 255.255.255.0

!

Ingress service App configuration:

RP/0/RSP0/CPU0:Hulk#sh running-config interface serviceApp 1

interface ServiceApp1

vrf SVSM-1

ipv4 address 10.22.241.14 255.255.255.252

load-interval 30

service cgn VSM-1 service-type nat44

!

VRF configuration for ingress service App:

RP/0/RSP0/CPU0:Hulk#sh running-config vrf SVSM-1

vrf SVSM-1

description <<inside vrf1 on VSM-1>>

address-family ipv4 unicast

import route-target

20:1

!

export route-target

20:1

!

!

!

Static routes under the ingress ServiceApp VRF (including the route to the egress traffic port):

RP/0/RSP0/CPU0:Hulk#sh running-config router static vrf SVSM-1

router static

vrf SVSM-1

address-family ipv4 unicast

10.1.1.0/24 vrf default 10.22.231.1

22.1.1.1/32 ServiceApp1

!

!

!
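To see why the 22.1.1.1/32 route matters, the sketch below runs a longest-prefix-match lookup over only the two static routes shown for vrf SVSM-1 (connected routes are omitted). It assumes, as this configuration suggests, that the IPSLA probe sourced in SVSM-1 and destined to 22.1.1.1 is steered into ServiceApp1 and therefore has to cross the VSM services part, so a services failure makes the probe fail. The function and variable names are illustrative only.

```python
import ipaddress
from typing import List, Optional, Tuple

# Static routes under vrf SVSM-1 as shown above (connected routes omitted).
SVSM1_STATIC_ROUTES: List[Tuple[str, str]] = [
    ("10.1.1.0/24", "vrf default 10.22.231.1"),  # toward inside hosts via the global table
    ("22.1.1.1/32", "ServiceApp1"),              # probe destination steered into the VSM
]


def longest_prefix_match(dest: str,
                         routes: List[Tuple[str, str]] = SVSM1_STATIC_ROUTES) -> Optional[str]:
    """Return the next hop of the most specific route covering dest, or None."""
    addr = ipaddress.ip_address(dest)
    best_hop: Optional[str] = None
    best_len = -1
    for prefix, next_hop in routes:
        network = ipaddress.ip_network(prefix)
        if addr in network and network.prefixlen > best_len:
            best_hop, best_len = next_hop, network.prefixlen
    return best_hop


if __name__ == "__main__":
    print(longest_prefix_match("22.1.1.1"))   # ICMP echo target of operation 1000
    print(longest_prefix_match("10.1.1.25"))  # an inside host
```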

IPSLA Configuration:

RP/0/RSP0/CPU0:Hulk#sh running-config ipsla

ipsla

operation 1000

type icmp echo

vrf SVSM-1

source address 10.22.241.14

destination address 22.1.1.1

timeout 2000

frequency 5

!

!

reaction operation 1000

react timeout

action logging

!

!

schedule operation 1000

start-time now

life forever

!

Track configuration:

RP/0/RSP0/CPU0:Hulk#sh running-config track

track TSA1

type rtr 1000 reachability

delay up 5

delay down 5

!
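The `delay up 5` and `delay down 5` settings debounce the track. The Python sketch below models that behavior as it is commonly described for track objects: a state change is reported only after the raw IPSLA reachability has held the new value for the configured delay, and a flap back before the delay expires cancels the pending change. This is a simplified, hypothetical model; exact IOS XR timing may differ, and each sample time is treated as the moment a probe result becomes known.

```python
from typing import List, Optional, Tuple


def debounce(samples: List[Tuple[float, bool]], delay_up: float, delay_down: float,
             end_time: float, initial: bool = True) -> List[Tuple[float, bool]]:
    """Return the (time, state) changes a delayed track would report.

    samples: time-ordered (seconds, raw_reachable) results from the IPSLA operation.
    A change is reported only if the raw state holds the new value for the whole
    delay; flapping back before the delay expires cancels the pending change.
    """
    reported = initial                      # state currently advertised to ABF
    pending_since: Optional[float] = None   # when raw first diverged from reported
    changes: List[Tuple[float, bool]] = []

    def commit_if_due(now: float) -> None:
        nonlocal reported, pending_since
        if pending_since is None:
            return
        target = not reported               # binary state: pending change is the opposite
        delay = delay_up if target else delay_down
        if now >= pending_since + delay:
            reported = target
            changes.append((pending_since + delay, target))
            pending_since = None

    for t, raw in samples:
        commit_if_due(t)                    # a timer may have expired before this sample
        if raw != reported:
            if pending_since is None:
                pending_since = t           # start the delay timer
        else:
            pending_since = None            # raw state flapped back: cancel
    commit_if_due(end_time)
    return changes


if __name__ == "__main__":
    # delay up 5 / delay down 5, probes every 5 s as in operation 1000.
    print(debounce([(0, True), (5, False), (10, False), (15, True), (20, True)], 5, 5, 30))
    print(debounce([(0, True), (5, False), (7, True)], 5, 5, 20))
```

In the first call, reachability is lost at 5 s and reported down at 10 s, then restored at 15 s and reported up at 20 s. In the second call the raw state flaps back at 7 s, before the 5 s down delay expires, so no change is reported.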

ABF configuration (ABF is attached to ingress traffic port):

RP/0/RSP0/CPU0:Hulk#sh running-config ipv4 access-list VNAT1_ABF

ipv4 access-list VNAT1_ABF

10 permit ipv4 10.1.1.0/24 any nexthop1 track TSA1 vrf SVSM-1 ipv4 10.22.241.13 nexthop2 vrf SVSM-33 ipv4 10.22.241.141

20 permit ipv4 10.1.2.0/24 any nexthop1 track TSA1 vrf SVSM-3 ipv4 10.22.241.21 nexthop2 vrf SVSM-35 ipv4 10.22.241.149

30 permit ipv4 10.1.3.0/24 any nexthop1 track TSA1 vrf SVSM-5 ipv4 10.22.241.29 nexthop2 vrf SVSM-37 ipv4 10.22.241.157

40 permit ipv4 10.1.4.0/24 any nexthop1 track TSA1 vrf SVSM-7 ipv4 10.22.241.37 nexthop2 vrf SVSM-39 ipv4 10.22.241.165

50 permit ipv4 10.1.5.0/24 any nexthop1 track TSA1 vrf SVSM-9 ipv4 10.22.241.45 nexthop2 vrf SVSM-41 ipv4 10.22.241.173

60 permit ipv4 10.1.6.0/24 any nexthop1 track TSA1 vrf SVSM-11 ipv4 10.22.241.53 nexthop2 vrf SVSM-43 ipv4 10.22.241.181

70 permit ipv4 10.1.7.0/24 any nexthop1 track TSA1 vrf SVSM-13 ipv4 10.22.241.61 nexthop2 vrf SVSM-45 ipv4 10.22.241.189

80 permit ipv4 10.1.8.0/24 any nexthop1 track TSA1 vrf SVSM-15 ipv4 10.22.241.69 nexthop2 vrf SVSM-47 ipv4 10.22.241.197

1000 permit ipv4 any any

!
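The ACL is evaluated top to bottom and the first matching ACE wins. Every forwarding ACE references the same track, TSA1, which is how one IPSLA track per VSM card can cover all ABF rules associated with that card. The sketch below models that lookup for an abbreviated copy of VNAT1_ABF (ACEs 10 to 30 plus the catch-all 1000). It is a hypothetical illustration: it matches on source prefix only, because every ACE here uses `any` as the destination, and it treats a permit without next hops as normal routing.

```python
import ipaddress
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

NextHop = Tuple[str, str]  # (vrf, ipv4 address)


@dataclass(frozen=True)
class Ace:
    seq: int
    source: str                   # source prefix; destination is "any" in every ACE here
    nexthop1: Optional[NextHop]   # None means plain permit (normal routing)
    track: Optional[str]          # track guarding nexthop1
    nexthop2: Optional[NextHop]


# Abbreviated copy of VNAT1_ABF: ACEs 10-30 and the catch-all 1000.
VNAT1_ABF: List[Ace] = [
    Ace(10, "10.1.1.0/24", ("SVSM-1", "10.22.241.13"), "TSA1", ("SVSM-33", "10.22.241.141")),
    Ace(20, "10.1.2.0/24", ("SVSM-3", "10.22.241.21"), "TSA1", ("SVSM-35", "10.22.241.149")),
    Ace(30, "10.1.3.0/24", ("SVSM-5", "10.22.241.29"), "TSA1", ("SVSM-37", "10.22.241.157")),
    Ace(1000, "0.0.0.0/0", None, None, None),
]


def abf_lookup(src: str, tracks: Dict[str, bool],
               acl: List[Ace] = VNAT1_ABF) -> Tuple[Optional[int], Optional[NextHop]]:
    """Return (matching sequence number, chosen next hop) for a packet from src."""
    addr = ipaddress.ip_address(src)
    for ace in sorted(acl, key=lambda a: a.seq):    # first match wins, lowest seq first
        if addr not in ipaddress.ip_network(ace.source):
            continue
        if ace.nexthop1 is None:
            return ace.seq, None                     # permit without ABF: normal routing
        if ace.track is None or tracks.get(ace.track, False):
            return ace.seq, ace.nexthop1             # track up: primary VSM
        return ace.seq, ace.nexthop2                 # track down: backup VSM
    return None, None                                # implicit deny (unreachable here)


if __name__ == "__main__":
    print(abf_lookup("10.1.2.7", {"TSA1": True}))
    print(abf_lookup("10.1.2.7", {"TSA1": False}))
```

Flipping the single TSA1 entry moves every matching ACE to its nexthop2 at once, which is the point of sharing one track per card.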

IPSLA statistics:

RP/0/RSP0/CPU0:Hulk#sh ipsla statistics

Entry number: 1000

Modification time: 16:15:15.275 IST Fri May 20 2016

Start time : 16:15:15.276 IST Fri May 20 2016

Number of operations attempted: 18435

Number of operations skipped : 0

Current seconds left in Life : Forever

Operational state of entry : Active

Operational frequency(seconds): 5

Connection loss occurred : FALSE

Timeout occurred : FALSE

Latest RTT (milliseconds) : 47

Latest operation start time : 17:51:25.481 IST Sat May 21 2016

Next operation start time : 17:51:30.481 IST Sat May 21 2016

Latest operation return code : OK

RTT Values:

RTTAvg : 47 RTTMin: 47 RTTMax : 47

NumOfRTT: 1 RTTSum: 47 RTTSum2: 2209
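The RTT fields above are related by simple sums. The Python sketch below recomputes them from a list of RTT samples, assuming (consistent with the output above, where 47 × 47 = 2209) that RTTSum2 is the sum of squared RTTs; that sum allows a standard deviation to be derived without storing each sample. The `rtt_summary` function and its StdDev field are illustrative, not IOS XR output.

```python
import math
from typing import Dict, Sequence


def rtt_summary(rtts_ms: Sequence[int]) -> Dict[str, float]:
    """Recompute IPSLA-style RTT fields from raw RTT samples (milliseconds)."""
    if not rtts_ms:
        raise ValueError("need at least one RTT sample")
    n = len(rtts_ms)
    rtt_sum = sum(rtts_ms)
    rtt_sum2 = sum(r * r for r in rtts_ms)   # assumed meaning of RTTSum2
    avg = rtt_sum / n
    variance = rtt_sum2 / n - avg * avg      # population variance from running sums
    return {
        "NumOfRTT": n,
        "RTTSum": rtt_sum,
        "RTTSum2": rtt_sum2,
        "RTTAvg": avg,
        "RTTMin": min(rtts_ms),
        "RTTMax": max(rtts_ms),
        "StdDev": math.sqrt(max(variance, 0.0)),  # clamp tiny negative rounding error
    }


if __name__ == "__main__":
    print(rtt_summary([47]))
```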

Results:

VSM HA introduces about 15 seconds of delay during convergence if the CGN app fails.

The IPSLA solution can reduce the switchover (SWO) time, depending on the IPSLA probe timers used for tracking.

Note: Probe timers should not be set lower than 10, to avoid creating stress conditions.
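As a rough check on the switchover claim, the detection time with this article's timers (frequency 5 s, timeout 2000 ms, track delay down 5 s) can be bounded. The sketch below is a simplified model: it assumes a single timed-out probe makes the reachability track go down, and it ignores RTT, processing, and ABF reprogramming time. The function names are hypothetical.

```python
def worst_case_detection_s(frequency_s: float, timeout_ms: float, delay_down_s: float) -> float:
    """Failure occurs just after a successful probe completes."""
    # Wait up to one full interval for the next probe, let it time out,
    # then wait for the track's down delay before ABF is notified.
    return frequency_s + timeout_ms / 1000 + delay_down_s


def best_case_detection_s(timeout_ms: float, delay_down_s: float) -> float:
    """Failure occurs just before a probe starts."""
    return timeout_ms / 1000 + delay_down_s


if __name__ == "__main__":
    print(worst_case_detection_s(5, 2000, 5))  # 12.0
    print(best_case_detection_s(2000, 5))      # 7.0
```

Under these assumptions detection takes roughly 7 to 12 seconds, compared with the roughly 15 seconds of VSM HA convergence quoted above; increasing the probe interval or the track delay reduces that margin.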


Hello Nitin,

Excellent article about active-active VSM mode.

It reminds me of my own VSM setup.

1. Per your config, is it enough to track only ServiceApp1 with IPSLA on each VSM module?

So there is no need to track ServiceApp1, ServiceApp3, ServiceApp5... separately, because they are all on the same VSM module?

So there is no need to send the SLA traffic inbound on the inside physical interface Bundle-Ether400.101 to get it NATed?

It can just be sourced from the ServiceApp interface, with no need for the traffic to enter a ServiceApp through an inside physical interface?

2. In your setup you have only one uplink interface, TenGigE0/3/0/4 (to the Internet).

If we have multihoming, tracking only one uplink may not be accurate: if that particular uplink goes down, ABF switches over, which is actually unnecessary because the other uplinks still work.

Is SLA tracking toward a Loopback interface in the global routing table a valid solution?

3. I found a command, o2i-vrf-override. Is it a valid solution instead of route leaking?

In your config, ServiceApp1 is in vrf SVSM-1 and the inside physical port Bundle-Ether400.101 is in the global routing table.

Would that command help here, so that you don't need the following static route in your config?

router static

vrf SVSM-1

address-family ipv4 unicast

10.1.1.0/24 vrf default 10.22.231.1

I appreciate your answers.

Best regards,

Antonio