10-27-2011 08:44 AM

Hello,

We have two Nexus 5000 switches running the n5000-uk9.5.0.3.N2.2.bin system image.

The two switches are located in two different data centers and are directly connected to each other.

An Oracle server is attached to each switch. The two Oracle servers are clustered and connected by a heartbeat link.

The Oracle administrator has asked us to configure jumbo frames on the heartbeat link.

How can I do this?

Thanks!

Accepted Solutions

10-28-2011 12:46 PM

All you need to do is apply this configuration on all of your Nexus 5Ks:

switch(config)#policy-map type network-qos jumbo

switch(config-pmap-nq)#class type network-qos class-default

switch(config-pmap-c-nq)#mtu 9216

switch(config-pmap-c-nq)#exit

switch(config-pmap-nq)#exit

switch(config)#system qos

switch(config-sys-qos)#service-policy type network-qos jumbo

This will globally enable jumbo frame capability.

To verify that it works, run "show int eth x/y". If the jumbo packets counter is increasing and the packets aren't getting stomped, it's working.

Also note that the MTU shown on the interface itself won't change: this is a global command, not a per-interface command. That's OK; even if the interface MTU says 1500, the port can still accept jumbo frames once the global config is applied.

Example:

Ethernet1/1 is up

...

RX

0 unicast packets 1221713 multicast packets 2644 broadcast packets

1224357 input packets 91236171 bytes

---> 458 jumbo packets 0 storm suppression packets

0 giants 0 input error 0 short frame 0 overrun 0 underrun

0 watchdog 0 if down drop

0 input with dribble 0 input discard

0 Rx pause
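
If you want to automate the check described above, the counter can be pulled out of two saved copies of the "show int" output and compared. The following is only a rough sketch: the helper names `first_counter` and `jumbo_delta` are made up for illustration, and it assumes the RX section is printed before the TX section (as in the sample), so the first "jumbo packets" match is the receive counter.

```python
import re

# Trimmed sample based on the "show int" output quoted above.
SAMPLE = """\
Ethernet1/1 is up
  RX
    0 unicast packets 1221713 multicast packets 2644 broadcast packets
    1224357 input packets 91236171 bytes
    458 jumbo packets 0 storm suppression packets
    0 giants 0 input error 0 short frame 0 overrun 0 underrun
"""


def first_counter(text, name):
    """Return the first '<number> <name>' value found in text, or None."""
    match = re.search(r"(\d+)\s+" + re.escape(name) + r"\b", text)
    return int(match.group(1)) if match else None


def jumbo_delta(before_text, after_text):
    """Jumbo packets counted between two snapshots (hypothetical helper)."""
    before = first_counter(before_text, "jumbo packets")
    after = first_counter(after_text, "jumbo packets")
    if before is None or after is None:
        raise ValueError("no 'jumbo packets' counter found")
    return after - before


# Simulate a second snapshot taken a little later.
later = SAMPLE.replace("458 jumbo", "512 jumbo")
print(first_counter(SAMPLE, "jumbo packets"))
print(jumbo_delta(SAMPLE, later))
```

A positive delta while jumbo traffic is flowing suggests the switch is accepting the larger frames.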

10-27-2011 06:08 PM

Using jumbo frames means they will be using an MTU of 9000 (that is what we used) instead of the default 1500. As far as I know, you need to set the MTU to 9000 in the NIC configuration on the server and also on the switch ports where these LAN connections terminate.

Oracle recommends it for performance, but the disadvantage is that when a NIC is replaced and the admin forgets to change the MTU from the default, the nodes will not be able to join or re-form the cluster.

10-28-2011 02:28 AM

I have seen how to configure jumbo frames on a Nexus 5K; it is not necessary to configure the MTU on the interfaces:

http://www.cisco.com/en/US/products/ps9670/products_configuration_example09186a0080b44116.shtml

!--- You can enable the Jumbo MTU

!--- for the whole switch by setting the MTU

!--- to its maximum size (9216 bytes) in

!--- the policy map for the default

!--- Ethernet system class (class-default).

switch(config)#policy-map type network-qos jumbo

switch(config-pmap-nq)#class type network-qos class-default

switch(config-pmap-c-nq)#mtu 9216

switch(config-pmap-c-nq)#exit

switch(config-pmap-nq)#exit

switch(config)#system qos

switch(config-sys-qos)#service-policy type network-qos jumbo

But my question is:

Is it necessary to configure policy maps to get jumbo frames?

If I configure jumbo frames on both Nexus switches, can the two Oracle nodes on different switches use jumbo frames, or do I have to do something more?

Thanks!

11-09-2011 05:19 AM

Thank you very much!!

We have tried it and it worked fine.

11-15-2011 02:11 PM

Another easy way to tell whether you have configured jumbo frames is to run the following command and look at the section for qos-group 0 (the default class for all Ethernet traffic):

switch# show queuing interface ethernet 1/1

Ethernet1/1 queuing information:

TX Queuing

qos-group sched-type oper-bandwidth

0 WRR 50

1 WRR 50

RX Queuing

qos-group 0

q-size: 243200, HW MTU: 9280 (9216 configured)
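
For anyone checking many ports, the HW MTU line can be extracted from captured output with a small script. This is a sketch only: `qos_group_mtu` is a hypothetical helper, and the pattern assumes the output keeps the exact layout shown above (a "qos-group N" line followed by the "q-size ... HW MTU ..." line), which may differ between NX-OS releases.

```python
import re

# Sample taken from the "show queuing interface" output quoted above.
SAMPLE = """\
Ethernet1/1 queuing information:
  TX Queuing
    qos-group  sched-type  oper-bandwidth
        0       WRR             50
        1       WRR             50
  RX Queuing
    qos-group 0
    q-size: 243200, HW MTU: 9280 (9216 configured)
"""


def qos_group_mtu(text, group=0):
    """Return (hw_mtu, configured_mtu) for a qos-group, or None if absent."""
    pattern = (
        r"qos-group\s+%d\s+q-size:\s*\d+,\s*"
        r"HW MTU:\s*(\d+)\s*\((\d+)\s+configured\)" % group
    )
    match = re.search(pattern, text)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


hw_mtu, configured_mtu = qos_group_mtu(SAMPLE)
print(hw_mtu, configured_mtu)
```

A configured value of 9216 for qos-group 0 indicates the jumbo policy is in effect for the default class.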

11-19-2020 05:20 AM

Hello Stanti,

How can we stop or restrict an interface from sending jumbo frames to the connected devices? We have a Catalyst switch connected to our Nexus 5K. I see a lot of jumbo frames being sent on those ports, and on the Catalyst switch I see a lot of giants and output packet discards. We want jumbo frames on our Nexus switches, but they shouldn't be transmitted on a few ports.

Regards,

Sai.

11-12-2015 11:24 AM

You will need the following config for jumbo frames with FCoE:

policy-map type network-qos jumboFCOE

class type network-qos class-fcoe

pause no-drop

mtu 2158

class type network-qos class-default

mtu 9216

multicast-optimize

system qos

service-policy type network-qos jumboFCOE

10-11-2013 05:35 AM

Hi all,

When I used the above commands to set up jumbo frames on my Nexus 5548UPs running 6.0.2, I found that I cannot ping with a size larger than 8972, either from the Nexus to the hosts or from hosts to hosts/NetApp controllers.

A Wireshark capture of the ICMP traffic shows a lot of overhead (42 bytes in total), but no real information on what it is other than 20 bytes for the IP header and, I believe, 8 for ICMP itself. Is this standard behaviour?

vmhost> vmkping -d -s 9000 10.62.24.10

times out...

1# ping 10.62.24.10 packet-size 9000 df-bit

PING 10.62.24.10 (10.62.24.10): 9000 data bytes

Request 0 timed out

Request 1 timed out

Request 2 timed out

Request 3 timed out

Request 4 timed out

--- 10.62.24.10 ping statistics ---

5 packets transmitted, 0 packets received, 100.00% packet loss

10-12-2013 12:31 AM

Hi David,

ESX/ESXi supports an MTU of 9000 bytes, which means the value you're using with the -s option is too large.

When using vmkping, the value specified with the -s option is the payload. What you should do is run the test with -s 8972, so that once the 8-byte ICMP header and the 20-byte IP header are included the packet becomes 9000 bytes. This should work, assuming the end-to-end path is all configured for 9000 bytes.

The same is true for the ping from the Nexus switch, so the value you should use with the packet-size option is 28 bytes less than the MTU.
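
The arithmetic can be sketched in a few lines of Python. This is just an illustration: the function names are made up, and it assumes a plain IPv4 header with no options.

```python
IP_HEADER = 20    # IPv4 header without options (assumption)
ICMP_HEADER = 8   # ICMP echo request header


def max_ping_payload(mtu):
    """Largest ping payload that fits in one unfragmented packet."""
    return mtu - IP_HEADER - ICMP_HEADER


def fits_without_fragmenting(payload, mtu):
    """True if payload plus ICMP and IP headers does not exceed the MTU."""
    return payload + ICMP_HEADER + IP_HEADER <= mtu


print(max_ping_payload(9000))                # value to pass to -s / packet-size
print(fits_without_fragmenting(9000, 9000))  # a 9000-byte payload is too big
print(fits_without_fragmenting(8972, 9000))
```

With the df-bit set, any payload above that limit cannot be fragmented, so the ping simply times out.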

Is the test successful if you use 8972-bytes? Also I'm intrigued about the 42-bytes overhead you're seeing on the Wireshark capture. Is it possible to post that here?

Regards

10-15-2013 04:16 AM

Hi Steve,

Thanks for you input, i think you have cleared this up for us. My strorage guys seemed to think they could ping 9000 with the -s but we were only getting 8972.

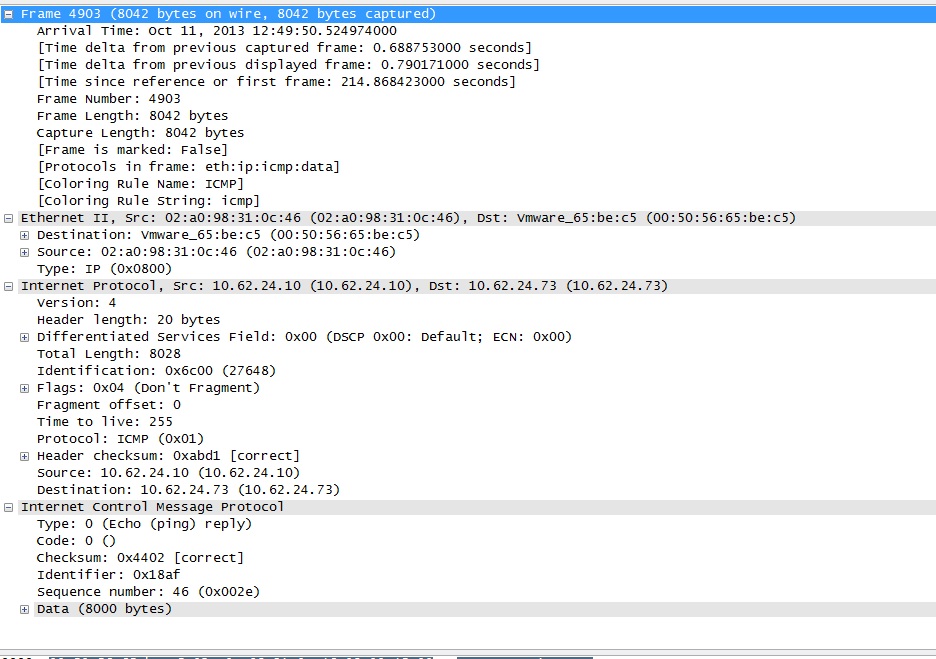

Heres a sample at 8000 to show that over all its 8042 but i believe the extra 14 is coming from the frame header, correct?:

10-15-2013 04:48 AM

Hi David,

Exactly right. As shown in Wireshark, what you have is 8000 bytes of payload + 8 bytes of ICMP header + 20 bytes of IP header + 14 bytes of Ethernet frame header. This totals the 8042 bytes reported.

As you already know, you can successfully ping up to 8972 bytes, which would be seen as 9014 bytes in Wireshark.
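
The same breakdown, written out as a quick check. This is a sketch with hypothetical names; it assumes an untagged Ethernet II frame (no 802.1Q tag) and that the capture does not include the 4-byte FCS, which matches the 14-byte figure above.

```python
# Per-layer overhead in bytes, as listed above.
OVERHEAD = {
    "ethernet": 14,  # dst MAC + src MAC + EtherType, untagged (assumption)
    "ip": 20,        # IPv4 header without options
    "icmp": 8,       # ICMP echo header
}


def captured_length(payload):
    """Frame length as a capture would report it for a given ping payload."""
    return payload + sum(OVERHEAD.values())


for size in (8000, 8972):
    print(size, "->", captured_length(size))
```

The constant difference of 42 bytes is the total overhead asked about earlier in the thread.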

Regards

08-01-2014 01:54 PM

Hi guys,

I know this is an old subject, and my apologies for reviving it, but I was wondering whether applying the commands mentioned above will disrupt service in any way.

Thank you!!

08-04-2014 05:27 AM

No, the jumbo configuration is not disruptive in 5.0(3)N2(2) and later code on the Nexus 5000s, even in a vPC.
