Figure source: https://www.darpa.mil/program/explainable-artificial-intelligence

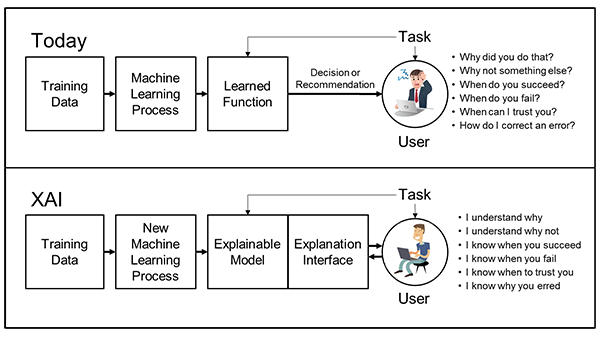

As AI becomes more advanced and complex, humans are finding it increasingly difficult to comprehend how AI arrives at decisions and results.


Explainable AI (XAI) is the field of research that develops methods and techniques for making the outputs of AI and machine learning systems easier for humans to understand. Its goal is to explain how these models arrive at their decisions.

When someone gives us both a decision and the reason behind it, we tend to trust the outcome more. XAI applies the same idea to AI systems: people are more comfortable acting on a model's output when the reasoning is provided along with it.

Beyond aiding decision-making, XAI tools also make it easier for developers to identify issues with AI models. If a model is making incorrect predictions, XAI can help pinpoint the source of the problem, for example by showing which inputs the model relied on. XAI offers further benefits depending on how it is used.
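To make the debugging use case concrete, here is a minimal sketch of one common XAI technique, permutation importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The technique is chosen here for illustration and is not named in the text above. The model (`toy_predict`), the data, and the helper `permutation_importance` are all hypothetical and use only the Python standard library.

```python
import random


def permutation_importance(predict, rows, labels, n_features, seed=0):
    """Return the accuracy drop caused by shuffling each feature column."""
    rng = random.Random(seed)

    def accuracy(data):
        correct = sum(predict(r) == y for r, y in zip(data, labels))
        return correct / len(labels)

    baseline = accuracy(rows)
    drops = []
    for j in range(n_features):
        # Shuffle only column j, leaving every other feature untouched.
        column = [r[j] for r in rows]
        rng.shuffle(column)
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(shuffled))
    return drops


# Hypothetical toy model (an assumption for illustration):
# it only ever looks at feature 0 and ignores feature 1.
def toy_predict(row):
    return 1 if row[0] > 0.5 else 0


# Synthetic data: two random features, label determined by feature 0.
data_rng = random.Random(42)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
labels = [1 if r[0] > 0.5 else 0 for r in rows]

drops = permutation_importance(toy_predict, rows, labels, n_features=2)
print("accuracy drop per feature:", drops)
```

In this sketch, shuffling feature 0 causes a large accuracy drop while shuffling feature 1 causes none, which shows that the model depends entirely on feature 0. A developer who expected the model to use feature 1 would know where to start looking.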