Reduced CP MTU and expect MultiPod to work?

10-21-2021 09:31 PM - edited 10-21-2021 09:32 PM

Hi community,

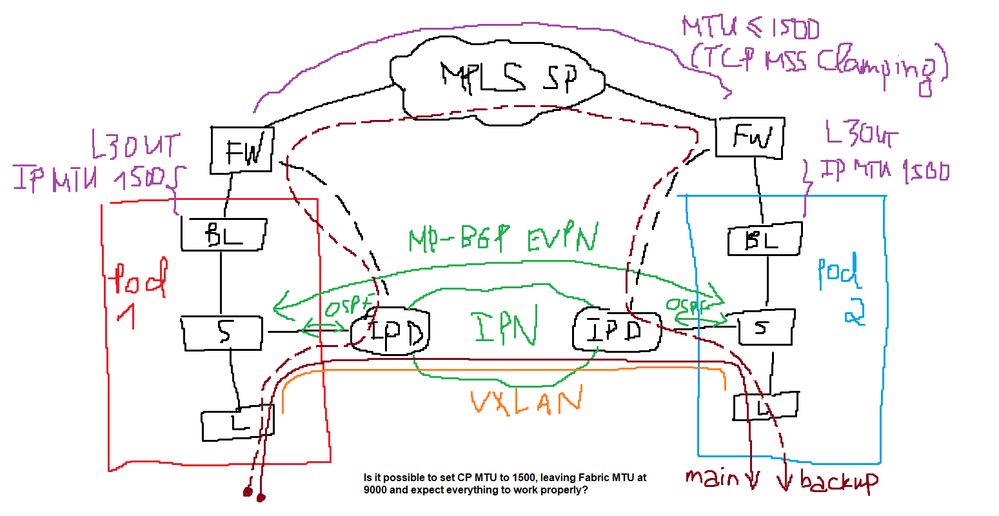

We have a typical MultiPod deployment: 2 spines connect to 2 IPN devices at each site, and the 2 IPN devices connect to each other and to the other sites via P2P links. The dedicated IPN is configured to support increased MTU.

I want redundant paths, so I'm considering connecting our IPN devices to our CE devices (FW), which interconnect via MPLS service providers where the IP MTU is not under our control. Is it possible to lower the CP MTU (for MP-BGP establishment, since we're at release 4.2 where 9150B is no longer mandated) while leaving the Fabric MTU at 9000, and still expect traffic to flow? This would cause data and VXLAN traffic to be fragmented when traversing the CE and MPLS infrastructure, which is probably against best practice/white paper.

The CE (FW) is also used for North-South traffic, hence it is not preferred to run ECMP with the existing IPN.

- Labels: Cisco ACI

Accepted Solutions

10-22-2021 05:24 AM

Typically you wouldn't want your data MTU any higher than your CP MTU, for the reason you called out: fragmentation. Your MTU (data and CP) should be dictated by the largest MTU that every IPN device in the path supports. You'll see poor performance if you have to fragment and reassemble all your data traffic. The absolute bare minimum would be 1550B. If your provider network can't provide at least this, then it's a no-go. Having the FW participate as an IPN device to the other Pod is fine, assuming it meets all the IPN requirements, but you'd want to put the IPN traffic into its own VRF/context since you're also using the FW for regular L3Out. You can also implement traffic engineering to prefer the path between certain IPN devices using path cost metrics, giving the better cost to the path through your existing IPN devices and leaving the FW IPN connection as a secondary path.
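
As a rough illustration of the MTU reasoning above, here is a minimal Python sketch. It assumes the 1550B floor corresponds to a standard 1500B endpoint MTU plus about 50 bytes of VXLAN encapsulation overhead. The per-hop MTU values and the function names are hypothetical and chosen only for illustration, not taken from any real deployment.

```python
# Assumption: ~50 bytes of VXLAN encapsulation overhead
# (outer Ethernet + outer IP + UDP + VXLAN headers).
VXLAN_OVERHEAD = 50


def path_mtu(link_mtus):
    """Largest packet that crosses every hop unfragmented: the smallest hop MTU."""
    return min(link_mtus)


def needs_fragmentation(endpoint_mtu, link_mtus, overhead=VXLAN_OVERHEAD):
    """True if an encapsulated full-size endpoint packet exceeds the path MTU."""
    return endpoint_mtu + overhead > path_mtu(link_mtus)


# Hypothetical per-hop MTUs for the two inter-pod paths.
dedicated_ipn = [9150, 9150, 9150]      # existing jumbo-capable IPN
via_fw_mpls = [9150, 1500, 1500, 9150]  # path through the FW and provider MPLS

print(needs_fragmentation(9000, dedicated_ipn))  # jumbo traffic over the IPN
print(needs_fragmentation(9000, via_fw_mpls))    # jumbo traffic over MPLS
print(needs_fragmentation(1500, via_fw_mpls))    # even 1500B needs a 1550B path
```

Under these assumptions, only the dedicated IPN path carries 9000B fabric traffic without fragmentation, and a provider path limited to 1500B falls short even for standard-size endpoint packets.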

Robert

10-24-2021 09:04 AM

Can you route multicast PIM Bidir through the firewall and the MPLS network? If not, then this isn't an option.

11-02-2021 07:40 PM

True, the FW pairs do not support PIM Bidir at all - I should've looked it up first.
