Cisco Community > Technology and Support > Networking > Cisco Catalyst Center

DNA Center failed upgrade to 1.3.0.109

01-14-2021 03:18 AM

Hello everyone,

I have seen many threads where people had problems upgrading a standalone DNA Center, but I never saw a resolution after their TAC cases. Is this really that complicated to solve?

I'm trying to upgrade my DNA Center from 1.2.0.1013 to 1.3.0.109, but after being stuck at 0% for 3-4 hours, I get the following message:

"System update failed during DOWNLOADED_HOOKS. Timeout waiting to pull system update hook bundle"

Unfortunately, I won't have support for at least another month or two, so I cannot open a TAC case. Does anyone know how to fix this?

Thank you!

Vali Puiu

Labels: Cisco DNA Automation, Other Cisco DNA

01-14-2021 05:55 AM

Have you gone through the pre-upgrade checks to confirm you are ready to upgrade? Have you confirmed that your DNAC has outbound connectivity? Cisco has an Audit Upgrade Readiness Analyzer (AURA) tool that you can pull down to your DNAC nodes from GitHub; it essentially automates all of the "pre-upgrade" checks that TAC typically wants you to go through. Take a peek here: https://community.cisco.com/t5/cisco-digital-network/dnac-aura-tool/td-p/4146539
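
Outbound connectivity can be spot-checked from the node shell before rerunning the upgrade. This is a minimal sketch, assuming `curl` is available on the node; `CATALOG_HOST` and the helper names are hypothetical placeholders, not a documented Cisco endpoint or tool. Curl exit code 60 roughly corresponds to the `CERTIFICATE_VERIFY_FAILED` error that appears later in this thread.

```shell
#!/usr/bin/env bash
# Sketch: probe outbound HTTPS reachability and explain curl's exit status.
# CATALOG_HOST is a hypothetical placeholder, not a documented Cisco endpoint.

explain_curl_exit() {
  # Map a few documented curl exit codes to a short explanation.
  case "$1" in
    0)  echo "ok" ;;
    6)  echo "DNS resolution failed" ;;
    7)  echo "TCP connection failed (firewall or proxy?)" ;;
    28) echo "timed out" ;;
    35) echo "TLS handshake failed" ;;
    60) echo "certificate verification failed" ;;
    *)  echo "curl error $1" ;;
  esac
}

check_https() {
  # GET the root URL, discard the body, give up after 10 seconds.
  # Without -f, any HTTP response (even 404) counts as reachable.
  local host="$1" rc
  curl -sS -o /dev/null --max-time 10 "https://${host}/"
  rc=$?
  echo "${host}: $(explain_curl_exit "$rc")"
  return "$rc"
}

# Usage (hypothetical host):
#   check_https "$CATALOG_HOST"
```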

HTH!

01-14-2021 07:30 AM (last edited on 01-14-2021 01:16 PM by Jonathan Cuthbert)

Hello Mike,

I appreciate your support. I ran the AURA tool, and although my DNA Center runs a lower version than the tool recommends, it worked.

In the "Cisco DNA Center & Device Upgrade Readiness Results" section, I get 2 warnings and 1 error:

#03:Checking:Count of Exited containers

INFO:324

Warning:Found 324 exited containers. Please contact TAC to perform a cleanup

#04:Checking:Count of Non Running Pods

INFO:18

Warning:Found 18 Non Running Pods. Please contact TAC to perform a cleanup

#05:Checking:Maglev Catalog Settings

Error:#01:Maglev Catalog Settings Validation check failed while executing command: Validating catalog server settings...

ERROR: [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:590)

I suppose the error is the reason for the failed upgrade, but it's a really strange one. The certificate is imported and, according to the GUI, it looks good.

I do have some expired ISE PSN certificates in the trustpool, but I don't see why they would cause the upgrade to fail.
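
One way to see which certificate fails verification is to look at the "Verify return code" that `openssl s_client` prints for a given server. This is a sketch, assuming the `openssl` CLI is on the node; `SERVER_HOST` and the helper names are hypothetical. The AURA check runs through Python's `ssl` module and may use a different CA bundle, so a clean result here is a hint, not proof. Code 0 means the chain verified; 20 (unable to get local issuer certificate) typically means an issuer CA is missing from the bundle.

```shell
#!/usr/bin/env bash
# Sketch: extract the chain verification result from `openssl s_client` output.
# SERVER_HOST is a hypothetical placeholder.

verify_code() {
  # Read s_client output on stdin; print the number after "Verify return code:".
  awk '/Verify return code:/ { sub(/.*Verify return code: /, ""); print $1; exit }'
}

probe_tls() {
  # -servername sends SNI; </dev/null ends the session right after the handshake.
  openssl s_client -connect "$1:443" -servername "$1" </dev/null 2>/dev/null | verify_code
}

# Usage (hypothetical host):
#   probe_tls "$SERVER_HOST"
```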

Do you have any other recommendation?

Thank you!

Vali Puiu

01-14-2021 12:18 PM (last edited on 01-14-2021 12:57 PM by Jonathan Cuthbert)

You can clean up the non-running pods yourself:

Check for lingering pods

The output of the following command should return no results. If there is a result returned, it indicates that pods are not being reaped appropriately.

$ kubectl get pod -a --all-namespaces | grep -v Running

NAMESPACE NAME READY STATUS RESTARTS AGE

maglev-system cluster-updater 0/1 Completed 0 7h

maglev-system daily-catalog-cleanup-1541635200-q3nst 0/1 Completed 0 1d

maglev-system daily-catalog-cleanup-1541721600-lc46s 0/1 Completed 0 1h

ndp aggregationjobs-1541721600-lzdxk 0/1 Completed 0 1h

ndp aggregationjobs-1541725200-lblzm 0/1 Completed 0 39m

ndp purge-jobs-1541721600-2s7bz 0/1 Completed 0 1h

ndp purge-jobs-1541725200-x0k18 0/1 Completed 0 39m

Remove pods not in Running state

$ kubectl get pods -a --all-namespaces --no-headers | grep -v Running | awk '{print $1, $2}' | while read -r ns pod; do kubectl delete pod -n "$ns" "$pod"; done
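
A more cautious variant of the cleanup above: it matches the STATUS column exactly and previews the delete commands before anything is removed. This sketch assumes `--no-headers` output with the columns NAMESPACE NAME READY STATUS RESTARTS AGE, as in the sample listing; the helper name `non_running_pods` is hypothetical. The `-a` flag is kept as in the post, although newer kubectl releases deprecate it.

```shell
#!/usr/bin/env bash
# Sketch: print "namespace pod" for every pod whose STATUS is not "Running".
# Input: `kubectl get pods --all-namespaces --no-headers` on stdin.

non_running_pods() {
  # Compare column 4 exactly instead of `grep -v Running`, so a pod whose
  # name contains "Running" is not skipped; ignore short/blank lines.
  awk 'NF >= 4 && $4 != "Running" {print $1, $2}'
}

# Dry run: review the generated commands first.
#   kubectl get pods -a --all-namespaces --no-headers | non_running_pods |
#     while read -r ns pod; do echo kubectl delete pod -n "$ns" "$pod"; done
# Remove the `echo` to actually delete the pods.
```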

Restart Docker:

After container and pod cleanup, it is prudent to restart the Docker service so that it can perform housekeeping routines and start with a cleaner set of containers.

$ sudo systemctl restart docker
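
To check whether the cleanup and Docker restart actually change the counts AURA reports, the numbers can be read directly. This read-only sketch assumes `docker ps --format '{{.Status}}'` prints statuses such as `Exited (0) 2 hours ago`; the helper name `count_exited` is hypothetical, and it only counts containers (AURA still recommends TAC for removing them). Prefix the docker commands with `sudo` if your user is not allowed to talk to the Docker daemon.

```shell
#!/usr/bin/env bash
# Sketch: count exited containers (read-only, nothing is removed).

count_exited() {
  # Read one container status per line on stdin; count lines starting with "Exited".
  awk '/^Exited/ {n++} END {print n+0}'
}

# Usage:
#   docker ps -a --format '{{.Status}}' | count_exited
# Equivalent, using Docker's own filter:
#   docker ps -a --filter status=exited -q | wc -l
# Non-running pods, counted the same way:
#   kubectl get pods -a --all-namespaces --no-headers | awk '$4 != "Running"' | wc -l
```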

Attached is the checklist that was used before the AURA automation tool existed. HTH!

01-15-2021 02:41 AM

Thank you for the guidance. After performing the steps, the report looks better, as the readiness check errors are gone:

#03:Checking:Count of Exited containers

INFO:290

Warning:Found 290 exited containers. Please contact TAC to perform a cleanup

#04:Checking:Count of Non Running Pods

INFO:1

INFO:Looks OK. No Non-Running Pods were found

#05:Checking:Maglev Catalog Settings

INFO:Validating catalog server settings...

Parent catalog settings are valid

INFO:Maglev Catalog Settings Validate looks OK

INFO:Maglev Catalog Settings Display :

maglev-1 [main - https://kong-frontend.maglev-system.svc.cluster.local:443]

But the problem persists when I try to upgrade:

System update status:

Version successfully installed : 1.2.0.1013

Version currently processed : 1.3.0.109

Update phase : failed

Update details : Timeout waiting to pull system update hook bundle

Progress : 0%

Updater State:

Currently processed version : 1.3.0.109

State : FAILED

Sub-State : DOWNLOADED_HOOKS

Details : Timeout waiting to pull system update hook bundle

Source : system-updater

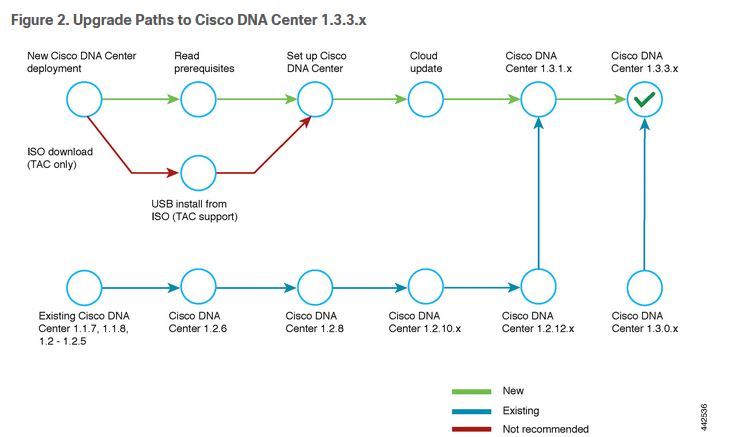

What I find strange is the path from 1.2.0.1013 to 1.3.0.109, which, according to some Cisco documentation, must be done in several steps:

Is there a way to upgrade manually through these intermediate versions? Or can I only upgrade to whatever version DNA Center offers me?

Thank you!

Vali Puiu

01-14-2021 01:10 PM

Vali,

Unfortunately, it is no longer possible to update to 1.3.0.109 (1.3.1.4); currently, we can only update to the latest maintenance release of any train. For 1.3.1.x that would be 1.3.1.7; however, that would not cause the current error message, so there are at least two issues here. Without TAC support, this will be difficult to resolve over this forum. Reach out to your account/sales team to see if they can help you with support. If you don't have much data, you can always reimage with the latest version: download the ISO from software.cisco.com and follow the instructions in the install guide for your model and desired version to reimage the appliance.
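
Before reimaging, it is worth confirming that the downloaded ISO is intact. This is a minimal sketch, assuming you copy the SHA-512 value shown for the download on software.cisco.com; the function name `verify_sha512` and the file name in the usage line are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: compare a file's SHA-512 digest with an expected hex string.

verify_sha512() {
  # $1 = path to the ISO, $2 = expected SHA-512 (hex, any case).
  local actual expected
  actual=$(sha512sum "$1" | awk '{print $1}')
  expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
  if [ "$actual" = "$expected" ]; then
    echo "checksum OK"
  else
    echo "checksum MISMATCH" >&2
    return 1
  fi
}

# Usage (hypothetical file name):
#   verify_sha512 ./dnac-installer.iso "<SHA-512 value from software.cisco.com>"
```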
