- ACI: Common migration issue / VMM vSwitch policy missing

04-08-2018 05:09 PM - edited 03-01-2019 06:07 AM

- Document Objective

- Problem Description

- Common troubleshooting steps

- Validating the connectivity

- iPing test

- Verify Endpoint learning

- Reviewing EPM trace for endpoint move

- Review the Endpoint state transition using EP tracker

- Review the DVS load-balancing policy at vCenter

- Review the vSwitch for VMM domain

- Resolution steps

- Verification

Document Objective

This document discusses a common issue observed during VMM integration and VM workload migration to an ACI fabric.

Problem Description

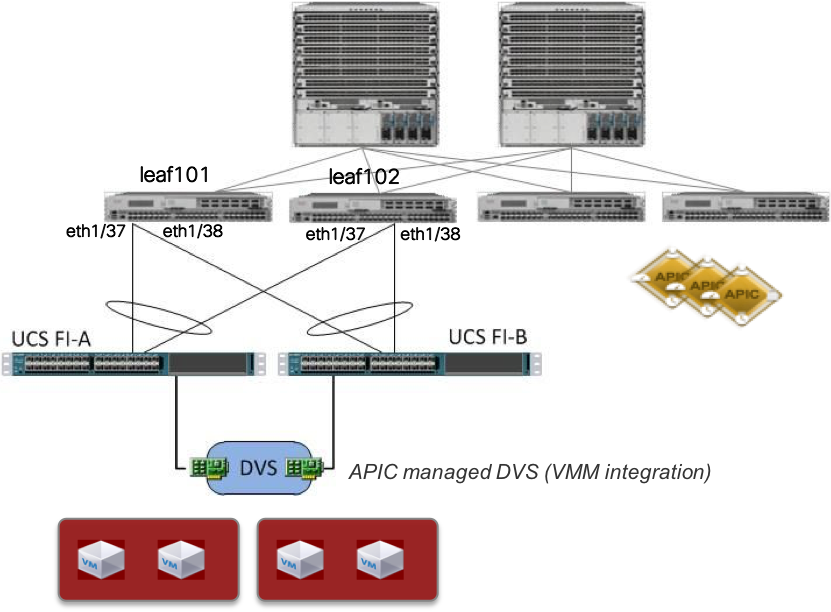

VMware virtual machines are hosted on Cisco UCS B-Series blade servers (ESXi hosts). The UCS fabric interconnects are connected to leaf101 and leaf102 (vPC, LACP active).

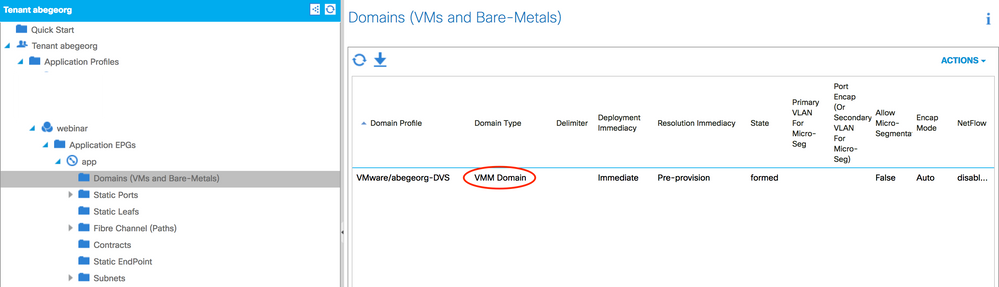

EPG "abegeorg:webinar:app" has a VMM domain (VMware DVS) deployed, so the corresponding port-group is created on the VMware DVS.

Once the VMM integration (VMware DVS) is completed and the VM workload is migrated to the ACI-managed DVS, intermittent connectivity issues are observed with the newly migrated VM. Users experience slowness in accessing the services, and sometimes the services are not accessible at all.

The example VM used for this troubleshooting has the IP address 10.10.92.1/24. The default gateway of this endpoint resides in the ACI fabric (10.10.92.254/24).

Common troubleshooting steps

This section covers common troubleshooting steps that can be used to isolate similar issues. You are encouraged to use these steps to troubleshoot such connectivity issues.

Validating the connectivity

Now, let's check the connectivity state and validate how the ACI fabric is connected to the servers (in this case, a UCS B-Series chassis). The CDP and port-channel output below indicates that FI-A and FI-B are connected to the ACI fabric leaf switches (leaf101 and leaf102) using vPC. The vPC ports are up.

leaf101# show cdp neighbors

<< snip >>

UCS-ACI-PODs-A(SSI182207R7) Eth1/37 171 S I s UCS-FI-6248UP Eth1/5

UCS-ACI-PODs-B(SSI1822054V) Eth1/38 127 S I s UCS-FI-6248UP Eth1/5

<< snip >>

leaf101#

leaf101# show vpc extended

<< snip >>

691 Po9 up success success 3874-3875,3887 abegeorg-ucs-a-vpc

692 Po10 up success success 3874-3875,3887 abegeorg-ucs-b-vpc

<< snip >>

leaf101#

leaf101# show port-channel extended

<< snip >>

9 Po9(SU) abegeorg-ucs-a-vpc LACP Eth1/37(P)

10 Po10(SU) abegeorg-ucs-b-vpc LACP Eth1/38(P)

leaf101#

leaf102# show cdp neighbors

<< snip >>

UCS-ACI-PODs-A(SSI182207R7) Eth1/37 165 S I s UCS-FI-6248UP Eth1/6

UCS-ACI-PODs-B(SSI1822054V) Eth1/38 121 S I s UCS-FI-6248UP Eth1/6

<< snip >>

leaf102#

leaf102# show vpc extended

<< snip >>

691 Po8 up success success 3874-3875,3887 abegeorg-ucs-a-vpc

692 Po9 up success success 3874-3875,3887 abegeorg-ucs-b-vpc

<< snip >>

leaf102#

leaf102# show port-channel extended

<< snip >>

8 Po8(SU) abegeorg-ucs-a-vpc LACP Eth1/37(P)

9 Po9(SU) abegeorg-ucs-b-vpc LACP Eth1/38(P)

leaf102#
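When many vPCs are present, the status rows can be checked programmatically instead of by eye. The following is a minimal Python sketch, assuming the rows have been copied out of the "show vpc extended" output as plain text. The column order in the regular expression is inferred from the rows shown above, and the helper names `parse_vpc_rows` and `unhealthy` are hypothetical.

```python
import re

# Rows copied from the "show vpc extended" output above (leaf101).
VPC_OUTPUT = """\
691 Po9 up success success 3874-3875,3887 abegeorg-ucs-a-vpc
692 Po10 up success success 3874-3875,3887 abegeorg-ucs-b-vpc
"""

# Assumed column order, inferred from the rows above:
# vPC id, port-channel, status, consistency, reason, active VLANs, name.
ROW = re.compile(r"^(\d+)\s+(Po\d+)\s+(\S+)\s+(\S+)\s+(\S+)\s+(\S+)\s+(\S+)$")


def parse_vpc_rows(text):
    """Return one dict per vPC row; lines that do not match are skipped."""
    rows = []
    for line in text.splitlines():
        m = ROW.match(line.strip())
        if m:
            vpc_id, po, status, consistency, _reason, vlans, name = m.groups()
            rows.append({"id": int(vpc_id), "po": po, "status": status,
                         "consistency": consistency, "vlans": vlans,
                         "name": name})
    return rows


def unhealthy(rows):
    """Return rows whose status is not 'up' or consistency is not 'success'."""
    return [r for r in rows
            if r["status"] != "up" or r["consistency"] != "success"]


rows = parse_vpc_rows(VPC_OUTPUT)
print([(r["po"], r["name"], r["status"]) for r in rows])
print("unhealthy:", unhealthy(rows))
```

An empty "unhealthy" list matches what the output above shows: both vPCs are up, so the physical connectivity is not the cause of the problem.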

In this case, the default gateway of the VM workload is on the ACI fabric, so let's run a connectivity test from the ACI fabric. The iping result shows intermittent ping drops, so connectivity from the ACI fabric itself is affected.

iPing test

leaf101# iping -V abegeorg:webinar 10.10.92.1 -c 20

PING 10.10.92.1 (10.10.92.1) from 10.10.92.254: 56 data bytes

Request 0 timed out

64 bytes from 10.10.92.1: icmp_seq=1 ttl=64 time=0.277 ms

64 bytes from 10.10.92.1: icmp_seq=2 ttl=64 time=0.346 ms

64 bytes from 10.10.92.1: icmp_seq=3 ttl=64 time=0.341 ms

64 bytes from 10.10.92.1: icmp_seq=4 ttl=64 time=0.445 ms

Request 5 timed out

Request 6 timed out

Request 7 timed out

Request 8 timed out

Request 9 timed out

64 bytes from 10.10.92.1: icmp_seq=10 ttl=64 time=0.292 ms

64 bytes from 10.10.92.1: icmp_seq=11 ttl=64 time=0.305 ms

64 bytes from 10.10.92.1: icmp_seq=12 ttl=64 time=0.348 ms

64 bytes from 10.10.92.1: icmp_seq=13 ttl=64 time=0.327 ms

64 bytes from 10.10.92.1: icmp_seq=14 ttl=64 time=0.48 ms

64 bytes from 10.10.92.1: icmp_seq=15 ttl=64 time=0.323 ms

64 bytes from 10.10.92.1: icmp_seq=16 ttl=64 time=0.314 ms

Request 17 timed out

Request 18 timed out

64 bytes from 10.10.92.1: icmp_seq=19 ttl=64 time=0.324 ms

--- 10.10.92.1 ping statistics ---

20 packets transmitted, 12 packets received, 40.00% packet loss

round-trip min/avg/max = 0.277/0.343/0.48 ms

leaf101#
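The drops above come in bursts rather than as isolated losses, which already hints at a forwarding path that toggles. A minimal Python sketch of that observation follows, assuming the iping output is available as a list of text lines. The function name `summarize_ping` is hypothetical, and the round-trip time in the sample lines is a placeholder rather than the measured values.

```python
import re


def summarize_ping(lines):
    """Return (sent, received, loss_percent, longest_timeout_run)."""
    received = timeouts = run = longest = 0
    for line in lines:
        if re.search(r"icmp_seq=\d+", line):
            received += 1
            run = 0  # a reply ends the current burst of timeouts
        elif re.match(r"Request \d+ timed out", line.strip()):
            timeouts += 1
            run += 1
            longest = max(longest, run)
    sent = received + timeouts
    loss = 100.0 * timeouts / sent if sent else 0.0
    return sent, received, loss, longest


# Sequence numbers that timed out in the 20-probe test above.
timed_out = {0, 5, 6, 7, 8, 9, 17, 18}
sample = [
    f"Request {seq} timed out" if seq in timed_out
    # time=0.3 ms is a placeholder; only the reply/timeout pattern matters here.
    else f"64 bytes from 10.10.92.1: icmp_seq={seq} ttl=64 time=0.3 ms"
    for seq in range(20)
]
print(summarize_ping(sample))  # (20, 12, 40.0, 5)
```

The result agrees with the statistics line printed by iping (12 of 20 received, 40% loss) and adds that the longest burst was five consecutive timeouts.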

Verify Endpoint learning

Now, let's check the endpoint learning details. Running "show system internal epm endpoint ip 10.10.92.1" twice within about two minutes shows the endpoint moving between two distinct interfaces, po9 and po10. Po9 and po10 are the connections to FI-A and FI-B respectively. This means that the traffic from 10.10.92.1 is load-balanced between FI-A and FI-B, which is not expected.

leaf101# show system internal epm endpoint ip 10.10.92.1

MAC : 0050.5690.864e ::: Num IPs : 2

IP# 0 : 172.16.200.104 ::: IP# 0 flags :

IP# 1 : 10.10.92.1 ::: IP# 1 flags :

Vlan id : 44 ::: Vlan vnid : 13106 ::: VRF name : abegeorg:webinar

BD vnid : 16613251 ::: VRF vnid : 2129922

Phy If : 0x16000009 ::: Tunnel If : 0

Interface : port-channel10

Flags : 0x80005c05 ::: sclass : 21 ::: Ref count : 6

EP Create Timestamp : 04/05/2018 10:20:37.357432

EP Update Timestamp : 04/06/2018 10:59:26.854181

EP Flags : local|vPC|IP|MAC|host-tracked|sclass|timer|

::::

leaf101# show clock

11:00:07.059408 CST Fri Apr 06 2018

leaf101#

leaf101#

leaf101# show system internal epm endpoint ip 10.10.92.1

MAC : 0050.5690.864e ::: Num IPs : 2

IP# 0 : 172.16.200.104 ::: IP# 0 flags :

IP# 1 : 10.10.92.1 ::: IP# 1 flags :

Vlan id : 44 ::: Vlan vnid : 13106 ::: VRF name : abegeorg:webinar

BD vnid : 16613251 ::: VRF vnid : 2129922

Phy If : 0x16000008 ::: Tunnel If : 0

Interface : port-channel9

Flags : 0x80005c05 ::: sclass : 21 ::: Ref count : 6

EP Create Timestamp : 04/05/2018 10:20:37.357432

EP Update Timestamp : 04/06/2018 11:00:52.054097

EP Flags : local|vPC|IP|MAC|host-tracked|sclass|timer|

::::

leaf101#

Unfortunately, it is very hard to get the timing right when you run "show system internal epm endpoint ip 10.10.92.1" twice. Another way to check the endpoint learning behaviour is to review the EPM traces, as shown below.

Reviewing EPM trace for endpoint move

leaf101# grep 10.10.92.1 -A6 -B8 /var/log/dme/log/epm-trace.txt | more

--

[2018 Apr 6 10:40:05.093513948:9945985:epm_add_or_mod_ip_ep_obj:1803:t] Mac-to-IP relation obj added to objstore with Dn sys/ctx-[vxlan-2129922]/bd-[vxlan-16613251]/vlan-[vlan-3874]/db-ep/mac-00:50:56:90:86:4E/rsmacEpToIpEpAtt-[sys/ctx-[vxlan-2129922]/bd-[vxlan-16613251]/vlan-[vlan-3874]/db-ep/ip-[172.16.200.104]]

[2018 Apr 6 10:40:05.093566785:9945986:epm_add_or_mod_ip_ep_obj:1789:t] Add/Mod of IpEp obj with Dn sys/ctx-[vxlan-2129922]/bd-[vxlan-16613251]/vlan-[vlan-3874]/db-ep/ip-[10.10.92.1]

[2018 Apr 6 10:40:05.093617536:9945987:epm_add_or_mod_ip_ep_obj:1803:t] Mac-to-IP relation obj added to objstore with Dn sys/ctx-[vxlan-2129922]/bd-[vxlan-16613251]/vlan-[vlan-3874]/db-ep/mac-00:50:56:90:86:4E/rsmacEpToIpEpAtt-[sys/ctx-[vxlan-2129922]/bd-[vxlan-16613251]/vlan-[vlan-3874]/db-ep/ip-[10.10.92.1]]

[2018 Apr 6 10:40:05.093619163:9945988:epm_attach_to_dirty_vlan_list:4359:t] Adding vlan 44 to dirty list with version 120505

[2018 Apr 6 10:40:05.093622239:9945989:epm_debug_dump_epm_ep:398:t] log_collect_ep_event

EP entry:

mac = 0050.5690.864e; num_ips = 2

ip[0] = 172.16.200.104

ip[1] = 10.10.92.1

vlan = 44; epg_vnid = 13106; bd_vnid = 16613251; vrf_vnid = 2129922

ifindex = 0x16000009; tun_ifindex = 0; vtep_tun_ifindex = 0

sclass = 21; ref_cnt = 6

flags = local|vPC|IP|MAC|host-tracked|sclass|timer|

create_ts = 04/05/2018 10:20:37.357432

upd_ts = 04/06/2018 10:40:07.854374

--

[2018 Apr 6 10:40:05.392355836:9946083:epm_add_or_mod_ip_ep_obj:1803:t] Mac-to-IP relation obj added to objstore with Dn sys/ctx-[vxlan-2129922]/bd-[vxlan-16613251]/vlan-[vlan-3874]/db-ep/mac-00:50:56:90:86:4E/rsmacEpToIpEpAtt-[sys/ctx-[vxlan-2129922]/bd-[vxlan-16613251]/vlan-[vlan-3874]/db-ep/ip-[172.16.200.104]]

[2018 Apr 6 10:40:05.392408865:9946084:epm_add_or_mod_ip_ep_obj:1789:t] Add/Mod of IpEp obj with Dn sys/ctx-[vxlan-2129922]/bd-[vxlan-16613251]/vlan-[vlan-3874]/db-ep/ip-[10.10.92.1]

[2018 Apr 6 10:40:05.392460098:9946085:epm_add_or_mod_ip_ep_obj:1803:t] Mac-to-IP relation obj added to objstore with Dn sys/ctx-[vxlan-2129922]/bd-[vxlan-16613251]/vlan-[vlan-3874]/db-ep/mac-00:50:56:90:86:4E/rsmacEpToIpEpAtt-[sys/ctx-[vxlan-2129922]/bd-[vxlan-16613251]/vlan-[vlan-3874]/db-ep/ip-[10.10.92.1]]

[2018 Apr 6 10:40:05.392461585:9946086:epm_attach_to_dirty_vlan_list:4359:t] Adding vlan 44 to dirty list with version 120506

[2018 Apr 6 10:40:05.392464652:9946087:epm_debug_dump_epm_ep:398:t] log_collect_ep_event

EP entry:

mac = 0050.5690.864e; num_ips = 2

ip[0] = 172.16.200.104

ip[1] = 10.10.92.1

vlan = 44; epg_vnid = 13106; bd_vnid = 16613251; vrf_vnid = 2129922

ifindex = 0x16000008; tun_ifindex = 0; vtep_tun_ifindex = 0

sclass = 21; ref_cnt = 6

flags = local|vPC|IP|MAC|host-tracked|sclass|timer|

create_ts = 04/05/2018 10:20:37.357432

upd_ts = 04/06/2018 10:40:08.154360

--
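The two "EP entry" dumps in the trace differ only in ifindex and upd_ts, so the move and its timing can be extracted mechanically. The following Python sketch is one way to do that, assuming the relevant trace lines have been saved as text. The helper names `ep_updates` and `moves` are hypothetical and are not part of any Cisco tooling.

```python
import re
from datetime import datetime

# Fields copied from the two "EP entry" dumps in the trace above.
TRACE = """\
ifindex = 0x16000009; tun_ifindex = 0; vtep_tun_ifindex = 0
upd_ts = 04/06/2018 10:40:07.854374
ifindex = 0x16000008; tun_ifindex = 0; vtep_tun_ifindex = 0
upd_ts = 04/06/2018 10:40:08.154360
"""


def ep_updates(text):
    """Pair each 'ifindex = ...' line with the next 'upd_ts = ...' line."""
    updates, current = [], None
    for line in text.splitlines():
        m = re.match(r"\s*ifindex = (0x[0-9a-fA-F]+);", line)
        if m:
            current = m.group(1)
            continue
        m = re.match(r"\s*upd_ts = (.+)$", line)
        if m and current is not None:
            ts = datetime.strptime(m.group(1).strip(),
                                   "%m/%d/%Y %H:%M:%S.%f")
            updates.append((ts, current))
            current = None
    return updates


def moves(updates):
    """Return (seconds_since_previous, old_ifindex, new_ifindex) per change."""
    out = []
    for (t0, if0), (t1, if1) in zip(updates, updates[1:]):
        if if0 != if1:
            out.append(((t1 - t0).total_seconds(), if0, if1))
    return out


print(moves(ep_updates(TRACE)))
```

For the two entries above, this reports one move from 0x16000009 to 0x16000008 roughly 0.3 seconds after the previous update, which shows how quickly the endpoint flaps between the two interfaces.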

Now, get the interface name for each ifindex to validate.

leaf101# vsh_lc

vsh_lc

module-1# show system internal eltmc info interface ifindex 0x16000008

IfInfo:

interface: port-channel9 ::: ifindex: 369098760

iod: 110 ::: state: up

module-1# show system internal eltmc info interface ifindex 0x16000009

IfInfo:

interface: port-channel10 ::: ifindex: 369098761

module-1#
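The EPM trace prints the ifindex in hexadecimal while the eltmc output prints it in decimal, so it can be worth confirming that both refer to the same value. A small Python sketch follows. The lookup table is built only from the two eltmc outputs above and is specific to this leaf, and `resolve_ifindex` is a hypothetical helper name.

```python
# Mapping taken from the two eltmc outputs above; it is specific to this leaf.
IFINDEX_TO_NAME = {
    369098760: "port-channel9",
    369098761: "port-channel10",
}


def resolve_ifindex(hex_ifindex):
    """Convert a hex ifindex string to decimal and look up the interface."""
    decimal = int(hex_ifindex, 16)
    return decimal, IFINDEX_TO_NAME.get(decimal, "unknown")


for value in ("0x16000008", "0x16000009"):
    print(value, resolve_ifindex(value))
```

0x16000008 converts to 369098760 (port-channel9) and 0x16000009 to 369098761 (port-channel10), matching the interfaces seen in the "show system internal epm endpoint" output.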

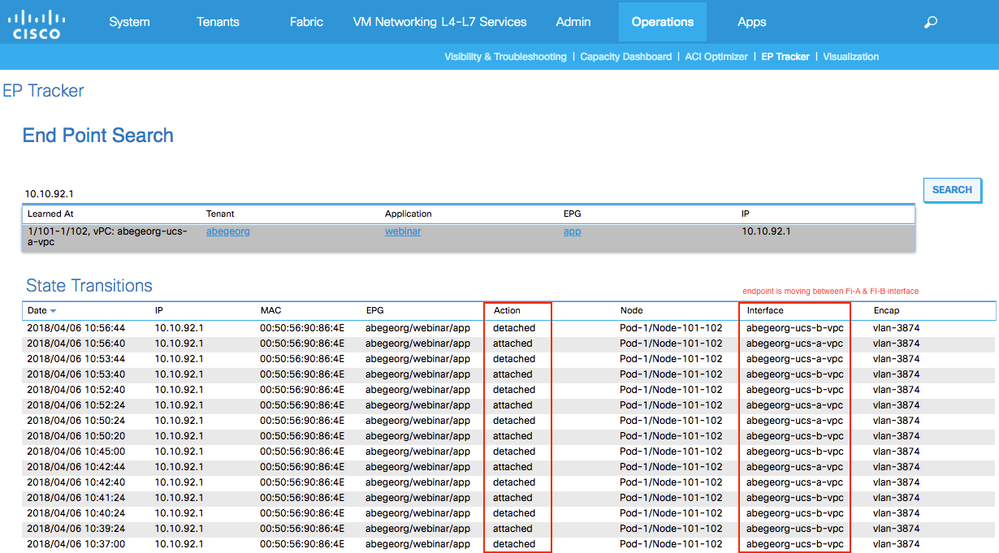

Review the Endpoint state transition using EP tracker

Another easy way to review the endpoint move is from the GUI. Navigate to Operations -> EP Tracker, enter the endpoint IP or MAC address in the endpoint search, and once the endpoint details are displayed, click the entry to get the state transition details. The state transition details for 10.10.92.1 clearly indicate that the endpoint is continuously moving between abegeorg-ucs-a-vpc and abegeorg-ucs-b-vpc.

Based on the review so far, the traffic from the VM (10.10.92.1) is load-balanced across both FI-A and FI-B, which is not supported for a UCS B-Series chassis deployment. The only supported load-balancing mechanism when UCS B-Series is used is Route Based on Originating Virtual Port, with which the traffic from a VM is expected to go through either FI-A or FI-B (not both). So, let's now review the load-balancing policy configured on the DVS.

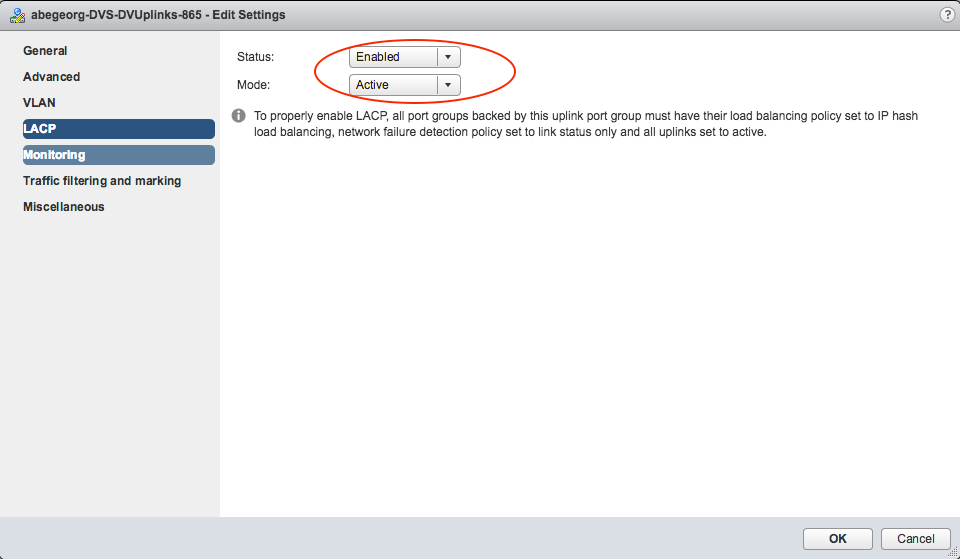

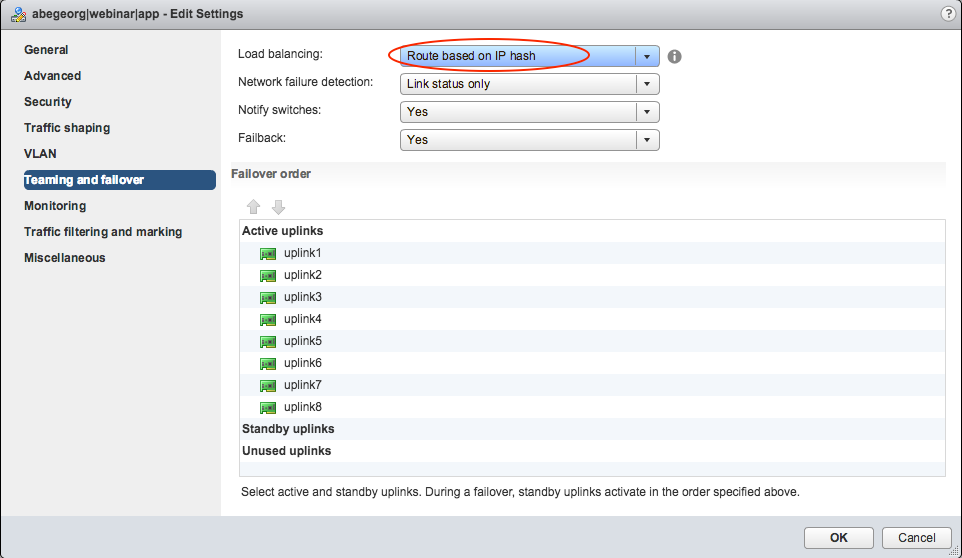

Review the DVS load-balancing policy at vCenter

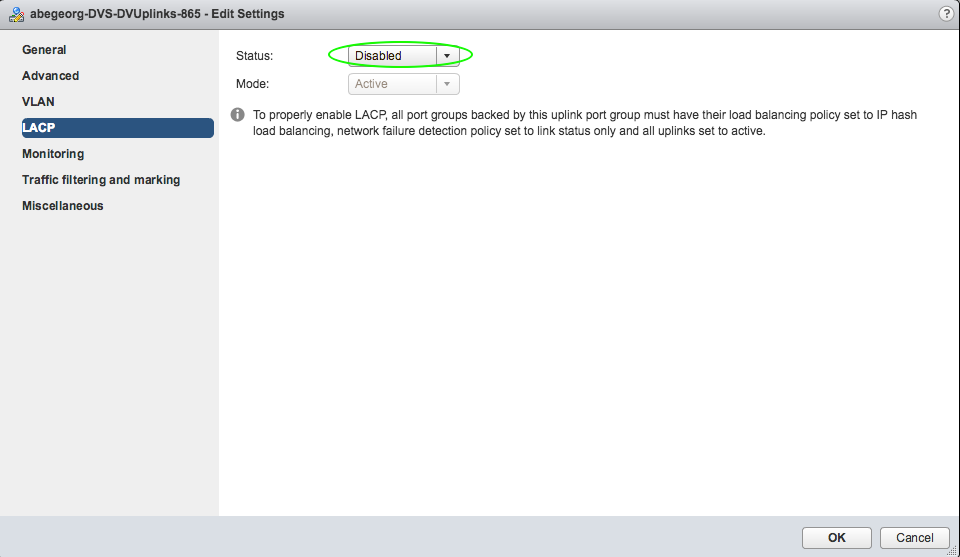

LACP is enabled for the DVS uplink, which is not expected.

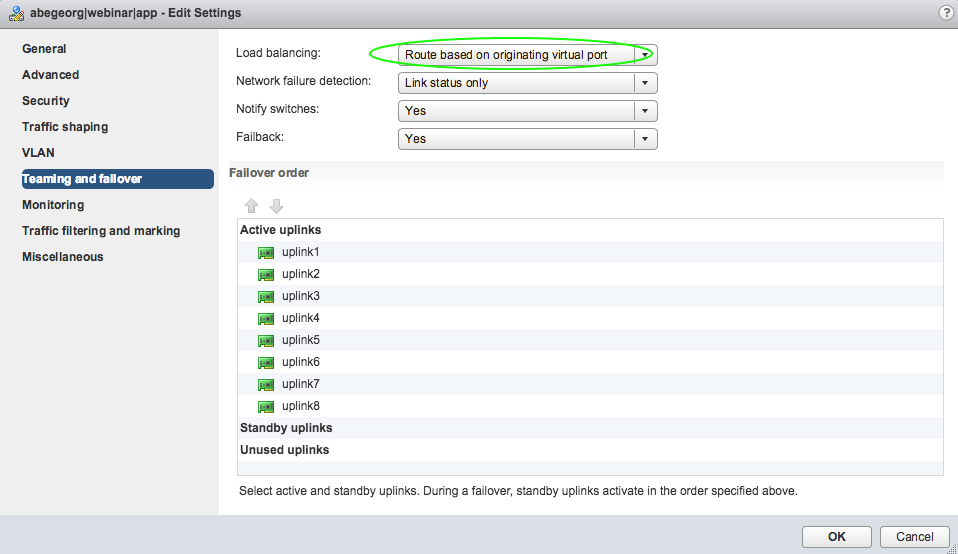

The load-balancing policy for the DVS port-group is configured as "Route based on IP hash", but the supported mode for a UCS B-Series deployment is "Route Based on Originating Virtual Port".
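A toy model may help show why "Route based on IP hash" makes the endpoint flap between the two port-channels. The Python sketch below is a deliberately simplified illustration and not VMware's actual hashing code: it assumes that IP hash XORs the source and destination addresses and takes the result modulo the uplink count, and that originating-virtual-port pinning depends only on the VM's virtual port ID. The port ID 7 and the second destination address are made up for the example.

```python
import ipaddress

UPLINKS = ["FI-A", "FI-B"]  # the two fabric interconnect paths in this topology


def uplink_ip_hash(src_ip, dst_ip):
    """Simplified IP-hash model: XOR the addresses, modulo the uplink count."""
    src = int(ipaddress.ip_address(src_ip))
    dst = int(ipaddress.ip_address(dst_ip))
    return UPLINKS[(src ^ dst) % len(UPLINKS)]


def uplink_virtual_port(port_id):
    """Simplified pinning model: the VM's port ID alone picks the uplink."""
    return UPLINKS[port_id % len(UPLINKS)]


vm = "10.10.92.1"
# The gateway from this article plus a hypothetical peer host.
destinations = ["10.10.92.254", "10.10.92.11"]

# With IP hash, the uplink changes with the destination address.
print({dst: uplink_ip_hash(vm, dst) for dst in destinations})
# With virtual-port pinning, every destination uses the same uplink.
print({dst: uplink_virtual_port(7) for dst in destinations})  # 7 is made up
```

In this model, traffic from the same VM leaves through FI-A for one destination and FI-B for the other, so the leaf switches learn the same MAC address alternately on two different port-channels. With pinning, the VM always appears behind a single fabric interconnect.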

Please note: The DVS vSwitch policy is not manually configured in vCenter; it is pushed from APIC as part of the VMM domain integration. So, how is this invalid policy being pushed? It happens because no explicit vSwitch policy was configured for the VMM domain, so APIC uses the interface policy instead (the interface policy configured for the leaf101/leaf102 links to FI-A/FI-B is LACP active).
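The fallback behaviour described above can be summarised as a small decision function. The Python sketch below only models the outcomes observed in this article. The mode labels "lacp-active" and "mac-pinning" are illustrative strings rather than APIC attribute values, and `effective_dvs_teaming` is a hypothetical helper, not an APIC API.

```python
# Outcomes observed in this article: what was pushed to the DVS per mode.
TEAMING_BY_MODE = {
    "lacp-active": {
        "uplink_lacp": True,
        "load_balancing": "Route based on IP hash",
    },
    "mac-pinning": {
        "uplink_lacp": False,
        "load_balancing": "Route based on originating virtual port",
    },
}


def effective_dvs_teaming(interface_policy_mode, vswitch_policy_mode=None):
    """An explicit vSwitch policy wins; otherwise the interface policy of the
    leaf-facing ports is inherited (hypothetical model of the behaviour)."""
    mode = vswitch_policy_mode or interface_policy_mode
    return TEAMING_BY_MODE[mode]


# No vSwitch policy on the VMM domain: the LACP interface policy is inherited.
print(effective_dvs_teaming("lacp-active"))
# Explicit MAC pinning vSwitch policy: it overrides the interface policy.
print(effective_dvs_teaming("lacp-active", "mac-pinning"))
```

The first call reproduces the problem state (LACP on the DVS uplink with IP hash), and the second reproduces the state after an explicit MAC pinning vSwitch policy is applied to the VMM domain.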

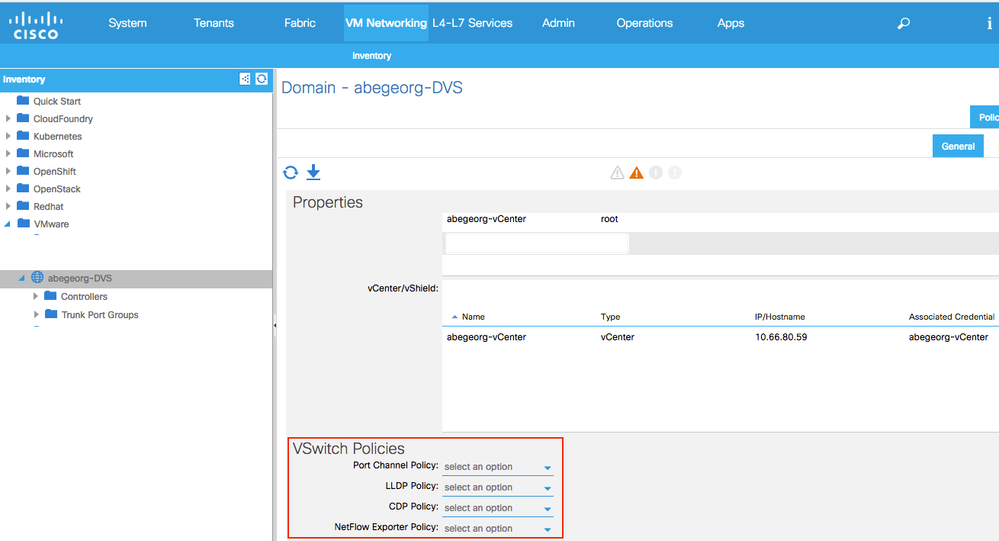

Review the vSwitch for VMM domain

We can see that the vSwitch policy has not been configured here.

Resolution steps

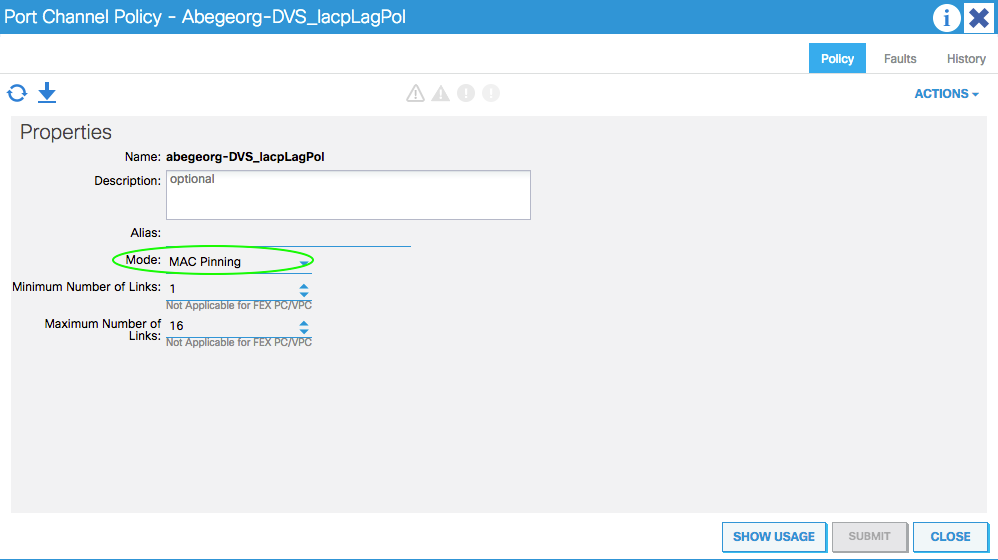

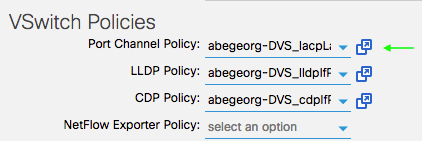

The vSwitch policy for the VMM domain must be configured with MAC pinning to fix this issue.

Verification

Now, let's verify in vCenter how this policy is applied to the DVS. The following screenshot indicates that LACP is disabled on the DVS uplink and that the correct changes have been pushed from APIC to vCenter.

The DVS port-group load-balancing policy has been changed to "Route based on originating virtual port", which is expected given the vSwitch policy now configured for the VMM domain.

Applying the correct vSwitch policy resolved the connectivity issue:

leaf101# iping -V abegeorg:webinar 10.10.92.1 -c 20

PING 10.10.92.1 (10.10.92.1) from 10.10.92.254: 56 data bytes

64 bytes from 10.10.92.1: icmp_seq=0 ttl=64 time=0.399 ms

64 bytes from 10.10.92.1: icmp_seq=1 ttl=64 time=0.362 ms

64 bytes from 10.10.92.1: icmp_seq=2 ttl=64 time=0.302 ms

64 bytes from 10.10.92.1: icmp_seq=3 ttl=64 time=0.382 ms

64 bytes from 10.10.92.1: icmp_seq=4 ttl=64 time=0.353 ms

64 bytes from 10.10.92.1: icmp_seq=5 ttl=64 time=0.352 ms

64 bytes from 10.10.92.1: icmp_seq=6 ttl=64 time=0.43 ms

64 bytes from 10.10.92.1: icmp_seq=7 ttl=64 time=0.375 ms

64 bytes from 10.10.92.1: icmp_seq=8 ttl=64 time=0.351 ms

64 bytes from 10.10.92.1: icmp_seq=9 ttl=64 time=0.319 ms

64 bytes from 10.10.92.1: icmp_seq=10 ttl=64 time=0.347 ms

64 bytes from 10.10.92.1: icmp_seq=11 ttl=64 time=0.382 ms

64 bytes from 10.10.92.1: icmp_seq=12 ttl=64 time=0.331 ms

64 bytes from 10.10.92.1: icmp_seq=13 ttl=64 time=0.344 ms

64 bytes from 10.10.92.1: icmp_seq=14 ttl=64 time=0.302 ms

64 bytes from 10.10.92.1: icmp_seq=15 ttl=64 time=0.352 ms

64 bytes from 10.10.92.1: icmp_seq=16 ttl=64 time=0.368 ms

64 bytes from 10.10.92.1: icmp_seq=17 ttl=64 time=0.348 ms

64 bytes from 10.10.92.1: icmp_seq=18 ttl=64 time=0.272 ms

64 bytes from 10.10.92.1: icmp_seq=19 ttl=64 time=0.317 ms

--- 10.10.92.1 ping statistics ---

20 packets transmitted, 20 packets received, 0.00% packet loss

round-trip min/avg/max = 0.272/0.349/0.43 ms

leaf101#
