Cisco Next-G Data Center Switches (Nexus) Design Examples

10-19-2012 02:19 AM - edited 03-01-2019 05:57 AM

Overview

Cisco's Nexus switches offer the latest in next-generation data center switching innovations that enable efficient virtualization, high-performance computing, and a unified fabric. The Cisco Nexus family covers a wide range of products that support different features and capabilities. Consequently, designing a data center network that uses Cisco's next generation of data center switches (Nexus) requires a very good understanding of the data center network requirements, such as server network connectivity requirements, protocols, Layer 2/Layer 3 demarcation points, data center interconnect requirements, and traffic load.

For more details about Cisco Nexus family products see the following link:

http://www.cisco.com/en/US/products/ps9441/Products_Sub_Category_Home.html

This document briefly discusses some high-level design examples of data center networks that use Cisco Nexus switches, with emphasis on mapping data center business requirements to the hardware and features selected to meet them, taking the size of the data center network into consideration.

Note:

The design examples below can be used as a guide/reference only and are not Cisco's official best practice solutions.

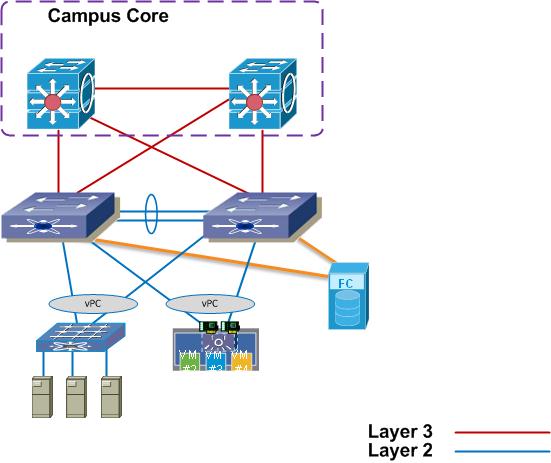

Example 1:

XYZ.com has a small data center with the following requirements:

- 20x servers that use single 1 Gbps Ethernet copper access ports

- 5x physical servers for virtualized services, each with 2x 10G/1G NICs that work in active/active and active/standby NIC teaming modes

- 4x 10G uplinks to the campus core with L3 routing

- 2x 1G Fibre Channel storage array

Solution:

Recommended Hardware

2x Cisco Nexus 5548 with the following HW specifications per chassis:

- L3 daughter cards

- 2x Nexus 2248 Fabric extender switches (FEX)

Design description:

Using the Cisco Nexus 5500 series in a small to medium data center can provide a reliable and cost-effective data center switching solution. Based on the above requirements, the Nexus 5548 can provide the following:

- 1G/10G unified fabric interfaces

- FC port for NAS/SAN

- Layer 3 routing capability

- Nexus 2K FEXs for ToR to provide high density of access ports

- vPC for active/active multi-homed services

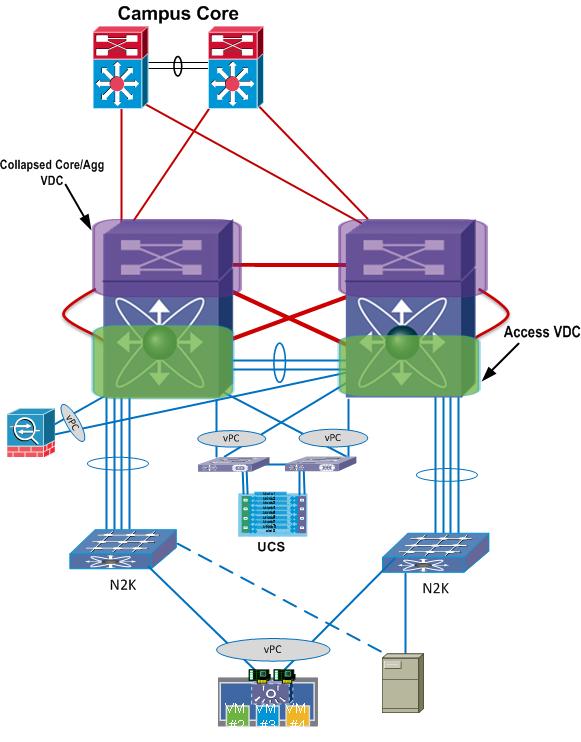
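As a rough sanity check of the Example 1 port budget, the sketch below tallies the stated requirements against the 1G copper ports the FEXs provide. It assumes 48 host ports per Nexus 2248 (the figure implied by Example 2, where 4x FEXs give up to 192 ports); the function and variable names are illustrative only.

```python
# Rough port budget for Example 1 (illustrative names, not a Cisco tool).
FEX_HOST_PORTS = 48  # assumption: 1G copper host ports per Nexus 2248


def example1_port_budget(chassis=2, fex_per_chassis=2):
    """Return demand and supply figures for the Example 1 design."""
    copper_1g_demand = 20 * 1  # 20 servers, one 1G copper port each
    copper_1g_supply = chassis * fex_per_chassis * FEX_HOST_PORTS
    # Ports that must land on the 5548s themselves (10G/1G capable):
    server_10g = 5 * 2         # 5 virtualized hosts, 2 NICs each
    core_uplinks = 4           # 4x 10G routed uplinks to the campus core
    return {
        "copper_1g_demand": copper_1g_demand,
        "copper_1g_supply": copper_1g_supply,
        "copper_1g_spare": copper_1g_supply - copper_1g_demand,
        "n5k_10g_demand": server_10g + core_uplinks,
        "fc_ports": 2,         # storage array connections
    }


budget = example1_port_budget()
print(budget)
```

FEX fabric uplinks and the vPC peer link also consume ports on the 5548s and are not counted here, so the real budget should leave room for them.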

Example 2:

XYZ.com has a medium-size data center with the following requirements:

- 150x servers that use dual 1 Gbps Ethernet copper access ports that work in active/active and active/standby NIC teaming modes

- Virtualized services on 1x Cisco UCS system that need 4x10G uplinks to the network in active/active mode

- 4x 10G uplinks to the campus core (routed)

- 2x ASA firewalls with 2x 1G interfaces each

- Some services need to be placed in a DMZ zone behind the ASA firewall

- Future plan is to increase 10G services in the DC access

Solution:

Recommended Hardware

2x Cisco Nexus 7009 with the following specifications per chassis:

- 2x supervisors

- 2x F2 10G/1G modules (up to 48 line-rate 10G/1G ports per module)

- 4x Nexus 2248 Fabric Extenders (FEX), which can provide up to 192 1G copper access ports

- 5x FAB2 fabric modules (can provide up to 550G of bandwidth per slot)

Design description:

- The recommended HW above provides a simple Nexus DC design that meets the requirements in terms of 1G/10G port density

- A collapsed access/distribution design model is used here, with Nexus VDCs providing complete logical separation (resources and interfaces) on a single physical chassis

- vPC is used for active/active multi-homed services

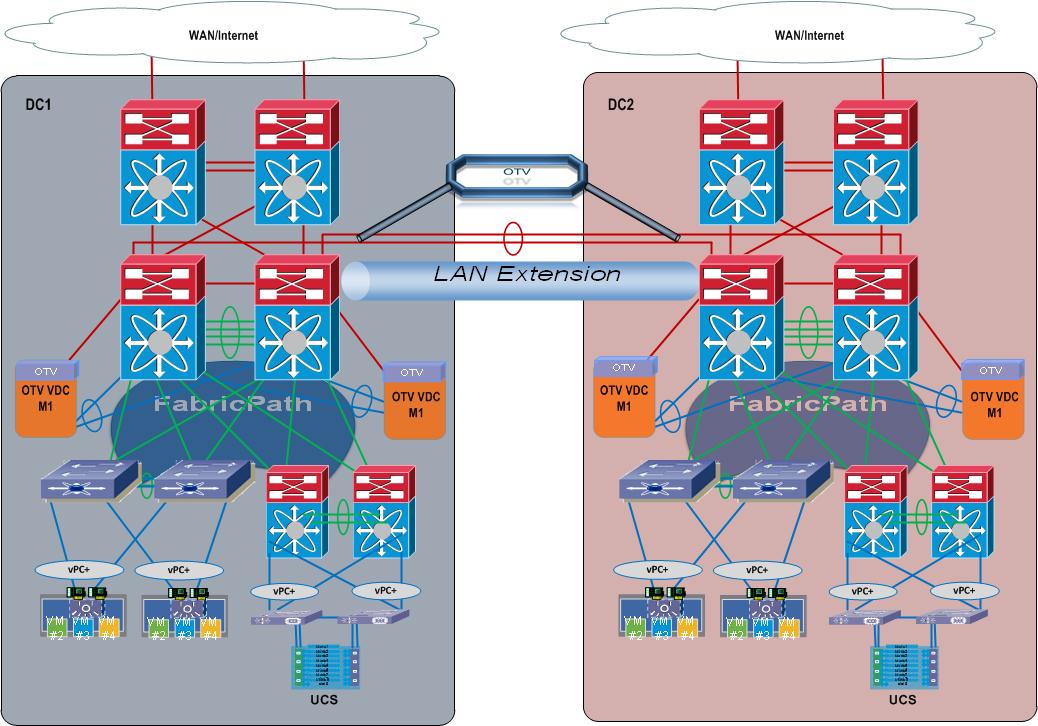
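The Example 2 sizing can be checked with simple arithmetic. The sketch below uses only figures quoted in the hardware list above (192 copper ports per chassis from 4x FEXs, 48 ports per F2 module, 550G per slot with 5x FAB2). It assumes that fabric bandwidth per slot scales linearly with the number of FAB2 modules; the helper names are hypothetical.

```python
# Sizing check for Example 2 using the figures quoted in the hardware list.


def access_port_headroom(servers=150, nics_per_server=2,
                         chassis=2, fex_ports_per_chassis=192):
    """Spare 1G copper ports after connecting every server NIC."""
    demand = servers * nics_per_server
    supply = chassis * fex_ports_per_chassis
    return supply - demand


def f2_slot_is_line_rate(ports=48, port_gbps=10, fab2_modules=5,
                         slot_gbps_with_five_fab2=550):
    """True if fabric bandwidth per slot covers a fully loaded F2 module."""
    # Assumption: each FAB2 contributes an equal share (550G / 5 = 110G).
    per_module_gbps = slot_gbps_with_five_fab2 / 5
    return fab2_modules * per_module_gbps >= ports * port_gbps


print(access_port_headroom())                 # 384 supplied - 300 needed
print(f2_slot_is_line_rate())                 # 550G vs 480G
print(f2_slot_is_line_rate(fab2_modules=4))   # 440G vs 480G
```

Under these assumptions the design leaves 84 spare copper ports, and all five FAB2 modules are needed for a fully populated F2 module to run at line rate.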

Example 3:

XYZ.com has 2x large data centers with the following requirements:

- 100s of servers that use dual 1G/10G access ports that work in active/active and active/standby NIC teaming modes

- Virtualized services on multiple Cisco UCS systems that need x10G uplinks to the network in active/active mode

- 10G uplinks to the DC core

- High density 10G ports

- Layer 2 extension between the 2 data centers over 2x 10G dark fiber links

- Support for local default gateway for extended layer 2 vlans between the 2 DCs

- Layer 2 multi-pathing between access and aggregation DC layers without STP blocking in any link

- Support of multi-homed services in active/active mode

- Scalable solution to add more distribution PODs to the DC

Solution:

Recommended Hardware per DC:

DC core:

- 2x Nexus 7009

- FAB2

- M1 10G modules

DC aggregation:

- 2x Nexus 7010/7018

- FAB2

- M1 10G modules (for OTV)

- Multiple F2 modules for high density of 10G ports and FabricPath support

DC access:

- Nexus 7009 with FAB2 and multiple F2 modules for high density of 10G/1G ports

- Nexus 5596 and Nexus 2K if required

Design description:

The above HW can meet the requirements by providing:

- Layer 3 routing capability with 10G support in the core

- High density of 10G ports in the DC aggregation and access layers

- L2 multi-pathing (non-blocking) between the DC access and aggregation layers using Cisco FabricPath

- Layer 2 VLAN stretching between the 2 data centers using OTV, which supports a localised default gateway

- vPC+, which supports multi-homed services in the access layer in conjunction with FabricPath between the DC access and aggregation layers
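The mapping between requirements and features in Example 3 can be written down as a simple lookup, which makes it easy to spot a requirement that no feature covers. This is only an illustrative sketch: the dictionary keys paraphrase the requirements above, and the `uncovered` helper is hypothetical.

```python
# Requirement-to-feature mapping for Example 3, paraphrased from the
# design description. Keys and helper name are illustrative only.
FEATURE_FOR_REQUIREMENT = {
    "L3 routing with 10G in the core": "Nexus 7009 with M1 10G modules",
    "High-density 10G ports": "F2 modules",
    "L2 multi-pathing without STP blocking": "FabricPath",
    "L2 extension between the two DCs": "OTV",
    "Local default gateway for extended VLANs": "OTV",
    "Active/active multi-homed services": "vPC+",
}


def uncovered(requirements):
    """Return the requirements that have no feature mapped to them."""
    return [r for r in requirements if r not in FEATURE_FOR_REQUIREMENT]


# A made-up requirement is reported as uncovered; a known one is not.
gaps = uncovered(["L2 extension between the two DCs",
                  "Hypothetical new requirement"])
print(gaps)
```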

Regards,

Marwan Alshawi

In example 2, can you go with 12 FEXs directly connected to the 7Ks? In our PDC we have the 7/5/2k, but in our SDC we're looking to use a scaled-down model and remove the 5k (smaller DC and less 10G).

I know it scales to 32 FEXs, but the real question is: how does this architecture differ from a 7/5/2 design? What are the implications of this design? Will the 2k interface commands and code upgrade process remain the same? At what point do you need to add a 5k?

Hi Michael,

You are right, you can go up to 32 FEXs. Whether to adopt this type of architecture depends on your requirements. For example, you can compare the features and capabilities supported when connecting N2K to N5K versus N2K to N7K and check that the difference does not affect your DC requirements. This design reduces the number of L2 hops, but you will need to consider using the collapsed architecture in the N7K with VDCs so that you can use vPC (multi-homing) between the access VDC and the distribution VDC on both 7K chassis. The FEXs only extend the access layer so that more services can be connected. Also, the N2K port channel can span several I/O modules of the 7K for redundancy.

Regards,

Nice!

+5

Hi, on Example #2 - Why do we need the Core? What are the benefits? Please elaborate - Thanks

Hi,

In example 2, the core function is combined with the distribution layer to perform L3 routing with the campus core or WAN edge.

It is not a pure core as in example 3; again, this is a design choice that can change depending on the network.

