Issues with multicast distribution over a VxLAN underlay with ECMP

12-03-2022 09:26 AM - edited 12-03-2022 09:40 AM

Dear friends,

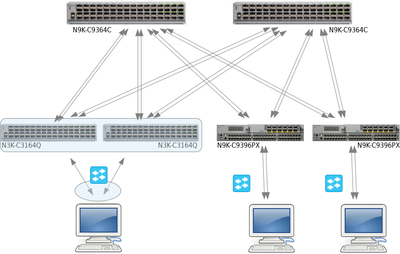

I have a strange issue to share regarding my VxLAN fabric. I'm using two N9K-C9364C for Spines and 3164Q / 9396PX for leafs. They are only doing simple VxLAN bridging of hosts' traffic, so no customer routing etc. /no customer Layer 3 on them/.

The issue sound simple but I don't know how to fix it. Let's see a diagram snippet below:

As we all know, BUM traffic is always encapsulated into the multicast group that we configure under interface nve1. This works without any issues until an additional link is added to the OSPF ECMP between the spines and the leaves. At that point, every leaf has four equal-cost ports over which it can reach any other leaf. So far so good.

The thing that is not so good is the fact that a certain underlay multicast group can ingress a leaf through link (A), get decapsulated, go to the host, and then the traffic in the opposite direction (which is the same underlay multicast group) may go to the spine through any other link but not necessarily through link (A).

I have a host exchanging 4G of multicast traffic with another host through a bridged VLAN. This is their own multicast traffic: host A sends 4G to host B, and host B returns another 4G to host A. This is ordinary traffic for their services.

I want to configure the underlay so that the path is always symmetric: if a multicast group arrives on link (A), the reverse traffic must always return through (A), not through (B) or any other link. I believe this kind of asymmetric BUM traffic causes some traffic blackholing, possibly due to RPF check failures, but I'm not sure about that. Hence I want to configure the switches so that they always return BUM traffic through the port it initially arrived on, rather than through some other port.

To be honest, I'm not even sure how the switches manage to split a single multicast group so that the upstream traffic goes through link (A) while the downstream traffic is received through link (B), but when this happens, some 5% of the services have their traffic blackholed.
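To make my RPF suspicion concrete, here is a minimal Python sketch of how I understand an RPF check in general: a received packet is accepted only if it arrives on the interface the router expects for that source and group. The addresses, interface names, and the `rpf_table` structure are made up for illustration; this is not how NX-OS implements it internally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    source: str        # outer source IP, i.e. the sending VTEP (hypothetical)
    group: str         # underlay multicast group (hypothetical)
    in_interface: str  # uplink the packet arrived on


def rpf_accepts(packet, rpf_table):
    """Return True if the packet arrived on the expected interface for its (S,G)."""
    expected = rpf_table.get((packet.source, packet.group))
    return expected is not None and packet.in_interface == expected


# Hypothetical state on one leaf: the remote VTEP's stream is expected on link (A).
rpf_table = {("10.0.0.11", "239.1.1.1"): "Eth1/1"}

ok = Packet("10.0.0.11", "239.1.1.1", "Eth1/1")
bad = Packet("10.0.0.11", "239.1.1.1", "Eth1/2")

print(rpf_accepts(ok, rpf_table))   # True
print(rpf_accepts(bad, rpf_table))  # False
```

In this model the check is keyed on the source of the received stream, so what I would need to verify is whether each remote VTEP's traffic arrives on the interface my leaf expects for that source.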

My load-sharing method is s-g-hash. I'm not sure whether it would be better to use s-g-hash next-hop-based instead, and perhaps also add ip multicast multipath resilient, but I can't figure out whether either setting would stop RPF from blackholing the underlay BUM groups.
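As a rough illustration of what I think hash-based multipath does, the sketch below picks one of four equal-cost uplinks per (source, group) pair. CRC32 is only a stand-in I chose for the example, not the hash NX-OS actually uses, and the addresses and interface names are hypothetical.

```python
import zlib


def pick_rpf_neighbor(source, group, neighbors):
    """Pick one equal-cost neighbor for an (S,G) using a stand-in hash."""
    key = f"{source},{group}".encode()
    ordered = sorted(neighbors)  # stable order so the choice is deterministic
    return ordered[zlib.crc32(key) % len(ordered)]


uplinks = ["Eth1/1", "Eth1/2", "Eth1/3", "Eth1/4"]
group = "239.1.1.1"

# Two VTEPs sending into the same underlay group are two different (S,G) pairs,
# so each is hashed independently and may or may not map to the same uplink.
for vtep in ("10.0.0.11", "10.0.0.12"):
    print(vtep, group, "->", pick_rpf_neighbor(vtep, group, uplinks))
```

If the real behaviour is similar, the two directions of one underlay group are hashed separately, which would explain how upstream and downstream traffic can end up on different links.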

Another thing worth noting is that 'show ip multicast vrf default' on the 3164 pair displays a different group count on each of the VPC nodes, while the same command on the 9396 pair displays the same group count on each VPC node. This makes me suspect that RPF failures are taking place and blackholing part of my BUM traffic:

    3164-1# show ip multicast vrf default
    Multicast Routing VRFs (2 VRFs)
    VRF Name   VRF  Table       Route  Group  Source  (*,G)  State
               ID   ID          Count  Count  Count   Count
    default    1    0x00000001  219    95     136     82     Up
        Multipath configuration (1): s-g-hash
        Resilient configuration: Disabled

    3164-2# show ip multicast vrf default
    Multicast Routing VRFs (2 VRFs)
    VRF Name   VRF  Table       Route  Group  Source  (*,G)  State
               ID   ID          Count  Count  Count   Count
    default    1    0x00000001  310    98     227     82     Up
        Multipath configuration (1): s-g-hash
        Resilient configuration: Disabled

Any help will be appreciated here.
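For reference, the difference between the two 3164 nodes can be tabulated with a short Python sketch. It takes the two "default" rows from the outputs above as whitespace-separated strings; the field names are my own labels for the Route, Group, Source, and (*,G) count columns.

```python
FIELDS = ("route", "group", "source", "star_g")


def parse_counts(row):
    """Parse a 'default 1 0x00000001 219 95 136 82 Up' row into named counters."""
    parts = row.split()
    return dict(zip(FIELDS, map(int, parts[3:7])))


def diff_counts(a, b):
    """Per-counter difference b - a between two VPC nodes."""
    return {field: b[field] - a[field] for field in FIELDS}


peer1 = parse_counts("default 1 0x00000001 219 95 136 82 Up")  # 3164-1
peer2 = parse_counts("default 1 0x00000001 310 98 227 82 Up")  # 3164-2

print(diff_counts(peer1, peer2))
# {'route': 91, 'group': 3, 'source': 91, 'star_g': 0}
```

So the route-count gap of 91 matches the source-count gap exactly, while the (*,G) count is identical on both nodes.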

Thank you.
