TRex is an open source, low-cost, stateful and stateless traffic generator fuelled by DPDK. It is all about scale: it can scale up to 200-400 Gbps, 160 MPPS and millions of flows using one Cisco UCS (or any COTS server).

This release contains many new features, fixes and improvements. Below is a detailed description of what’s new in v2.15:

- Cisco VIC support

- Mellanox ConnectX-4

- SR-IOV support

- Stateless GUI with Packet builder

- Stateful scale improvement

Cisco VIC support

- Supported on both UCS C-series and B-series (blade server)

- PCIe x16, 2x40GbE ports

- Can be shared by VMs using vNIC (non SR-IOV)

- Only 13xx series Cisco adapters are supported

See more here: VIC support

Mellanox ConnectX-4

- Supports 25/50/100 GbE speeds

- PCIe x16, 2x100GbE ports on one NIC

- Requires OFED kernel drivers

- Small footprint

- SR-IOV support

- The Linux kernel driver stays up while DPDK is running

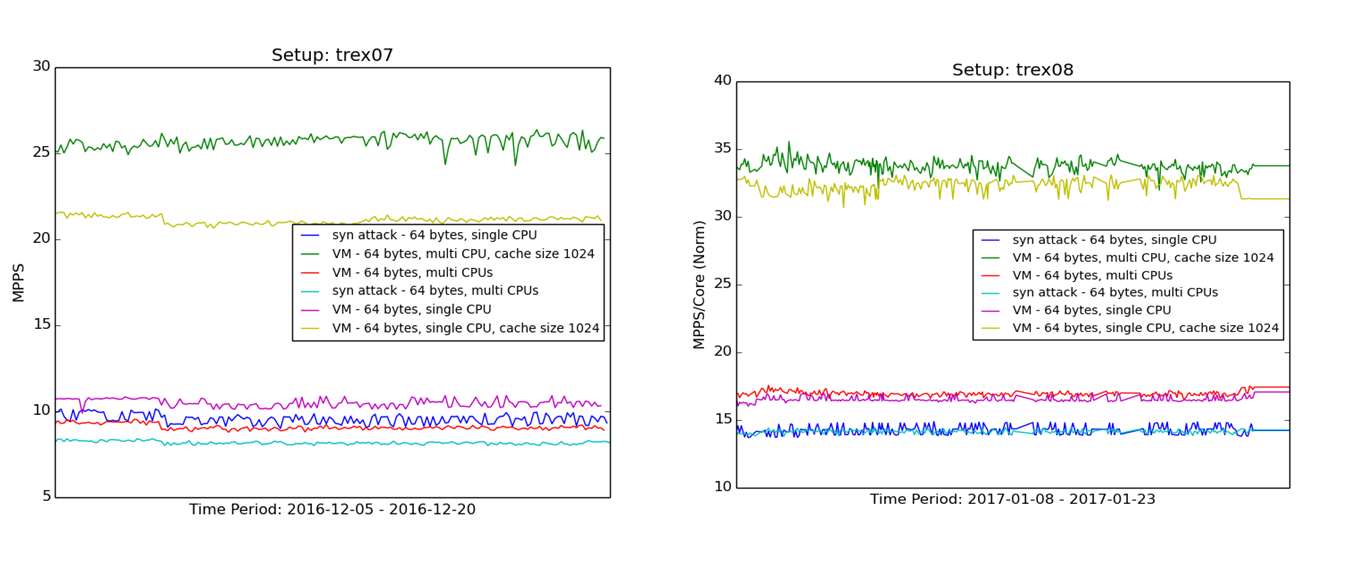

- Performance numbers

- Tx only, 64B: up to 90 MPPS

- Tx and Rx, 64B: up to ~50 MPPS

- IMIX: up to 90 Gbps

- CPU cycles per packet are up to 50% higher than with the Intel XL710 when measured with 64B packets

- In the average stateful scenario, ConnectX-4 performs about the same as the XL710.

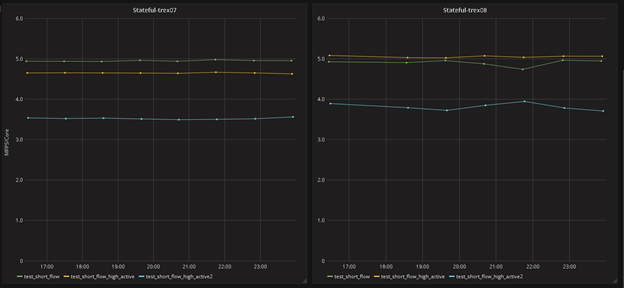

Stateful comparison of XL710 (trex08) vs. ConnectX-4 (trex07)

Stateless comparison of XL710 (trex08) vs. ConnectX-4 (trex07) (cycles per packet across a number of use cases)
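CPU cycles per packet map directly onto the per-core packet rate: cycles per packet = core clock / packets per second on that core. A minimal sketch of that arithmetic follows; the 2.0 GHz clock and 20 MPPS rate are hypothetical values chosen for illustration, not measured TRex results.

```python
def cycles_per_packet(core_hz: float, pps_per_core: float) -> float:
    """Average CPU cycles spent per packet on one core."""
    return core_hz / pps_per_core


def relative_pps(cpp_ratio: float) -> float:
    """Per-core packet rate relative to a baseline, given the cycles-per-packet ratio (new / baseline).

    At a fixed clock, packet rate is inversely proportional to cycles per packet.
    """
    return 1.0 / cpp_ratio


# Hypothetical: a 2.0 GHz core sustaining 20 MPPS spends 100 cycles per packet.
print(cycles_per_packet(2.0e9, 20e6))  # 100.0
# 50% more cycles per packet (ratio 1.5) leaves about two thirds of the per-core rate.
print(round(relative_pps(1.5), 3))     # 0.667
```

In other words, "up to 50% more cycles per packet" means a core can drive at most about 67% of the 64B packet rate it would reach with the baseline NIC.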

SR-IOV support

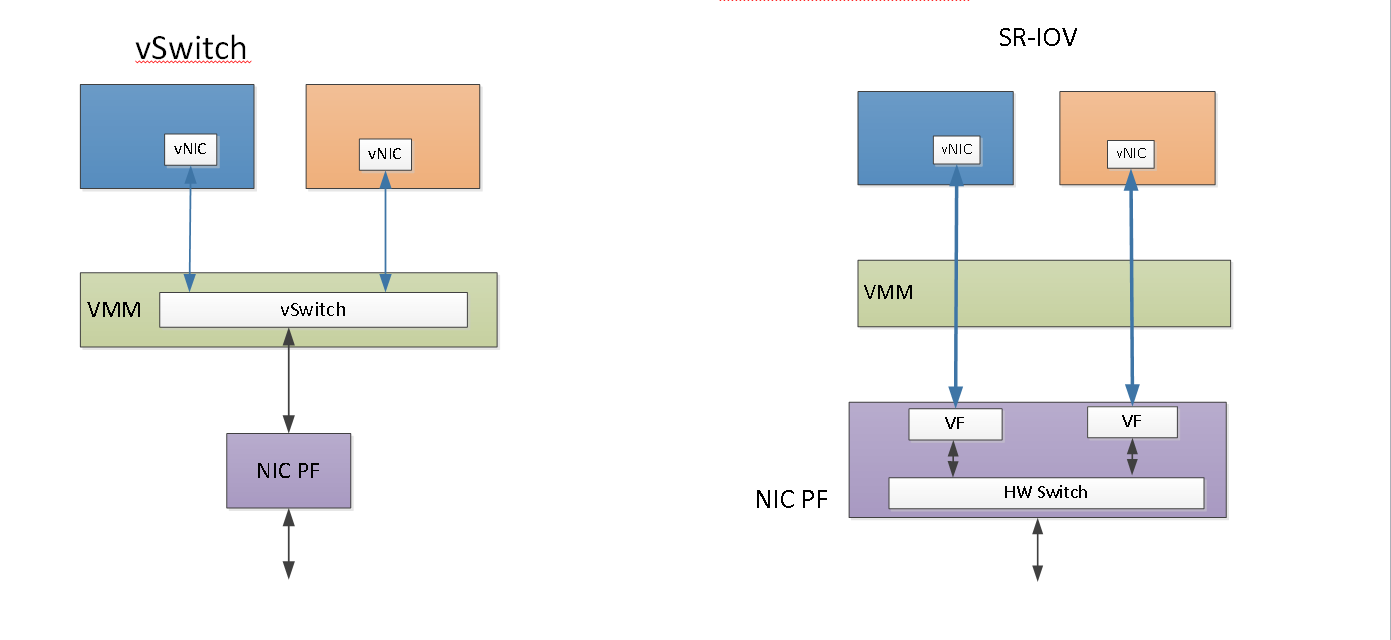

TRex supports paravirtualized interfaces such as VMXNET3, virtio and E1000; however, when they are connected to a vSwitch, the vSwitch limits performance. VPP or OVS-DPDK can improve performance but require more software resources to handle the rate.

SR-IOV can improve performance and reduce both CPU usage and latency by using the NIC's hardware switching capability (the switching is done in hardware).

TRex version 2.15 now includes SR-IOV support for XL710 and X710.

The following diagram compares vSwitch and SR-IOV.

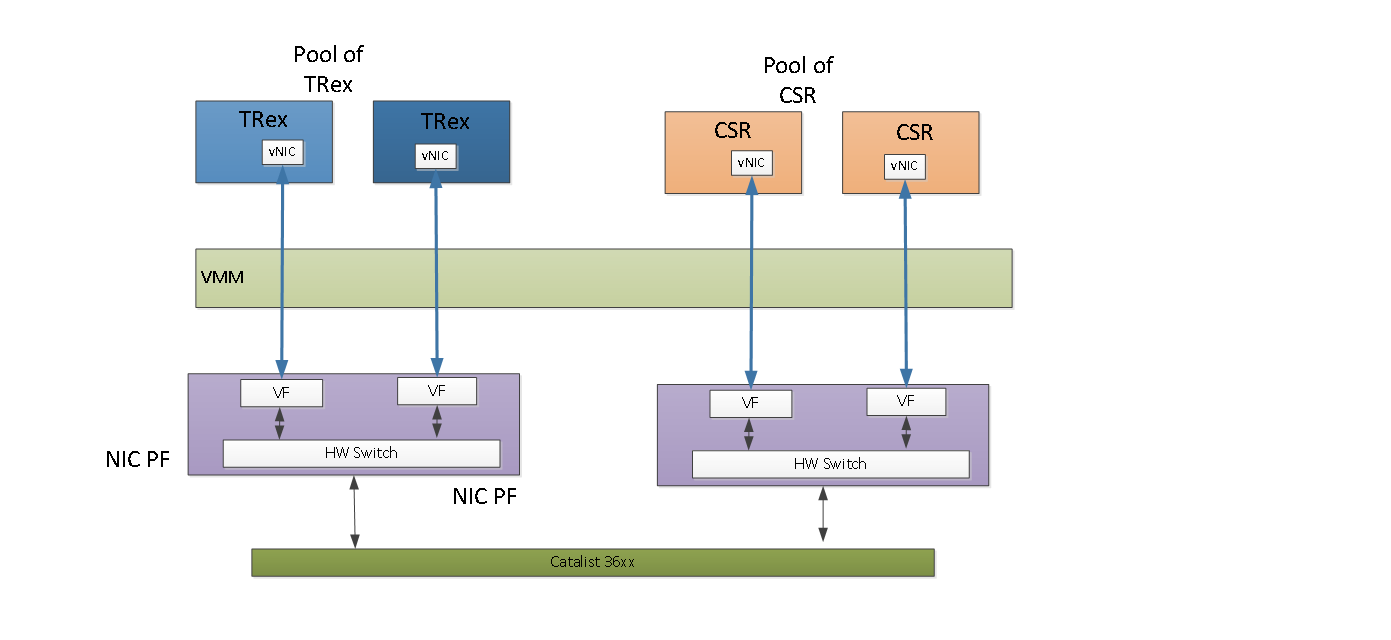

One use case that shows the performance gain achievable with SR-IOV is creating a pool of TRex VMs that test a pool of virtual DUTs (e.g., ASAv, CSR).

With the newly supported SR-IOV, compute, storage and networking resources can be controlled dynamically (e.g., by using OpenStack).

The above diagram is an example of one server with two NICs. TRex VMs can be allocated on one NIC while the DUTs are allocated on the other.
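On a Linux host, SR-IOV virtual functions (VFs) are typically created through the standard sysfs attribute `sriov_numvfs` of the physical function (PF) before they are assigned to VMs. A minimal sketch, assuming a Linux host with an SR-IOV capable NIC such as the XL710; the interface names are hypothetical, and the default dry run only returns the equivalent shell commands:

```python
from pathlib import Path


def sriov_numvfs_path(iface: str, sysfs_root: str = "/sys/class/net") -> Path:
    """sysfs attribute of a physical function (PF) that controls how many VFs it exposes."""
    return Path(sysfs_root) / iface / "device" / "sriov_numvfs"


def enable_vfs(iface: str, num_vfs: int, dry_run: bool = True) -> list:
    """Create `num_vfs` VFs on `iface` (needs root unless dry_run) and return the shell equivalent."""
    path = sriov_numvfs_path(iface)
    # The kernel refuses to change a non-zero VF count directly, so reset it to 0 first.
    commands = [f"echo 0 > {path}", f"echo {num_vfs} > {path}"]
    if not dry_run:
        path.write_text("0")
        path.write_text(str(num_vfs))
    return commands


# Hypothetical PF names: one NIC for the TRex VMs, another for the DUT VMs.
for pf in ("enp3s0f0", "enp4s0f0"):
    print(enable_vfs(pf, 4))
```

The VFs still have to be attached to the TRex and DUT VMs by the hypervisor or orchestrator (for example OpenStack).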

Stateless GUI with Packet builder

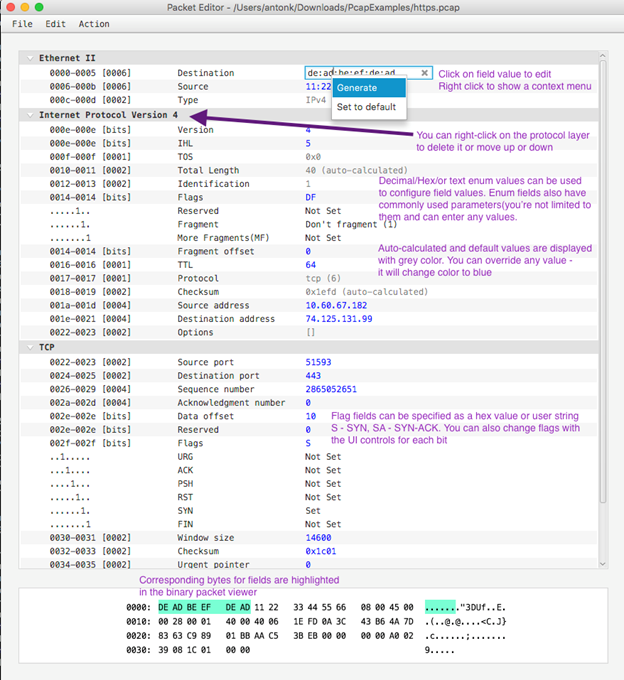

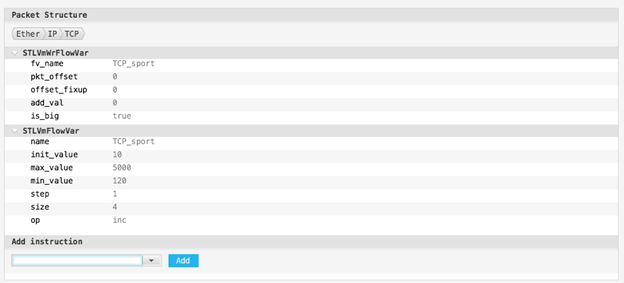

Using the new stateless GUI advanced packet builder, you can build any type of packet. It uses the TRex scapy server to build the packets and can be easily extended.

More information can be found at:

https://github.com/cisco-system-traffic-generator/trex-stateless-gui/wiki
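The GUI delegates packet construction to the TRex scapy server. To illustrate what layered packet building involves (stacking headers and filling in derived fields such as lengths and checksums), here is a minimal stdlib-only sketch; it is not the GUI's or Scapy's code, and the addresses and ports are hypothetical:

```python
import ipaddress
import struct


def ipv4_checksum(header: bytes) -> int:
    """RFC 1071 Internet checksum: one's complement of the one's-complement sum of 16-bit words."""
    if len(header) % 2:
        header += b"\x00"
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total >> 16:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_udp_packet(src_mac: bytes, dst_mac: bytes, src_ip: str, dst_ip: str,
                     sport: int, dport: int, payload: bytes) -> bytes:
    """Stack Ethernet / IPv4 / UDP layers, filling in the lengths and the IPv4 checksum."""
    udp_len = 8 + len(payload)
    # UDP checksum 0 means "not computed", which IPv4 allows.
    udp = struct.pack("!HHHH", sport, dport, udp_len, 0) + payload
    ip_hdr = struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 20 + udp_len,  # version 4 / IHL 5, TOS, total length
        0, 0,                   # identification, flags / fragment offset
        64, 17, 0,              # TTL, protocol 17 (UDP), checksum placeholder
        ipaddress.IPv4Address(src_ip).packed,
        ipaddress.IPv4Address(dst_ip).packed,
    )
    checksum = ipv4_checksum(ip_hdr)
    ip_hdr = ip_hdr[:10] + struct.pack("!H", checksum) + ip_hdr[12:]
    eth = dst_mac + src_mac + struct.pack("!H", 0x0800)  # EtherType 0x0800 = IPv4
    return eth + ip_hdr + udp


# Hypothetical addresses; 18 payload bytes give a 60-byte frame (64B once the NIC appends the FCS).
pkt = build_udp_packet(bytes.fromhex("000000000001"), bytes.fromhex("000000000002"),
                       "16.0.0.1", "48.0.0.1", 1025, 12, b"x" * 18)
print(len(pkt))  # 60
```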

Stateful scale improvement

Starting with v2.15, TRex uses a new stateful scheduler that works better when the number of active flows is high. When tested with a typical EMIX traffic profile, a 70% performance improvement was observed.

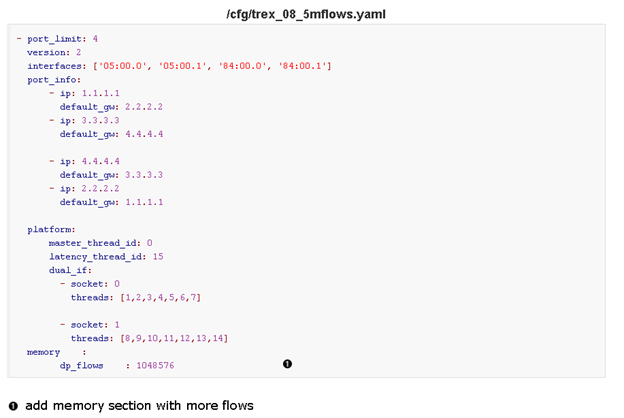

To increase the maximum number of active flows that TRex can allocate, edit the dp_flows field in the TRex config file. The following picture shows an example of the config file.

Config File

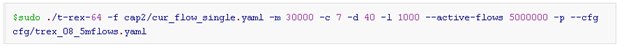
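A rough way to size dp_flows is Little's law: the number of concurrently active flows equals the new-flow rate (flows per second) multiplied by the average flow lifetime. A minimal sketch, assuming dp_flows should be at least this estimate (check the TRex manual for exactly how the limit is applied); the traffic numbers are hypothetical:

```python
import math


def required_active_flows(cps: float, avg_flow_duration_s: float) -> int:
    """Little's law: concurrent flows = arrival rate (flows/sec) x average flow lifetime (sec)."""
    return math.ceil(cps * avg_flow_duration_s)


# Hypothetical profile: 500K new flows per second, each active for ~4 seconds on average.
print(required_active_flows(500_000, 4.0))  # 2000000
```

Leaving some headroom above the estimate is a sensible precaution, since individual flow lifetimes vary around their average.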

The following command line uses the --active-flows switch to scale the number of flows:

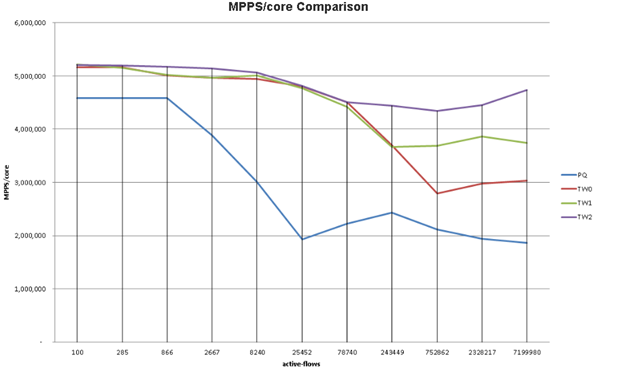
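For scripted runs, a similar command line can be composed programmatically. A minimal sketch: `-f` (traffic profile), `-m` (rate multiplier), `-d` (duration in seconds) and `--active-flows` are stateful TRex options, while the profile name and the numbers below are hypothetical placeholders, not the command shown in the screenshot:

```python
import shlex


def trex_stateful_cmd(profile: str, multiplier: int, duration_s: int, active_flows: int) -> str:
    """Compose a stateful TRex command line that raises the active-flow target."""
    argv = [
        "sudo", "./t-rex-64",
        "-f", profile,                         # traffic profile (YAML)
        "-m", str(multiplier),                 # rate multiplier
        "-d", str(duration_s),                 # test duration in seconds
        "--active-flows", str(active_flows),   # target number of active flows
    ]
    return shlex.join(argv)  # quotes any argument that needs it (Python 3.8+)


print(trex_stateful_cmd("my_profile.yaml", 30000, 60, 2_000_000))
```

Building the argument list first and joining it with `shlex.join` keeps paths with spaces safe if the command is later pasted into a shell.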

The following chart shows the MPPS per core when using TRex with a traffic profile of small UDP flows (each containing 10 packets of 64B). The PQ label refers to the old scheduler, whereas the TW2 label refers to the latest scheduler version (TW0 and TW1 are results of different execution permutations). The new version behaves better with a high number of active flows, and in the common case (~30K-300K flows) the performance difference is significant.

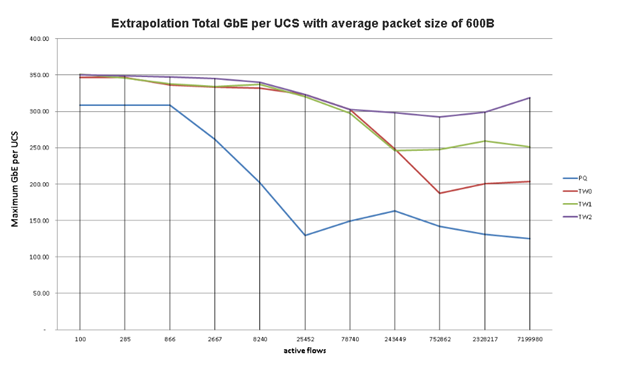
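The PQ and TW labels suggest a priority-queue scheduler versus a timer-wheel scheduler; that reading is our assumption and is not spelled out above. As a minimal, simplified sketch (not TRex's implementation) of why a timer wheel copes better with many active flows: a heap pays O(log n) for every scheduled packet, while a wheel inserts in O(1) and each tick only touches one bucket.

```python
import heapq


class PriorityQueueScheduler:
    """Binary-heap scheduler: O(log n) per insert and per pop."""

    def __init__(self):
        self._heap = []
        self._now = 0
        self._seq = 0  # tie-breaker keeps FIFO order for equal deadlines

    def schedule(self, delay: int, event: str) -> None:
        heapq.heappush(self._heap, (self._now + delay, self._seq, event))
        self._seq += 1

    def tick(self) -> list:
        self._now += 1
        due = []
        while self._heap and self._heap[0][0] <= self._now:
            due.append(heapq.heappop(self._heap)[2])
        return due


class TimerWheel:
    """Single-level timer wheel: O(1) insert; each tick scans one bucket only."""

    def __init__(self, num_buckets: int = 8):
        self._buckets = [[] for _ in range(num_buckets)]
        self._now = 0

    def schedule(self, delay: int, event: str) -> None:
        """Fire `event` after `delay` ticks (delay >= 1)."""
        if delay < 1:
            raise ValueError("delay must be >= 1")
        n = len(self._buckets)
        slot = (self._now + delay) % n
        # Number of earlier passes over this slot to skip before firing.
        self._buckets[slot].append([(delay - 1) // n, event])

    def tick(self) -> list:
        """Advance one tick and return the events that are due."""
        self._now += 1
        bucket = self._buckets[self._now % len(self._buckets)]
        due, pending = [], []
        for entry in bucket:
            if entry[0] == 0:
                due.append(entry[1])
            else:
                entry[0] -= 1
                pending.append(entry)
        bucket[:] = pending
        return due


def demo(sched) -> list:
    sched.schedule(1, "flow-a pkt")
    sched.schedule(6, "flow-b pkt")  # more than one revolution of a 4-bucket wheel
    sched.schedule(6, "flow-c pkt")
    return [sched.tick() for _ in range(6)]


print(demo(PriorityQueueScheduler()))
print(demo(TimerWheel(num_buckets=4)))
# both print: [['flow-a pkt'], [], [], [], [], ['flow-b pkt', 'flow-c pkt']]
```

Both schedulers fire the same events at the same ticks; the difference is only in cost, which for the heap grows with the number of pending events (that is, with the number of active flows).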

The following chart shows an extrapolation with an average packet size of 600B. With the new scheduler version, TRex can scale up to 350 Gbps with one UCS.
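Extrapolations like this one convert between packet rate and bit rate. A minimal sketch of the conversion, assuming a per-frame Layer 1 overhead of 20 bytes (8-byte preamble/SFD plus 12-byte inter-frame gap); whether the chart counts this overhead is not stated, so the L2-only variant is shown too:

```python
def gbps(mpps: float, frame_bytes: float, l1_overhead_bytes: int = 20) -> float:
    """Bit rate in Gbps for a packet rate (MPPS) and average frame size.

    l1_overhead_bytes=20 counts preamble/SFD (8B) and inter-frame gap (12B); pass 0 for L2 only.
    """
    return mpps * 1e6 * (frame_bytes + l1_overhead_bytes) * 8 / 1e9


def mpps_for(gbps_target: float, frame_bytes: float, l1_overhead_bytes: int = 20) -> float:
    """Packet rate (MPPS) needed to reach a bit rate at a given average frame size."""
    return gbps_target * 1e9 / ((frame_bytes + l1_overhead_bytes) * 8) / 1e6


# 350 Gbps at a 600B average frame size needs roughly 70.6 MPPS (72.9 MPPS if L1 overhead is ignored).
print(round(mpps_for(350, 600), 1))     # 70.6
print(round(mpps_for(350, 600, 0), 1))  # 72.9
# 90 MPPS of 64B frames (the ConnectX-4 Tx-only figure) is about 60.5 Gbps on the wire.
print(round(gbps(90, 64), 2))           # 60.48
```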

More information can be found under:

https://trex-tgn.cisco.com/trex/doc/trex_manual.html#_more_active_flows

Please register for our TRex session, which will be presented in the DevNet zone at Cisco Live Berlin 2017, to learn more about our latest enhancements and advanced capabilities.

Content Catalog - Cisco Live EMEA 2017

More resources can be found at http://trex-tgn.cisco.com and https://github.com/cisco-system-traffic-generator/trex-core

Thanks,

Hanoh