Creative solution to limit Min/Max IP MTU

01-07-2020 07:33 AM

Hey guys,

This is probably a strange one-off request but thought I would try anyway. We have a situation where our service provider consistently and continuously drops certain size IPSec packets. Long story short, contracts have been signed and accepted by all parties and no one is budging or fixing the issue and thus the link is useless. I'm an engineer and cannot fix stupid. However, as an engineer, I'm thinking perhaps there may be a way to circumvent this issue.

Cisco ASR1002-X router IOS-XE 15.x

Looking for a solution to change certain size IPSec packets to a different size and thus avoid the hole.

Example:

Packets in the range of 64 - 852 pass and are routed to remote sites

Packets in the range of 853 - 872 fail and are dropped by the service provider

Packets in the range of 873 - 1500 pass and are routed to remote sites

Thank you

Frank

Labels: ASR 1000 Series

Accepted Solutions

01-07-2020 09:01 AM - edited 01-07-2020 09:03 AM

Your service provider is very 'interesting', which is totally unacceptable. If I were you, I would keep escalating the issue.

That said, in this scenario all you need to do is increase the size of the packets that fall in the affected range.

To do this, I think adding another layer of ESP or GRE encapsulation (an IPsec or GRE tunnel on top of your current IPsec tunnel) is the only solution.

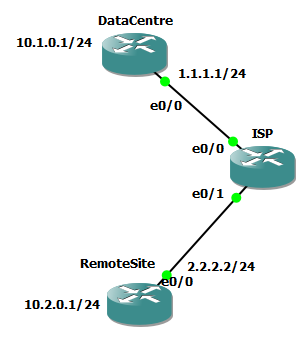

I have created a lab for this.

The issue is simulated with the following configuration on the ISP router:

route-map MTU-DROP permit 10

match length 853 872

set interface Null0

route-map MTU-DROP permit 20

interface range e0/0,e0/1

ip policy route-map MTU-DROP

This simulates your existing IPsec tunnel (I assume it is a VTI tunnel):

RemoteSite#sh run | s crypto

crypto isakmp policy 10

encr aes

authentication pre-share

group 2

crypto isakmp key CHALLENGE-ACCEPTED address 0.0.0.0

crypto ipsec transform-set TS esp-aes esp-sha-hmac

mode transport

crypto ipsec profile BASE_VPN

set transform-set TS

RemoteSite#sh run interface t0

interface Tunnel0

description base VPN tunnel

ip address 172.16.0.2 255.255.255.252

tunnel source Ethernet0/0

tunnel mode ipsec ipv4

tunnel destination 1.1.1.1

tunnel protection ipsec profile BASE_VPN

end

And on the data centre side:

DataCentre#sh run | s crypto

crypto isakmp policy 10

encr aes

authentication pre-share

group 2

crypto isakmp key CHALLENGE-ACCEPTED address 0.0.0.0

crypto ipsec transform-set TS esp-aes esp-sha-hmac

mode transport

crypto ipsec profile BASE_VPN

set transform-set TS

DataCentre#sh run int t0

interface Tunnel0

description base VPN tunnel

ip address 172.16.0.1 255.255.255.252

tunnel source Ethernet0/0

tunnel mode ipsec ipv4

tunnel destination 2.2.2.2

tunnel protection ipsec profile BASE_VPN

end

The ESP and outer IP headers add around 66 bytes, so a packet of around 787 bytes (before encapsulation) ends up at 853 bytes or slightly more on the wire and is dropped.

RemoteSite#ping 10.1.0.1 so lo0 size 787

Type escape sequence to abort.

Sending 5, 790-byte ICMP Echos to 10.1.0.1, timeout is 2 seconds:

Packet sent with a source address of 10.2.0.1

....
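As a rough sanity check of the arithmetic above, the sketch below derives which pre-encapsulation sizes end up in the provider's hole. It assumes a fixed 66-byte overhead (the estimate given above; real ESP padding can vary by a few bytes), and the names `inner_drop_range` and `is_dropped` are illustrative only.

```python
# Outer packet lengths the provider drops (from the original post).
HOLE_MIN, HOLE_MAX = 853, 872
# Assumption: constant per-packet overhead of the base tunnel, as estimated above.
BASE_OVERHEAD = 66


def inner_drop_range(hole_min, hole_max, overhead):
    """Return the inclusive range of pre-encapsulation sizes that land in the hole."""
    return hole_min - overhead, hole_max - overhead


def is_dropped(inner_len, overhead=BASE_OVERHEAD):
    """True if the encapsulated packet falls inside the provider's hole."""
    return HOLE_MIN <= inner_len + overhead <= HOLE_MAX


low, high = inner_drop_range(HOLE_MIN, HOLE_MAX, BASE_OVERHEAD)
print(low, high)  # 787 806
```

The result, 787 to 806, is covered by the `match length 787 807` used in the PBR route-map in this post.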

The hole between 853 and 872 is only about 20 bytes wide, so any additional encapsulation (e.g. GRE or IPsec) easily adds more than 20 bytes of overhead and pushes the packet past it.

To address this, we first build another IPsec tunnel on top of the existing tunnel.

DataCentre# sh run | s crypto.*OVERLAY|interface Tunnel100

crypto ipsec profile OVERLAY_VPN

set transform-set TS

interface Tunnel100

description overlay VPN tunnel

ip address 172.31.0.1 255.255.255.252

tunnel source Tunnel0

tunnel mode ipsec ipv4

tunnel destination 172.16.0.2

tunnel protection ipsec profile OVERLAY_VPN

end

Same on the Remote Site Router:

RemoteSite# sh run | s crypto.*OVERLAY|interface Tunnel100

crypto ipsec profile OVERLAY_VPN

set transform-set TS

interface Tunnel100

description overlay VPN tunnel

ip address 172.31.0.2 255.255.255.252

tunnel source Tunnel0

tunnel mode ipsec ipv4

tunnel destination 172.16.0.1

tunnel protection ipsec profile OVERLAY_VPN

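Create a PBR policy on both sides (shown here for the remote site; on the data centre side the next hop would be the other end of the overlay, 172.31.0.2):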

ip local policy route-map PBR

interface e0/1

ip policy route-map PBR

route-map PBR permit 10

match ip address CROSS_SITE_TRAFFIC

match length 787 807

set ip next-hop 172.31.0.1

With this in place, the MTU problem is successfully mitigated.

RemoteSite#ping 10.1.0.1 so lo0 size 790 re 1

Type escape sequence to abort.

Sending 1, 790-byte ICMP Echos to 10.1.0.1, timeout is 2 seconds:

Packet sent with a source address of 10.2.0.1

*Jan 7 16:38:37.504: IP: s=10.2.0.1 (local), d=10.1.0.1 (Tunnel100), len 790, local feature, Policy Routing(3), rtype 2, forus FALSE, sendself FALSE, mtu 0, fwdchk FALSE

*Jan 7 16:38:37.504: IP: s=10.2.0.1 (local), d=10.1.0.1 (Tunnel100), len 790, sending

*Jan 7 16:38:37.504: IP: s=10.2.0.1 (local), d=10.1.0.1 (Tunnel100), len 790, post-encap feature, IPSEC Post-encap output classification(16), rtype 2, forus FALSE, sendself FALSE, mtu 0, fwdchk FALSE

*Jan 7 16:38:37.504: IP: s=10.2.0.1 (local), d=10.1.0.1 (Tunnel100), len 790, sending full packet

*Jan 7 16:38:37.518: IP: s=172.16.0.2 (local), d=172.16.0.1 (Tunnel0), g=172.16.0.1, len 856, forward

*Jan 7 16:38:37.518: IP: s=172.16.0.2 (local), d=172.16.0.1 (Tunnel0), len 856, post-encap feature, IPSEC Post-encap output classification(16), rtype 0, forus FALSE, sendself FALSE, mtu 0, fwdchk FALSE

*Jan 7 16:38:37.518: IP: s=172.16.0.2 (local), d=172.16.0.1 (Tunnel0), len 856, sending full packet

*Jan 7 16:38:37.518: IP: s=2.2.2.2 (local), d=1.1.1.1 (Ethernet0/0), g=2.2.2.254, len 920, forward

*Jan 7 16:38:37.518: IP: s=2.2.2.2 (local), d=1.1.1.1 (Ethernet0/0), len 920, sending full packet.
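The sizes in the debug output can be checked with a small sketch. It assumes the per-tunnel overheads are constant and equal to what this single trace shows (790 to 856 for the overlay, 856 to 920 for the base tunnel); `wire_size` is a hypothetical helper, not anything from IOS.

```python
# Outer lengths the provider drops: 853..872 inclusive (from the original post).
HOLE = range(853, 873)

# Assumption: overheads are fixed at the values seen in the debug trace.
OVERLAY_OVERHEAD = 856 - 790  # 66 bytes added by Tunnel100
BASE_OVERHEAD = 920 - 856     # 64 bytes added by Tunnel0


def wire_size(inner_len, via_overlay):
    """Length on the provider link, with or without the extra overlay tunnel."""
    size = inner_len
    if via_overlay:
        size += OVERLAY_OVERHEAD
    return size + BASE_OVERHEAD


# Lengths the route-map steers into the overlay (match length 787 807).
steered = range(787, 808)

# Every steered length should clear the hole once it is double-encapsulated.
assert all(wire_size(n, via_overlay=True) not in HOLE for n in steered)

print(wire_size(790, via_overlay=True))  # 920
```

Under these assumptions the smallest steered packet leaves the router at 917 bytes, comfortably above 872.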

=====

Yes, it looks stupid. This was just for fun; I would not use it in a production environment, as it makes everything more complicated.

01-07-2020 11:22 AM

Hi Sir (Ngkin2010),

Thank you for assisting with this issue! Really appreciate it. The SP doesn’t use Cisco infrastructure and has too many customers, so I expect this type of issue to become mainstream in the near future.

Your idea looks promising!!! - I’ll need to incorporate your config snippet into a lab-prod setup and test. Be in touch shortly.

Again THANK YOU for your efforts.

Frank

01-08-2020 03:10 AM

@ngkin2010

+5, elegant solution

Please rate and mark as an accepted solution if you have found any of the information provided useful.

This then could assist others on these forums to find a valuable answer and broadens the community’s global network.

Kind Regards

Paul

01-15-2020 08:00 AM

Hi ngkin2010

Thanks again for your support in this issue.

SP indicated they swapped out some of their infrastructure and magically this issue disappeared. Although we didn't get a chance to implement your solution in our production net, I feel it would have worked. Lab efforts were well-on-the-way.

Thanks again

Frank

01-08-2020 02:13 AM

Hello,

since you are asking for creative solutions, you also might want to try the below. This would basically compress all packets sized between 853 and 872. I don't know if the compression pushes the size below 853, that is what you would need to test in a real environment:

class-map match-all FRAG

match packet length min 853 max 872

!

policy-map MTU

class FRAG

compress header ip

class class-default

fair-queue

!

interface GigabitEthernet0/0/0

service-policy output MTU
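To put a number on what the compression would have to achieve, here is a minimal sketch of the arithmetic. It only uses the 853 to 872 range from the original post; `bytes_to_save` is a made-up helper name, and whether header compression actually saves this much on an encrypted packet is not something the sketch can tell.

```python
# Outer lengths the provider drops (from the original post).
HOLE_MIN, HOLE_MAX = 853, 872


def bytes_to_save(packet_len):
    """Bytes that must be removed so the packet ends up below the hole.

    Returns 0 for packets that are already outside the dropped range.
    """
    if HOLE_MIN <= packet_len <= HOLE_MAX:
        return packet_len - (HOLE_MIN - 1)
    return 0


print(bytes_to_save(853), bytes_to_save(872))  # 1 20
```

So the compression would need to save between 1 and 20 bytes depending on the packet, which is what the test in a real environment would have to confirm.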

01-15-2020 08:19 AM

Hi Georg,

Thanks for assisting. I didn't think of your idea before you presented it but I would have attempted if I had more time.

Thanks

Frank
