OSPF hello and dead timers

11-18-2022 02:45 AM

Level of understanding: CCNA

Non-broadcast network types use different default Hello and Dead timers than broadcast and point-to-point network types. I know the timer values, but I don't know why they differ. Why aren't they the same for all network types?
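For reference, here is a minimal Python sketch of the default timers the question refers to, assuming Cisco IOS defaults (other platforms or releases may differ, and `show ip ospf interface` shows the values actually in use). The dictionary and function names are illustrative only. By default the dead interval is four times the hello interval.

```python
# Cisco IOS default OSPF hello intervals (seconds) per interface network type.
DEFAULT_HELLO = {
    "broadcast": 10,
    "point-to-point": 10,
    "non-broadcast": 30,
    "point-to-multipoint": 30,
    "point-to-multipoint non-broadcast": 30,
}

def default_dead(network_type: str) -> int:
    """Return the default dead interval, which is 4 x the hello interval."""
    return 4 * DEFAULT_HELLO[network_type]

for net_type, hello in DEFAULT_HELLO.items():
    print(f"{net_type:35} hello={hello:3}  dead={default_dead(net_type):3}")
```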


Accepted Solutions


11-18-2022 04:54 AM (last edited on 11-22-2022 12:56 AM by Translator)

Hello,

As @MHM's diagram points out, the media these longer timers are set on typically involve longer distances. Usually the networks with the lower hello/dead timers connect local routers or routers in close proximity. Networks that need to traverse half the globe, or that run over slower links, need a higher timer to compensate for the delay. You can configure the timers manually per interface, or you can change the network type of the link with the command:

ip ospf network <networktype> - to set network type

show ip ospf interface <interface#/#> - to verify network type

Changing the network type changes the timers to reflect that network type.
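As an illustration of both options, this Python sketch renders the IOS interface commands for a chosen network type, with optional explicit timers. `ospf_interface_config` and the interface names are hypothetical; `ip ospf network`, `ip ospf hello-interval` and `ip ospf dead-interval` are the standard IOS interface commands.

```python
def ospf_interface_config(interface, network_type, hello=None, dead=None):
    """Build IOS interface config lines (hypothetical helper for illustration).

    network_type sets the default timers; hello/dead, if given, override them.
    """
    lines = [f"interface {interface}", f" ip ospf network {network_type}"]
    if hello is not None:
        lines.append(f" ip ospf hello-interval {hello}")
    if dead is not None:
        lines.append(f" ip ospf dead-interval {dead}")
    return "\n".join(lines)

# Option 1: change only the network type and inherit its default timers.
print(ospf_interface_config("Serial0/0/0", "point-to-point"))
# Option 2: keep non-broadcast but set the timers explicitly.
print(ospf_interface_config("Serial0/0/1", "non-broadcast", hello=10, dead=40))
```

Whichever way the timers are set, both neighbors on a link must use the same hello and dead intervals, or they will not form an adjacency.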

Hope that helps.

-David


11-18-2022 09:05 AM

Although both @MHM Cisco World and @David Ruess are correct about possible additional delay between router neighbors with some topologies, I suspect Cisco's settings might have more to do with the "complexity" of "hello" peering between routers on certain topologies and/or with expectations of how long a network should wait to detect a lost router neighbor.

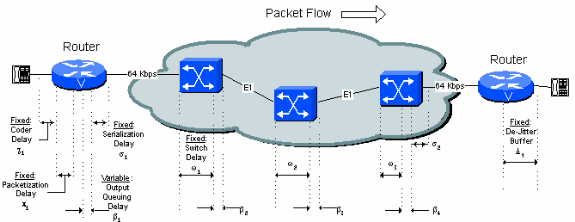

I.e., what kind of network needs something like Cisco's default OSPF dead timers of 40 or (especially) 120 seconds? (In the past I've worked with global networks, using OSPF, running across "slow" [e.g., 64 Kbps] links. Even from one side of the world to the other, one-way latency generally didn't exceed a quarter of a second.)
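To put the quarter-second figure in perspective, this sketch compares it with the 40- and 120-second dead timers and estimates how long one hello takes to serialize onto a 64 Kbps link. The 64-byte hello size is an assumption: a 20-byte IP header, the 24-byte OSPF header and the 20-byte hello body with no neighbor IDs listed, ignoring layer 2 framing.

```python
ONE_WAY_LATENCY_S = 0.25  # worst case quoted in the post above
LINK_BPS = 64_000         # "slow" 64 Kbps link
HELLO_BYTES = 64          # assumed: IP 20 + OSPF header 24 + hello body 20, no neighbors

def serialization_delay_s(size_bytes: int, link_bps: int) -> float:
    """Time to clock size_bytes onto a link of link_bps bits per second."""
    return size_bytes * 8 / link_bps

for dead in (40, 120):
    ratio = dead / ONE_WAY_LATENCY_S
    print(f"dead={dead:3}s is {ratio:.0f}x the one-way latency")

ms = serialization_delay_s(HELLO_BYTES, LINK_BPS) * 1000
print(f"hello serialization at 64 Kbps: {ms:.0f} ms")
```

Under these assumptions, even the 40-second dead timer is two orders of magnitude larger than the propagation and serialization delay of a single hello.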

A broadcast medium is likely some form of LAN, and on a p2p link the loss of a neighbor is often detected by the interface going down on both ends. I.e., with a LAN we might not have hardware detection of a lost neighbor for multiple peers, but we can use broadcasts (or multicasts) to "quickly" determine the status of multiple routers on a shared segment, and, as noted, with p2p we likely have concurrent down-interface detection.

With a non-broadcast medium and/or multipoint, relaying peer status between neighbors is more complex.

If you read, for example, the OSPFv2 RFC, its hello processing makes distinctions between broadcast, NBMA and multipoint networks (OSPF RFC hello protocol).
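One concrete piece of that extra complexity is packet replication. The sketch below counts the hellos a single router originates per minute under deliberately simplified assumptions: on a broadcast segment one multicast hello reaches every neighbor, while on a non-broadcast segment a separate unicast hello goes to each configured neighbor (roughly what a DR does; the RFC's actual NBMA rules differ by router role). The function name is hypothetical.

```python
def hellos_per_minute(network_type: str, neighbors: int, hello_interval: int) -> float:
    """Hello packets one router sends per minute (simplified model).

    broadcast: one multicast packet covers all neighbors.
    anything else: one unicast copy per configured neighbor.
    """
    copies = 1 if network_type == "broadcast" else neighbors
    return copies * 60 / hello_interval

print(hellos_per_minute("broadcast", 10, 10))      # 6.0
print(hellos_per_minute("non-broadcast", 10, 10))  # 60.0
print(hellos_per_minute("non-broadcast", 10, 30))  # 20.0
```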

Likely, Cisco set its timer defaults to be especially conservative, to ensure they didn't accidentally take down a working connection by mistakenly believing it had failed. (In an [earlier?] networking world, where there were few, if any, redundant paths, incorrectly taking down a live connection would be worse than "detecting" a real line drop 40 to 120 seconds after it had already happened.)
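The "40 to 120 seconds" figure follows from how the dead timer works: it restarts on each received hello, so detection time depends on when the failure lands relative to the last hello. This sketch assumes hellos are sent at fixed multiples of the hello interval and arrive instantly, and that nothing else (interface down, BFD) detects the failure first.

```python
def detection_delay(failure_time: int, hello_interval: int, dead_interval: int) -> int:
    """Seconds between a neighbor failing and its dead timer expiring.

    The failed neighbor is assumed to have sent hellos at 0, hello_interval,
    2 * hello_interval, ... up to and including failure_time.
    """
    last_hello = (failure_time // hello_interval) * hello_interval
    return last_hello + dead_interval - failure_time

for name, hello, dead in (("broadcast/p2p", 10, 40), ("non-broadcast", 30, 120)):
    # Try every whole-second failure time within one hello interval.
    delays = [detection_delay(t, hello, dead) for t in range(hello)]
    print(f"{name}: detected after {min(delays)}..{max(delays)} s")
```

With the defaults, a failure just after a hello takes the full dead interval (40 or 120 seconds) to detect, and a failure just before the next hello takes roughly one hello interval less.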


11-18-2022 03:16 AM

From router to router, through many L2 and/or L3 hops, there is a lot of delay, and this delay must be added to the dead time.
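To see where path delay enters the picture, here is a sketch of the neighbor inactivity check: the dead timer restarts on every received hello, and the neighbor is declared down once the dead interval passes without one. The function name and arrival times are hypothetical. Under this model, what matters is the gap between consecutive hello arrivals, so a constant delay on every hello shifts all arrivals equally, while lost or strongly delayed hellos widen the gaps.

```python
def neighbor_stays_up(arrival_times, dead_interval):
    """True if no gap between consecutive hello arrivals reaches dead_interval."""
    gaps = (later - earlier for earlier, later in zip(arrival_times, arrival_times[1:]))
    return all(gap < dead_interval for gap in gaps)

sent = [0, 10, 20, 30, 40]  # hellos every 10 s, dead interval 40 s

# Every hello delayed by the same 0.25 s: gaps stay at 10 s.
print(neighbor_stays_up([t + 0.25 for t in sent], 40))  # True
# Hellos sent at 20, 30 and 40 lost, next one arrives at 50: a 40 s gap.
print(neighbor_stays_up([0, 10, 50], 40))               # False
```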


11-18-2022 09:24 AM

A long time ago I wondered the same thing.

Note: the original OSPF RFC specification has only two modes with 30-second timers: Non-Broadcast and Point-to-Multipoint.

Regards, ML

**Please Rate All Helpful Responses**
