Cisco 3650 High memory utilization

12-13-2021 03:53 PM - edited 12-13-2021 04:37 PM

I have a Cisco 3650 that has been up for 1 year and 22 weeks and is showing critically high memory utilization:

#sh platform resources

**State Acronym: H - Healthy, W - Warning, C - Critical

| Resource | Usage | Max | Warning | Critical | State |
|---|---|---|---|---|---|
| Control Processor | 4.70% | 100% | 5% | 10% | C |
| DRAM | 3814MB(98%) | 3884MB | 90% | 95% | C |

It is also logging the following warning:

Dec 13 18:03:55 est: %PLATFORM-4-ELEMENT_WARNING: Switch 1 R0/0: smand: 2/RP/0: Used Memory value 93% exceeds warning level 90%
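As a rough illustration of how the H/W/C state relates to the 90% warning and 95% critical DRAM levels shown above, the sketch below classifies a usage percentage against those two thresholds. The function name `classify` and the "at or above the threshold" rule are assumptions made for illustration; they are not taken from Cisco's implementation.

```python
def classify(used_pct: float, warning_pct: float, critical_pct: float) -> str:
    """Return 'C', 'W' or 'H' for a usage percentage.

    Assumed rule: a value at or above a threshold trips that threshold.
    """
    if used_pct >= critical_pct:
        return "C"  # Critical
    if used_pct >= warning_pct:
        return "W"  # Warning
    return "H"      # Healthy


# DRAM usage reported by "sh platform resources" (98%) and the value in the
# syslog message for 2/RP/0 (93%), both against the 90% / 95% levels.
print(classify(98, 90, 95))
print(classify(93, 90, 95))
```

Under that assumed rule, the 98% reading lands in the critical band and the 93% reading in the warning band, which is consistent with the log message.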

I'm currently running the following IOS-XE version:

| Switch | Ports | Model | SW Version | SW Image | Mode |
|---|---|---|---|---|---|
| * 1 | 52 | WS-C3650-48PD | 16.9.5 | CAT3K_CAA-UNIVERSALK9 | INSTALL |
| 2 | 52 | WS-C3650-48PD | 16.9.5 | CAT3K_CAA-UNIVERSALK9 | INSTALL |

Looking at the memory stats below, I can't figure out what is taking up all the memory. I know that Field Notices FN - 70359 and 70416 deal with high memory utilization on versions 16.3.x and 16.6.x, but I'm running 16.9.5. Any idea whether I'm hitting a bug? I would appreciate any pointers.

Thanks, Paul

#sh platform software status control-processor br

Load Average

| Slot | Status | 1-Min | 5-Min | 15-Min |
|---|---|---|---|---|
| 1-RP0 | Healthy | 0.21 | 0.24 | 0.26 |
| 2-RP0 | Healthy | 0.17 | 0.19 | 0.22 |

Memory (kB)

| Slot | Status | Total | Used (Pct) | Free (Pct) | Committed (Pct) |
|---|---|---|---|---|---|
| 1-RP0 | Critical | 3977748 | 3905688 (98%) | 72060 ( 2%) | 4841952 (122%) |
| 2-RP0 | Warning | 3977748 | 3698964 (93%) | 278784 ( 7%) | 4598720 (116%) |

CPU Utilization

| Slot | CPU | User | System | Nice | Idle | IRQ | SIRQ | IOwait |
|---|---|---|---|---|---|---|---|---|
| 1-RP0 | 0 | 3.49 | 1.19 | 0.00 | 95.30 | 0.00 | 0.00 | 0.00 |
| | 1 | 3.20 | 1.10 | 0.00 | 95.70 | 0.00 | 0.00 | 0.00 |
| | 2 | 6.29 | 1.09 | 0.00 | 92.60 | 0.00 | 0.00 | 0.00 |
| | 3 | 6.70 | 1.20 | 0.00 | 92.00 | 0.00 | 0.10 | 0.00 |
| 2-RP0 | 0 | 2.19 | 0.69 | 0.00 | 97.00 | 0.00 | 0.09 | 0.00 |
| | 1 | 4.10 | 0.40 | 0.00 | 95.50 | 0.00 | 0.00 | 0.00 |
| | 2 | 2.60 | 0.60 | 0.00 | 96.80 | 0.00 | 0.00 | 0.00 |
| | 3 | 2.49 | 1.19 | 0.00 | 96.30 | 0.00 | 0.00 | 0.00 |
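To make the percentages in the memory table easier to check, here is a minimal sketch that recomputes Used, Free and Committed as a share of Total from the kB values in the output. The dictionary layout and the helper name `percentages` are hypothetical. Reading a Committed figure above 100% as "more memory promised than is physically installed" is an assumption about what that column means, not something the output itself states.

```python
# Values in kB, copied from the "Memory (kB)" table in the post.
MEMORY_KB = {
    "1-RP0": {"total": 3977748, "used": 3905688, "free": 72060, "committed": 4841952},
    "2-RP0": {"total": 3977748, "used": 3698964, "free": 278784, "committed": 4598720},
}


def percentages(row: dict) -> dict:
    """Express used, free and committed memory as whole percentages of total."""
    total = row["total"]
    return {key: round(100 * row[key] / total) for key in ("used", "free", "committed")}


for slot, row in MEMORY_KB.items():
    print(slot, percentages(row))
```

The recomputed values agree with the percentages the switch printed for both slots.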

#sh processes memory sorted

Processor Pool Total: 852233264 Used: 358187528 Free: 494045736

lsmpi_io Pool Total: 6295128 Used: 6294296 Free: 832

| PID | TTY | Allocated | Freed | Holding | Getbufs | Retbufs | Process |
|---|---|---|---|---|---|---|---|
| 0 | 0 | 339107056 | 45195168 | 264755576 | 0 | 0 | \*Init\* |
| 80 | 0 | 65939140216 | 46289440 | 22097800 | 0 | 18228 | IOSD ipc task |
| 521 | 0 | 21961296 | 6284608 | 14136920 | 0 | 0 | Stby Cnfg Parse |
| 0 | 0 | 2077885216 | 2253867072 | 7225728 | 17487479 | 0 | \*Dead\* |
| 458 | 0 | 4137712 | 152464 | 4042248 | 849828 | 0 | EEM ED Syslog |
| 1 | 0 | 1844024 | 79880 | 1809144 | 0 | 0 | Chunk Manager |
| 471 | 0 | 2230424 | 608912 | 1666512 | 0 | 0 | EEM Server |
| 274 | 0 | 4562424 | 2665760 | 1638488 | 0 | 0 | XDR receive |
| 0 | 0 | 0 | 0 | 1511184 | 0 | 0 | \*MallocLite\* |
| 89 | 0 | 4529720 | 793808 | 1290312 | 0 | 0 | REDUNDANCY FSM |
| 4 | 0 | 5978840 | 2075032 | 989336 | 2412 | 0 | RF Slave Main Th |
| 546 | 0 | 465086656 | 249582136 | 888032 | 0 | 0 | OSPF-1 Router |
| 84 | 0 | 1271504 | 308752 | 840440 | 0 | 0 | NGWC_OIRSHIM_TAS |
| 97 | 0 | 3356192 | 1832504 | 769320 | 2412 | 0 | cpf_msg_rcvq_pro |
| 469 | 0 | 44453688 | 42856432 | 518376 | 0 | 0 | SAMsgThread |
| 31 | 0 | 1899896 | 1904 | 441184 | 0 | 0 | IPC Seat RX Cont |
| 459 | 0 | 384104 | 5680 | 435424 | 72316 | 0 | EEM ED Generic |
| 281 | 0 | 1265496 | 539544 | 432520 | 0 | 0 | IP RIB Update |
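One thing worth checking in this output is how full the IOSd Processor pool actually is. The sketch below works that out from the "Processor Pool" line of `sh processes memory sorted`, assuming the counters are in bytes; the variable names are hypothetical.

```python
# Counters from the "Processor Pool" line (assumed to be bytes).
PROCESSOR_POOL_TOTAL = 852233264
PROCESSOR_POOL_USED = 358187528

MIB = 1024 * 1024

used_pct = 100 * PROCESSOR_POOL_USED / PROCESSOR_POOL_TOTAL
print(f"Processor pool used: {PROCESSOR_POOL_USED / MIB:.1f} MiB "
      f"of {PROCESSOR_POOL_TOTAL / MIB:.1f} MiB ({used_pct:.1f}%)")
```

If that reading is right, the Processor pool is well under half used while system DRAM sits at 98%. That would suggest, though it does not prove, that the pressure is not in the IOSd processes listed here but elsewhere on the underlying Linux system.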

#sh processes memory sorted allocated

Processor Pool Total: 852233264 Used: 358186880 Free: 494046384

lsmpi_io Pool Total: 6295128 Used: 6294296 Free: 832

| PID | TTY | Allocated | Freed | Holding | Getbufs | Retbufs | Process |
|---|---|---|---|---|---|---|---|
| 113 | 0 | 188201667640 | 188201592832 | 119808 | 0 | 0 | Crimson flush tr |
| 535 | 0 | 184545855872 | 202459654520 | 117000 | 0 | 0 | SNMP ENGINE |
| 534 | 0 | 141019452208 | 123160208232 | 117000 | 0 | 0 | PDU DISPATCHER |
| 126 | 0 | 131317880888 | 35389852440 | 124608 | 0 | 0 | IOSXE-RP Punt Se |
| 80 | 0 | 65939220800 | 46289440 | 22097800 | 0 | 18228 | IOSD ipc task |
| 10 | 0 | 52198647768 | 52198788192 | 230800 | 49884999532 | 49885025288 | Pool Manager |
| 507 | 0 | 46809852872 | 46809852872 | 69000 | 0 | 0 | mdns Timer Proce |
| 38 | 0 | 35369918600 | 35369951464 | 45000 | 0 | 0 | ARP Background |
| 533 | 0 | 24875579128 | 24821022816 | 70640 | 0 | 0 | IP SNMP |
| 417 | 0 | 21597044088 | 21217062008 | 124120 | 0 | 0 | TPS IPC Process |
| 73 | 0 | 20781612840 | 20775702664 | 167264 | 0 | 0 | Net Background |
| 222 | 0 | 10018000224 | 10017960656 | 93224 | 0 | 0 | CDP Protocol |
| 312 | 0 | 6359377168 | 3444418976 | 168992 | 0 | 0 | HTTP CORE |
| 223 | 0 | 6177848520 | 6177849384 | 45000 | 0 | 0 | DiagCard1/-1 |
| 37 | 0 | 3139270120 | 3139182576 | 88416 | 0 | 0 | ARP Input |
| 15 | 0 | 3032066488 | 3032066488 | 45000 | 0 | 0 | DB Lock Manager |
| 3 | 0 | 2877624128 | 2877624128 | 45112 | 0 | 0 | MGMTE stats Proc |
| 550 | 0 | 2436629512 | 2436565120 | 45000 | 0 | 0 | DiagCard2/-1 |
| 0 | 0 | 2077885216 | 2253867072 | 7225728 | 17487479 | 0 | \*Dead\* |
| 186 | 0 | 1089084392 | 1089084392 | 45000 | 0 | 0 | mcprp callhome p |
| 70 | 0 | 868303304 | 868303304 | 45000 | 0 | 0 | Licensing Auto U |
| 362 | 0 | 719686064 | 719686064 | 57000 | 0 | 0 | QoS stats process |

#sh processes memory platform sorted

System memory: 3977748K total, 3906276K used, 71472K free,

Lowest: 71472K

| Pid | Text | Data | Stack | Dynamic | RSS | Total | Name |
|---|---|---|---|---|---|---|---|
| 20064 | 9005 | 2153036 | 132 | 2110664 | 2153036 | 2390980 | fman_fp_image |
| 12074 | 171452 | 608664 | 136 | 300 | 608664 | 2127072 | linux_iosd-imag |
| 12283 | 207 | 199280 | 132 | 72764 | 199280 | 1972756 | fed main event |
| 12083 | 395 | 71816 | 132 | 2960 | 71816 | 941424 | sif_mgr |
| 12181 | 1003 | 73548 | 132 | 2760 | 73548 | 687128 | platform_mgr |
| 23574 | 344 | 39152 | 132 | 6248 | 39152 | 420048 | cli_agent |
| 22201 | 178 | 57056 | 132 | 7580 | 57056 | 332580 | sessmgrd |
| 22456 | 8 | 24488 | 136 | 7380 | 24488 | 306620 | python2.7 |
| 24561 | 8538 | 52112 | 132 | 2816 | 52112 | 305048 | fman_rp |
| 24041 | 198 | 44496 | 132 | 4048 | 44496 | 246068 | dbm |
| 25219 | 798 | 73328 | 132 | 27152 | 73328 | 233504 | smand |
| 3632 | 545 | 2580 | 132 | 132 | 2580 | 214824 | libvirtd |
| 19618 | 1533 | 55672 | 132 | 1608 | 55672 | 191364 | nginx |
| 21479 | 578 | 30220 | 132 | 2472 | 30220 | 180972 | repm |
| 19610 | 1533 | 12316 | 132 | 1608 | 12316 | 139596 | nginx |
| 7402 | 99 | 12172 | 132 | 1540 | 12172 | 64008 | bt_logger |
| 9022 | 200 | 11856 | 132 | 1888 | 11856 | 58432 | lman |
| 12029 | 483 | 13800 | 132 | 1688 | 13800 | 56340 | stack_mgr |
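Since the question is what is holding the memory, one way to read the platform-level listing is to rank processes by resident set size (RSS) as a share of total system memory. The sketch below does that for the first five rows of the output, assuming the RSS column is in kB like the "System memory" line. The helper name `rss_shares` is hypothetical, and a large RSS in a single snapshot does not by itself identify a leak or a bug.

```python
TOTAL_KB = 3977748  # "System memory: 3977748K total"

# First five rows of "sh processes memory platform sorted", copied verbatim.
# Columns: Pid Text Data Stack Dynamic RSS Total Name
ROWS = """\
20064 9005 2153036 132 2110664 2153036 2390980 fman_fp_image
12074 171452 608664 136 300 608664 2127072 linux_iosd-imag
12283 207 199280 132 72764 199280 1972756 fed main event
12083 395 71816 132 2960 71816 941424 sif_mgr
12181 1003 73548 132 2760 73548 687128 platform_mgr
"""


def rss_shares(text: str, total_kb: int) -> list:
    """Return (name, rss_kb, percent_of_total) tuples, largest RSS first."""
    result = []
    for line in text.splitlines():
        if not line.strip():
            continue
        # The name may contain spaces, so split only the 7 numeric columns.
        fields = line.split(None, 7)
        rss_kb = int(fields[5])
        result.append((fields[7], rss_kb, 100 * rss_kb / total_kb))
    return sorted(result, key=lambda item: item[1], reverse=True)


for name, rss_kb, pct in rss_shares(ROWS, TOTAL_KB):
    print(f"{name:<16} {rss_kb:>8} kB  {pct:5.1f}%")
```

On these numbers `fman_fp_image` alone accounts for a bit over half of system memory, so it looks like the first process to watch over time or to raise with TAC.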

12-13-2021 04:09 PM

Upgrade to the latest 16.6.X or 16.9.X release (and avoid 16.12.X).

On a 3650/3850 running 16.X.X, it is not a good idea to let the uptime exceed 6 months.

12-13-2021 04:36 PM

Leo, thanks for your reply. I'm currently on 16.9.5 and getting that error, so I was going to upgrade to the 16.12.05b gold star image. Any particular reason I should stay away from that train? Also, I have had Cisco switches up for years with no issues. I would think that 1 year and 22 weeks is not that long for an enterprise switch.

thanks, Paul

12-13-2021 05:14 PM - edited 12-13-2021 10:45 PM

@paul amaral wrote:

Any particular reason I should stay away from that train?

16.12.X is for people who would like to "help Cisco find bugs".

@paul amaral wrote:

Also, I have had Cisco switches up for years with no issues. I would think that 1 year and 22 weeks is not that long for an enterprise switch.

That is a valid argument for platforms (routers, switches, etc.) running "classic" IOS. With IOS-XE, however, the same argument does not apply.

12-14-2021 03:50 PM

Leo, thanks for your opinion. I will certainly watch the uptime and memory of our IOS-XE devices more closely. I rebooted that switch and the memory is back to normal. Now I'm wondering whether I should upgrade to cat3k_caa-universalk9.16.12.05b.SPA.bin, which is a gold star image, given your opinion of 16.12.x. I would think that the gold star IOS has been put through more testing and it's safer. I personally think that being on 16.9.5 and having the memory run up like that is unacceptable, especially since there doesn't seem to be a cause for it.

thanks for your input,

paul

12-14-2021 06:19 PM

@paul amaral wrote:

I would think that the gold star IOS has been put through more testing and it's safer.

Do not confuse "gold star" and Cisco Safe Harbor.

Cisco Safe Harbor, in my experience, was the epitome of "quality control" and "quality assurance". Safe Harbor gave every network admin a good night's rest because we were assured that Cisco had done exhaustive testing (for certain scenarios).

03-24-2022 01:08 PM - edited 03-24-2022 01:09 PM

It's not. Garbage equipment needs to be rebooted every few months. The premium price that we pay for Cisco is supposed to take us out of the garbage category. It's not uncommon for Cisco switches to be up for 2-plus years. At least that's the way Cisco used to be.

03-24-2022 04:21 PM

Anyone still interested in using 16.12.X?

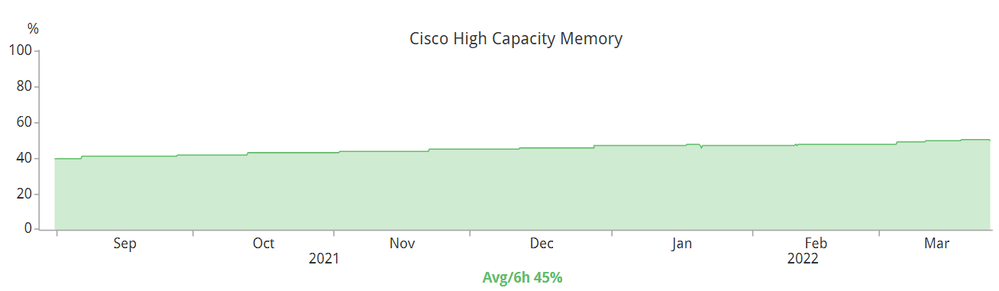

Have a look at the "sh process memory" graph below:

(This is an output from a stack of 9300, IOS-XE version 16.12.5, uptime 39 weeks. The graph is 210 days long.)

Just a reminder to everyone: the "sh process memory" or "sh platform resource" command reports the AVERAGE memory of the entire stack. Take that in slowly: "average memory of the entire stack". The command does not show what each individual stack member is doing.

Have a look at the picture below:

This output is taken from the stack. The only difference is this: it is now a "snapshot" of each stack member's memory utilization. The above is taken from one member of the same stack. Notice how the picture is now different? The first picture shows the memory utilization as "stable" at around 25%, but the second picture shows the opposite: from September 2021 until February 2022 it climbs slowly, and in March 2022 the memory utilization gets worse.
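To illustrate the point about averages, the sketch below shows how a stack-wide mean can look healthy while one member is close to exhaustion. It takes the post's statement that the stack figure is an average at face value. The per-member percentages, the helper name `summarize` and the 90% warning level applied here are all hypothetical and not taken from the graphs.

```python
from statistics import mean


def summarize(per_member_pct: dict, warning_pct: float = 90.0) -> tuple:
    """Return the stack average and the members at or above the warning level."""
    average = mean(per_member_pct.values())
    over = sorted(m for m, pct in per_member_pct.items() if pct >= warning_pct)
    return average, over


# Hypothetical memory utilization (%) for a four-member stack.
sample = {1: 22.0, 2: 24.0, 3: 25.0, 4: 93.0}

average, over = summarize(sample)
print(f"stack average: {average:.1f}%  members over warning: {over}")
```

With these made-up numbers the average stays far below any alarm level even though member 4 is past the warning threshold, which is why per-member polling is worth having alongside the stack-wide figure.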

Hope this helps.
