Devices lose connectivity every few minutes for a few seconds

05-28-2022 06:50 PM

Good evening, something very strange is happening at one of our offices and I am completely at a loss.

We have a 3750G switch stack at the core and a couple of remote closet switches that connect to the core. All endpoints, servers, and printers are on a single VLAN. Every few minutes we start experiencing packet loss, even when pinging devices on the same VLAN. This started a few days ago. I've already looked at the common culprits such as bridging loops (spanning-tree is enabled on all ports except switch uplinks). I had a bunch of ports with PortFast enabled, and I have now disabled PortFast on all ports. I am not seeing anything in the logs pointing towards a loop. Also, when we start seeing timeouts, I check the switch CPU for a spike and it's normal. My hope is that with PortFast disabled on all ports, any loop will get picked up by spanning-tree.

Any ideas would be greatly appreciated.

05-28-2022 07:07 PM

A couple of points to check:

1. Have you configured any EtherChannels? If yes, check both sides of each EtherChannel. Sometimes there is a half-loop that causes intra-VLAN frame drops.

2. Check the interface error counters (for example, show interfaces counters errors).

Are you seeing any error counters incrementing? Please share the output here.

05-28-2022 07:15 PM

Hi there, attaching the output for error counters. I did reload the switch stack yesterday, and I am seeing large numbers of OutDiscards.

Also, yes, there are a bunch of EtherChannels, including an 8-port EtherChannel to a Dell VRTX system. The switch software on this system was updated within the last few days, so your first point could be valid. However, I'm not sure how to check for the half-loop.

05-28-2022 07:28 PM

Check the "Overloaded or Oversubscribed VLAN" section in this doc.

05-28-2022 07:50 PM

I noticed all of the ports with high OutDiscards are connected using FastEthernet speeds. That would explain the high numbers of OutDiscards. Referring to the document, this could be causing slowness on the VLAN. I'll have to check what's connected on these ports and whether it's a server.

| Port | Name | Status | VLAN | Duplex | Speed | Type |
|---|---|---|---|---|---|---|
| Gi1/0/13 | | connected | 1 | a-full | a-100 | 10/100/1000BaseTX |
| Gi1/0/27 | | connected | 1 | a-full | a-100 | 10/100/1000BaseTX |
| Gi1/0/28 | | connected | 1 | a-full | a-100 | 10/100/1000BaseTX |
| Gi1/0/29 | | connected | 1 | a-full | a-100 | 10/100/1000BaseTX |
| Gi1/0/30 | | connected | 1 | a-full | a-100 | 10/100/1000BaseTX |
| Gi1/0/31 | | connected | 1 | a-full | a-100 | 10/100/1000BaseTX |
| Gi1/0/37 | | connected | trunk | a-full | a-100 | 10/100/1000BaseTX |
| Gi2/0/6 | | connected | 1 | a-full | a-100 | 10/100/1000BaseTX |
| Gi2/0/15 | Environmental Sens | connected | 1 | a-full | a-100 | 10/100/1000BaseTX |
| Gi2/0/45 | | connected | 1 | a-full | a-100 | 10/100/1000BaseTX |
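For anyone who wants to pull this kind of list out of a saved status listing rather than reading it by eye, here is a minimal Python sketch. It is not from the thread: the function and class names are made up for illustration, the last sample line is a hypothetical gigabit port added for contrast, and the parser assumes the media type is a single token (true for the lines above, not for every possible output).

```python
from typing import List, NamedTuple


class PortStatus(NamedTuple):
    port: str
    name: str
    status: str
    vlan: str
    duplex: str
    speed: str
    media: str


# The first three lines are copied from the post above.
# The last line is a hypothetical gigabit port, added only for contrast.
SAMPLE = """\
Gi1/0/13 connected 1 a-full a-100 10/100/1000BaseTX
Gi1/0/37 connected trunk a-full a-100 10/100/1000BaseTX
Gi2/0/15 Environmental Sens connected 1 a-full a-100 10/100/1000BaseTX
Gi1/0/1 connected 1 a-full a-1000 10/100/1000BaseTX
"""


def parse_status_line(line: str) -> PortStatus:
    """Split one status line, reading the five fixed fields from the right.

    Assumes the media type is a single token, which holds for the
    lines in the post but not for every possible media string.
    """
    tokens = line.split()
    if len(tokens) < 6:
        raise ValueError(f"unexpected line: {line!r}")
    status, vlan, duplex, speed, media = tokens[-5:]
    # Whatever sits between the port and the status is the description.
    name = " ".join(tokens[1:-5])
    return PortStatus(tokens[0], name, status, vlan, duplex, speed, media)


def ports_below_gigabit(text: str) -> List[PortStatus]:
    """Return connected ports whose speed is 10 or 100 Mb/s."""
    slow_speeds = {"10", "100", "a-10", "a-100"}
    rows = [parse_status_line(l) for l in text.splitlines() if l.strip()]
    return [r for r in rows if r.status == "connected" and r.speed in slow_speeds]


if __name__ == "__main__":
    for row in ports_below_gigabit(SAMPLE):
        print(row.port, row.speed, row.name or "(no description)")
```

Reading the fixed fields from the right keeps a multi-word port description such as "Environmental Sens" from shifting the other columns.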

05-28-2022 08:23 PM

Total Output Drops are normal if the port does not have a properly configured QoS.

Can you ping the default-gateway of that subnet? Does the ping drop?

05-28-2022 08:39 PM

The default gateway is the SVI on the core switch, with an IP address of 172.18.6.1. I sourced a ping from 172.18.6.1 to a device on that VLAN and observed the behavior by sending a million pings. I did this while also pinging several other devices on the network, and everything starts timing out simultaneously. Some devices drop more pings than others: when the slowness happens I get a few replies here and there from some devices, while a few others go completely dark.

Interestingly, however, I don't get any timeouts while pinging the SVI or the router IP from outside (i.e. over VPN from the data center). This tells me the issue is contained within the LAN itself.
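The "everything starts timing out simultaneously" observation can be checked more systematically if the ping results are logged per target. The sketch below is only an illustration under assumptions: the host names and result series are hypothetical, and it works on already-collected success/failure samples rather than sending pings itself.

```python
from typing import Dict, List


def loss_percent(series: List[bool]) -> float:
    """Percentage of samples in which the ping got no reply."""
    if not series:
        return 0.0
    return 100.0 * series.count(False) / len(series)


def simultaneous_loss_ticks(
    results: Dict[str, List[bool]], min_targets: int = 2
) -> List[int]:
    """Return sample indexes where at least min_targets targets lost the ping.

    Each list holds one True/False per sample; all targets are assumed
    to have been sampled at the same moments.
    """
    if not results:
        return []
    length = min(len(series) for series in results.values())
    ticks = []
    for i in range(length):
        lost = sum(1 for series in results.values() if not series[i])
        if lost >= min_targets:
            ticks.append(i)
    return ticks


if __name__ == "__main__":
    # Hypothetical data: True means a reply was received.
    samples = {
        "host-a": [True, True, False, False, True],
        "host-b": [True, True, False, True, True],
        "host-c": [True, True, False, False, True],
    }
    print(simultaneous_loss_ticks(samples, min_targets=3))
    for host, series in samples.items():
        print(host, loss_percent(series))
```

Loss that lines up across many targets at the same samples points at something shared (the VLAN or the switch) rather than at any one endpoint, which matches the conclusion in the post.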

05-28-2022 10:11 PM

@Ricky Sandhu wrote:

Some devices drop more pings than others i.e. I can get a few replies here and there from some devices when slowness happens while a few others go completely dark.

Look at the CPU and memory utilization of the stack.

Pick one of the links with high utilization and drop that link. Do the ping times improve?

05-29-2022 05:21 AM

Can you share the output of show etherchannel summary?

05-28-2022 11:07 PM

- On all switches, core and others, configure a central syslog server as the log collector. Then follow up on the messages sent by the switches and see whether you can find any problematic logs.

M.

-- Each morning when I wake up and look into the mirror I always say ' Why am I so brilliant ? '

The mirror then always responds to me with ' The only thing that exceeds your brilliance is your beauty! '

05-29-2022 04:49 AM

Hello

@Ricky Sandhu wrote:

All endpoints and servers/printers are on a single VLAN. Every few minutes, we start experiencing packet loss when pinging [...] My hope is that by disabling portfast on all ports, if there is a loop that'll get picked up by spanning-tree.

Any ideas would be greatly appreciated.

If you have a single VLAN, then you should have no need for trunks. Or are you saying the problem is specific to one VLAN? If it is the former, there is no need for trunks.

Re-enable PortFast on all access ports and add some Layer 2 security (storm control, port security, DHCP snooping). This should guard against unwarranted attachment of switch devices, BUM traffic, and rogue DHCP requests.

Please rate and mark as an accepted solution if you have found any of the information provided useful.

This then could assist others on these forums to find a valuable answer and broadens the community’s global network.

Kind Regards

Paul

05-29-2022 08:13 AM

All, it might be too soon to tell (fingers crossed), but I believe we have found the issue. I decided to perform a process of elimination by disabling ALL (except the most critical) ports on the core switches and then re-enabling them in groups. I also worked with the server team, and we narrowed it down to a server that is used for backups. Whenever a backup job ran and traffic ramped up beyond 700 Mbps on the 2 Gbps EtherChannel, timeouts would occur. This server also has 2 iSCSI NICs that it uses to forward backup traffic to a Synology storage unit over the same switch. The 2 iSCSI NICs are teamed but not set up to aggregate traffic, which is what was likely causing the issue. As traffic ramped up beyond 700 Mbps, the server likely started offloading traffic to the other iSCSI port, which caused some sort of loop in the network, and timeouts occurred. We tried this several times, and each time we were able to re-create and resolve the issue. So far we've disabled one of the 2 iSCSI links and I haven't seen any timeouts for over 2 hours. Keeping a close eye regardless.

Thank you to all who replied. I really appreciate the help from the community. I will respond back if the issue returns, but it looks like we are good.

05-29-2022 08:45 AM

As I mentioned before, this is a half-loop.

SW1-SW2

SW1 runs an EtherChannel and sends multicast/broadcast to the other side, SW2, which is not running an EtherChannel. SW2 therefore receives the frame on one port and resends the multicast/broadcast out the other port.

SW1 receives the multicast/broadcast and floods it to all ports except the EtherChannel it came from.

So disabling one iSCSI link stopped the half-loop, and the network became stable.

The issue is not on your side. I think the issue is with the server team: they must configure teaming properly to prevent this half-loop.
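One way to picture why a reflected frame hurts traffic inside the VLAN is a toy model of source-MAC learning. The Python sketch below is a simplification and not how any particular switch implements its table; the MAC address and port labels are hypothetical, and the thread does not report seeing MAC-move messages in the logs. It only shows that when frames with the same source address arrive on two different ports, the table entry keeps moving, so replies can be sent out the wrong port.

```python
from typing import Dict, List, Optional, Tuple


class MacTable:
    """Toy source-MAC learning table that records every entry move."""

    def __init__(self) -> None:
        self.entries: Dict[str, str] = {}
        self.moves: List[Tuple[str, str, str]] = []

    def learn(self, mac: str, port: str) -> None:
        """Learn mac on port; note a move if it was on another port."""
        previous = self.entries.get(mac)
        if previous is not None and previous != port:
            self.moves.append((mac, previous, port))
        self.entries[mac] = port

    def lookup(self, mac: str) -> Optional[str]:
        """Port a unicast frame for mac would be sent to, if known."""
        return self.entries.get(mac)


def simulate_half_loop(rounds: int) -> MacTable:
    """Replay a broadcast that leaves on one link and returns on another.

    Hypothetical labels: the server's MAC is first seen on 'nic-port-1',
    then the reflected copy of the same frame arrives on 'nic-port-2'.
    """
    table = MacTable()
    server_mac = "00:11:22:33:44:55"  # made-up address
    for _ in range(rounds):
        table.learn(server_mac, "nic-port-1")  # original frame
        table.learn(server_mac, "nic-port-2")  # reflected copy
    return table


if __name__ == "__main__":
    table = simulate_half_loop(rounds=3)
    print(len(table.moves), "moves; server currently mapped to",
          table.lookup("00:11:22:33:44:55"))
```

With one of the two links shut, the second `learn` call never happens in this model and the entry stays put, which is consistent with the network stabilizing once one iSCSI link was disabled.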

05-29-2022 09:46 AM - edited 05-29-2022 09:46 AM

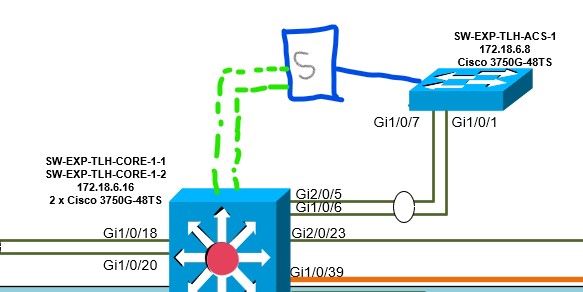

Here is a quick diagram of what we have. The bottom switch (Core-1) has an etherchannel over ports Gi2/0/5, Gi1/0/6 that go up to the ACS-1 switch. Etherchannel on both sides is working and configured properly. Switch ACS-1 has a server connected on a Gig port (Blue link). This server then has 2 x iSCSI links connected back to the first switch (Green links). The two iSCSI links connect to normal access ports on the core switch and these ports are not configured for etherchannel. Server team has the iSCSI NICs configured as Switch Independent links. If things remain stable, next step is to work with the server team and properly configure etherchannel on these ports.

05-29-2022 10:53 AM - edited 06-05-2022 09:08 AM

Hello

I would "STRONGLY" suggest you obtain clarification from the server team that it is not an upgrade patch to their servers that is causing the issue. Request a timeline of their last scheduled patching and try to cross-reference it with when this issue started. If possible, admin down one of the server's teamed NIC ports on the switch and see if the issue stops.

Infrastructure engineers are notorious for blaming everything on the network. In the past 4 weeks we have had 2 outages at client sites for this exact issue: server upgrades that for some reason messed up the teaming/LB method of the servers, which caused unnecessary interface flaps on the server links.

Kind Regards

Paul
