Should I set MSS on ALL network devices?

05-30-2023 12:53 PM

Hello.

Because this enterprise makes extensive use of DMVPN encapsulation, I am concluding that it is best to set every network device (including layer 2 switches) to TCP MSS 1360 and MTU 1400 (1400 may not be exactly correct, but I am leaving extra room for options).

QUESTIONS:

1. Do you agree with changing every enterprise network device to MTU=1400?

2. If I set MTU to 1400, is it still helpful, or is it unnecessary, to set tcp mss to 1360?

Thank you.

Accepted Solutions

05-30-2023 03:03 PM

"MTU to 1400 (1400 ma not be exactly correct, but I am providing for extra room for options)."

You don't need to adjust IP MTU for IP options. We adjust IP MTU to allow for the encapsulation overhead; L3 and/or L4 options don't affect it, because the original packet's size already includes them.

However, ip tcp adjust-mss is a different matter! Since we're "spoofing" the MSS to ensure the packet plus the encapsulation overhead fits in a 1500 byte packet, if L3 and/or L4 options are being used, we need to allow for them (since they add to the usual 20 + 20 bytes of L3/L4 overhead). So, there are 3 typical approaches. If most of your TCP traffic will have the "usual" IPv4 40 bytes of L3/L4 overhead, you just set the MSS adjustment to IP MTU minus 40 bytes. If particular options are used often, and you know their overhead, you reduce the MSS further by that additional overhead. If you want to handle any possible L3/L4 options, you set the MSS to IP MTU minus 120 bytes (i.e. worst case). The only issue with the latter two approaches is that allowing for more option space than is actually used increases the overhead to payload ratio (bad).
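As a rough illustration of the three approaches just described, here is a minimal Python sketch of the arithmetic. The function and constant names are made up for this example, and the 12 bytes of extra options is an arbitrary illustrative figure, not a value taken from this thread.

```python
# Header sizes in bytes (IPv4 and TCP both range from 20 to 60).
BASE_IPV4_HEADER = 20   # IPv4 header without options
BASE_TCP_HEADER = 20    # TCP header without options
MAX_IPV4_HEADER = 60    # IPv4 header carrying the maximum 40 bytes of options
MAX_TCP_HEADER = 60     # TCP header carrying the maximum 40 bytes of options


def mss_for(ip_mtu: int, extra_option_bytes: int = 0) -> int:
    """MSS for a given IP MTU, allowing for a known number of option bytes.

    extra_option_bytes = 0 is approach 1 (the usual 40 bytes of headers);
    a non-zero value is approach 2 (known options).
    """
    if not 0 <= extra_option_bytes <= 80:
        raise ValueError("IPv4 + TCP options cannot exceed 80 bytes in total")
    return ip_mtu - BASE_IPV4_HEADER - BASE_TCP_HEADER - extra_option_bytes


def worst_case_mss(ip_mtu: int) -> int:
    """Approach 3: leave room for the largest possible IPv4 and TCP headers."""
    return ip_mtu - MAX_IPV4_HEADER - MAX_TCP_HEADER


if __name__ == "__main__":
    print(mss_for(1400))          # approach 1: 1360
    print(mss_for(1400, 12))      # approach 2 with a hypothetical 12 option bytes: 1348
    print(worst_case_mss(1400))   # approach 3: 1280
```

The gap between 1360 and 1280 is the payload given up per packet when planning for the worst case, which is the overhead-to-payload cost mentioned above.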

Also, BTW, with IP MTU set on a tunnel interface, we don't "need" ip tcp adjust-mss to avoid fragmentation. So, why use both?

Unless the sender sets DF, oversized packets will be fragmented.

If the sender does set DF, the oversized packet will be dropped, and the sender will need to retransmit at the "corrected" packet size.

Two issues with the latter. First, early ICMP messages didn't say how much too big the packet was (fortunately, you're unlikely to bump into platforms still doing that).

Second, even when the packet size has been "corrected" to avoid fragmentation, PMTUD runs on a "timer" (usually 10 minutes), and when the timer expires, the sender tries full size again to see what happens (which isn't going to change if always using a tunnel).

So, ip tcp adjust-mss is "better", as it avoids those two problems.
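To make the cases above concrete, here is a small Python sketch of what happens to a packet at the tunnel interface, and of how clamping the MSS sidesteps the problem for TCP. It is a simplified model, not router code; the function names and return strings are invented for illustration, and the hosts are assumed to advertise an MSS of 1460 (a 1500 byte MTU minus 40).

```python
def router_action(packet_len: int, df_set: bool, ip_mtu: int) -> str:
    """Simplified view of what a router does with a packet at a given IP MTU."""
    if packet_len <= ip_mtu:
        return "forward"
    if df_set:
        # PMTUD case: sender must shrink its packets and retransmit.
        return "drop and send ICMP fragmentation-needed"
    return "fragment"


def clamp_mss(advertised_mss: int, adjust_mss: int) -> int:
    """Model of ip tcp adjust-mss: lower the MSS in a SYN if it is too large."""
    return min(advertised_mss, adjust_mss)


if __name__ == "__main__":
    ip_mtu = 1400

    # Without MSS clamping, hosts on 1500 byte LANs send 1500 byte TCP packets.
    print(router_action(1500, df_set=False, ip_mtu=ip_mtu))  # fragment
    print(router_action(1500, df_set=True, ip_mtu=ip_mtu))   # drop and send ICMP ...

    # With clamping, the largest TCP packet is MSS + 40 bytes of headers.
    mss = clamp_mss(advertised_mss=1460, adjust_mss=1360)
    print(router_action(mss + 40, df_set=True, ip_mtu=ip_mtu))  # forward
```

Note the model only covers TCP for the clamped case; a full-size UDP packet still takes one of the first two branches, which is why IP MTU is still set as well.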

"1. Do you agree with changing every enterprise network device to MTU=1400?"

Disagree, as it entails much work to configure each host for that, and the issue can be addressed at the routers where the traffic will be encapsulated (i.e. on the tunnel interfaces).

"2. If I set MTU to 1400, is it still helpful, or is it unnecessary, to set tcp mss to 1360?"

On every host? If so, then ip tcp adjust-mss wouldn't be needed.

On the router with the tunnel interface? For that, you still want both, and for MTU it would be the ip mtu command, not the plain mtu command.

Why both? Best explained here. (Short answer, though, ip tcp adjust-mss only works for TCP traffic, while IP MTU would apply to all IP traffic.) If unclear, post additional questions.

05-30-2023 12:57 PM

1. Do you agree with changing every enterprise network device to MTU=1400? <<- Set MTU 1400 only under the tunnel interface; there is no need to change the default 1500 on other interfaces.

2. If I set MTU to 1400, is it still helpful, or is it unnecessary, to set tcp mss to 1360? This is needed on the tunnel interface: it rewrites the MSS that TCP hosts negotiate for traffic passing through the tunnel, which prevents the client/server from sending packets that are too large.

05-30-2023 01:09 PM

If many 1500 byte datagrams enter the DMVPN tunnels, each one will be split into 2 datagrams, the second carrying only about 100 bytes of data. Would it be best to make everything in the network 1400 MTU, so that there are no inefficient 100 byte datagrams in the network?

05-30-2023 01:12 PM

Yes, the problem with fragmentation is the CPU load, and the time the CPU needs to reassemble the packets, which in some cases leads to congestion and drops.

So it is recommended to set MTU 1400 and TCP MSS 1360.

05-30-2023 01:35 PM

Set 1400 MTU in all network devices, or just DMVPN devices?

05-30-2023 02:54 PM

First, not on all devices.

On the devices that run DMVPN, configure these values only under the tunnel interface; other interfaces keep their default values.

We need to reduce the MTU of the tunnel because the tunnel uses VPN encapsulation, which adds overhead to each packet and so reduces the bytes available for data.

05-30-2023 03:18 PM

BTW, it's a tad worse than you think.

If a 1500 byte packet hits an MTU of 1400, yes, we're over by 100 bytes. However, each fragment has its own IP header, so the 2nd fragment carries a copy of the original packet's IP header (including options), and both IP headers are modified to indicate that these two packets are fragments that need to be recombined at the destination. I.e. the 2nd packet would be at least 120 bytes.
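For anyone who wants to check the fragment sizes, here is a short Python sketch of IPv4 fragmentation arithmetic. It assumes a plain 20 byte IPv4 header with no options, and the function name is made up for this example. One detail it adds to the round numbers above: fragment offsets are counted in 8 byte units, so every fragment except the last must carry a multiple of 8 bytes of payload.

```python
def fragment_sizes(packet_len: int, mtu: int, header_len: int = 20) -> list[int]:
    """Total size (header + payload) of each IPv4 fragment of one packet.

    Assumes a fixed header_len copied into every fragment (no IP options).
    """
    if packet_len <= mtu:
        return [packet_len]  # fits as-is, no fragmentation

    # Non-final fragments carry a payload that is a multiple of 8 bytes.
    max_payload = (mtu - header_len) // 8 * 8
    if max_payload <= 0:
        raise ValueError("MTU too small to carry any payload")

    remaining = packet_len - header_len
    sizes = []
    while remaining > 0:
        chunk = min(remaining, max_payload)
        sizes.append(header_len + chunk)
        remaining -= chunk
    return sizes


if __name__ == "__main__":
    sizes = fragment_sizes(1500, 1400)
    print(sizes)       # [1396, 124]
    print(sum(sizes))  # 1520: one extra 20 byte header on the wire
```

So under these assumptions the second fragment is 124 bytes rather than exactly 120, and the pair costs 20 bytes more than the original packet.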

For even more fun, while most people keep in mind the overhead of a router fragmenting packets, and the additional bandwidth consumed by the additional IP headers, consider what happens if the receiver gets the 1400 byte fragment but not the follow-on 120 byte fragment containing the additional 100 bytes split off.

TCP receivers ACK packets, and as the whole packet hasn't been received, the sender will retransmit the whole 1500 bytes again, even though the receiver has successfully received 1400 of its bytes. (If you think that's bad, with packets broken down into 48 bytes per ATM cell, losing just one ATM cell, i.e. 1 to 48 bytes of a packet, to an ATM congestion drop would also require the sender to retransmit the whole original packet.)

05-30-2023 02:46 PM

Hello

You don't mention how the DMVPN is set up. Are you using GRE/IPsec/NAT on the NHRP tunnels?

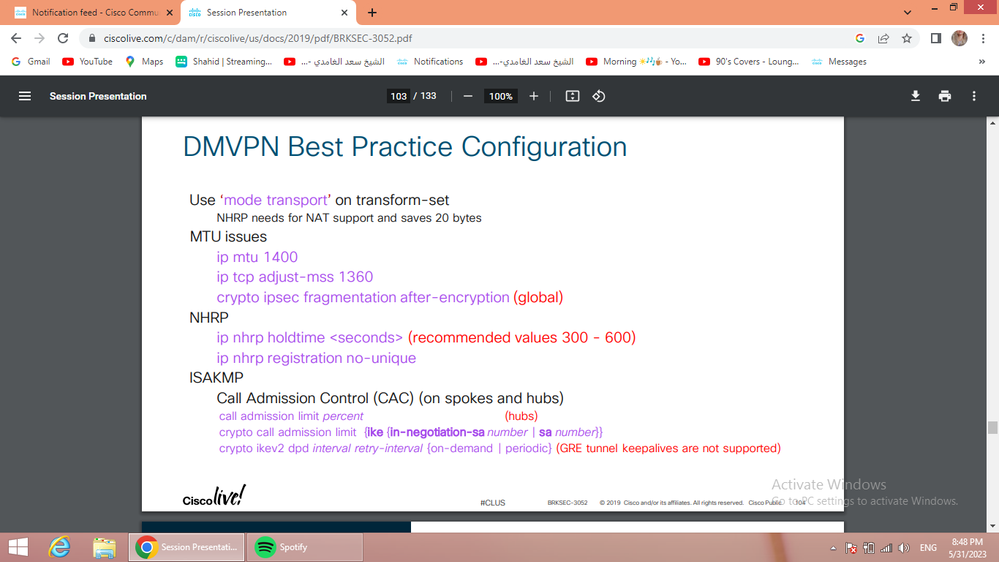

Best practice suggests:

Use ‘mode transport’ on the transform-set (NHRP needs it for NAT support, and it saves 20 bytes)

MTU issues

ip mtu 1400

ip tcp adjust-mss 1360

crypto ipsec fragmentation after-encryption (global)

NHRP

ip nhrp holdtime (recommended values 300 - 600)

ip nhrp registration no-unique

Please rate and mark as an accepted solution if you have found any of the information provided useful.

This then could assist others on these forums to find a valuable answer and broadens the community’s global network.

Kind Regards

Paul

05-31-2023 10:51 AM - edited 06-02-2023 08:33 AM

Both commands are mandatory for DMVPN, and this is Cisco's recommendation; use Cisco's recommended, tested values of 1400/1360.

The IP MTU is 1400.

The IP TCP MSS is 1360.

05-31-2023 12:01 PM

"Mandatory both need for DMVPN, this Cisco recommend.

THE MTU is 1400

the TCP MSS 1360"

To clarify - "best practice" isn't truly mandatory, but should be seriously considered.

Also, as I noted earlier, and confirmed in the Cisco live sheet, it's not MTU 1400 but IP MTU 1400. It's a very important difference!

05-31-2023 12:07 PM

It is mandatory; you need both on the DMVPN tunnel interface. There is no option here, BOTH are needed.

05-31-2023 01:55 PM

Disagree, will work without, just not nearly as well.

05-31-2023 02:43 PM - edited 05-31-2023 02:49 PM

Disagree with what? MTU/MSS is the first thing to configure under the tunnel.

It is not about the topology or how it is designed; these commands are needed and recommended by Cisco.

All the I-WAN books recommend both commands with the same values.

If @MicJameson1 does not configure them, he will later come back and ask why his router performance is low and why he has high packet drops, and we will then tell him to configure MTU/MSS.

So he needs to configure the right values from the beginning.

This is from the I-WAN doc.

05-31-2023 03:33 PM - edited 05-31-2023 03:35 PM

You're missing the point - big difference between "mandatory" vs. "recommend".

I.e. DMVPN can work without one or both; again, though, might not work very well without using those commands.

However, poor performance without them is certainly possible, often even likely. I too fully recommend using them (properly set, not just lifted from a "best practice" recommendation).

That aside, as to the actual recommended values, Cisco recommends 1400/1360 because that's almost always good. However, you can often increase both a bit, often by another 20 bytes; just don't go even one byte too far!

Also, as I wrote earlier, the 1360 value assumes the IP and TCP headers are their usual 20 bytes each, but they might not be. [As @MicJameson1 even asked about.] If not, 1360 is not the correct value to use with 1400. (Hmm, maybe there's a footnote that goes with that best practice recommendation.)

Conversely, an actual path might not provide a 1500 byte MTU; for such paths, you need to go even smaller than the "recommended" 1400/1360 values.
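Whether a given IP MTU is "one byte too far" can be estimated by adding up the encapsulation overhead. The Python sketch below does that for GRE over IPsec ESP under assumptions that are NOT stated anywhere in this thread: a GRE tunnel key is configured (8 byte GRE header), ESP uses AES-CBC (16 byte IV and block size) with HMAC-SHA1-96 (12 byte ICV), the outer IPv4 header has no options, and there is no NAT-T UDP wrapper. Other ciphers or NAT-T give different numbers, so treat it as a way to reason about your own values, not as the definitive figures.

```python
def encapsulated_size(inner_len: int, tunnel_mode: bool = False,
                      gre_key: bool = True, iv_len: int = 16,
                      block: int = 16, icv_len: int = 12) -> int:
    """Estimated on-the-wire size of one inner IP packet after GRE + ESP.

    All defaults are assumptions for illustration (see the text above).
    """
    outer_ip = 20                           # outer IPv4 header, no options
    esp_header = 8                          # SPI + sequence number
    gre = 4 + (4 if gre_key else 0)         # base GRE header, plus optional key

    # In tunnel mode the GRE delivery IP header is also encrypted;
    # transport mode reuses it as the outer header, saving 20 bytes.
    esp_payload = inner_len + gre + (20 if tunnel_mode else 0)

    # ESP trailer: padding + pad-length byte + next-header byte,
    # rounded up to the cipher block size.
    padded = -(-(esp_payload + 2) // block) * block

    return outer_ip + esp_header + iv_len + padded + icv_len


if __name__ == "__main__":
    path_mtu = 1500
    for ip_mtu in (1400, 1420):
        for tunnel_mode in (False, True):
            size = encapsulated_size(ip_mtu, tunnel_mode=tunnel_mode)
            mode = "tunnel" if tunnel_mode else "transport"
            verdict = "fits" if size <= path_mtu else "too big"
            print(f"ip mtu {ip_mtu}, {mode} mode: {size} bytes ({verdict})")
```

Under these particular assumptions, 1400 fits a 1500 byte path in either mode, while 1420 fits only in transport mode, which lines up with the remark about squeezing out another 20 bytes. For a path with a smaller MTU, lower `path_mtu` and see which values still fit.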

Or, I've done tunnels across topologies supporting 9K (jumbo) Ethernet MTUs; for those, if you're just sending 1500 byte packets, you need neither, and there are no issues. In fact, when I write no issues, I mean you don't have the cases that even the "optimal" IP MTU and TCP MSS settings don't cover, like video sent over UDP in max size packets that don't set DF. I.e. those packets get fragmented, unless it's 1500 over 9K!

Best practices are great, but it behooves one to understand why they are recommended and with such understanding know whether they should actually be used as recommended, modified, or not used at all. Above, I've noted cases where, in real world networks, the 1400/1360 values might not be used because doing so doesn't provide the optimal settings or possibly makes things worse (such as using them when doing 1500 over 9K).
