APIC Inband Management IP address not being advertised within mgmt:inb

10-20-2022 04:01 PM

Hello Cisco,

I'm trying to configure in-band management on the ACI fabric, but the APIC in-band management IP address is not being advertised into the in-band management VRF routing table. The Leafs can ping each other in the in-band management VRF, but the APICs cannot ping via the in-band management VRF.

Do I need to change the APIC Connectivity Preferences in order for the in-band management IP addresses to work on the APICs?

Thank you!

10-20-2022 04:54 PM

You do not need to change the APIC connectivity preference.

Without reviewing your configuration, I'll share a common mistake that leaves the APIC unreachable when configuring InBand MGMT. Ensure that your Inband BD VLAN is configured in the VLAN pool for your APIC interfaces. So if APIC 1 connects to Leaf 101 Eth1/1, the appropriate Access policy configuration must be in place with the VLAN defined in that BD. You probably have a fault somewhere for this.
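As a rough illustration of the VLAN pool check described above, here is a minimal Python sketch. It assumes encaps are written as `vlan-N` strings and that you have copied the pool's block boundaries out of the APIC by hand; the `pool_blocks` values and both function names are hypothetical, not taken from any real fabric or Cisco API.

```python
import re


def vlan_id(encap: str) -> int:
    """Extract the numeric ID from an encap string such as 'vlan-203'."""
    match = re.fullmatch(r"vlan-(\d+)", encap.strip())
    if match is None:
        raise ValueError(f"not a VLAN encap: {encap!r}")
    return int(match.group(1))


def vlan_in_pool(encap: str, blocks: list[tuple[str, str]]) -> bool:
    """Return True if encap falls inside any (start, end) block, inclusive."""
    vid = vlan_id(encap)
    return any(vlan_id(lo) <= vid <= vlan_id(hi) for lo, hi in blocks)


# Hypothetical pool contents, for illustration only.
pool_blocks = [("vlan-100", "vlan-199"), ("vlan-200", "vlan-210")]

print(vlan_in_pool("vlan-203", pool_blocks))  # in-band VLAN inside a block
print(vlan_in_pool("vlan-300", pool_blocks))  # VLAN outside every block
```

If the in-band VLAN is not covered by any block in the pool attached to the APIC-facing ports, that is the misconfiguration this reply is warning about.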

10-21-2022 09:46 AM

This is most likely the issue. I didn't perform any manual configuration on the APIC ports connected to the Leaf switches. The APIC ports connected to the leaf switches are currently using the auto-discovery settings.

So you're saying that I have to manually configure the APIC interfaces that connect to the Leaf switches using access policies to make sure the VLAN tag is allowed on the Leaf interface connected to the APIC?

10-21-2022 10:35 AM

Quick question because I don't want to create an OUTAGE losing configuration connectivity to my Leaf switches. If I add access policy to the ports connected to my leaf switches, I will not lose configuration connectivity to the leaf switches?

10-21-2022 04:03 PM

Hi @WarrenT-CO ,

> Quick question because I don't want to create an OUTAGE losing configuration connectivity to my Leaf switches. If I add access policy to the ports connected to my leaf switches, I will not lose configuration connectivity to the leaf switches?

You won't have any connectivity interruption IF

- you leave LLDP enabled on the Access Port Policy Group (APPG) you apply to the APIC interfaces

- you don't do any other weird stuff in the APPG, like set the interface speed to an incompatible setting

If you are paranoid, apply the APPG to one interface at a time, which might be tricky depending on your Interface Profile configuration.

Did you check my PM?

Forum Tips: 1. Paste images inline - don't attach. 2. Always mark helpful and correct answers, it helps others find what they need.

10-22-2022 08:49 AM

Yes I read your previous post and your blog and followed the exact same steps. I even created a new vlan pool and applied the access policy to the APIC interface but still no success.

Is there a way to see the allowed vlans being trunked across the interface between the APIC and the connected Leaf switch?

10-22-2022 12:48 PM

Hi @WarrenT-CO ,

> Yes I read your previous post and your blog and followed the exact same steps. I even created a new vlan pool and applied the access policy to the APIC interface but still no success.

OK. I may have to re-visit my blog - more research on this in the answer to your other question.

> Is there a way to see the allowed vlans being trunked across the interface between the APIC and the connected Leaf switch?

Yes. I can answer that - but first you'll need to know the canonical VLAN value of your mgmt VLAN on each attached switch. In my case I have only one APIC, and it's connected to interface ethernet1/1 on both leaf 2201 and 2202, and my inb mgmt VLAN is vlan 203, so to find the canonical VLAN value I'll use the following (forget the fancy egrep bit - that's there to reduce the output):

apic1# fabric 2201-2202 show vlan extended | egrep "Node|--|vlan-203"

----------------------------------------------------------------

Node 2201 (Leaf2201)

----------------------------------------------------------------

---- -------------------------------- ---------------- ------------------------

10 mgmt:default:inb_EPG vlan-203 Eth1/1

----------------------------------------------------------------

Node 2202 (Leaf2202)

----------------------------------------------------------------

---- -------------------------------- ---------------- ------------------------

13 mgmt:default:inb_EPG vlan-203 Eth1/1

so I can see that on leaf 2201, my inb mgmt canonical vlan is 10, and on leaf 2202, my inb mgmt canonical vlan is 13
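If you have more than a couple of leaves, reading the canonical VLAN off by eye gets tedious. The following is a minimal Python sketch that pulls the node-to-canonical-VLAN mapping out of text shaped like the output above. The `SAMPLE` text is a trimmed copy of that output, and the function name `canonical_vlans` is hypothetical; real output may contain extra columns or rows that this simple parser does not anticipate.

```python
import re

# Trimmed copy of the "show vlan extended" output shown in this reply.
SAMPLE = """\
----------------------------------------------------------------
 Node 2201 (Leaf2201)
----------------------------------------------------------------
 10   mgmt:default:inb_EPG             vlan-203         Eth1/1
----------------------------------------------------------------
 Node 2202 (Leaf2202)
----------------------------------------------------------------
 13   mgmt:default:inb_EPG             vlan-203         Eth1/1
"""


def canonical_vlans(output: str, encap: str) -> dict[int, int]:
    """Map node ID -> canonical (leaf-local) VLAN for the given encap."""
    result: dict[int, int] = {}
    node = None
    for line in output.splitlines():
        node_match = re.match(r"\s*Node\s+(\d+)\s", line)
        if node_match:
            node = int(node_match.group(1))
            continue
        fields = line.split()
        # A data row starts with the numeric canonical VLAN and carries the encap.
        if node is not None and len(fields) >= 3 and fields[0].isdigit() and encap in fields:
            result[node] = int(fields[0])
    return result


print(canonical_vlans(SAMPLE, "vlan-203"))
```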

Now, to see if these VLANs are enabled on interface ethernet1/1 on both leaf 2201 and 2202, it's as simple as:

apic1# fabric 2201-2202 show interface ethernet 1/1 switchport

----------------------------------------------------------------

Node 2201 (Leaf2201)

----------------------------------------------------------------

Name: Ethernet1/1

Switchport: Enabled

Switchport Monitor: not-a-span-dest

Operational Mode: trunk

Access Mode Vlan: unknown (default)

Trunking Native Mode VLAN: unknown (default)

Trunking VLANs Allowed: 8-10

FabricPath Topology List Allowed: 0

<snip>

----------------------------------------------------------------

Node 2202 (Leaf2202)

----------------------------------------------------------------

Name: Ethernet1/1

Switchport: Enabled

Switchport Monitor: not-a-span-dest

Operational Mode: trunk

Access Mode Vlan: unknown (default)

Trunking Native Mode VLAN: unknown (default)

Trunking VLANs Allowed: 8,12-13

FabricPath Topology List Allowed: 0

<snip>

So. Sure enough, the mgmt vlan is enabled on each leaf where the APIC connects.
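The comparison done by eye here (is the canonical VLAN inside the "Trunking VLANs Allowed" list?) can be sketched in a few lines of Python. This is only an illustration: the `observed` values are copied from the output in this reply, and the helper name `expand_vlan_list` is hypothetical.

```python
def expand_vlan_list(spec: str) -> set[int]:
    """Expand an allowed-VLAN list such as '8,12-13' into a set of VLAN IDs."""
    vlans: set[int] = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            start, end = part.split("-", 1)
            vlans.update(range(int(start), int(end) + 1))
        else:
            vlans.add(int(part))
    return vlans


# node -> (allowed list on Eth1/1, canonical in-band VLAN), copied from this reply
observed = {2201: ("8-10", 10), 2202: ("8,12-13", 13)}

for node, (allowed, canonical) in observed.items():
    status = "allowed" if canonical in expand_vlan_list(allowed) else "MISSING"
    print(f"leaf {node}: canonical VLAN {canonical} is {status} on Eth1/1")
```

Remember that canonical VLAN numbers are local to each leaf, so the comparison has to be done per node rather than against a single fabric-wide number.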

10-22-2022 08:57 AM

Hey, thank you for the help, but the issue is still that the APIC is not able to ping the Leaf switches, even though all the Leaf switches are able to communicate with one another over the in-band management VRF.

10-22-2022 02:15 PM - edited 10-22-2022 05:57 PM

Hi again @WarrenT-CO ,

OK, I guess I need to know what your L3Out config is - whether you are using the internal EPG method (method 1 in my blog) or the L3Out method (method 3 in that blog).

My lab is using the L3out approach (because you need to set up that way for NDI and for multi-site), and the APIC is able to ping the leaves, (BUT NOT the spine - and that's always been the case)

However, if I was troubleshooting this, I'd start with this logic

- Check the routing tables on the APIC

- Check the routing tables on the leaves

- Check the IP addresses of the leaves

- Check the ARP cache on the APIC

- Check the ARP cache on the leaves

- Check the MAC address tables on the leaves
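To keep the checklist repeatable, the commands used for each step in this reply can be generated from a few parameters. The Python sketch below only builds the command strings; it does not connect to anything. The function name `checklist_commands` and its parameters are hypothetical, and the command forms are simply the ones used in this reply.

```python
def checklist_commands(nodes: str, vrf: str, subnet: str, vlan: int, ports: list[str]) -> list[str]:
    """Build the CLI commands for the checklist, in the order listed above."""
    cmds = [
        "netstat -rn",                                        # APIC routing table (run from bash)
        f"fabric {nodes} show ip route {subnet} vrf {vrf}",   # leaf routing tables
        f"fabric {nodes} show ip interface brief vrf {vrf}",  # leaf IP addresses
        f"arp -ani bond0.{vlan}",                             # APIC ARP cache (run from bash)
        f"fabric {nodes} show ip arp vrf {vrf}",              # leaf ARP caches
    ]
    # One MAC address-table lookup per interface of interest.
    cmds += [f"fabric {nodes} show mac address-table interface {port}" for port in ports]
    return cmds


commands = checklist_commands("2201-2202", "mgmt:inb", "10.10.3.0/24", 203, ["eth1/1", "eth1/51"])
for command in commands:
    print(command)
```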

In my case, my mgmt VLAN is vlan 203, which is canonical VLAN 10 on leaf 2201 and canonical VLAN 13 on leaf 2202 (see previous reply) and my mgmt subnet is 10.10.3.0/24. Here we go:

Check the routing tables on the APIC

apic1# bash

admin@apic1:~> netstat -rn | grep 10\.10\.3\.

0.0.0.0 10.10.3.1 0.0.0.0 UG 0 0 0 bond0.203

10.10.0.0 10.10.3.1 255.255.0.0 UG 0 0 0 bond0.203

10.10.1.31 10.10.3.1 255.255.255.255 UGH 0 0 0 bond0.203

10.10.3.0 0.0.0.0 255.255.255.0 U 0 0 0 bond0.203

10.10.3.1 0.0.0.0 255.255.255.255 UH 0 0 0 bond0.203

10.10.3.1 is the L3Out IP address, and the mgmt subnet (10.10.3.0/24) is local to the mgmt vlan (203)
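To see which of these routes the APIC would pick for a given destination, here is a small Python sketch of a longest-prefix-match lookup using the standard `ipaddress` module. The `ROUTES` table is copied from the netstat output above; the `lookup` helper is hypothetical and ignores metrics and interface state, so treat it as an approximation of what the kernel does.

```python
import ipaddress

# (destination, gateway, netmask, interface), copied from the netstat output above
ROUTES = [
    ("0.0.0.0", "10.10.3.1", "0.0.0.0", "bond0.203"),
    ("10.10.0.0", "10.10.3.1", "255.255.0.0", "bond0.203"),
    ("10.10.1.31", "10.10.3.1", "255.255.255.255", "bond0.203"),
    ("10.10.3.0", "0.0.0.0", "255.255.255.0", "bond0.203"),
    ("10.10.3.1", "0.0.0.0", "255.255.255.255", "bond0.203"),
]


def lookup(dest: str):
    """Return (network, gateway) of the most specific route matching dest."""
    addr = ipaddress.ip_address(dest)
    best = None
    for network, gateway, mask, _iface in ROUTES:
        net = ipaddress.ip_network(f"{network}/{mask}")
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, gateway)
    return best


for target in ("10.10.3.5", "10.10.3.6", "10.10.1.31", "192.0.2.1"):
    net, gateway = lookup(target)
    via = "directly connected" if gateway == "0.0.0.0" else f"via {gateway}"
    print(f"{target}: matches {net}, {via}")
```

A gateway of 0.0.0.0 means the APIC treats the destination as on-link, so it will ARP for the leaf in-band addresses directly rather than sending the traffic to 10.10.3.1.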

Check the routing tables on the leaves

apic1# fabric 2201-2202 show ip route 10.10.3.0/24 vrf mgmt:inb

----------------------------------------------------------------

Node 2201 (Leaf2201)

----------------------------------------------------------------

IP Route Table for VRF "mgmt:inb"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%' in via output denotes VRF

10.10.3.0/24, ubest/mbest: 1/0, attached, direct, pervasive

*via 10.2.24.64%overlay-1, [1/0], 1d12h, static

recursive next hop: 10.2.24.64/32%overlay-1

----------------------------------------------------------------

Node 2202 (Leaf2202)

----------------------------------------------------------------

IP Route Table for VRF "mgmt:inb"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%' in via output denotes VRF

10.10.3.0/24, ubest/mbest: 1/0, attached, direct, pervasive

*via 10.2.24.64%overlay-1, [1/0], 1d12h, static

recursive next hop: 10.2.24.64/32%overlay-1

As expected the route exists locally on each leaf, and the next-hop for anything not on the local subnet is the spine proxy

Check the IP addresses of the leaves

apic1# fabric 2201-2202 show ip interface brief vrf mgmt:inb

----------------------------------------------------------------

Node 2201 (Leaf2201)

----------------------------------------------------------------

IP Interface Status for VRF "mgmt:inb"(5)

Interface Address Interface Status

vlan9 10.10.3.5/24 protocol-up/link-up/admin-up

----------------------------------------------------------------

Node 2202 (Leaf2202)

----------------------------------------------------------------

IP Interface Status for VRF "mgmt:inb"(23)

Interface Address Interface Status

vlan12 10.10.3.1/24 protocol-up/link-up/admin-up

Hmmm. Leaf2202's inb address is actually 10.10.3.6 - 10.10.3.1 is the IP address assigned to the inb Bridge Domain - so I expected it to show up, but I also expected 10.10.3.6 to show up too.

Let's look closer:

apic1# fabric 2202 show ip interface vlan12

----------------------------------------------------------------

Node 2202 (Leaf2202)

----------------------------------------------------------------

IP Interface Status for VRF "mgmt:inb"

vlan12, Interface status: protocol-up/link-up/admin-up, iod: 73, mode: pervasive

IP address: 10.10.3.6, IP subnet: 10.10.3.0/24 secondary

IP address: 10.10.3.1, IP subnet: 10.10.3.0/24

IP broadcast address: 255.255.255.255

IP primary address route-preference: 0, tag: 0

Ah. That explains how the IP addresses are assigned! And gives me a thought about the output of show ip interface brief for leaf 2201 - I'm going to check vlan9 on leaf2201

apic1# fabric 2201 show ip interface vlan9

----------------------------------------------------------------

Node 2201 (Leaf2201)

----------------------------------------------------------------

IP Interface Status for VRF "mgmt:inb"

vlan9, Interface status: protocol-up/link-up/admin-up, iod: 70, mode: pervasive

IP address: 10.10.3.5, IP subnet: 10.10.3.0/24

IP address: 10.10.3.1, IP subnet: 10.10.3.0/24 secondary

IP broadcast address: 255.255.255.255

IP primary address route-preference: 0, tag: 0

Of course - the BD IP address is on BOTH switches, but curiously shows up as a secondary IP address on leaf2201. (FWIW I later experimented with making the 10.10.3.1/24 IP address Primary in the BD, and the output shown immediately above changed to swap the primary and secondary addresses.)
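Since `show ip interface brief` only lists one address per interface, it can help to extract the primary and secondary addresses from the detailed output. Here is a minimal Python sketch that does so for text shaped like the vlan12 output above. The `SAMPLE` text is copied from that output and the function name `interface_addresses` is hypothetical.

```python
import re

# Copied from the "show ip interface vlan12" output on leaf 2202 above.
SAMPLE = """\
vlan12, Interface status: protocol-up/link-up/admin-up, iod: 73, mode: pervasive
IP address: 10.10.3.6, IP subnet: 10.10.3.0/24 secondary
IP address: 10.10.3.1, IP subnet: 10.10.3.0/24
IP broadcast address: 255.255.255.255
"""

ADDRESS_LINE = re.compile(r"IP address:\s*([\d.]+),\s*IP subnet:\s*\S+(\s+secondary)?")


def interface_addresses(output: str) -> dict[str, list[str]]:
    """Split the addresses on an interface into primary and secondary lists."""
    roles: dict[str, list[str]] = {"primary": [], "secondary": []}
    for line in output.splitlines():
        match = ADDRESS_LINE.search(line)
        if match:
            role = "secondary" if match.group(2) else "primary"
            roles[role].append(match.group(1))
    return roles


print(interface_addresses(SAMPLE))
```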

Check the ARP cache on the APIC

Start by loading the ARP cache with some pings

admin@apic1:~> ping 10.10.3.5 -c 2 #Leaf2201 inb IP address

PING 10.10.3.5 (10.10.3.5) 56(84) bytes of data.

64 bytes from 10.10.3.5: icmp_seq=1 ttl=64 time=0.199 ms

64 bytes from 10.10.3.5: icmp_seq=2 ttl=64 time=0.250 ms

--- 10.10.3.5 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1018ms

rtt min/avg/max/mdev = 0.199/0.224/0.250/0.029 ms

admin@apic1:~> ping 10.10.3.6 -c 2 #Leaf2202 inb IP address

PING 10.10.3.6 (10.10.3.6) 56(84) bytes of data.

64 bytes from 10.10.3.6: icmp_seq=1 ttl=63 time=0.221 ms

64 bytes from 10.10.3.6: icmp_seq=2 ttl=63 time=0.267 ms

--- 10.10.3.6 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1005ms

rtt min/avg/max/mdev = 0.221/0.244/0.267/0.023 ms

admin@apic1:~> ping 10.10.3.1 -c 2 #Leaf2202 L3Out router IP address

PING 10.10.3.1 (10.10.3.1) 56(84) bytes of data.

64 bytes from 10.10.3.1: icmp_seq=1 ttl=64 time=0.210 ms

64 bytes from 10.10.3.1: icmp_seq=2 ttl=64 time=0.203 ms

--- 10.10.3.1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1058ms

rtt min/avg/max/mdev = 0.203/0.206/0.210/0.014 ms

Curious - the TTL for the replies from 10.10.3.6 (Leaf2202 inb IP address) was 63, indicating that the ICMP reply had passed through a router! Not sure what's going on there, might have something to do with the secondary address thing discovered above. Let's look at the ARP table
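The reasoning behind "TTL 63 means a router was involved" is simple arithmetic: each routed hop decrements the TTL by one, so the hop count is the sender's initial TTL minus the TTL seen in the reply. The Python sketch below assumes the replying device started from one of the commonly used initial values (64, 128 or 255), which is a guess rather than something the ping output proves; the function name is hypothetical.

```python
# Initial TTL values commonly used by IP stacks; an assumption, not a guarantee.
COMMON_INITIAL_TTLS = (64, 128, 255)


def estimate_hops(reply_ttl: int) -> int:
    """Guess how many routed hops a reply crossed from its received TTL."""
    if not 1 <= reply_ttl <= 255:
        raise ValueError("TTL must be between 1 and 255")
    # Assume the smallest common initial TTL that is not below the observed one.
    initial = min(ttl for ttl in COMMON_INITIAL_TTLS if ttl >= reply_ttl)
    return initial - reply_ttl


for address, ttl in (("10.10.3.5", 64), ("10.10.3.6", 63), ("10.10.3.1", 64)):
    print(f"{address}: reply TTL {ttl}, estimated routed hops {estimate_hops(ttl)}")
```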

apic1# bash

admin@apic1:~> arp -ani bond0.203

? (10.10.3.6) at 00:22:bd:f8:19:fe [ether] on bond0.203

? (10.10.3.1) at 00:22:bd:f8:19:fe [ether] on bond0.203

? (10.10.3.8) at <incomplete> on bond0.203

? (10.10.3.5) at 00:22:bd:f8:19:fe [ether] on bond0.203

Well. That's going to be a problem! Multiple IP addresses sharing the same MAC address - which may explain that TTL of 63 above! Of course we already know that 10.10.3.1 and 10.10.3.6 share an interface, so they'd be expected to have the same MAC.

But this could well be why your APIC is having trouble pinging!
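Spotting shared MAC addresses by eye works for four entries but not for forty. Here is a minimal Python sketch that groups the IP addresses in `arp -an` style output by MAC address and reports any MAC that answers for more than one IP. The `SAMPLE` text is copied from the ARP table above; the function name `ips_by_mac` is hypothetical.

```python
import re
from collections import defaultdict

# Copied from the "arp -ani bond0.203" output above.
SAMPLE = """\
? (10.10.3.6) at 00:22:bd:f8:19:fe [ether] on bond0.203
? (10.10.3.1) at 00:22:bd:f8:19:fe [ether] on bond0.203
? (10.10.3.8) at <incomplete> on bond0.203
? (10.10.3.5) at 00:22:bd:f8:19:fe [ether] on bond0.203
"""

ARP_ENTRY = re.compile(r"\((\d+\.\d+\.\d+\.\d+)\) at ([0-9a-fA-F:]{17})\b")


def ips_by_mac(arp_output: str) -> dict[str, list[str]]:
    """Group resolved ARP entries by MAC address; incomplete entries are skipped."""
    groups: dict[str, list[str]] = defaultdict(list)
    for line in arp_output.splitlines():
        match = ARP_ENTRY.search(line)
        if match:
            groups[match.group(2).lower()].append(match.group(1))
    return dict(groups)


shared = {mac: ips for mac, ips in ips_by_mac(SAMPLE).items() if len(ips) > 1}
print(shared)
```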

Now the interesting thing is that the MAC address for 10.10.3.1 is defined in the inb Bridge Domain - and so I tried changing the MAC address for 10.10.3.1 to 00:22:bd:f8:19:fd and NOW the ARP cache looks like:

admin@apic1:~> arp -ani bond0.203

? (10.10.3.6) at 00:22:bd:f8:19:fd [ether] on bond0.203

? (10.10.3.1) at 00:22:bd:f8:19:fd [ether] on bond0.203

? (10.10.3.8) at <incomplete> on bond0.203

? (10.10.3.5) at 00:22:bd:f8:19:fd [ether] on bond0.203

ALL entries changed - the reason for this might become clearer in the next couple of steps

Check the ARP cache on the leaves

apic1# fabric 2201,2202 show ip arp vrf mgmt:inb

----------------------------------------------------------------

Node 2201 (Leaf2201)

----------------------------------------------------------------

Flags: * - Adjacencies learnt on non-active FHRP router

+ - Adjacencies synced via CFSoE

# - Adjacencies Throttled for Glean

D - Static Adjacencies attached to down interface

IP ARP Table for context mgmt:inb

Total number of entries: 1

Address Age MAC Address Interface

10.10.5.1 00:17:11 0022.bdf8.19ff vlan3

----------------------------------------------------------------

Node 2202 (Leaf2202)

----------------------------------------------------------------

Flags: * - Adjacencies learnt on non-active FHRP router

+ - Adjacencies synced via CFSoE

# - Adjacencies Throttled for Glean

D - Static Adjacencies attached to down interface

IP ARP Table for context mgmt:inb

Total number of entries: 0

Address Age MAC Address Interface

WELL. That was useless! The 10.10.5.1 IP address is the L3Out address! Nothing showing for the 10.10.3.0/24 subnet!

So that didn't help much. (Sorry)

Check the MAC address tables on the leaves

Before I do this, I don't think this is going to help much either, because we already know that the MAC address is repeated for each leaf, but what the heck. Let's look anyway. I know the APIC is attached via eth1/1 and the spine via eth1/51, so I'll look only at those interfaces.

apic1# fabric 2201,2202 show mac address-table interface eth1/1

----------------------------------------------------------------

Node 2201 (Leaf2201)

----------------------------------------------------------------

Legend:

* - primary entry, G - Gateway MAC, (R) - Routed MAC, O - Overlay MAC

age - seconds since last seen,+ - primary entry using vPC Peer-Link,

(T) - True, (F) - False

VLAN MAC Address Type age Secure NTFY Ports/SWID.SSID.LID

---------+-----------------+--------+---------+------+----+------------------

* 10 84b8.02f5.efd1 dynamic - F F eth1/1

* 8 84b8.02f5.efd1 dynamic - F F eth1/1

----------------------------------------------------------------

Node 2202 (Leaf2202)

----------------------------------------------------------------

Legend:

* - primary entry, G - Gateway MAC, (R) - Routed MAC, O - Overlay MAC

age - seconds since last seen,+ - primary entry using vPC Peer-Link,

(T) - True, (F) - False

VLAN MAC Address Type age Secure NTFY Ports/SWID.SSID.LID

---------+-----------------+--------+---------+------+----+------------------

apic1# fabric 2201,2202 show mac address-table interface eth1/51

----------------------------------------------------------------

Node 2201 (Leaf2201)

----------------------------------------------------------------

Legend:

* - primary entry, G - Gateway MAC, (R) - Routed MAC, O - Overlay MAC

age - seconds since last seen,+ - primary entry using vPC Peer-Link,

(T) - True, (F) - False

VLAN MAC Address Type age Secure NTFY Ports/SWID.SSID.LID

---------+-----------------+--------+---------+------+----+------------------

----------------------------------------------------------------

Node 2202 (Leaf2202)

----------------------------------------------------------------

Legend:

* - primary entry, G - Gateway MAC, (R) - Routed MAC, O - Overlay MAC

age - seconds since last seen,+ - primary entry using vPC Peer-Link,

(T) - True, (F) - False

VLAN MAC Address Type age Secure NTFY Ports/SWID.SSID.LID

---------+-----------------+--------+---------+------+----+------------------

As expected - pretty useless!

Where to from here?

I guess the burning question is

Why do you want to be able to ping these IP addresses?

I suspect the answer is "To give me warm fuzzy feelings" - which I count as a legitimate reason.

But from the point of view of what the inband management is used for, you really only care that external devices can access the inband management, so if that works OK, then maybe you should be happy.

Given the fact that ACI uses the same MAC address across multiple interfaces in the same broadcast domain, I think I've finally got the picture. It's worth a separate reply, so I'll post this as it is to show my working, and start a new more succinct reply.

10-28-2022 02:54 PM

Hello RedNectar, thanks for the detailed reply!

To answer your question: I want the APIC to be able to ping all the Leafs because in traditional networking you should be able to ping the default gateway and any host on the local subnet without any special network configuration. But I'm unable to ping the Bridge-Domain Default gateway or any of the leaf switches in the same subnet. Once local subnet connectivity is resolved I'll move further into the configuration and add shared-services connectivity to provide Inband-Management access to other EPGs.

Not sure what the issue is with this ACI Cluster, because the Inband-Management is a very straightforward configuration which I was able to bring up successfully on a second ACI Cluster, but I'll create a Cisco TAC case and ask Cisco to take a look at the configuration. Thank you very much for your assistance and your blog, which helped me configure the Inband-Management successfully on the second cluster.

10-28-2022 08:11 PM

Hi @WarrenT-CO ,

> To answer your question: I want the APIC to be able to ping all the Leafs because in traditional networking

ROFL. ACI ≠ traditional networking (I hope you have a sense of humour)

> you should be able to ping the default gateway and any host on the local subnet without any special network configuration.

This is true, and TBH I'm not quite sure exactly how the special In-Band EPG works, (when tackling this last time I discovered that the Leaf inband IP was configured as a second address on the special reserved inb BD) but until I learn differently, I'd EXPECT the default GW IP to exist on every leaf, plus the leaf's individual inband IP, so the APIC ought to be able to ping all leaf inband IPs

HOWEVER, keep in mind that for NORMAL EPGs and BDs, the default GW will not exist on any switch until an endpoint has been configured to be attached to that switch!

> But I'm unable to ping the Bridge-Domain Default gateway or any of the leaf switches in the same subnet.

I presume you mean unable to ping FROM the APIC. Yeah. Not sure why not for that one. I suspect it has something to do with the fact that the MAC addresses for all those inband leaf IP addresses are the same and can't be changed!

BUT - you should still be able to ping all leaf inband IPs from a different subnet (if a contract is in place)

10-22-2022 11:40 PM

Hi @WarrenT-CO ,

OK - I've just written a mammoth reply, read it if you want to see my brain ticking over, but I think the best way to answer this is to go back to ACI basics.

But to be really able to figure this out, I need to know if your design is the same as my L3 design, with your leaf inband management IPs in the same subnet as the IP address of the inb Bridge Domain.

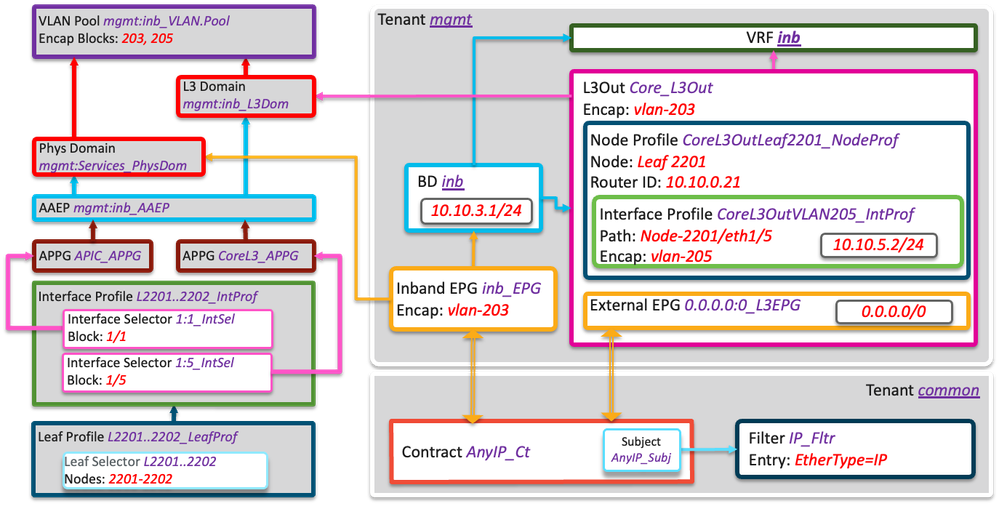

This is my setup (logical) System assigned names are underlined

The L3Out part is not that important as far as the problem we are discussing goes; it's more about the Inband EPG and the interfaces it is applied to.

Now, what's missing is:

- any kind of static mapping in the Inband EPG to the trunk ports Eth1/1 on both leaves (where the APIC is attached). This is configured dynamically and automatically on any port where an APIC is discovered via LLDP

- the inband IP addresses for the leaves.

- They are (as I discovered in my last missive) assigned as secondary IP addresses to the system inb Bridge Domain

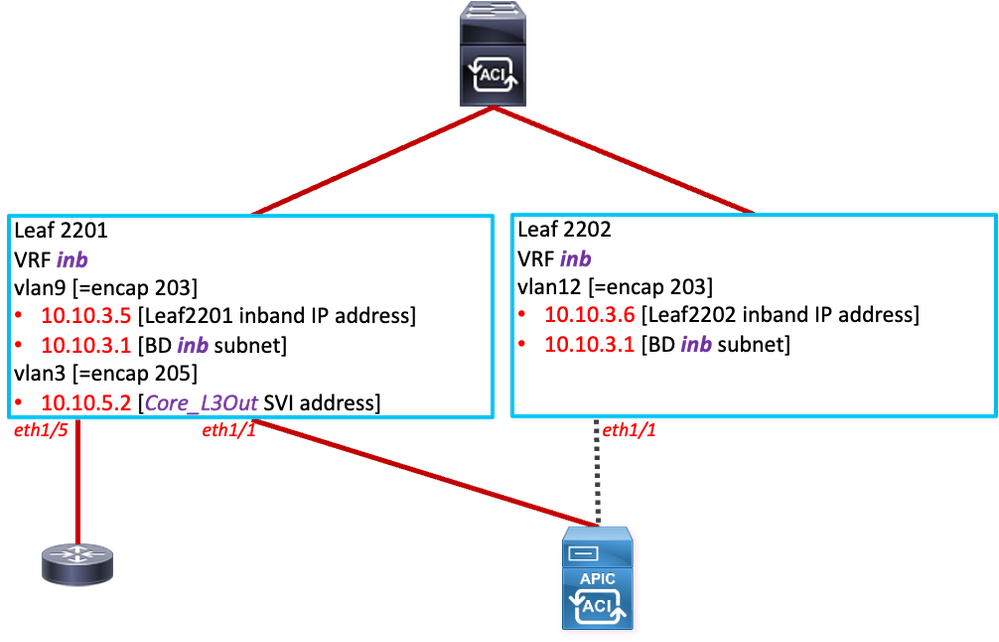

Physically, it looks like:

Anyway, let's see if this makes sense:

To begin, remember that the inband management is implemented as an EPG and Bridge domain.

Like any other BD, ACI expects to communicate with devices that are attached to Leaves, as opposed to communicating WITH the leaf.

That makes the APIC an endpoint (kind of) of the inb EPG - although it doesn't show up as an endpoint from a show tenant mgmt endpoints command.

So from the APIC, I'd expect to be able to ping the IP address of the inb Bridge Domain, but not necessarily the leaf IPs themselves, although I'd probably expect replies from the IP address of the leaf that the particular APIC is connected to.

In my lab, I noticed that when I got ping replies on the APIC when pinging 10.10.3.6 (Leaf 2202 inband IP), the TTL of the reply was 63. I now realise that this is because the APIC only has direct connectivity to Leaf2201 - to get to Leaf2202, the ping (and reply) had to go the long way around - in other words, Leaf2201 was pushing the pings to the Spine Proxy.

Anyway - I hope I've shone some light on this for you. I've learned a bit along the way as well!

10-20-2022 09:55 PM - edited 10-20-2022 10:03 PM

Hi @WarrenT-CO,

If you do a search for Rednectar "Configuring in-band management for the APIC on Cisco ACI", you might come across a series of three blog posts I wrote ages ago which may help! (But ignore the L2Out version - that's not a good method. Use an EPG or L3Out.)
