- Unified Communications Virtualization Deployment Guide On UCS B-Series Blade Servers

Posted on 10-16-2009 03:51 PM - edited on 03-25-2019 06:26 PM by ciscomoderator

- Preface

- Audience

- Revision History

- UCS Architecture Overview

- UCS Architecture Building Blocks

- Utility Computing with Stateless Server

- The Unified Fabric

- Types of UCS Fabric Interconnect Ports

- Fabric Interconnect (6120XP) Ethernet Switching Modes

- End-host Mode

- Switch Mode

- Setting up UCS Manager

- Initial Configuration and Policies

- Configure Ports on the UCS Manager

- Connecting UCS-6120XP Switch to UCS-5108 Chassis

- Connecting UCS-6120XP Switch to Uplink Ethernet Switch

- Service Profile Creation

- Storage

- IPMI Profile

Preface

This document provides design considerations and guidelines for deploying enterprise network solutions that include the Cisco Unified Computing System (Cisco UCS). It builds upon ideas and concepts presented in the Data Center, Storage Area Networking and Virtualization Design Guides, which are available online at

http://www.cisco.com/go/datacenter

Due to the length and size of this document, the information is divided into smaller sections and may include links to several other documents. The information in this document was created from devices in a specific lab environment. All of the devices used in this document started with a default configuration. If your network is live, make sure that you understand the potential impact of any command.

Audience

This design and deployment guide is intended for the system architects, designers, engineers, and Cisco channel partners who want to apply design practices for the Cisco Unified Computing System and Virtualization.

This document assumes that you are already familiar with basic Data Center, Virtualization and Storage Area Networking (SAN) terms and concepts and with the information presented in the Data Center Design Guides. To review those terms and concepts, refer to the documentation at the preceding URL.

Revision History

This document may be updated at any time without notice.

| Revision Date | Comments |
| --- | --- |
| November 16th, 2009 | Initial version of this document |
| April 23rd, 2010 | Added SAN Storage Deployment Chapter |

UCS Architecture Overview

Virtualization has created a market transition in which IT departments are trying to reduce costs and increase flexibility. IT organizations are constantly working against existing rigid, inflexible hardware platforms. Data center administrators have had to spend significant time on manual procedures for basic tasks instead of focusing on more strategic, proactive initiatives. The Cisco Unified Computing System unites compute, network, storage access, and virtualization into a single, highly available, cohesive system. It is designed and optimized for various layers of virtualization (server virtualization, network virtualization, and so on) to provide an environment where applications run on one or more uniform pools of server resources. The system integrates a 10 Gigabit Ethernet unified network fabric with x86-architecture servers.

UCS Architecture Building Blocks

From a hardware and software architecture point of view, there are six main building blocks.

- UCS Blade Server Chassis

- UCS B-Series Blade Server

- UCS Blade Server Adapters, also known as Mezzanine Cards

- UCS Fabric Interconnect Switch

- UCS Fabric Extender

- UCS Manager

Five of these are physical components; the sixth, UCS Manager, is software.

UCS Blade Server Chassis

The Cisco UCS 5108 Blade Server Chassis is six rack units (6RU) high and mounts in an industry-standard 19-inch rack. A chassis can accommodate up to eight half-width or four full-width Cisco UCS B-Series Blade Servers, and both form factors can be used within the same chassis. The rear of the chassis contains eight hot-swappable fans, four power connectors (one per power supply), and two I/O bays for Cisco UCS 2104XP Fabric Extenders. A passive midplane provides up to 20 Gbps of I/O bandwidth per server slot and up to 40 Gbps of I/O bandwidth for two slots. The chassis is capable of supporting future 40 Gigabit Ethernet standards. Furthermore, the midplane provides 8 blades with 1.2 terabits (Tb) of available Ethernet throughput for future I/O requirements.

UCS B-Series Blade Server

A blade server is a modular server optimized to minimize the use of physical space. It looks very much like a line card that is inserted into a blade server chassis or enclosure. Typically a blade server provides only compute resources (for instance processor, memory and I/O cards). The UCS-B200-M1 server is a half-width, two-socket blade server with two hard drive slots and 12 DIMM slots. Cisco UCS B-Series Blade Servers are based on Intel Xeon 5500 Series processors. UCS B200-M1 series blade servers can support:

- Two Intel Xeon 5500 series processors

- Up to 96 GB of DDR3 memory

- Up to two optional Small Form Factor (SFF) Serial Attached SCSI (SAS) hard drives in 73GB 15K RPM and 146GB 10K RPM versions

- LSI Logic 1064e controller and integrated RAID

UCS Blade Server Adapters or Mezzanine Cards

Blade servers in the Cisco UCS Blade Server Chassis have access to the network fabric through mezzanine adapters that provide up to 40 Gbps of throughput per blade server. Several adapter (mezzanine card) options are available for UCS B-Series blade servers: a Cisco UCS VIC M81KR Virtual Interface Card, a converged network adapter (Emulex or QLogic compatible), or a single 10 Gigabit Ethernet adapter.

Refer to the following URL for further details:

http://www.cisco.com/en/US/prod/ps10265/ps10280/cna_models_comparison.html

Fabric Interconnect Switch

The fabric switch, or fabric interconnect switch as this document calls it, connects the blade servers to the rest of the data center. The Cisco UCS 6100 Series Fabric Interconnect Switch offers line-rate, low-latency, lossless 10 Gigabit Ethernet and Fibre Channel over Ethernet (FCoE) functions.

The Cisco UCS 6100 Series Fabric Interconnect Switch provides the management and communication backbone for the Cisco UCS B-Series Blades and UCS 5100 Series Blade Server Chassis. It is built on the same ASICs as the Nexus 5000 switch, but it is a different box and is not interchangeable with the Nexus 5000 series. For example, you cannot upgrade a Nexus 5K to a UCS-6120XP fabric interconnect switch, nor can you downgrade a UCS-6120XP to a Nexus 5K. They are different systems with different internal components.

NOTE:

Nexus 5000 series switches are mainly used for data center applications. A discussion of Nexus 5000 series switches is outside the scope of this document. Please refer to the following URL for more information.

http://www.cisco.com/en/US/products/ps9670/index.html

The Cisco 6100 Series hosts and runs the Cisco UCS Manager in a highly available configuration, enabling the fabric interconnects to fully manage all Cisco Unified Computing System elements.

Fabric Extender

The Fabric Extender is a line card that inserts into one of the blade chassis enclosure slots. The actual wiring between the UCS chassis and the UCS Fabric Interconnect Switch ports runs through the Fabric Extender module. The Cisco UCS 2100 Series Fabric Extenders bring the unified fabric into the blade server enclosure, providing 10 Gigabit Ethernet connections between blade servers and the fabric interconnect, simplifying diagnostics, cabling, and management. The Cisco UCS 2104XP has four 10 Gigabit Ethernet, FCoE-capable, Small Form-Factor Pluggable Plus (SFP+) ports that connect the blade chassis to the fabric interconnect. Each Cisco UCS 2104XP also has eight 10 Gigabit Ethernet ports connected through the midplane, one to each half-width slot in the chassis. Typically configured in pairs for redundancy, two fabric extenders provide up to 80 Gbps of I/O to the chassis.
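
The 80 Gbps figure follows directly from the port counts quoted above. As a minimal sketch of that arithmetic (the function and constant names are illustrative and not part of any Cisco tool):

```python
# Worked arithmetic for chassis uplink bandwidth, using the figures quoted above.
LINK_SPEED_GBPS = 10   # each fabric extender uplink is 10 Gigabit Ethernet
UPLINKS_PER_FEX = 4    # the UCS 2104XP has four SFP+ uplink ports
FEX_PER_CHASSIS = 2    # fabric extenders are typically deployed in pairs


def chassis_uplink_bandwidth_gbps(fex_count=FEX_PER_CHASSIS,
                                  uplinks_per_fex=UPLINKS_PER_FEX,
                                  link_speed_gbps=LINK_SPEED_GBPS):
    """Aggregate bandwidth between one chassis and the fabric interconnects."""
    return fex_count * uplinks_per_fex * link_speed_gbps


if __name__ == "__main__":
    # Fully cabled, redundant chassis: 2 x 4 x 10
    print(chassis_uplink_bandwidth_gbps())
    # A single fabric extender with only two links cabled: 1 x 2 x 10
    print(chassis_uplink_bandwidth_gbps(fex_count=1, uplinks_per_fex=2))
```

The second call corresponds to a non-redundant lab setup with one fabric extender and two twinax links, which yields a quarter of the fully cabled figure.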

UCS Manager

Cisco UCS Manager acts as the central nervous system of the Cisco Unified Computing System. It integrates the system components from end to end so the system can be managed as a single logical entity. Cisco UCS Manager provides an intuitive GUI, a command-line interface (CLI), and a robust API so that it can be used alone or integrated with other third-party tools. Everything about server configuration (system identity, firmware revisions, network interface card (NIC) settings, HBA settings, and network profiles) can be managed from a single console, eliminating the need for separate element managers for every system component. Cisco UCS Manager is embedded in the fabric interconnect switches.

Utility Computing with Stateless Server

UCS provides the infrastructure to support stateless server environments. Utility computing, in general, allows logical servers (OS and applications) to run on any physical server or blade by treating the traditional hardware identifiers (WWN, MAC, UUID, etc.) as an integral part of the logical server identity rather than of the physical hardware. This logical server identity is called a "UCS Service Profile".
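
The idea of an identity that travels with the logical server can be illustrated with a small model. This is only a conceptual sketch: the class and field names are hypothetical, the example identifier values are made up, and none of it reflects how UCS Manager stores profiles internally.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class ServiceProfile:
    """Logical server identity (illustrative fields only)."""
    name: str
    uuid: str
    mac: str
    wwnn: str


class Chassis:
    """Tracks which profile, if any, is associated with each blade slot."""

    def __init__(self, slots: int = 8) -> None:
        self.slots: Dict[int, Optional[ServiceProfile]] = {
            s: None for s in range(1, slots + 1)
        }

    def associate(self, profile: ServiceProfile, slot: int) -> None:
        if self.slots[slot] is not None:
            raise ValueError(f"slot {slot} already has a profile")
        # A profile lives on one blade at a time: detach it from any other slot.
        for s, p in self.slots.items():
            if p == profile:
                self.slots[s] = None
        self.slots[slot] = profile

    def identity_of(self, slot: int) -> Optional[ServiceProfile]:
        return self.slots[slot]


if __name__ == "__main__":
    chassis = Chassis()
    esx = ServiceProfile(
        name="esx01",                                  # made-up example values
        uuid="00000000-0000-0000-0000-000000000001",
        mac="00:25:B5:00:00:01",
        wwnn="20:00:00:25:B5:00:00:01",
    )
    chassis.associate(esx, slot=1)
    chassis.associate(esx, slot=2)   # move the logical server to another blade
    print(chassis.identity_of(1), chassis.identity_of(2))
```

After the move, the blade in slot 2 presents the same UUID, MAC and WWNN that slot 1 presented before, which is what makes the physical blade interchangeable.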

The Unified Fabric

The unified fabric is based on 10 Gigabit Ethernet. The unified fabric supports Ethernet as well as Fibre Channel over Ethernet (FCoE). Virtualized environments need I/O configurations that support the movement of virtual machines (VMs) across physical servers (also called compute) while maintaining individual VM bandwidth and security requirements. The Cisco Unified Computing System delivers on this need with 10-Gbps unified network fabric.

Types of UCS Fabric Interconnect Ports

Cisco UCS Fabric Interconnects provide these port types:

Server Ports

Server Ports handle data traffic between the Fabric Interconnect and the adapter cards on the servers.

You can only configure Server Ports on the fixed port module. Expansion modules do not support Server Ports.

Uplink Ethernet Ports

Uplink Ethernet Ports connect to external LAN Switches. Network bound Ethernet traffic is pinned to one of these ports.

You can configure Uplink Ethernet Ports on either the fixed module or an expansion module.

Uplink Ports are also known as border links or northbound ports.

Uplink Fibre Channel Ports

Uplink Fibre Channel Ports connect to external SAN Switches.

They can also connect directly to the SAN storage server itself using FC protocols (typically in lab-based environments).

Network bound Fibre Channel traffic is pinned to one of these ports.

You can only configure Uplink Fibre Channel Ports on an expansion module. The fixed module does not include Uplink Fibre Channel Ports.
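
The placement rules for the three port types can be summarized as a lookup table. The sketch below encodes only the rules stated above; the role and module labels are hypothetical strings chosen for illustration, not UCS Manager identifiers.

```python
# Which module types may host each port role, per the rules described above.
ALLOWED_MODULES = {
    "server": {"fixed"},                        # fixed port module only
    "uplink-ethernet": {"fixed", "expansion"},  # either module
    "uplink-fc": {"expansion"},                 # expansion module only
}


def can_configure(role: str, module: str) -> bool:
    """Return True if a port on `module` may be configured with `role`."""
    if role not in ALLOWED_MODULES:
        raise ValueError(f"unknown port role: {role!r}")
    return module in ALLOWED_MODULES[role]


if __name__ == "__main__":
    for role in ALLOWED_MODULES:
        for module in ("fixed", "expansion"):
            print(f"{role:16} on {module:9} -> {can_configure(role, module)}")
```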

Fabric Interconnect (6120XP) Ethernet Switching Modes

The Ethernet switching mode determines how the Fabric Interconnect behaves as a switching device between the servers and the network. The UCS fabric interconnect operates in either of the following Ethernet switching modes:

- End-Host Mode

- Switch Mode

End-host Mode

End-host mode allows the Fabric Interconnect to act as an end host to the network, representing all servers (hosts) connected to it through vNICs. This is achieved by pinning vNICs to uplink ports (either dynamically or by hard pinning), which provides redundancy toward the network and makes the uplink ports appear as server ports to the rest of the fabric. In end-host mode, the Fabric Interconnect does not run the Spanning Tree Protocol (STP); it avoids loops by preventing uplink ports from forwarding traffic to each other and by denying egress server traffic on more than one uplink port at a time.

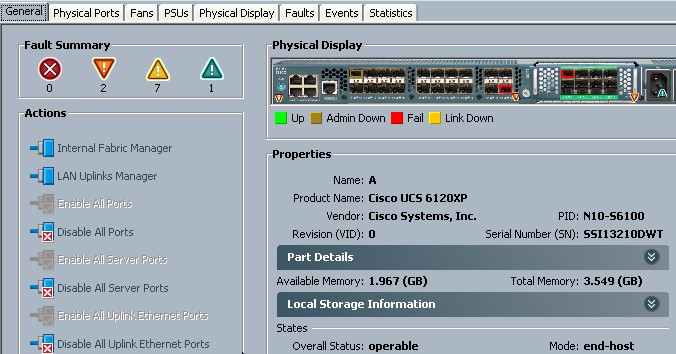
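
The pinning and loop-avoidance behavior described for end-host mode can be modeled in a few lines. This is a conceptual sketch only: the round-robin choice for dynamic pinning is an assumption made for illustration (the document does not say how the fabric interconnect selects an uplink), and the function and port names are hypothetical.

```python
from itertools import cycle
from typing import Dict, List, Optional


def pin_vnics(vnics: List[str], uplinks: List[str],
              hard_pins: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Pin every vNIC to exactly one uplink.

    Hard pins are honored as given; the remaining vNICs are spread
    round-robin over the uplinks (an illustrative assumption).
    """
    if not uplinks:
        raise ValueError("at least one uplink port is required")
    hard_pins = hard_pins or {}
    for vnic, uplink in hard_pins.items():
        if uplink not in uplinks:
            raise ValueError(f"{vnic} is hard pinned to unknown uplink {uplink}")
    rotation = cycle(uplinks)
    pinning = {}
    for vnic in vnics:
        if vnic in hard_pins:
            pinning[vnic] = hard_pins[vnic]
        else:
            pinning[vnic] = next(rotation)
    return pinning


def egress_ports(ingress: str, pinning: Dict[str, str],
                 uplinks: List[str]) -> List[str]:
    """Ports a frame may leave on, given where it entered."""
    if ingress in uplinks:
        # Uplink-to-uplink forwarding is never allowed; only the vNICs
        # pinned to this uplink are candidates.
        return sorted(v for v, u in pinning.items() if u == ingress)
    # Server traffic leaves on its single pinned uplink and no other.
    return [pinning[ingress]]


if __name__ == "__main__":
    uplinks = ["uplink1", "uplink2"]
    pinning = pin_vnics(["vnic-a", "vnic-b", "vnic-c"], uplinks)
    print(pinning)
    print(egress_ports("uplink1", pinning, uplinks))
    print(egress_ports("vnic-b", pinning, uplinks))
```

Because no frame that arrives on an uplink can ever leave on another uplink, the fabric interconnect cannot form a bridging loop with the upstream network, which is why STP is unnecessary in this mode.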

For the purposes of this document, and for running other applications using VMware on UCS, it is recommended to configure the fabric interconnect in End-Host mode. The setting can be easily located on the General Fabric Extender screen.

Switch Mode

Switch mode is the traditional Ethernet switching mode. In this mode the Fabric Interconnect runs STP to avoid loops, and broadcast and multicast packets are handled in the traditional way.

Switch mode is not the default Ethernet switching mode in UCS, and should be used only if the Fabric Interconnect is directly connected to a router, or if either of the following is used upstream:

- Layer 3 aggregation

- VLAN in a box

Click here for more information on the Ethernet Switching mode with UCS

Setting up UCS Manager

The following table describes the hardware components involved in deploying a UCS-based setup. A network contains more pieces than are shown here; the table lists only the important pieces in the UCS design.

| Hardware Component Name | Description |
| --- | --- |
| UCS-6120XP | 20 fixed ports, 10GE/FCoE, 1 expansion module |
| UCS-2104XP | 4-port Fabric Extender |
| UCS-B200-M1 | Half-width blade server |
| Catalyst 6506 | Switch for uplink Ethernet connectivity |
| LC to SC cable | Standard fiber cable to connect 6120XP to Cat6K switch |
| SFP-10G-SR | Transceiver in 6120XP for Ethernet uplink |
| X2-10GB-SR | Transceiver in CAT6K for Ethernet connectivity with UCS-6120XP |
| SFP-H10GB-CU5M | Twinax cable to connect Fabric Extender to UCS-6120XP |

The following steps are based on the UCS Manager GUI setup process. A CLI setup process is also available but is not discussed in this document. Open a standard web browser and browse to the management IP address of the UCS-6120XP switch.

The express setup option appears only the first time.

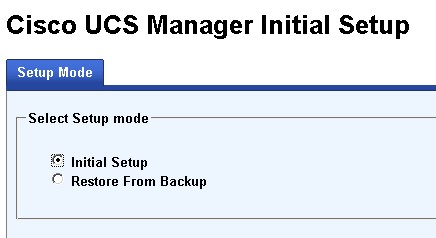

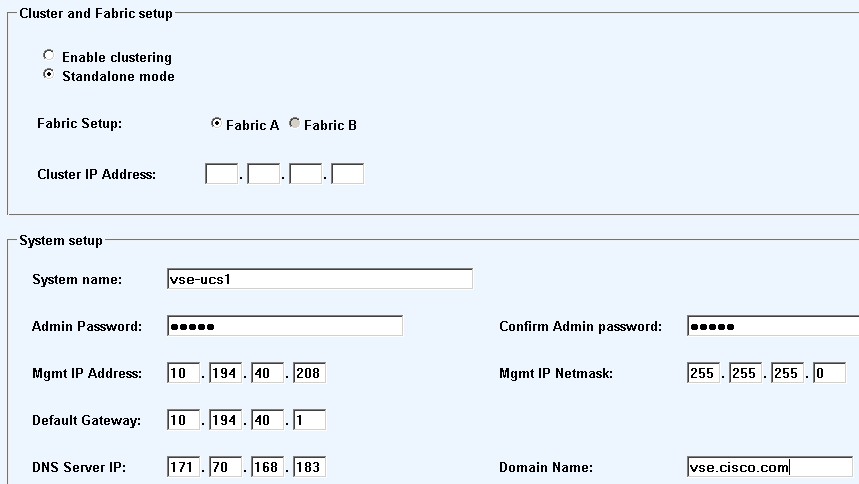

Continue with the express setup as follows

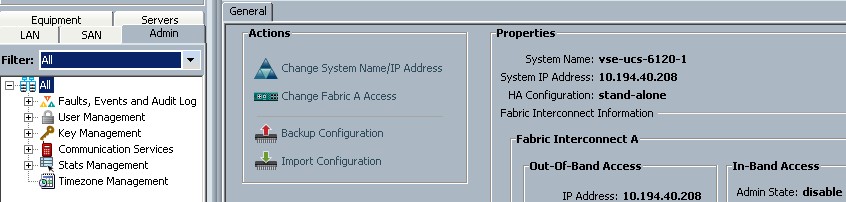

Because this configuration uses a single UCS chassis with a single UCS-6120XP fabric interconnect switch, Standalone mode is selected, as shown in the following picture.

- In a typical deployment, redundancy is recommended: two UCS chassis with two UCS-6120XP switches for high availability.

- The high availability mode is called "Clustering".

- In clustering (redundant) deployment mode, the secondary fabric interconnect syncs with the primary using a "Cluster Key ID", and its initial setup is streamlined based on the information downloaded from the primary.

After you click Submit, the fabric interconnect reboots. When you log in again after a while, the "Express Setup" option is gone and you see the following screen.

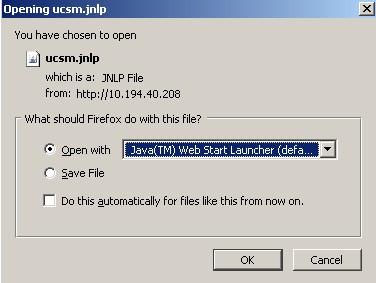

When you click the Launch button, you are asked to run the following Java program.

NOTE: It is highly recommended to use the latest Java JRE (Version 6 Update 17 was available at the time of writing). Testing has shown that previous versions might crash, fail to work, or hang the session to the UCS Manager.

- You can save this file on your desktop or in any other folder, so that next time you only need to run this program to load the UCS Manager.

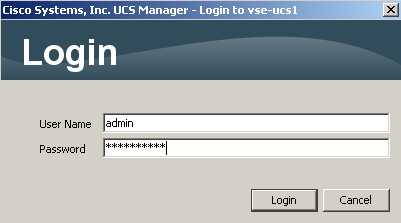

- Once the installation is done, you will be presented with the following login screen again.

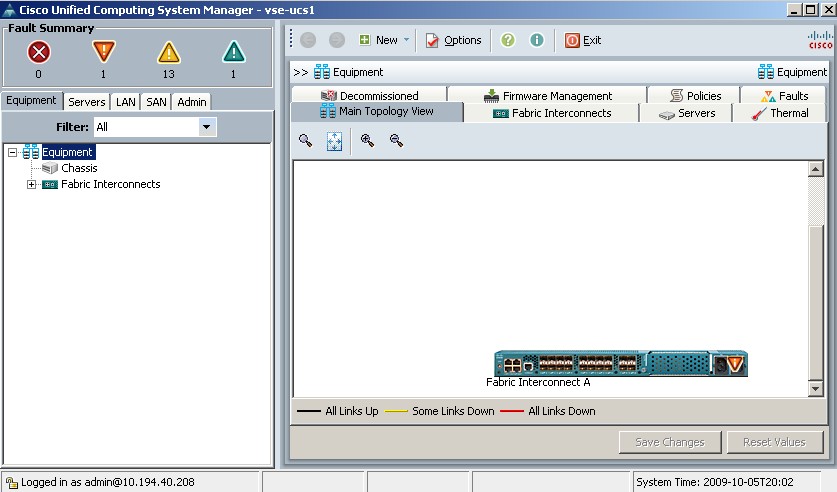

You now see the following screen, with only one Fabric Interconnect shown and no chassis so far.

- At this point the basic setup is complete.

- From here onward, it is up to you to connect the chassis to the Fabric Interconnect in this "non-redundant" setup.

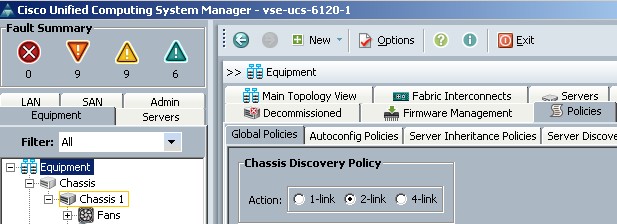

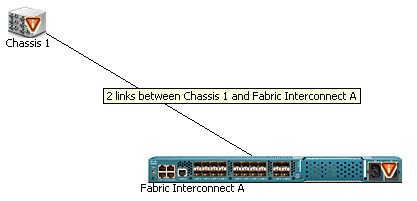

Initial Configuration and Policies

At this point it is a good idea to validate a few items before moving forward. If you intend to use two black twinax cables between the UCS-6120XP and the UCS chassis, define this under the Equipment policy setting as shown below. Note that only 1, 2 or 4 links are possible between the UCS-6120XP and the UCS chassis fabric extender. The purpose of these links is to distribute the blade server traffic load across the 10GE twinax cables. In our scenario there are only two half-width blades in a single-chassis system, so blade 1 uses link 1 and blade 2 uses link 2. This assignment is automatic and cannot be changed.

Also check the following configuration to make sure that, as admin, you can see all the tasks; by default only "Faults and Events" are selected in the filter.
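
The automatic blade-to-link assignment can be pictured as a fixed mapping from slot number to link number. The sketch below uses a simple modulo rule; this rule is an assumption that reproduces the example given here (blade 1 on link 1, blade 2 on link 2 with two links), and the document does not state how the remaining slots are assigned.

```python
def link_for_slot(slot: int, links: int) -> int:
    """Map a half-width blade slot (1..8) to a fabric extender link.

    Assumed rule: slots are distributed over the links in rotation.
    """
    if links not in (1, 2, 4):
        raise ValueError("only 1, 2 or 4 links are possible")
    if not 1 <= slot <= 8:
        raise ValueError("slot must be between 1 and 8")
    return (slot - 1) % links + 1


if __name__ == "__main__":
    for links in (1, 2, 4):
        mapping = {slot: link_for_slot(slot, links) for slot in range(1, 9)}
        print(f"{links} link(s): {mapping}")
```

Under this assumed rule, each link carries the traffic of a fixed set of slots, so adding links reduces how many blades share each 10GE cable.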

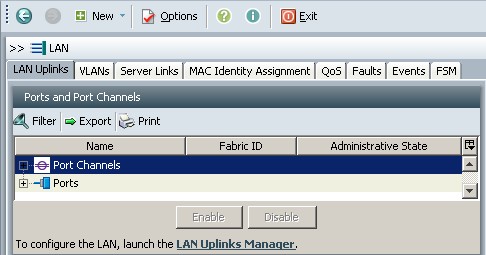

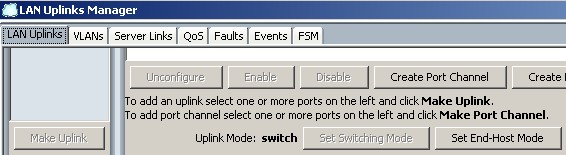

The recommended and default Ethernet mode of operation for the UCS-6120XP is End-Host mode. When you change the Ethernet switching mode, the UCS Manager logs you out and restarts the fabric interconnect (UCS-6120XP). In a cluster configuration, the UCS Manager restarts both fabric interconnects sequentially. Launch the "LAN Uplink Manager" to verify and configure the mode.

In the following picture, the mode is "Switch" right now. Change it to End-Host mode.

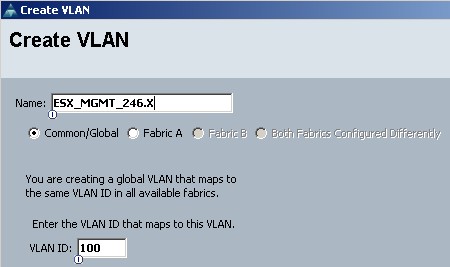

Also create a VLAN for VMware ESX server management and other management purposes. This VLAN should not carry other traffic.

For VLAN ID configuration, it is worth noting that Cisco does not recommend using VLAN 1, as most enterprises either remove VLAN 1 or disable it. In addition, when configuring VLANs, consider configuring all the VLANs that the servers will need at this point rather than later. If VLANs are added later, an updating template is necessary to propagate this information to the blade servers, which may cause the servers to reboot.
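
A planned VLAN list can be sanity-checked before it is entered in UCS Manager. The following is a minimal sketch with a hypothetical helper name; it checks only the VLAN 1 recommendation above plus the standard 802.1Q ID range of 1 to 4094, and it does not account for any IDs that a particular platform may reserve.

```python
def check_vlan_plan(vlan_ids):
    """Return a list of warnings for a planned set of VLAN IDs."""
    warnings = []
    for vid in sorted(set(vlan_ids)):
        if not 1 <= vid <= 4094:
            warnings.append(f"VLAN {vid}: outside the 802.1Q range 1-4094")
        elif vid == 1:
            warnings.append("VLAN 1: not recommended; often removed or disabled")
    return warnings


if __name__ == "__main__":
    # VLAN 100 is the ESX management VLAN used in this document;
    # the other IDs are made-up examples.
    for message in check_vlan_plan([1, 100, 200, 5000]):
        print(message)
```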

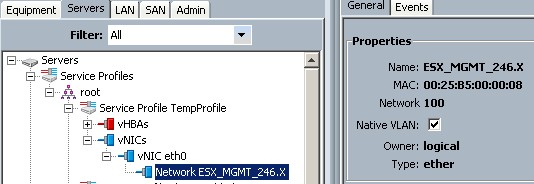

In the following diagram VLAN-id 100 is used as VMware ESX management VLAN.

On the CAT6K side, configure the following:

    interface TenGigabitEthernet5/2
     description UCS-6120-XP Fabric Interconnect Port 20
     switchport
     switchport trunk encapsulation dot1q
     switchport mode trunk
     spanning-tree portfast edge

Later, when configuring the vNIC, make sure to select the Native VLAN option as follows; otherwise the vNIC will not be reachable. This could be different if a native VLAN is configured on the CAT6K trunk port: if the native vlan keyword is configured on the CAT6K, do not check the Native VLAN option in UCS Manager.

Configure Ports on the UCS Manager

There are three types of ports that can be configured on the UCS-6120 switch:

- Uplink Ethernet Port

This port connects the UCS-6120 to the uplink device, which is typically a Nexus 5K access layer switch. Other options such as the Cisco Catalyst 6500, Cisco 4900M or C4948 10GE switches are also supported. In this setup the UCS-6120 is connected to the CAT6K's 10GE port (using an X2-10GB-SR transceiver in the CAT6K with an SC-LC fiber cable) for uplink Ethernet connectivity.

- Server Port

Server ports connect the UCS-6120 to the UCS fabric extender that is physically plugged into the UCS chassis (the UCS fabric interconnect switch connects to the blade servers via the UCS fabric extender).

- Uplink Fibre Channel Port

The Fibre Channel port does not come by default with the UCS-6120 switch. It must be ordered as an expansion module line item in the UCS order, and it plugs into the UCS-6120 expansion slot on the far right-hand side. This port provides connectivity to the SAN storage array. In this setup a Cisco Video Surveillance 4-RU Storage System with 42 x 1000-GB drives (part number CIVS-SS-4U-42000) is used as the SAN storage device (OEMed from the NexSan SataBeast Storage Array).

It supports FC as well as the iSCSI protocol. In live deployments the SAN storage is carefully selected, and the selection criteria for a SAN storage array are outside the scope of this document, which focuses on the initial setup of UCS. Please refer to one of the Data Center SRND/CVD documents for the SAN storage discussion.

http://www.cisco.com/go/datacenter

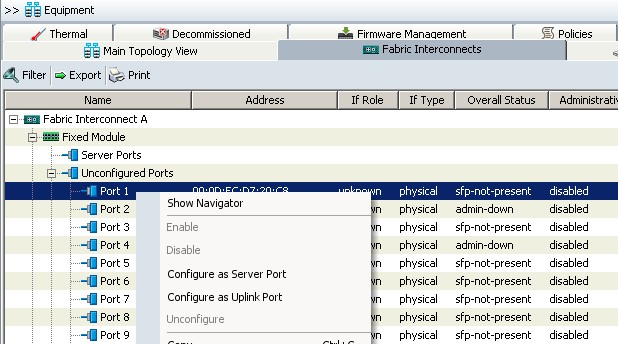

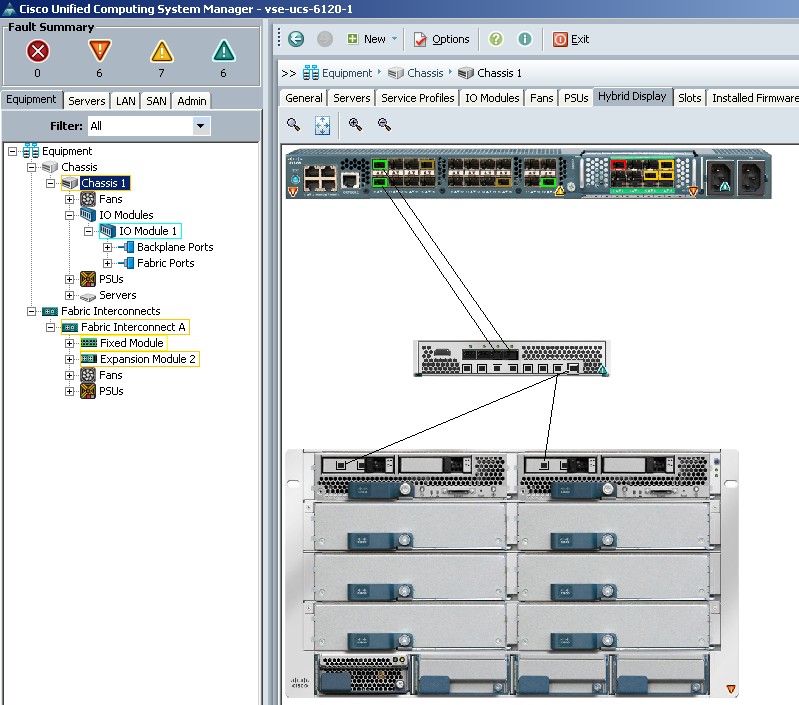

Connecting UCS-6120XP Switch to UCS-5108 Chassis

Now it is time to configure the Unified Fabric Interconnect Switch (UCS-6120) port that connects to the chassis.

Assuming that the twinax cable is already connected between the chassis and the UCS-6120, configure the port as follows by selecting Configure as Server Port.

Because two twinax cables connect two UCS-6120 ports to the same fabric extender, the second port is configured in the same way.

Note that, like many other tasks, this one can be achieved through different GUI options, so your steps may vary, but the objective is the same: to set the port as a Server Port.

When you now look at the Main Topology View window, you can see two links connected.

The following picture shows how the internal backplane ports are connected to the fabric extender, and how the fabric extender then connects to the fabric interconnect switch.

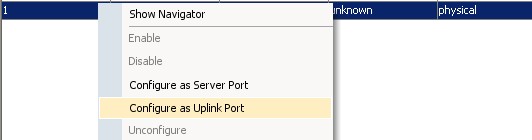

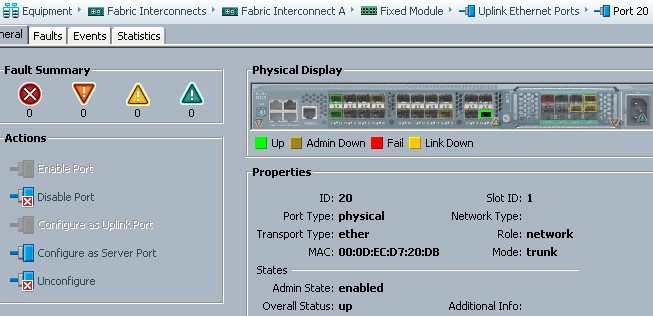

Connecting UCS-6120XP Switch to Uplink Ethernet Switch

Each UCS Fabric Interconnect provides ports that can be configured as either Server Ports or Uplink Ports. Ports are not reserved for specific use, but they must be configured using the UCS manager (preferably). You can add expansion modules to increase the number of Uplink Ports on the Fabric Interconnect. LAN Pin Groups are created to pin traffic from servers to a specific uplink Port.

UCS-6120XP ports are 10GE ports, so a regular SFP transceiver cannot be used to connect them to the uplink Ethernet port. Unlike a 10/100/1000 Mbps Ethernet port, a 10GE port cannot auto-negotiate speed and duplex, so the UCS-6120XP port must be connected to a 10GE uplink Ethernet port. A 10GE transceiver (e.g. SFP-10G-SR) is required on the UCS-6120XP switch. Make sure to order it as part of your UCS order, because the Netformix ordering tool will not give you any indication if you have missed it.

On the CAT6K switch, an X2-10GB-SR transceiver (SC interface type) can be used with a regular LC-SC fiber cable.

Alternatively, a CVR-X2-SFP10G adapter can be used in the X2 slot to convert it to an LC-type connection, so that a regular fiber cable or a twinax (SFP-H10GB-CU5M) cable can connect the UCS-6120XP to the uplink Ethernet switch.

The port configuration on the UCS-6120XP is done at the Service Profile vNIC level. VLAN trunking can be set to either YES or NO; in a typical deployment it should be set to YES, and on the CAT6K side the port should be configured as a trunk port. The following is an example of the CAT6K configuration:

    interface TenGigabitEthernet5/2
     description UCS-6120-XP Fabric Interconnect Port 20
     switchport
     switchport trunk encapsulation dot1q
     switchport mode trunk
     spanning-tree portfast edge
    !
    show interfaces tenGigabitEthernet 5/2
    TenGigabitEthernet5/2 is up, line protocol is up (connected)
      Hardware is C6k 10000Mb 802.3, address is 0023.04d7.1d5d (bia 0023.04d7.1d5d)
      Description: UCS-6120-XP Fabric Interconnect Port 20
      MTU 1500 bytes, BW 10000000 Kbit, DLY 10 usec,
      reliability 255/255, txload 1/255, rxload 1/255
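
When several uplink ports need the same trunk settings, the stanza can be generated rather than typed by hand. The sketch below is a hypothetical helper that emits only the commands already shown in the example above; it does not add or validate any other IOS options.

```python
def render_trunk_config(interface: str, description: str) -> str:
    """Render the CAT6K trunk stanza shown above for one interface."""
    lines = [
        f"interface {interface}",
        f" description {description}",
        " switchport",
        " switchport trunk encapsulation dot1q",
        " switchport mode trunk",
        " spanning-tree portfast edge",
        "!",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_trunk_config(
        "TenGigabitEthernet5/2",
        "UCS-6120-XP Fabric Interconnect Port 20",
    ))
```

Generating the text keeps the settings identical on every uplink, which matters because a mismatch between trunk ports is easy to overlook.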

On the UCS-6120XP side, the steps are very similar to configuring a server port. In the UCS Manager GUI, right-click the port that you want to configure as an uplink Ethernet port and select the corresponding option.

Once the connection with the Ethernet switch is established, the port moves under the Uplink Ethernet port group and shows an enabled/up state as follows.

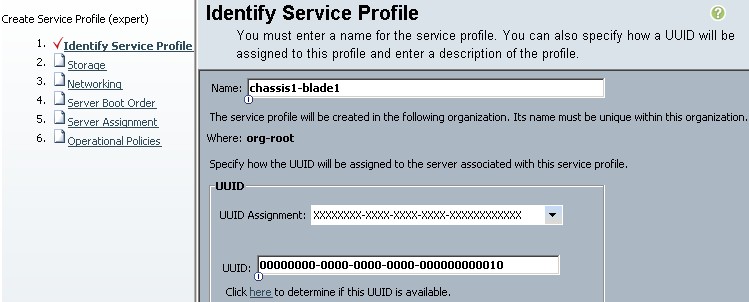

Service Profile Creation

Service Profiles are what define a blade server as a "complete server". A Service Profile contains all the information related to a server, for example the NICs it has and the MAC addresses that will be assigned to them. A Service Profile is the definition of the actual physical hardware.

In the UCS Manager, on the "Server" tab, you will notice that the root organization is already created with various default options. The information available at this point is enough to create a service profile and apply it to the blade server.

There are many possible ways of doing these steps, and this document discusses only one of them. In a multi-tenant environment, or in large organizations that need to separate resources by functional group (for example Engineering, Support, Sales), you can also define sub-organizations under the "root" organization. This document does not discuss these or other advanced options.

On the Server tab, select the "Create Service Profile (expert)" option. It is very important to select this option; otherwise you will get error messages that require other steps to be fixed. Those errors are not difficult to fix, but this approach is the fast and easy way to create a service profile and apply it to the physical blade.

Specify the unique identifier for the service profile. This name can be between 1 and 16 alphanumeric characters. You cannot use spaces or any special characters, and you cannot change this name after the object has been saved.
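
The naming rule can be checked before the name is typed into the wizard. The following sketch implements the rule exactly as worded above (1 to 16 alphanumeric characters, no spaces or special characters); the function name is hypothetical, and UCS Manager itself remains the authority on which names it accepts.

```python
import re

# 1 to 16 ASCII letters or digits, nothing else.
_PROFILE_NAME_RE = re.compile(r"[A-Za-z0-9]{1,16}")


def is_valid_profile_name(name: str) -> bool:
    """Check a service profile name against the rule described above."""
    return _PROFILE_NAME_RE.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ("ESX01", "esx 01", "", "A" * 17):
        print(repr(candidate), is_valid_profile_name(candidate))
```

Because the name cannot be changed after the object is saved, validating it up front avoids having to delete and recreate the profile.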

The UUID drop-down list enables you to specify how you want the UUID to be set. There are multiple options available.

You can use the UUID assigned to the server by the manufacturer by choosing Hardware Default. If you choose this option, the UUID remains unassigned until the service profile is associated with a server. At that point, the UUID is set to the UUID value assigned to the server by the manufacturer. If the service profile is later moved to a different server, the UUID changes to match the new server. To keep the machine stateless, we therefore chose the option to specify a UUID manually. Typically a customer would create a UUID pool, from which the UUID is then picked automatically. It is also important to make sure the UUID is available by clicking the corresponding link.
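
The pool approach can be sketched as follows. This is a conceptual model only: the class is hypothetical, and it fills the pool with random UUIDs from Python's standard `uuid` module for simplicity, which is an assumption and not how a UCS Manager UUID pool is defined.

```python
import uuid
from typing import Dict, List


class UuidPool:
    """Hands out each UUID to at most one service profile."""

    def __init__(self, count: int) -> None:
        self._free: List[str] = [str(uuid.uuid4()) for _ in range(count)]
        self._assigned: Dict[str, str] = {}

    def assign(self, profile_name: str) -> str:
        """Return the profile's UUID, allocating one on first use."""
        if profile_name in self._assigned:
            return self._assigned[profile_name]
        if not self._free:
            raise RuntimeError("UUID pool exhausted")
        self._assigned[profile_name] = self._free.pop(0)
        return self._assigned[profile_name]

    def available(self) -> int:
        return len(self._free)


if __name__ == "__main__":
    pool = UuidPool(count=4)
    first = pool.assign("esx01")
    again = pool.assign("esx01")   # same profile, same UUID, whatever the blade
    print(first == again, pool.available())
```

The UUID is keyed by profile name rather than by blade, so re-associating the profile with different hardware does not change the identity the operating system sees.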

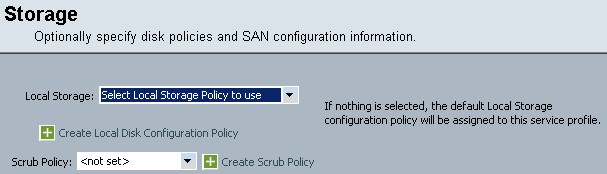

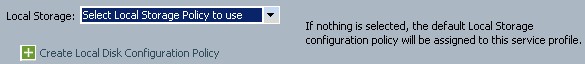

Storage

Next step is to configure the storage options. If you want to use the default local disk policy, leave this field set to Select Local Storage Policy to use. If you want to create a local disk policy that can only be accessed by this service profile, then choose Create a Specific Storage Policy and choose a mode from the Mode drop-down list.

We have selected Create Local Disk Configuration Policy link. It enables you to create a local disk configuration policy that all service profiles can access. After you create the policy, you can then choose it in the Local Storage drop-down list.

Select Simple in the following screen.

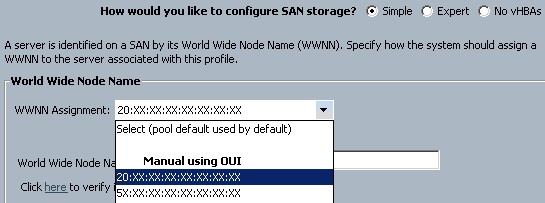

- Simple: You can create a maximum of two vHBAs for this service profile.

- Expert: You can create an unlimited number of vHBAs for this service profile.

- No vHBAs: You cannot create any vHBAs for this service profile.

If you want to:

- Use the default WWNN pool, leave this field set to Select (pool default used by default).

- Use a WWNN derived from the first vHBA associated with this profile, choose "Derived from vHBA".

- Use a specific WWNN, choose 20:XX:XX:XX:XX:XX:XX:XX or 5X:XX:XX:XX:XX:XX:XX:XX and enter the WWNN in the World Wide Node Name field.

- Use a WWNN from a pool, select the pool name from the list. Each pool name is followed by the number of available/total WWNNs in the pool.
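
A manually entered WWNN can be checked against the two formats listed above before it is submitted. The sketch below assumes that each X stands for one hexadecimal digit; the function name is hypothetical and the sample values are made up.

```python
import re

# First octet is "20" or "5X", followed by seven more colon-separated hex octets.
_WWNN_RE = re.compile(r"(?:20|5[0-9A-F])(?::[0-9A-F]{2}){7}", re.IGNORECASE)


def is_valid_wwnn(wwnn: str) -> bool:
    """Check a WWNN against the 20:XX:... and 5X:XX:... formats."""
    return _WWNN_RE.fullmatch(wwnn) is not None


if __name__ == "__main__":
    samples = (
        "20:00:00:25:B5:00:00:01",   # made-up example values
        "50:0a:09:80:00:00:00:01",
        "10:00:00:25:B5:00:00:01",
        "20:00:00:25:B5:00:00",
    )
    for sample in samples:
        print(sample, is_valid_wwnn(sample))
```

A format check does not confirm that the WWNN is unused; the availability link in UCS Manager is still needed for that.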

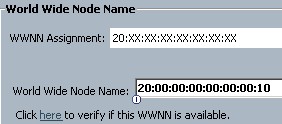

We picked the following WWNN at random, because assigning the WWNN of the physical HBA would not keep our server stateless. Make sure to verify that it is available.

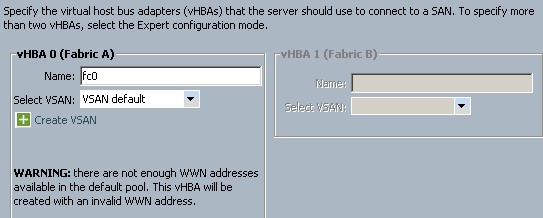

Ignore the warning in the following screen

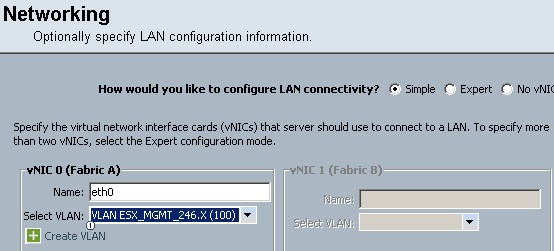

Configure the networking portion of the service profile.

It is important to note that a service profile with two vNICs assigned requires a blade in a chassis with two IOMs connected to two fabric interconnects; such a service profile would fail to associate with a blade in a chassis that has only a single IOM.

Similarly, a service profile that defines both vNICs and vHBAs (assuming the presence of the “Menlo” or “Palo” adapters) would fail to associate with a blade that has an “Oplin” adapter, because the “Oplin” adapter does not provide vHBA functionality.

The bottom line is that the administrator must make sure the policies and profiles are configured to match the hardware.
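
The two association failures described above can be expressed as a pre-check. This is an illustrative sketch: the classes and function are hypothetical, and the rules encoded are only the two stated in the text, not the full set of checks UCS Manager performs.

```python
from dataclasses import dataclass
from typing import List

# Adapter code names from the text; True means the adapter can present vHBAs.
ADAPTER_SUPPORTS_VHBA = {"Menlo": True, "Palo": True, "Oplin": False}


@dataclass
class Blade:
    adapter: str             # adapter code name, e.g. "Palo"
    chassis_iom_count: int   # IOMs installed in the blade's chassis


@dataclass
class ProfileRequirements:
    vnic_count: int
    vhba_count: int


def association_problems(profile: ProfileRequirements, blade: Blade) -> List[str]:
    """List the reasons this profile would fail to associate with this blade."""
    problems = []
    if profile.vnic_count >= 2 and blade.chassis_iom_count < 2:
        problems.append("two vNICs require a chassis with two IOMs")
    # Unknown adapters are treated as not vHBA-capable (a conservative assumption).
    if profile.vhba_count > 0 and not ADAPTER_SUPPORTS_VHBA.get(blade.adapter, False):
        problems.append(f"adapter {blade.adapter} does not provide vHBA functionality")
    return problems


if __name__ == "__main__":
    profile = ProfileRequirements(vnic_count=2, vhba_count=1)
    print(association_problems(profile, Blade("Oplin", chassis_iom_count=1)))
    print(association_problems(profile, Blade("Palo", chassis_iom_count=2)))
```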

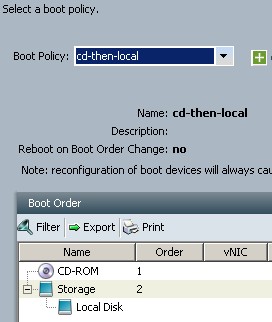

For now we have selected the virtual CD as the first boot device and the local hard drive as the second. This order is also good practice: if the local hard drive has a problem, you can always boot from the CD.

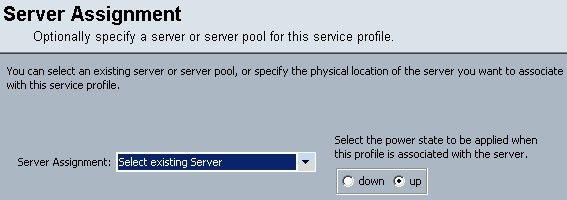

The fundamental portions of the service profile are now configured, and the profile will be assigned to one blade server. It is again worth mentioning that in a typical deployment you would use Service Profile Templates rather than creating a Service Profile for every single blade in your system.

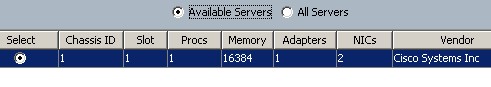

The following list shows the available servers in the system

IPMI Profile

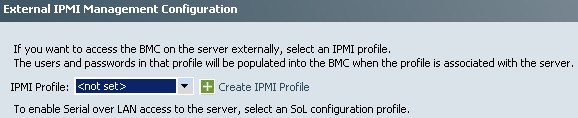

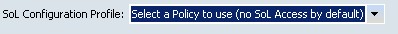

Intelligent Platform Management Interface (IPMI) technology defines how administrators monitor system hardware and sensors, control system components and retrieve logs all by using remote management and recovery over IP. IPMI runs on the BMC (Baseboard Management Controller) of the blade server and thus operates independently of the operating system (or the hypervisor in case of virtualization). Since IPMI operates independent of the operating system, it provides administrators with the ability to monitor, manage, diagnose and recover systems, even if the operating system has hung or the server is powered down.

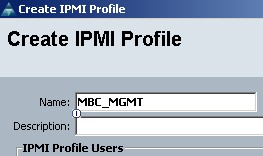

In order to provide direct access to the BMC to make IPMI services available, IPMI profiles are created and assigned to the blades. An IPMI profile contains a username, password and a role for that username.

Profile name is defined in the following screen

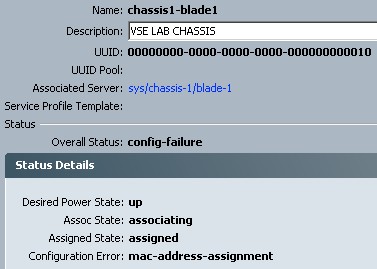

In the following screen you will notice a configuration error saying "mac-address-assignment".

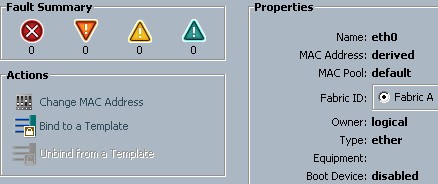

On the blade server properties page you can click on "Change MAC Address" option to change its MAC.

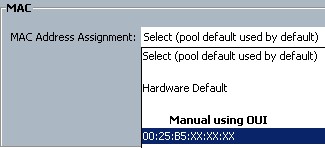

You may assign the MAC from the MAC pool, or you may elect to assign it manually. Here it is assigned manually.

After completing all these steps, the blade server is configured and ready to be used. The next section of this deployment guide discusses how to install VMware ESX Server (hypervisor) on the UCS blade server.

Next Steps:

VMWare ESXi 4.0 Installation on UCS Blade Server with UCS Manager KVM

Understanding and Deploying UCS B-Series LAN Networking

Understanding and Deploying UCS B-Series Storage Area Networking


Hi,

Can you confirm that this document is relevant for Cisco UCS Manager version 1.4.1?

Regards,

Sanjeev


That link about mezzanine cards comparison is invalid :(


Tks. This is what i needed.


this is the link for the mezzanine card comparison :

http://www.cisco.com/en/US/products/ps10277/prod_models_comparison.html


Is Cisco supporting UC with other 3rd party VMs on the same blade?

Regards
