ACI Multi-Site deployment with initial configuration and verification


09-27-2018 06:48 PM - edited 03-01-2019 06:08 AM

- Introduction

- Prerequisites

- Requirements

- Components Used

- Configure:

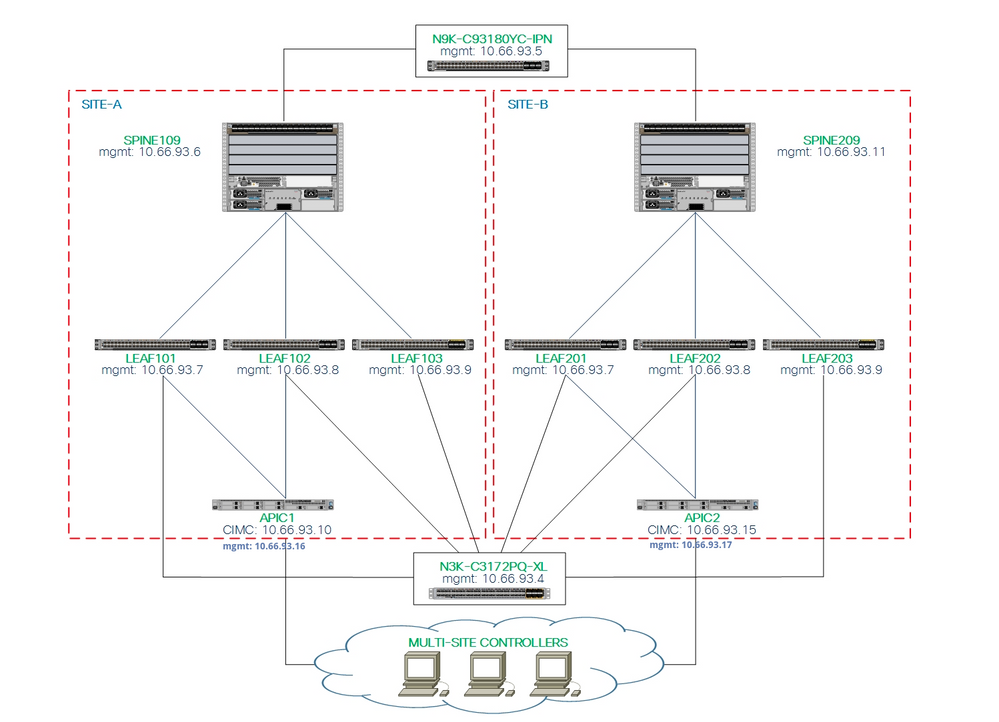

- Logical network diagram

- Configurations:

- IPN Switch configuration

- Required configuration from APIC

- Multi-Site Controller Configuration

- Verify

- Step 1: Verify infra configuration from APIC GUI on each APIC cluster

- Step 2: Verify OSPF/BGP session from Spine CLI on each APIC cluster

- Reference

Introduction

This document describes the steps to set up and configure an ACI Multi-Site fabric from scratch.

The ACI Multi-Site feature, introduced in version 3.0, allows you to interconnect separate Cisco ACI Application Policy Infrastructure Controller (APIC) cluster domains (fabrics), with each site representing a different availability zone. This helps ensure multi-tenant Layer 2 and Layer 3 network connectivity across sites, and it also extends the policy domain end-to-end across fabrics. You can create policies in the Multi-Site GUI and push them to all integrated sites or to selected sites. Alternatively, you can import tenants and their policies from a single site and deploy them on other sites.

Contributed by Linda Wang, Cisco TAC Engineer

Prerequisites

Requirements

1. Set up the Multi-Site Controller (MSC) by following the Cisco ACI Multi-Site Installation Guide.

2. The ACI fabrics have been fully discovered in two or more sites.

3. Make sure the APIC clusters deployed in separate sites have Out of Band (OOB) management connectivity to the MSC nodes.

Components Used

The hardware and software used in this example configuration are listed in the tables below.

| Site A | |
|---|---|
| Hardware Device | Logical Name |
| N9K-C9504 w/ N9K-X9732C-EX | spine109 |
| N9K-C93180YC-EX | leaf101 |
| N9K-C93180YC-EX | leaf102 |
| N9K-C9372PX-E | leaf103 |
| APIC-SERVER-M2 | apic1 |

| Site B | |
|---|---|
| Hardware Device | Logical Name |
| N9K-C9504 w/ N9K-X9732C-EX | spine209 |
| N9K-C93180YC-EX | leaf201 |
| N9K-C93180YC-EX | leaf202 |
| N9K-C9372PX-E | leaf203 |
| APIC-SERVER-M2 | apic2 |

IP Network (IPN): N9K-C93180YC-EX

| Component | Version |
|---|---|
| APIC | Version 3.1(2m) |
| MSC | Version 1.2(2b) |
| IPN | NXOS: version 7.0(3)I4(8a) |

Note: Cross-site namespace normalization is performed by the connecting spine switches. This requires second-generation or newer Cisco Nexus 9000 Series switches with "EX" or "FX" at the end of the product name. Alternatively, the Nexus 9364C is supported in ACI Multi-Site release 1.1(x) and later.

For more details on hardware requirements and compatibility information, refer to the ACI Multi-Site Hardware Requirements Guide.

Configure:

Logical network diagram

Configurations:

This document focuses mainly on the ACI and MSC side of the Multi-Site deployment; the IPN switch configuration is not fully covered. However, a few important configuration items from the IPN switch are listed below for reference.

IPN Switch configuration

The following configuration is used on the IPN device connected to the ACI spines.

    vrf context intersite
      description VRF for Multi-Site lab

    feature ospf

    router ospf intersite
      vrf intersite

Towards Spine109 in Site-A:

    interface Ethernet1/49
      speed 100000
      mtu 9216
      no negotiate auto
      no shutdown

    interface Ethernet1/49.4
      mtu 9150
      encapsulation dot1q 4
      vrf member intersite
      ip address 172.16.1.34/27
      ip ospf network point-to-point
      ip router ospf intersite area 0.0.0.1
      no shutdown

Towards Spine209 in Site-B:

    interface Ethernet1/50
      speed 100000
      mtu 9216
      no negotiate auto
      no shutdown

    interface Ethernet1/50.4
      mtu 9150
      encapsulation dot1q 4
      vrf member intersite
      ip address 172.16.2.34/27
      ip ospf network point-to-point
      ip router ospf intersite area 0.0.0.1
      no shutdown

In this example, we are using the default Control Plane MTU size (9000 bytes) on the Spine nodes.
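As a rough sanity check on the MTU values above, the sketch below compares an IPN sub-interface MTU against the two kinds of traffic it has to carry: control-plane packets from the spines and VXLAN-encapsulated data-plane traffic. The function name `isn_mtu_ok`, the 50-byte VXLAN overhead budget, and the 9000-byte endpoint MTU default are assumptions chosen for illustration; they are not taken from this article's lab.

```python
# Commonly budgeted overhead for VXLAN encapsulation (assumption for this sketch).
VXLAN_OVERHEAD_BYTES = 50


def isn_mtu_ok(isn_mtu, control_plane_mtu=9000, endpoint_mtu=9000):
    """Return True if the IPN/ISN interface MTU can carry both traffic types.

    control_plane_mtu: MTU used by the spines for control-plane packets
                       (9000 bytes is the default mentioned in the article).
    endpoint_mtu:      hypothetical largest MTU used by endpoints in the fabric.
    """
    needed = max(control_plane_mtu, endpoint_mtu + VXLAN_OVERHEAD_BYTES)
    return isn_mtu >= needed


# 9150 is the sub-interface MTU configured on the IPN switch in this example.
print(isn_mtu_ok(9150))
# A standard 1500-byte path would not be sufficient with these defaults.
print(isn_mtu_ok(1500))
```

If the inter-site path cannot carry the default control-plane MTU, the values passed to the function can be lowered to model a tuned-down fabric setting.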

Required configuration from APIC

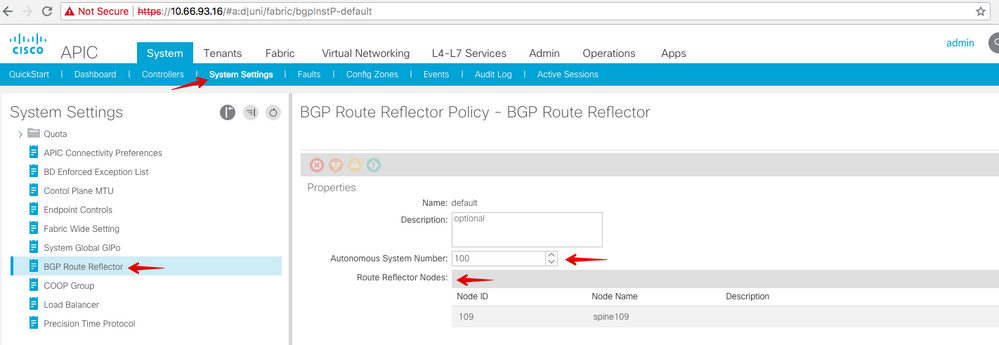

Step 1: Configure iBGP AS and Route Reflector for each site from APIC GUI

1. Log in to the site's APIC and configure the iBGP AS and Route Reflector for each site's APIC cluster (APIC GUI --> System --> System Settings --> BGP Route Reflector). This is the default BGP Route Reflector Policy, which will be used for the fabric pod profile.

2. Configure the Fabric Pod Profile for each site's APIC cluster. Go to APIC GUI --> Fabric --> Fabric Policies --> Pod Policies --> Policy Groups, click the default Pod policy group, and select the default "BGP Route Reflector Policy" from the drop-down list, as shown below.

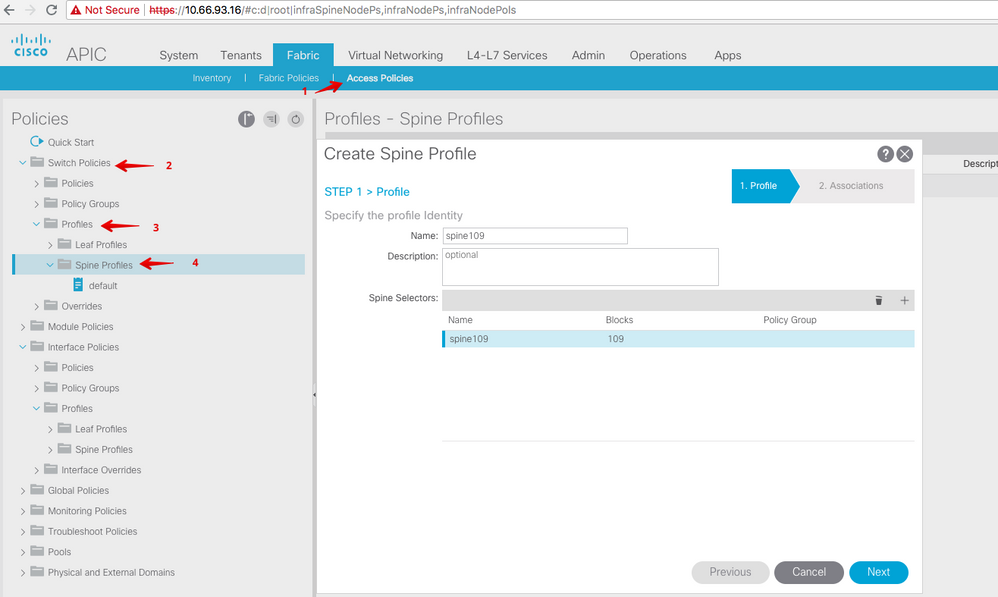

Step 2: Configure Spine Access Policies, including External Routed Domains, for each site from APIC GUI

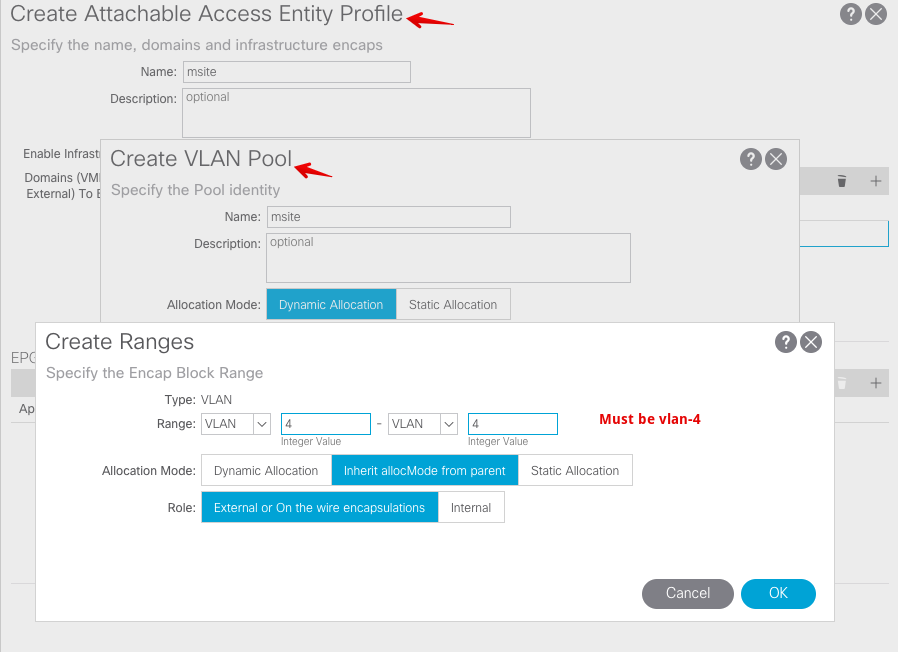

Configure the spine access policies for the spine uplink to the IPN switch with an AAEP (Attachable Access Entity Profile) and an L3 routed domain (APIC GUI --> Fabric --> Access Policies). The detailed configuration steps are omitted here; screenshots are provided for reference.

1) Create the switch profile.

2) Create the AAEP (Attachable Access Entity Profile), Layer 3 routed domain and VLAN pool under access policies, as shown below.

3) Create the spine access port policy group and associate it with the Attachable Access Entity Profile "msite".

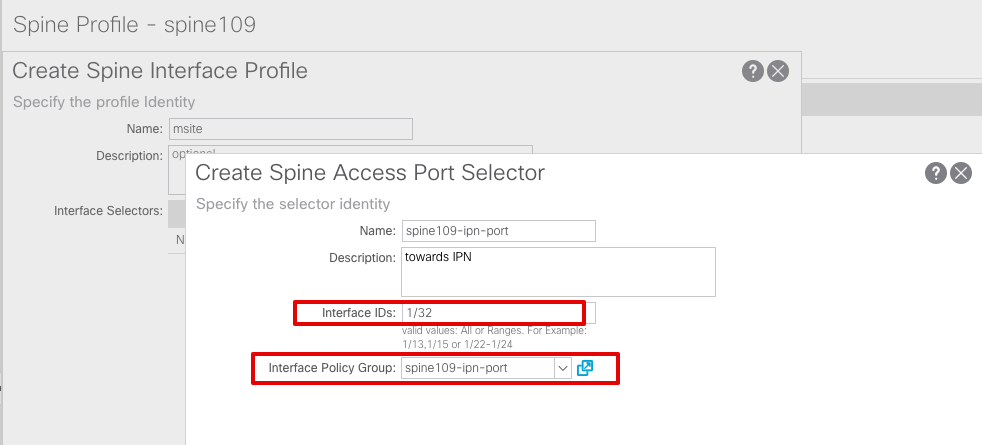

4) Create the spine interface profile and associate the IPN-facing spine access port with the interface policy group created in the previous step.

Note: For now, there is no need to configure the OSPF L3Out under the infra tenant from the APIC GUI. We will configure this part via MSC and push the configuration to each site directly later on.

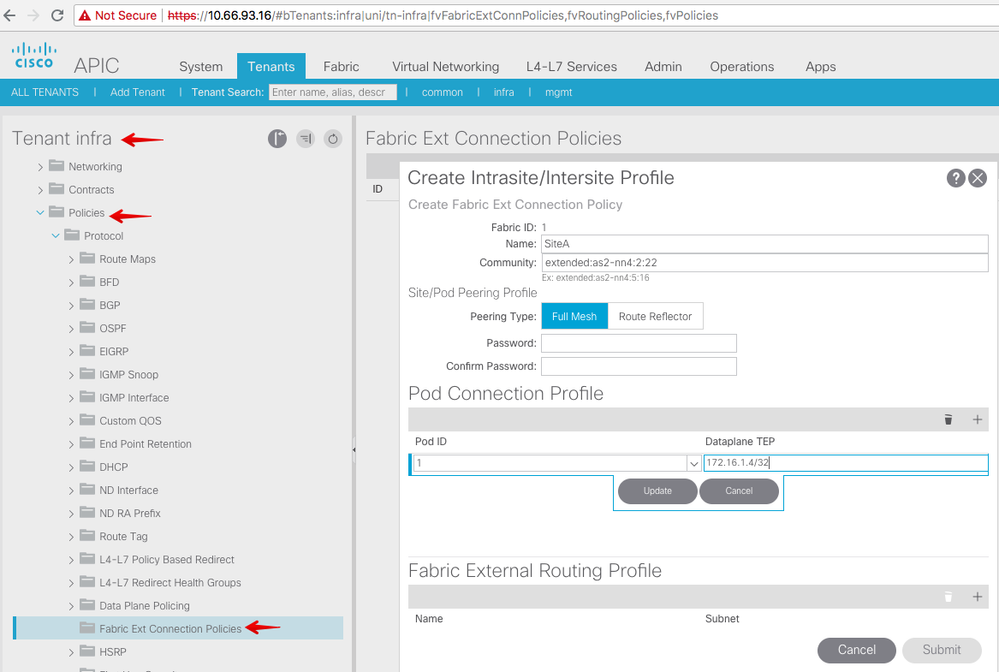

Step 3: Configure the External Dataplane TEP per site from APIC GUI

Go to APIC GUI --> Tenants --> infra --> Policies --> Protocol --> Fabric Ext Connection Policies, then create an Intrasite/Intersite Profile, as shown in the screenshot below.

Repeat the above steps to complete the APIC-side configuration for the Site B ACI fabric.

Multi-Site Controller Configuration

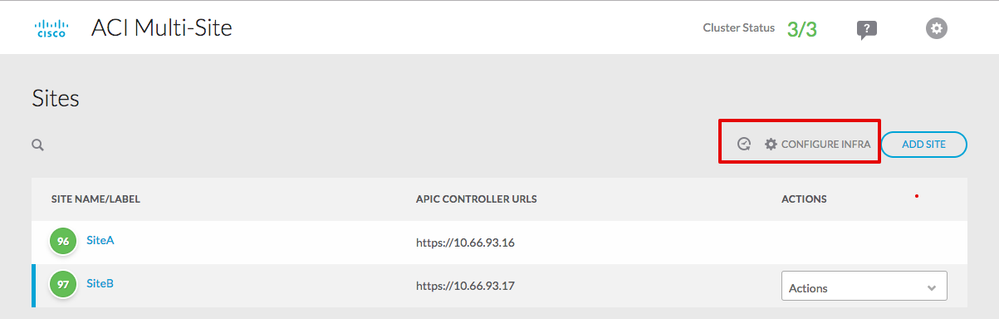

Step 1: Add each site one by one in MSC.

- Connect and log in to the MSC GUI.

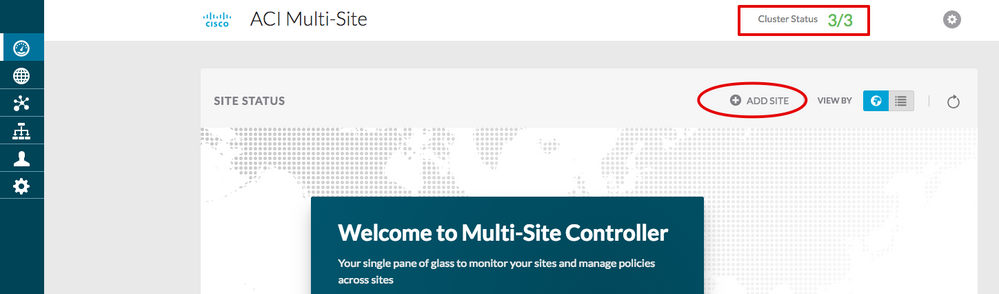

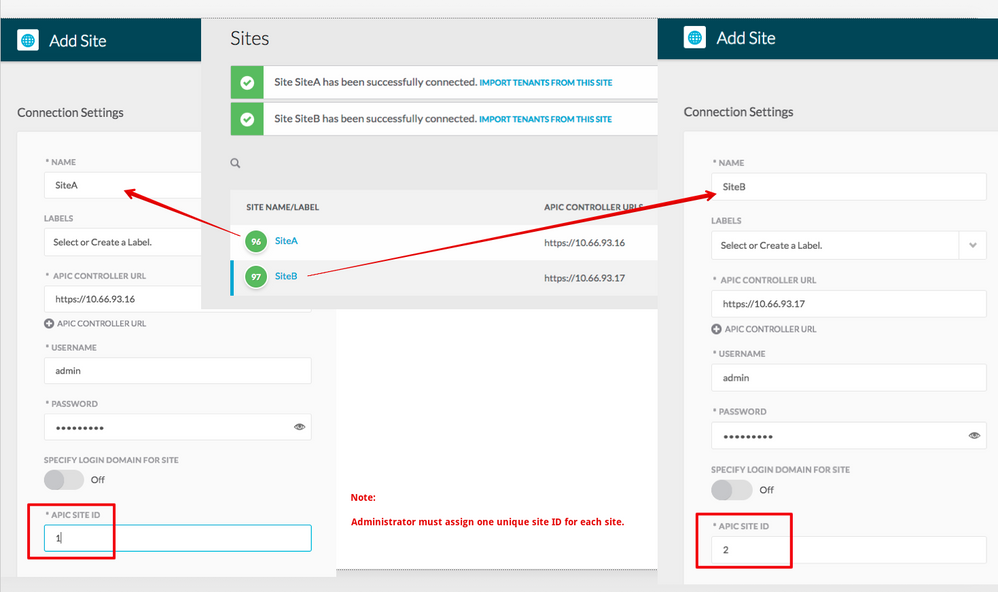

- Click the "ADD SITE" menu to register the sites one by one in MSC. The cluster status is also shown at the top right, as highlighted.

- Use the IP address of one of the site's APICs and assign a unique site ID (1-127) to each site.
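Before registering sites, it can help to check the planned site IDs against the two constraints stated above (unique, and within 1-127). The following is a minimal sketch; `validate_site_ids` and the site names are hypothetical and not part of any MSC tooling.

```python
def validate_site_ids(sites):
    """Check a planned {site_name: site_id} mapping.

    Returns a list of human-readable problems; an empty list means the plan
    satisfies the range (1-127) and uniqueness constraints.
    """
    problems = []
    seen = {}  # site_id -> first site name that claimed it
    for name, site_id in sites.items():
        if not 1 <= site_id <= 127:
            problems.append(f"{name}: site ID {site_id} is outside 1-127")
        if site_id in seen:
            problems.append(
                f"{name}: site ID {site_id} is already used by {seen[site_id]}"
            )
        else:
            seen[site_id] = name
    return problems


# Hypothetical plan for the two sites in this example.
print(validate_site_ids({"SiteA": 1, "SiteB": 2}))
```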

Step 2: Configure Infra policies per Site in MSC.

- Log in to the MSC GUI, select "Sites" from the left-hand panel, then click the "CONFIGURE INFRA" menu as shown in the screenshot below.

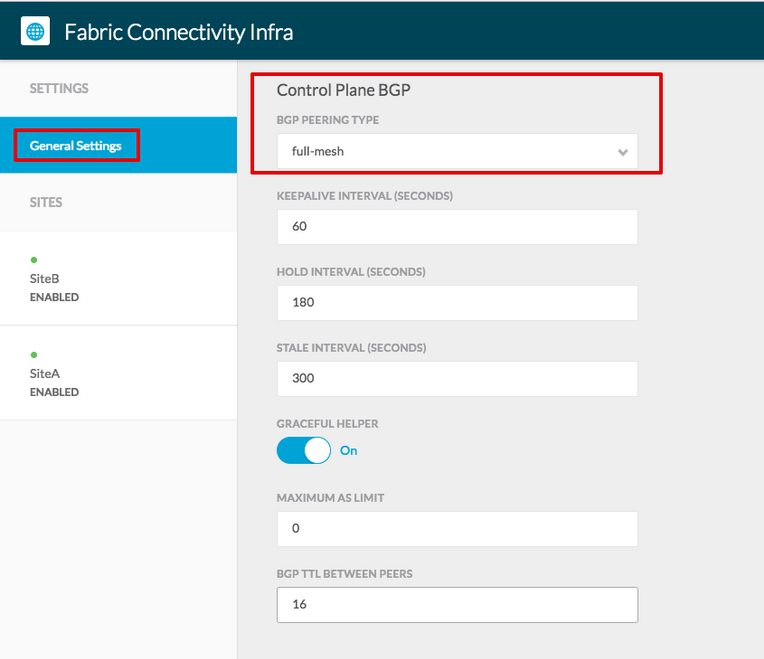

Configure the Fabric Infra General Settings: BGP peer type (full mesh - EBGP / route reflector - IBGP).

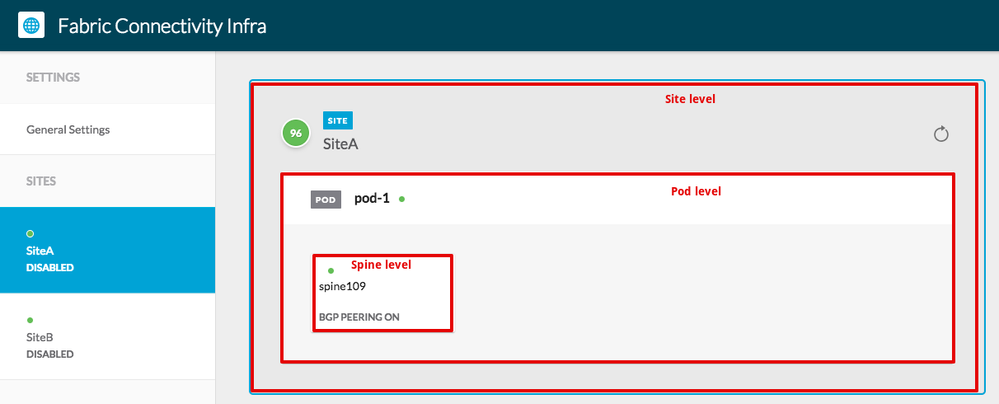

Once that is done, select one of the sites from the left panel. The site information appears in the middle, with three configuration levels. Clicking the Site, the Pod, or the Spine area exposes different settings in the configuration panel (right panel).

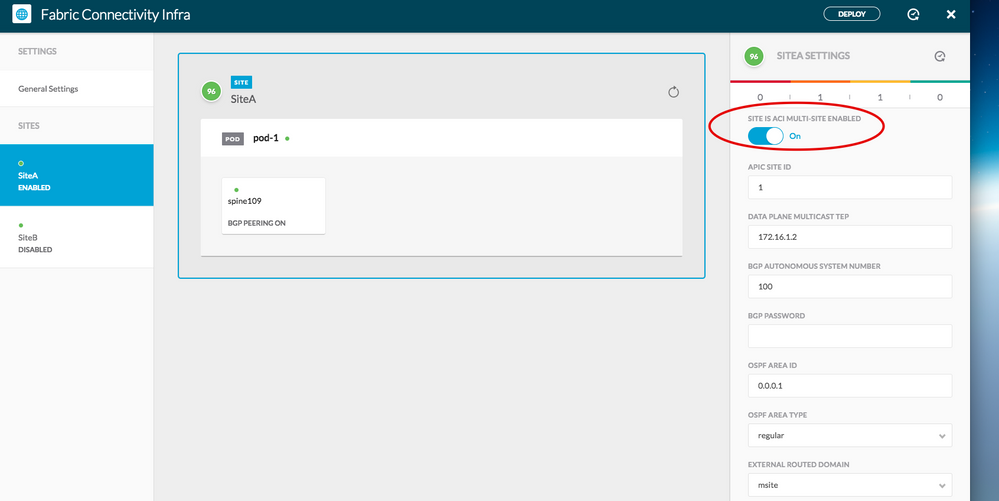

- Click a site in the left panel to show the site-level configuration in the right panel: Multi-Site Enable (On), Dataplane Multicast TEP, BGP ASN, BGP Community (e.g. extended:as2-nn4:2:22), OSPF Area ID, OSPF Area Type (stub prevents TEP pool advertisement), External Routed Domain, etc. Here we can configure or modify the following:

- Dataplane Multicast TEP (one loopback per site), used for head-end replication (HREP)

- BGP AS (matching the AS configured for the site in APIC)

- OSPF area type and area ID

- External Routed Domain

In most cases, the attribute values will already have been retrieved automatically from APIC by MSC.

Here we need to specify the Data Plane Unicast TEP, as shown below.

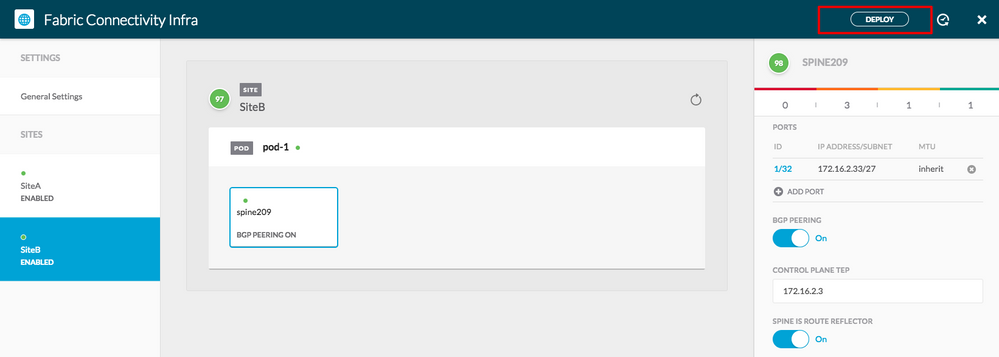

6. Then click the Spine area to go to the spine-specific infra settings. Here we can configure the following (as shown in the screenshot below):

For each interface from the spine towards the IPN switch, set the IP address and mask,

BGP peering (On),

Control Plane TEP (Router ID),

Spine is Route Reflector (On)

7. Repeat the steps above for the other sites to complete the infra configuration in MSC.

8. Click the "DEPLOY" button at the top. This pushes the infra configuration to the APICs in both sites.

Verify

Step 1: Verify infra configuration from APIC GUI on each APIC cluster

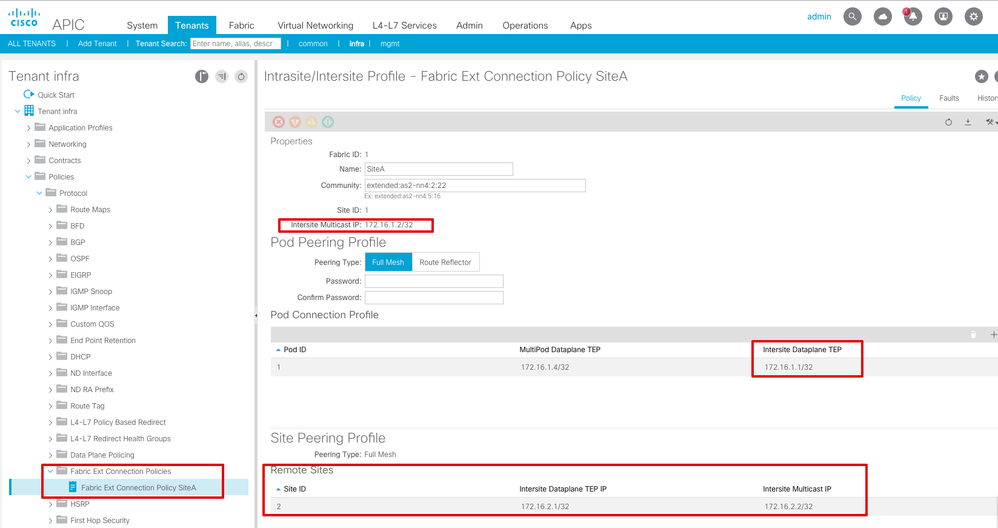

- Verify that the Intrasite/Intersite Profile is configured under the infra tenant on each APIC cluster.

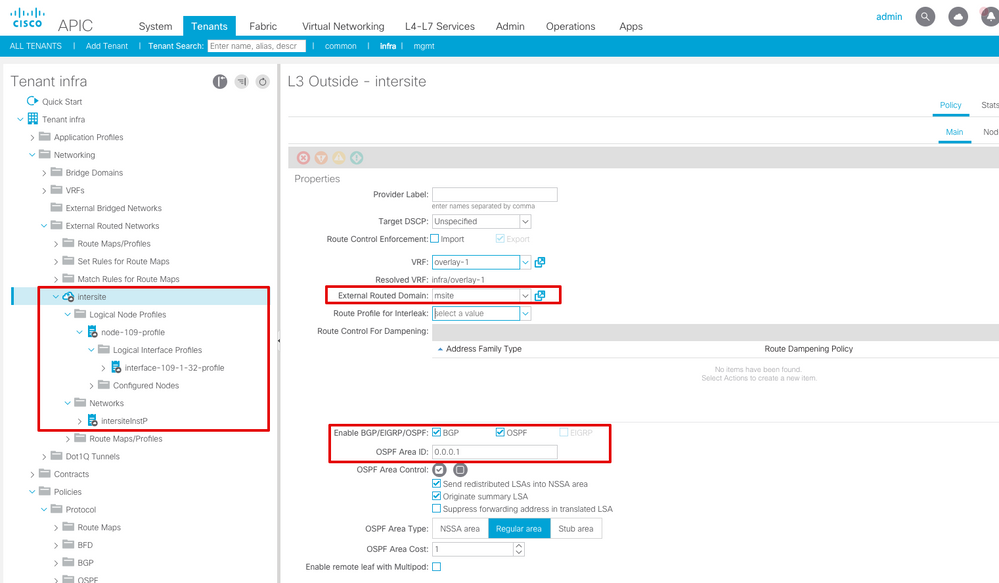

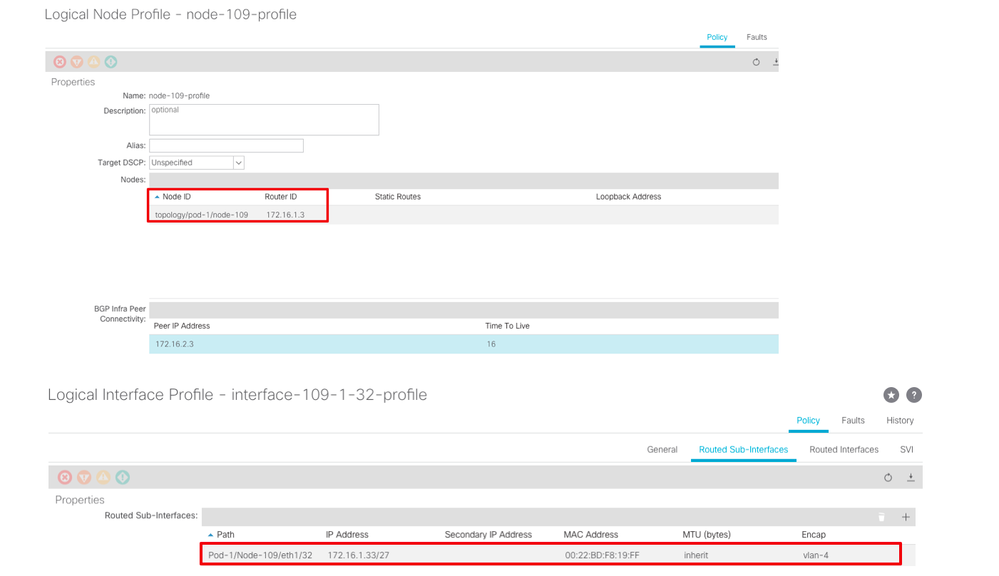

- Verify that the infra L3Out (intersite), OSPF, and BGP are configured on each APIC cluster (APIC GUI).

Log in to the site's APIC and verify the Intrasite/Intersite Profile under Tenant infra > Policies > Protocol > Fabric Ext Connection Policies. The Intersite profile will look like the following when the site is fully configured and managed by MSC.

- Go to APIC GUI --> Tenant infra --> Networking --> External Routed Networks. The "intersite" L3Out profile should have been created automatically under tenant infra in both sites.

Also verify that the L3Out logical node and interface profile configuration is correctly set in VLAN 4.

Step 2: Verify OSPF/BGP session from Spine CLI on each APIC cluster

- Verify that OSPF is up on the spine and receives routes from the IPN (Spine CLI)

- Verify that the BGP session to the remote site is up (Spine CLI)

Log in to the spine CLI and verify that BGP L2VPN EVPN and OSPF are up on each spine, and that the BGP node role is msite-speaker.

    spine109# show ip ospf neighbors vrf overlay-1
     OSPF Process ID default VRF overlay-1
     Total number of neighbors: 1
     Neighbor ID     Pri State            Up Time  Address         Interface
     172.16.1.34       1 FULL/ -          04:13:07 172.16.1.34     Eth1/32.32
    spine109#

    spine109# show bgp l2vpn evpn summary vrf overlay-1
    BGP summary information for VRF overlay-1, address family L2VPN EVPN
    BGP router identifier 172.16.1.3, local AS number 100
    BGP table version is 235, L2VPN EVPN config peers 1, capable peers 1
    0 network entries and 0 paths using 0 bytes of memory
    BGP attribute entries [0/0], BGP AS path entries [0/0]
    BGP community entries [0/0], BGP clusterlist entries [0/0]

    Neighbor        V    AS MsgRcvd MsgSent   TblVer  InQ OutQ Up/Down  State/PfxRcd
    172.16.2.3      4   200     259     259      235    0    0 04:15:39 0
    spine109#

    spine109# vsh -c 'show bgp internal node-role'
    Node role : : MSITE_SPEAKER

    spine209# show ip ospf neighbors vrf overlay-1
     OSPF Process ID default VRF overlay-1
     Total number of neighbors: 1
     Neighbor ID     Pri State            Up Time  Address         Interface
     172.16.1.34       1 FULL/ -          04:20:36 172.16.2.34     Eth1/32.32
    spine209#

    spine209# show bgp l2vpn evpn summary vrf overlay-1
    BGP summary information for VRF overlay-1, address family L2VPN EVPN
    BGP router identifier 172.16.2.3, local AS number 200
    BGP table version is 270, L2VPN EVPN config peers 1, capable peers 1
    0 network entries and 0 paths using 0 bytes of memory
    BGP attribute entries [0/0], BGP AS path entries [0/0]
    BGP community entries [0/0], BGP clusterlist entries [0/0]

    Neighbor        V    AS MsgRcvd MsgSent   TblVer  InQ OutQ Up/Down  State/PfxRcd
    172.16.1.3      4   100     264     264      270    0    0 04:20:40 0
    spine209#

    spine209# vsh -c 'show bgp internal node-role'
    Node role : : MSITE_SPEAKER
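When these checks have to be repeated across several spines, the two pass/fail conditions (OSPF neighbor in FULL state, BGP EVPN peer established) can be pulled out of captured CLI text. The sketch below is an illustration only: the helper names are hypothetical, the parsing assumes the column layout shown in the outputs above, and collecting the text from the spine (for example over SSH) is left out.

```python
import re

IPV4 = r"\d+\.\d+\.\d+\.\d+"


def ospf_full_neighbors(output):
    """Return the neighbor IDs whose OSPF state starts with FULL."""
    full = []
    for line in output.splitlines():
        # Neighbor rows start with a router ID, then priority, then state.
        m = re.match(rf"\s*({IPV4})\s+\d+\s+(\S+)", line)
        if m and m.group(2).startswith("FULL"):
            full.append(m.group(1))
    return full


def bgp_established_peers(output):
    """Return {neighbor: prefixes_received} for established sessions."""
    peers = {}
    for line in output.splitlines():
        fields = line.split()
        # Neighbor rows have 10 columns; the last one is a prefix count when
        # the session is established, or a state name (e.g. Idle) otherwise.
        if (len(fields) == 10 and re.fullmatch(IPV4, fields[0])
                and fields[9].isdigit()):
            peers[fields[0]] = int(fields[9])
    return peers


# Sample rows copied from the spine109 output in this article.
OSPF_SAMPLE = """\
 Neighbor ID     Pri State            Up Time  Address         Interface
 172.16.1.34       1 FULL/ -          04:13:07 172.16.1.34     Eth1/32.32
"""

BGP_SAMPLE = """\
Neighbor        V    AS MsgRcvd MsgSent   TblVer  InQ OutQ Up/Down  State/PfxRcd
172.16.2.3      4   200     259     259      235    0    0 04:15:39 0
"""

print(ospf_full_neighbors(OSPF_SAMPLE))
print(bgp_established_peers(BGP_SAMPLE))
```

A peer that appears in the BGP result with a prefix count of 0 is still an established session; a peer missing from the result is the case to investigate.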

Log in to the spine CLI to check and verify the overlay-1 interfaces.

ETEP (Multipod Dataplane TEP)

The dataplane tunnel endpoint address used to route traffic between multiple Pods within a single ACI fabric.

DCI-UCAST (Intersite Dataplane unicast ETEP, anycast per site)

This anycast dataplane ETEP address is unique per site. It is assigned to all the spines connected to the IPN/ISN device and is used to receive L2/L3 unicast traffic.

DCI-MCAST-HREP (Intersite Dataplane multicast TEP)

This anycast ETEP address is assigned to all the spines connected to the IPN/ISN device and is used to receive L2 BUM (Broadcast, Unknown unicast and Multicast) traffic.

MSCP-ETEP (Multi-Site Control-plane ETEP)

This is the control-plane ETEP address, which is also used as the BGP Router ID on each spine for MP-BGP EVPN.

    spine109# show ip int vrf overlay-1
    <snip>
    lo17, Interface status: protocol-up/link-up/admin-up, iod: 83, mode: etep
      IP address: 172.16.1.4, IP subnet: 172.16.1.4/32
      IP broadcast address: 255.255.255.255
      IP primary address route-preference: 1, tag: 0
    lo18, Interface status: protocol-up/link-up/admin-up, iod: 84, mode: dci-ucast
      IP address: 172.16.1.1, IP subnet: 172.16.1.1/32
      IP broadcast address: 255.255.255.255
      IP primary address route-preference: 1, tag: 0
    lo19, Interface status: protocol-up/link-up/admin-up, iod: 85, mode: dci-mcast-hrep
      IP address: 172.16.1.2, IP subnet: 172.16.1.2/32
      IP broadcast address: 255.255.255.255
      IP primary address route-preference: 1, tag: 0
    lo20, Interface status: protocol-up/link-up/admin-up, iod: 87, mode: mscp-etep
      IP address: 172.16.1.3, IP subnet: 172.16.1.3/32
      IP broadcast address: 255.255.255.255
      IP primary address route-preference: 1, tag: 0

    spine209# show ip int vrf overlay-1
    <snip>
    lo13, Interface status: protocol-up/link-up/admin-up, iod: 83, mode: etep
      IP address: 172.16.2.4, IP subnet: 172.16.2.4/32
      IP broadcast address: 255.255.255.255
      IP primary address route-preference: 1, tag: 0
    lo14, Interface status: protocol-up/link-up/admin-up, iod: 84, mode: dci-ucast
      IP address: 172.16.2.1, IP subnet: 172.16.2.1/32
      IP broadcast address: 255.255.255.255
      IP primary address route-preference: 1, tag: 0
    lo15, Interface status: protocol-up/link-up/admin-up, iod: 85, mode: dci-mcast-hrep
      IP address: 172.16.2.2, IP subnet: 172.16.2.2/32
      IP broadcast address: 255.255.255.255
      IP primary address route-preference: 1, tag: 0
    lo16, Interface status: protocol-up/link-up/admin-up, iod: 87, mode: mscp-etep
      IP address: 172.16.2.3, IP subnet: 172.16.2.3/32
      IP broadcast address: 255.255.255.255
      IP primary address route-preference: 1, tag: 0
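The loopback modes described above can also be checked programmatically from captured output. The sketch below extracts each loopback's mode and address and reports which of the Multi-Site modes are missing. The helper names are hypothetical, the regular expression assumes the line layout shown in this article, and "etep" is deliberately not required because the article ties it to Multi-Pod.

```python
import re

# Loopback modes this article associates with Multi-Site on the spines.
REQUIRED_MODES = {"dci-ucast", "dci-mcast-hrep", "mscp-etep"}

LOOPBACK_RE = re.compile(
    r"(lo\d+), Interface status: \S+ iod: \d+, mode: (\S+)\s+"
    r"IP address: (\d+\.\d+\.\d+\.\d+)"
)


def overlay_loopbacks(output):
    """Return {mode: (interface, ip_address)} parsed from the CLI text."""
    return {mode: (name, ip) for name, mode, ip in LOOPBACK_RE.findall(output)}


def missing_modes(output):
    """Return the required Multi-Site loopback modes absent from the output."""
    return REQUIRED_MODES - set(overlay_loopbacks(output))


# Sample lines copied from the spine109 output in this article.
SAMPLE = """\
lo17, Interface status: protocol-up/link-up/admin-up, iod: 83, mode: etep
  IP address: 172.16.1.4, IP subnet: 172.16.1.4/32
lo18, Interface status: protocol-up/link-up/admin-up, iod: 84, mode: dci-ucast
  IP address: 172.16.1.1, IP subnet: 172.16.1.1/32
lo19, Interface status: protocol-up/link-up/admin-up, iod: 85, mode: dci-mcast-hrep
  IP address: 172.16.1.2, IP subnet: 172.16.1.2/32
lo20, Interface status: protocol-up/link-up/admin-up, iod: 87, mode: mscp-etep
  IP address: 172.16.1.3, IP subnet: 172.16.1.3/32
"""

print(overlay_loopbacks(SAMPLE))
print(missing_modes(SAMPLE))
```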

Finally, make sure no faults are seen in MSC (Multi-Site Controller).

Reference


Very helpful document for Multisite!

Keep them coming :)


Thank you for putting this together, it was really helpful!


Amazing!!

I was looking for more information about the requirements of the Intersite network:

- What are the supported interconnection technologies used to connect the Multi-Site fabrics? The white paper shows dark fiber from 10G to 100GB ... is it possible to use MPLS with a bandwidth of 500 MB?

- Which kind of L3 switch could be used as an IPN switch? Is a Layer 3 switch enough, or does it need an ASR?

Thank you for the post and your help.


This is a great document, but it needs to be updated now for version 4.2, please add remote L3out configuration and Verification Scenario as well.


Hi @Siemmina

Thanks for your message.

The answers to your questions are documented in the white paper, as quoted below.

Q1: What are the supported interconnection technologies used to connect the Multi-Site fabrics, in terms of bandwidth?

"The recommended connection bandwidth between nodes in a Cisco ACI Multi-Site Orchestrator cluster is from 300 Mbps to 1 Gbps. These numbers are based on internal stress testing while adding very large configurations and deleting them at high frequency. In these tests, bursts of traffic of up to 250 Mbps were observed during high-scale configurations, and bursts of up to 500 Mbps were observed during deletion of a configuration consisting of 10,000 EPGs (that is, beyond the supported scale of 3000 EPGs for Cisco ACI Multi-Site deployments in Cisco ACI Release 3.2(1)). "

Q2: Which kind of L3 switch could be used as an IPN switch? Is a Layer 3 switch enough, or does it need an ASR?

A2: The Inter-Site Network (ISN) is instead a simpler routed infrastructure required to interconnect the different fabrics (only requiring support for increased MTU). So it doesn't need to be an ASR.

Hope it helps,

Cheers,

Linda


Thanks for your comments, @nkhawaja1

This document explains the steps to set up and configure an ACI Multi-Site fabric from scratch, and it also applies to later releases of MSO. Remote L3Out configuration and verification scenarios can be covered in a separate document.

Cheers,

Linda


Hi Linda,

Great and very helpful document especially the verification portion.

Thanks for help

Sajid Arain


Hi Linda.

Thank you very much for sharing this document. It is really very helpful.

I have a question about the WAN connection. In a scenario where the ISN is connected to a CPE router, must the interface be associated with a VRF, and must the SP carry the VRF end to end toward the other site?

Is there any problem with configuring it outside a VRF?

I ask because I have a customer that doesn't have MPLS on the WAN link.

Best Regards,

Rod.


Helpful Document


Hi @lindawa

I would like to know the Minimum Bandwidth Requirement that we need to plan for the Site to Site Connectivity - in case of Cisco Multisite - Active / Active DC. Considering VLAN STRETCHING between the site. Please consider the Site to site Fabric connectivity requirement as well as production data flow ( infra traffic ).

Thanks & regards
