11-26-2009 08:17 AM - edited 03-01-2019 04:27 PM

C3750 Switch Family Egress QOS Explained

Understanding the Egress QOS Logic on the C3750/C3560/C3750E/C3560E platforms.

(Alternate Title: Egress QOS for dummies)

Table of Contents

C3750 Switch Family Egress QOS Explained

1 Optimizing Egress Traffic

2 How the Egress Logic Works

2.1 Basics on the Egress Logic of the Switch

2.2 The Queue-set is Your Friend

2.2.1 Elements of the Queue-set Explained

2.2.2 Example of Queue-set configuration

2.2.3 Queue-set configuration Guidelines

2.2.4 More Queue-set Information

2.3 How the transmit Queue is determined

2.4 Will the packet be dropped by WTD?

2.4.1 Threshold Modification Strategy

2.5 Where are the packets going

2.6 Order of Egress: Shaped, Shared or Both

2.6.1 What does the Shaping value really do

2.6.2 Shared Weights Explained

2.6.3 Shaped and Shared Combination

2.6.4 What does the Expedite Queue do?

2.6.5 Limiting Bandwidth on an Interface

2.7 Detailed Description of QOS Label Table

3 Solutions and Strategies

3.1 Quick and Easy Egress Buffer Optimizing Solution

3.1.1 Assumptions for this Solution

3.2 Optimize Single COS Traffic in non-stack

3.2.2 Execution and Results for Single COS traffic

3.2.3 Conclusion and Additional Info

3.2.4 How to configure for traffic not using DSCP 0

3.3 Strategy for Using 2 WTD Thresholds

3.4 Simple Desktop Scenario

3.4.1 Building a Configuration from traffic counts

3.4.2 Egress Queue Strategy, What’s the plan

3.4.3 Making the configuration changes

4 The Entire QOS Label Table

1 Optimizing Egress Traffic

“Optimizing traffic” for the purposes of this paper refers to reducing packet drop due to egress congestion. The focus of this document is explaining how the egress QOS logic works and how to configure the switch to make the egress QOS work for you.

1.1 Why have this paper

The Egress QOS logic on the C3750 family of switches is very flexible, and with the flexibility comes complexity. The flexibility allows network administrators to fine-tune their switches for optimal performance. To do that fine-tuning, one needs to understand how the configurable components relate to each other.

The QOS flexibility has been one of the most troublesome aspects of the C3750 family of switches, and that is the main reason for this paper. It explains each component in enough detail that the reader will understand how to make each component work.

This paper has two main parts. The first explains how each individual component works. The second gives examples of how to solve problems.

1.2 Intended Audience

The paper is directed towards Customer Support personnel: the individuals who have to answer customer questions about Egress QOS behavior on the C3750/C3560 family of switches.

1.3 Disclaimer

The QOS behavior of the C3750/C3560 switch families differs from that of the C3750E/C3560E switches because of changes to the switching ASIC. Additionally, each switching ASIC undergoes revisions that affect QOS behavior, so even a C3750 switching product may behave differently depending upon the revision of its switching ASIC. The development teams have done their best to keep the user interface the same for platforms based on either of the two switching ASICs. This paper intends to be switching ASIC agnostic, although it may be necessary to point out differences between the two ASICs at certain points. This paper uses “platform” as a generic term for a C3750/C3560/C3750E/C3560E type switch. As the reader, you need to understand that each flavor of the switching ASIC has its differences. This paper also uses “C3750” as a generic term for a switch, not for a specific switch and ASIC revision.

1.4 Not covered

Ingress QOS is outside the scope of this document. Ingress congestion (and the resulting ingress buffer usage) usually occurs when the stack cable is congested. In a non-stacked switch, you should not experience packet drops due to congestion on the ingress path. Optimizing for stack cable congestion is a separate topic. Ingress QOS topics such as policing and marking are also outside the scope of this document.

The following subjects are not covered by this document at this time:

- EtherChannels

- IP Phones

- 10Gig links

- All forms of ingress QOS logic, including ingress queuing and classification.

2 How the Egress Logic Works

In order to optimize the egress logic to prevent traffic loss for specific traffic flows, an understanding of how the egress logic works is needed. This chapter starts with a summary of the basics, and then gives details on the components. If you don’t care how it works and want to know how to change the configuration to optimize your traffic, skip this chapter. You can always refer back later if you have questions.

In total there are 20 configurable QOS items, and that doesn’t include ACLs. The QOS solution for the C3750 family of switches is very flexible, and because it’s so flexible, it can be confusing and difficult to understand.

2.1 Basics on the Egress Logic of the Switch

The QOS related hardware characteristics of the switch are well documented in many places. The C3750 Configuration Guide is a good source for those trying to get a basic understanding of the entire QOS feature set of the C3750 family. This summary section will briefly discuss the Egress logic characteristics at a high level. The idea is to support the detail sections written below, and to give the reader some understanding of the bigger egress logic picture. This section can be skipped if the reader has a basic understanding already.

Every packet that is to be transmitted out an interface is placed in one or more egress buffers. The egress buffers allow an interface to store packets when there are more packets to be transmitted than can physically be sent (i.e., the interface is experiencing congestion). If the switch drops a packet, the only reason is that it could not allocate enough buffers to hold the packet. Availability of egress buffers determines whether a packet is transmitted. When it comes to reducing packet drops, understanding how the switch uses egress buffers is key. The switch does not concern itself with packets as such; what matters is the number of buffers that a given packet will require if it is enqueued.
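Because the egress logic counts buffers rather than packets, it helps to see how a frame size turns into a buffer count. The sketch below is a minimal illustration that assumes the 256-byte buffer size given in section 2.2.4, and further assumes a frame simply occupies whole buffers with no per-packet overhead; the real ASIC accounting may differ. The function name `buffers_needed` is hypothetical.

```python
import math

# Buffer size stated in section 2.2.4 of this paper.
BUFFER_BYTES = 256


def buffers_needed(frame_bytes: int) -> int:
    """Hypothetical helper: number of whole 256-byte buffers a frame occupies.

    Assumes no per-packet descriptor overhead, which is a simplification.
    """
    if frame_bytes <= 0:
        raise ValueError("frame size must be positive")
    return math.ceil(frame_bytes / BUFFER_BYTES)


if __name__ == "__main__":
    for size in (64, 256, 1024, 1516):
        print(size, "bytes ->", buffers_needed(size), "buffers")
```

Under this assumption, two 1516-byte frames need 12 buffers, which fits inside the 16-buffer minimum reservation described in section 2.2.4.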

The amount of egress buffering varies from platform to platform (i.e., the C3750E family differs from the C3750 family). In the C3750 switch family, each switch divides the egress buffers into two pools: the reserved pool and the common pool. The switch uses the common pool when the reserved pool for the egress interface has already been consumed. The common pool is the “backup” storage area, used when the reserved buffers have been consumed because of congestion.

The number of buffers placed into the common pool is equal to the total number of buffers less the total reserved buffers. At initialization, IOS computes the number of reserved buffers for each ASIC in the switch. The egress ports consume the majority of the reserved buffers; the CPU and other system entities also reserve some. With the default configuration, the common buffer pool has more than half the buffers. The ratio of reserved to common buffers varies from platform to platform and can be modified by end user configuration, as discussed below.

Because the number of egress buffers differs across platforms, the IOS CLI does not deal in hard numbers but in percentages. The IOS CLI does not allow modification of the number of reserved buffers for each port. It does allow modification of how those reserved buffers are allocated among the transmit queues (tx queues).

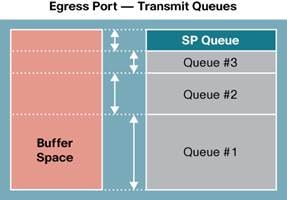

Each port has 4 egress transmit queues (tx queues). Each tx queue reserves some egress buffers so that congestion on other ports (or queues) does not consume all the egress buffers and prevent traffic from being enqueued to an empty tx queue. Each packet must be copied into one or more egress buffers before it is transmitted. The number of buffers reserved for each tx queue can and does vary.

Figure 36-13 in the C3750 Configuration Guide shows a picture of the buffer pool. In the default state, the reserved buffer pool is allocated equally: each tx queue on each port gets the same share.

The buffer pools for the tx queues don’t have to be equal. When changes are made to the queue-set configuration, the picture changes. The buffer pool can look like the figure below, where each tx queue on a port has a different amount of reserved buffer space. It’s highly likely, however, that a given tx queue will have the same number of reserved buffers across the different ports: tx queue 1 will have the same reserved buffer space on each port, tx queue 2 will be the same on each port, and so on, while tx queue 1 may differ from tx queue 2. The tx queue buffer space is determined by the queue-set configuration, and ports configured for the same queue-set have the same characteristics. The queue-set configuration is discussed in detail in the sections below.

Before a packet is placed into an egress tx queue, the ASIC makes several checks to ensure that the packet is allowed in:

1. Are there enough free buffers left in the reserved pool of the target tx queue for this packet, and is the total number of buffers currently used by this port tx queue less than the configured threshold value? If so, the packet is enqueued using buffers from the reserved pool.

2. Otherwise, are there enough free buffers in the common buffer pool for this packet, and is the total number of buffers currently used by this port tx queue less than the configured threshold value? If both conditions are true, the packet is enqueued using buffers from the common pool.

3. Otherwise, the packet is dropped.

The term “threshold” was introduced above. In this paper, a “threshold” is the maximum count of buffers allowed for a tx queue and a given class of traffic. Since multiple classes of traffic can and will use the same tx queue (via mapping), a threshold value is used to further differentiate among classes sharing a tx queue. Threshold configuration and behavior are discussed in more detail below. For now, you need to know that the switch keeps a count of the buffers each tx queue currently occupies in the egress buffer pool (reserved and common), and that if that count gets too high, packets will be dropped because the count would exceed a threshold.

Here’s an example of the logic for placing a packet into a tx queue. A packet requiring 4 buffers is to be egressed on Port 1. The packet’s class of traffic maps it to tx queue 4 and the first threshold. Port 1 tx queue 4 is configured for 50 reserved buffers, and the first configured threshold on tx queue 4 is 250 buffers. When this packet arrives at the egress logic, all 50 reserved buffers of tx queue 4 are already consumed, so criterion 1 above is not met. The egress logic then checks criterion 2. The total number of buffers currently queued for tx queue 4 is 240, and the common buffer pool has 50 buffers available. The packet is enqueued, because the total number of buffers consumed by tx queue 4 after this packet is added will be 244, which is less than the threshold value of 250 buffers. This passes criterion 2.

It’s also important to understand that there are 3 possible thresholds against which a packet can be compared when it is enqueued into a tx queue. Each packet is compared against its “configured” threshold, so packets destined for the same egress tx queue can have different thresholds. This allows further differentiation and prioritization of traffic at the tx queue level. If COS 0 and COS 1 are mapped to tx queue 4, then COS 0 traffic can be measured against threshold 1 and COS 1 traffic against threshold 2. If the threshold 2 value is larger than the threshold 1 value, then COS 0 traffic will be dropped before COS 1 traffic (if the port is congesting on this tx queue).
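The three checks and the worked example can be modeled in a few lines. This is a minimal sketch, not the ASIC implementation: the `TxQueueState` class and `enqueue_decision` function are hypothetical, a packet is assumed to take all its buffers from a single pool, and, following the worked example and section 2.4, the packet is admitted when the tx queue’s count after adding it does not exceed the threshold (the exact boundary behavior is an assumption).

```python
from dataclasses import dataclass


@dataclass
class TxQueueState:
    """Hypothetical model of one port tx queue, counted in buffers."""
    reserved_total: int  # buffers reserved for this tx queue
    reserved_used: int   # reserved buffers currently holding packets
    in_use: int          # all buffers queued on this tx queue (reserved + common)


def enqueue_decision(q: TxQueueState, common_free: int,
                     needed: int, threshold: int) -> str:
    """Return 'reserved', 'common' or 'drop', following checks 1-3 in the text."""
    # Assumption: the count after adding the packet must not exceed the threshold.
    within_threshold = q.in_use + needed <= threshold
    # Check 1: enough free reserved buffers and the threshold is respected.
    if q.reserved_total - q.reserved_used >= needed and within_threshold:
        return "reserved"
    # Check 2: enough free common buffers and the threshold is respected.
    if common_free >= needed and within_threshold:
        return "common"
    # Check 3: otherwise the packet is dropped.
    return "drop"


if __name__ == "__main__":
    # Worked example from the text: Port 1, tx queue 4, first threshold = 250.
    q4 = TxQueueState(reserved_total=50, reserved_used=50, in_use=240)
    print(enqueue_decision(q4, common_free=50, needed=4, threshold=250))
```

Running the worked example prints `common`: the reserved buffers are exhausted, but the common pool has room and 240 + 4 = 244 stays within the 250-buffer threshold.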

Once a packet is placed into a tx queue, the egress logic uses Shaped Round Robin (SRR) to drain the 4 tx queues. SRR is configurable, allowing some queues to drain faster than others. The tx queues in SRR can be “shaped” or “shared”. By default, tx queue 1 is shaped and the other tx queues are shared. “Shared” does what it sounds like: all transmit queues share the egress bandwidth. “Shaped” means that one or more queues are shaped to a certain bandwidth; they are guaranteed that egress bandwidth, and no more. For a shaped transmit queue, the egress logic services the queue at a certain rate, thereby shaping the traffic to a specific CIR. The SRR functionality is documented in detail in the C3750 Configuration Guide, and it is also discussed in more detail below.

2.1.1 QOS Defaults

QOS is disabled by default on the switch. This does not really affect the egress logic, which works the same regardless of whether QOS is enabled. When QOS is disabled, egress resources are configured using “hard coded” default values that the end user cannot modify. With QOS disabled, egress buffering is done with minimal per-port buffer reservation, and most of the egress buffering is available in the common buffer pool. From the end user perspective, all traffic is treated as best effort traffic (COS 0). Where the vast majority of user traffic has the same COS, running with QOS disabled may be a viable option (assuming no other QOS features are needed), because it allows congested ports to use common resources. Some egress buffering resources are reserved for the CPU traffic tx queues so they will not be starved. CPU protocol traffic is always flowing in and out of the switch, and with QOS disabled enough buffers are reserved that no single port can prevent CPU traffic from getting the egress buffer resources it requires. These QOS-disabled resource configurations are not exposed to the end user.

When QOS is enabled, the user-modifiable configurations are moved into the hardware. The egress logic is suspended while IOS programs the hardware, and end user traffic will be dropped during that time. The hardware is reprogrammed whenever the egress logic configuration is changed, not just when QOS is enabled.

Once “mls qos” has been enabled on the switch and the hardware has been reprogrammed, the number of buffers available to best effort traffic is reduced. If all you do is enable QOS with the “mls qos” command, the switch is likely to perform worse rather than better. It’s a common misconception that enabling QOS will improve throughput; just enabling QOS will not. Additional QOS configuration is needed to improve throughput.

To see how any interface is configured for egress queuing use the “show mls qos interface [interface id] queueing” command.

2.2 The Queue-set is Your Friend

In order to fully understand the Egress Logic it’s important to understand the “queue-set” concept. The queue-set is a table that defines how the buffer resources of the 4 egress tx queues will be used. There are 2 user configurable queue-sets on the switch. For each tx queue, the queue-set defines the buffers allocated from the port, threshold1 value, threshold2 value, reserved buffers, and maximum buffers.

Queue-sets are only used for modifying egress logic behavior; ingress buffering does not use queue-sets.

An interface is assigned to one of the two queue-sets. Multiple interfaces can use the same queue-set, and the interfaces on the same switching ASIC do not all need to be assigned the same queue-set. The configuration in a queue-set defines how the interfaces it is applied to will allocate and use their egress buffer resources. The user can configure each of the queue-sets (numbers 1 and 2). By default, when QOS is enabled, all interfaces are members of queue-set 1.

The two queue-set tables allow users to tune the egress logic for multiple ports in one place, rather than for each port and each tx queue, which could get cumbersome. Modifying a queue-set table affects all interfaces assigned to that queue-set.

When the documentation describes Weighted Tail Drop (WTD), it is referring to the thresholds defined in the queue-set. There are really three thresholds. Two are explicit and called out by name: “threshold 1” and “threshold 2”. The third is implicit: the “maximum” value, which bounds how far the tx queue can grow into the common buffer pool.

2.2.1 Elements of the Queue-set Explained

Here’s a sample output of queue-set 1 in its default state:

switch2#show mls qos queue-set 1

Queueset: 1

Queue : 1 2 3 4

----------------------------------------------

buffers : 25 25 25 25

threshold1: 100 200 100 100

threshold2: 100 200 100 100

reserved : 50 50 50 50

maximum : 400 400 400 400

Here is a detailed explanation of each row in the table above. After each row is described, several examples are given to further help with understanding.

“buffers” – percentage of system assigned buffers for the interface that are allocated to the 4 tx queues. The values for tx queues 1 – 4 should add up to 100. The total number of system assigned buffers for an interface remains the same regardless of how the percentages are configured for the 4 tx queues. The total number of system assigned buffers for the interface cannot be changed. This percentage value is not stored in HW, but IOS uses it to compute the values for the rows in the rest of the table. That’s why it’s first. In the example above, each tx queue is given an allocation of 25% of the interfaces assigned buffers. At the switch level, all physical interfaces with the same max speed are given the same amount of system assigned buffers to start with.

“Threshold1” & “Threshold2” – units are a percentage of the total buffers allocated to the tx queue (from the “buffers” calculation). The range is 1-3200. In the example above, the value is 100% (or 200% for tx queue 2), which equates to a buffer count equal to the number of buffers allocated to this tx queue. Thresholds are compared with the current tx queue buffer usage. The table gives 2 different threshold values so that the end user can prioritize different traffic types that are queued to the same tx queue. By making threshold1 larger than threshold2, traffic that uses threshold2 (as determined by the QOS label, described below) will be dropped before traffic using threshold1. The larger the value, the more packets (and buffers) the tx queue can consume. In the formal user documentation, these values are the ones used when performing Weighted Tail Drop (WTD); when reading about WTD for the egress ports, think of the threshold values configured for the tx queue.

“reserved” – This is the percentage of allocated buffers (from the “buffers” percentage above) that the tx queue will actually reserve. The range is 1-100. In the table above, “50” is given. This means that the tx queue is only reserving 50% of the allocated buffers. Allocated buffers that are not reserved are placed into the common buffer pool.

“maximum” – units are a percentage of the total buffers allocated to the tx queue. The range is 1-3200. 100% equates to the number of buffers allocated by the “buffers” percentage above, and anything over 100% means the tx queue can use common buffers to queue packets. The default value for this field is 400%. When threshold 1 and 2 are not applied to a packet (see QOS Label), this value is compared with the current tx queue buffer usage. If the current tx queue buffer usage is below the “maximum”, the packet is enqueued. This is the third threshold value.

The terms “reserved” and “allocated” are a little overused. There are buffers reserved for a port and buffers reserved for a tx queue, and how the tx queue reservations are configured changes how buffers are allocated. Confused? Think of it like this: IOS gives each port X buffers to distribute to its tx queues according to the queue-set configuration. Each tx queue’s share, a percentage of the port total set by the “buffers” row, is its allocated buffer amount. The number of buffers the tx queue actually reserves is the percentage of that allocation configured in the “reserved” row. Unreserved buffers are released back into the common pool.
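The allocated/reserved/released relationship can be sketched as plain arithmetic. This is a minimal model under stated assumptions: the 200-buffer port is only the example value used in section 2.2.2, integer truncation of percentages is assumed, the 16-buffer floor comes from section 2.2.4, and how IOS accounts for the extra buffers when that floor applies is a guess. The function `split_port_buffers` is hypothetical.

```python
def split_port_buffers(port_buffers, buffers_pct, reserved_pct, floor=16):
    """Hypothetical helper: (allocated, reserved, released) buffers per tx queue.

    port_buffers: buffers IOS assigns to the port (200 is only an example value).
    buffers_pct:  the queue-set "buffers" row, one percentage per tx queue.
    reserved_pct: the queue-set "reserved" row.
    floor:        minimum reservation IOS enforces per tx queue (section 2.2.4).
    """
    if sum(buffers_pct) != 100:
        raise ValueError("'buffers' percentages should add up to 100")
    rows = []
    for b_pct, r_pct in zip(buffers_pct, reserved_pct):
        allocated = port_buffers * b_pct // 100
        reserved = max(floor, allocated * r_pct // 100)
        released = max(0, allocated - reserved)  # returned to the common pool
        rows.append((allocated, reserved, released))
    return rows


if __name__ == "__main__":
    default_like = split_port_buffers(200, [25, 25, 25, 25], [50, 50, 50, 50])
    for q, (alloc, res, rel) in enumerate(default_like, start=1):
        print(f"tx queue {q}: allocated={alloc} reserved={res} released={rel}")
```

With 25% per tx queue and 50% reserved, each queue is allocated 50 buffers, reserves 25, and releases 25, so half of the port’s 200 buffers end up in the common pool.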

2.2.2 Example of Queue-set configuration

Real World Example of queue-set as programmed in HW.

In this example, the focus is on a single port. The columns marked “%” hold the values configured in the queue-set; the column to the right of each holds the actual value programmed in HW, in units of buffers. Note: the “buffers” and “reserved” rows are used together to determine the actual reserved buffer value.

| | Q1 % | Q1 buffers | Q2 % | Q2 buffers | Q3 % | Q3 buffers | Q4 % | Q4 buffers |
|---|---|---|---|---|---|---|---|---|
| buffers | 25 | | 25 | | 25 | | 25 | |
| Thresh1 | 100 | 50 | 100 | 50 | 100 | 50 | 100 | 50 |
| Thresh2 | 100 | 50 | 100 | 50 | 100 | 50 | 100 | 50 |
| Reserved | 50 | 25 | 50 | 25 | 50 | 25 | 50 | 25 |
| maximum | 400 | 200 | 400 | 200 | 400 | 200 | 400 | 200 |

In the example above, IOS has assigned 200 buffers to the port. Each tx queue is configured to get 25% of the port total, so each tx queue is allocated 50 buffers. Only 50% of that allocation is reserved, so the actual number of reserved buffers is 25 per tx queue. The 50-buffer allocation of each tx queue is the basis the Thresh1, Thresh2, and maximum rows use to compute their values.

Another example, again using 200 as the number of IOS-assigned buffers for the port. This time, change the percentage of IOS-assigned buffers allocated to tx queues 1 through 4 to 10, 20, 30, and 40 respectively. Here’s the CLI command:

Switch(config)#mls qos queue-set output 1 buffers 10 20 30 40

Keeping all other configuration items the same, here are the values in HW:

| | Q1 % | Q1 buffers | Q2 % | Q2 buffers | Q3 % | Q3 buffers | Q4 % | Q4 buffers |
|---|---|---|---|---|---|---|---|---|
| buffers | 10 | | 20 | | 30 | | 40 | |
| Thresh1 | 100 | 20 | 100 | 40 | 100 | 60 | 100 | 80 |
| Thresh2 | 100 | 20 | 100 | 40 | 100 | 60 | 100 | 80 |
| Reserved | 50 | 16* | 50 | 20 | 50 | 30 | 50 | 40 |
| maximum | 400 | 80 | 400 | 160 | 400 | 240 | 400 | 320 |

‘*’: IOS will not program the HW with any value below 16 (0x10).

A third example builds on the previous one. Leave the buffer allocation percentages in the “buffers” row at 10, 20, 30, 40. This time, make threshold 1 a higher priority for WTD than threshold 2: increase the threshold 1 value for each tx queue to show the effect this will have, and leave the threshold 2 value the same. Additionally, change the reserved value for each tx queue to 30, 40, 60, 70, and raise the maximum to 1000, to see what effect that has on the rest of the table.

Here are the CLI commands (the arguments after “threshold” are the tx queue number, then threshold1, threshold2, reserved, and maximum):

Switch(config)#mls qos queue-set output 1 buffers 10 20 30 40

Switch(config)#mls qos queue-set output 1 threshold 1 200 100 30 1000

Switch(config)#mls qos queue-set output 1 threshold 2 300 100 40 1000

Switch(config)#mls qos queue-set output 1 threshold 3 400 100 60 1000

Switch(config)#mls qos queue-set output 1 threshold 4 500 100 70 1000

| | Q1 % | Q1 buffers | Q2 % | Q2 buffers | Q3 % | Q3 buffers | Q4 % | Q4 buffers |
|---|---|---|---|---|---|---|---|---|
| buffers | 10 | | 20 | | 30 | | 40 | |
| Thresh1 | 200 | 40 | 300 | 120 | 400 | 240 | 500 | 400 |
| Thresh2 | 100 | 20 | 100 | 40 | 100 | 60 | 100 | 80 |
| Reserved | 30 | 16* | 40 | 16 | 60 | 36 | 70 | 56 |
| maximum | 1000 | 200 | 1000 | 400 | 1000 | 600 | 1000 | 800 |

The buffer allocation was 20 for tx queue 1, 40 for tx queue 2, 60 for tx queue 3, and 80 for tx queue 4. The Threshold2 values show this, because that row is still at 100%. Use these buffer allocation numbers as the basis for your computations when filling in the values for the remaining rows in the table.
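The arithmetic behind all three tables can be checked with a small calculator. This is a sketch, not IOS code: `queue_set_to_hw` is a hypothetical function, the 200-buffer port is the illustrative value used in these examples, integer truncation is assumed, and the only special case modeled is the 16-buffer reservation floor from section 2.2.4.

```python
def queue_set_to_hw(port_buffers, buffers_pct, thresh1_pct, thresh2_pct,
                    reserved_pct, maximum_pct, floor=16):
    """Hypothetical helper: turn queue-set percentages into per-queue buffer counts."""
    if sum(buffers_pct) != 100:
        raise ValueError("'buffers' percentages should add up to 100")
    hw = []
    for b, t1, t2, r, m in zip(buffers_pct, thresh1_pct, thresh2_pct,
                               reserved_pct, maximum_pct):
        alloc = port_buffers * b // 100  # buffers allocated to this tx queue
        hw.append({
            "thresh1": alloc * t1 // 100,
            "thresh2": alloc * t2 // 100,
            # IOS does not program a reservation below 16 buffers.
            "reserved": max(floor, alloc * r // 100),
            "maximum": alloc * m // 100,
        })
    return hw


if __name__ == "__main__":
    # Third example: buffers 10/20/30/40 with the threshold commands shown above.
    third = queue_set_to_hw(200, [10, 20, 30, 40], [200, 300, 400, 500],
                            [100] * 4, [30, 40, 60, 70], [1000] * 4)
    for q, row in enumerate(third, start=1):
        print(f"Q{q}", row)
```

For the third example, tx queue 4 has an 80-buffer allocation, so a 1000% maximum works out to 800 buffers, matching the table.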

2.2.3 Queue-set configuration Guidelines

1. Because percentages are used, it’s possible to set the threshold levels really low by configuring percentages below 100. Users are discouraged from configuring threshold percentages below 100, because doing so potentially wastes egress buffer resources. Consider a case where the threshold value is less than the reserved buffer count. Even when the tx queue still has free reserved buffers, the HW always checks that the threshold value mapped to the packet will not be exceeded, so the reserved buffers above the threshold can never be used. Take care that the threshold values are not so low that they prevent the use of reserved buffers.

2. Increasing the “buffers” and/or “reserved” fields for a given tx queue increases the reserved buffer pool for the entire ASIC, thereby decreasing the common buffer pool. Remember that the buffer pool is finite. The default queue-set settings don’t take too many buffers out of the common buffer pool.

3. Consider increasing the “thresholds” and the “maximum” values before modifying the “buffers” and “reserved” values to allow for traffic in a given tx queue to be queued during congestion. Increasing thresholds should always be the first change when trying to prevent traffic drop due to congestion.

4. If you have a few ports with special needs (e.g., uplinks), consider applying queue-set 2 to those ports and leaving the other ports in queue-set 1. Then, when changes are made to queue-set 2, the potential impact on overall buffer resources is limited to the interfaces assigned to queue-set 2.

2.2.4 More Queue-set Information

IOS ensures that at least 16 buffers are reserved for each tx queue, no matter how it’s configured. Each buffer is 256 bytes, so 16 × 256 bytes = 4096 bytes are reserved, which is enough for 2 baby giant frames (1516 bytes). Even if the configured “buffers” and “reserved” values work out to fewer than 16 buffers, IOS will still reserve 16 buffers. In other words, IOS won’t let you remove all reserved buffers from a tx queue.

2.3 How the transmit Queue is determined

When egressing a packet, the egress logic first determines the physical interface(s) out of which to send the traffic, and then the tx queue into which to enqueue the packet.

To know which egress tx queue the packet will be assigned to, you have to go all the way back to when the packet first ingressed the switch. On ingress, each packet is assigned a QOS label during the “classification process”. The QOS label is an index into a table; the complete table is given at the end of the document. A QOS label is a value between 0 and 255. The majority of user traffic will use QOS labels in the range 0 – 74; other QOS labels are for internal traffic and special scenarios. The QOS label stays associated with the packet until the packet is successfully placed into an egress buffer.

Depending upon how the switch and the ports are configured, the QOS label assigned to the incoming packet will vary. With QOS disabled on the switch, all packets ingressed are assigned QOS label 0. When QOS is enabled on the switch, but all ports are still considered to be untrusted, then all ingressed packets are assigned QOS label 1. When ports are configured for “mls qos trust dscp” then the assigned QOS label is DSCP value + 2. When “mls qos trust cos” is configured then QOS Label is COS value + 66.
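The label assignment rules above amount to a small function. This sketch covers only the four cases described in this paragraph (QOS disabled, untrusted, trust DSCP, trust COS); the function name `qos_label` and its parameters are hypothetical, and internal or special-case labels are not modeled.

```python
from typing import Optional


def qos_label(qos_enabled: bool, trust: Optional[str] = None,
              dscp: int = 0, cos: int = 0) -> int:
    """Hypothetical helper applying the label rules described in section 2.3.

    trust: None (port untrusted), "dscp" or "cos".
    """
    if not qos_enabled:
        return 0  # QOS disabled: every ingressed packet gets label 0
    if trust is None:
        return 1  # QOS enabled but the port is untrusted
    if trust == "dscp":
        if not 0 <= dscp <= 63:
            raise ValueError("DSCP must be 0-63")
        return dscp + 2  # labels 2-65
    if trust == "cos":
        if not 0 <= cos <= 7:
            raise ValueError("COS must be 0-7")
        return cos + 66  # labels 66-73
    raise ValueError("trust must be None, 'dscp' or 'cos'")


if __name__ == "__main__":
    label = qos_label(True, "dscp", dscp=16)
    print(label, hex(label))
```

For example, a packet arriving with DSCP 16 on a port that trusts DSCP gets label 18, which appears as 12 in the hexadecimal label column of the sample output below, where its Tx entry is 2 - 0 (tx queue 3, threshold 1).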

The QOS label identifies all queuing actions that are performed on the packet. One of the actions in the QOS label table identifies the egress queue; another identifies the threshold to compare against. There are 3 possible thresholds that can be assigned to a packet, numbered 0, 1, or 2 in the table output. As explained in the overview section above, the packet is not enqueued to the tx queue unless it passes the threshold check.

You can see the egress queue and threshold for each QOS label in the output of “show platform qos label”. The output has three columns, and only the middle column is under discussion here. The first column is the mapping for the ingress queuing logic. The third column is the mapping used when DSCP rewrite is performed on an ingressed packet. Here’s a sample of the table in its default state:

switch2#show platform qos label

Label : Rx queue-thr : Tx queue-thr : rewrite dscp-cos

--------------------------------------------------------------

0 2 - 2 3 - 2 FF - FF

1 2 - 0 1 - 0 0 - 0

2 2 - 0 1 - 0 0 - 0

3 2 - 0 1 - 0 1 - 0

4 2 - 0 1 - 0 2 - 0

5 2 - 0 1 - 0 3 - 0

6 2 - 0 1 - 0 4 - 0

7 2 - 0 1 - 0 5 - 0

8 2 - 0 1 - 0 6 - 0

9 2 - 0 1 - 0 7 - 0

A 2 - 0 1 - 0 8 - 1

B 2 - 0 1 - 0 9 - 1

C 2 - 0 1 - 0 A - 1

D 2 - 0 1 - 0 B - 1

E 2 - 0 1 - 0 C - 1

F 2 - 0 1 - 0 D - 1

10 2 - 0 1 - 0 E - 1

11 2 - 0 1 - 0 F - 1

12 2 - 0 2 - 0 10 - 2

13 2 - 0 2 - 0 11 - 2

14 2 - 0 2 - 0 12 - 2

15 2 - 0 2 - 0 13 - 2

16 2 - 0 2 - 0 14 - 2

-- more --

The output of this command is 0-based, so add 1 to all Tx queue and threshold values to get the actual numbers. The label column is shown in hexadecimal (the label after F is 10).

In the example above, label 0 shows that the egress tx queue will be queue 3 (actually tx queue 4), and the threshold value will be 2 (actually “maximum” as far as the user is concerned). When QOS is disabled, all user data packets that ingress the switch are assigned QOS label 0.

A quick example: when QOS is enabled and the ports are still untrusted (true when the ingress ports have not been explicitly put into a trust state), QOS label 1 is assigned to all packets. By default, this means the packet will use transmit queue 2 (shown as 1 above) and will be compared against threshold 1 (shown as 0 above).
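Reading the 0-based, hexadecimal output by hand is error-prone, so here is a small parser for a single data row in the format of the sample above. It is a sketch that assumes exactly that layout (label, then three “a - b” pairs) and only converts the Tx column; the function `parse_label_row` and the `THRESHOLD_NAMES` mapping are hypothetical.

```python
# Threshold index (1-based) to the name used in the queue-set output.
THRESHOLD_NAMES = {1: "threshold1", 2: "threshold2", 3: "maximum"}


def parse_label_row(line: str) -> dict:
    """Hypothetical parser for one data row of 'show platform qos label'.

    Expected tokens: label, rxq, '-', rxthr, txq, '-', txthr, dscp, '-', cos.
    """
    tokens = line.split()
    if len(tokens) != 10 or tokens[2] != "-" or tokens[5] != "-" or tokens[8] != "-":
        raise ValueError(f"unexpected row format: {line!r}")
    tx_queue = int(tokens[4]) + 1      # output is 0-based
    tx_threshold = int(tokens[6]) + 1  # output is 0-based
    return {
        "label": int(tokens[0], 16),   # label column is hexadecimal
        "tx_queue": tx_queue,
        "tx_threshold": THRESHOLD_NAMES[tx_threshold],
    }


if __name__ == "__main__":
    for row in ("0 2 - 2 3 - 2 FF - FF",
                "1 2 - 0 1 - 0 0 - 0",
                "12 2 - 0 2 - 0 10 - 2"):
        print(parse_label_row(row))
```

Label 0 comes out as tx queue 4 with the “maximum” threshold, and label 1 as tx queue 2 with threshold1, matching the two readings in the text.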

When QOS is enabled, and “mls qos trust dscp” is configured on the ingress interface, then the output of the CLI command “show mls qos maps dscp-output-q” shows the egress tx queue and threshold value for all DSCP values. The tx queue and threshold mappings to each DSCP value are configurable.

Here’s an example of the default config:

Switch#show mls qos maps dscp-output-q

Dscp-outputq-threshold map:

d1 :d2 0 1 2 3 4 5 6 7 8 9

------------------------------------------------------------

0 : 02-01 02-01 02-01 02-01 02-01 02-01 02-01 02-01 02-01 02-01

1 : 02-01 02-01 02-01 02-01 02-01 02-01 03-01 03-01 03-01 03-01

2 : 03-01 03-01 03-01 03-01 03-01 03-01 03-01 03-01 03-01 03-01

3 : 03-01 03-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01

4 : 01-01 01-01 01-01 01-01 01-01 01-01 01-01 01-01 04-01 04-01

5 : 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01

6 : 04-01 04-01 04-01 04-01

Based on the DSCP mapping from above, packets ingressing with DSCP 0 will egress in tx queue 2. Packets with DSCP 20 will egress in tx queue 3, and packets with DSCP 50 will egress in tx queue 4. Unlike the QOS label table above, the values in this table are 1 based.
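The dscp-output-q grid is indexed by tens (d1 rows) and ones (d2 columns). The sketch below parses the default output shown above into a DSCP to (tx queue, threshold) lookup; unlike the label table, these values are already 1-based. The function `parse_dscp_output_map` is hypothetical and assumes exactly the layout printed above.

```python
# Default "show mls qos maps dscp-output-q" output, copied from above.
DEFAULT_DSCP_OUTPUT_Q = """\
Dscp-outputq-threshold map:
d1 :d2 0 1 2 3 4 5 6 7 8 9
------------------------------------------------------------
0 : 02-01 02-01 02-01 02-01 02-01 02-01 02-01 02-01 02-01 02-01
1 : 02-01 02-01 02-01 02-01 02-01 02-01 03-01 03-01 03-01 03-01
2 : 03-01 03-01 03-01 03-01 03-01 03-01 03-01 03-01 03-01 03-01
3 : 03-01 03-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01
4 : 01-01 01-01 01-01 01-01 01-01 01-01 01-01 01-01 04-01 04-01
5 : 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01
6 : 04-01 04-01 04-01 04-01
"""


def parse_dscp_output_map(text: str) -> dict:
    """Hypothetical parser: DSCP value -> (tx queue, threshold), both 1-based."""
    table = {}
    for line in text.splitlines():
        if ":" not in line:
            continue  # skips the dashed separator line
        d1, cells = line.split(":", 1)
        d1 = d1.strip()
        if not d1.isdigit():
            continue  # skips the title and the "d1 :d2" header
        for d2, cell in enumerate(cells.split()):
            queue, threshold = cell.split("-")
            table[int(d1) * 10 + d2] = (int(queue), int(threshold))
    return table


if __name__ == "__main__":
    dscp_map = parse_dscp_output_map(DEFAULT_DSCP_OUTPUT_Q)
    for dscp in (0, 20, 50):
        print("DSCP", dscp, "-> tx queue %d, threshold %d" % dscp_map[dscp])
```

The lookups for DSCP 0, 20, and 50 give tx queues 2, 3, and 4, the same readings as the paragraph above.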

A similar command exists for COS output queue mapping. “show mls qos maps cos-output-q” gives the output tx queue and threshold mapping for each COS value.

The COS and DSCP to output-q mapping tables mirror the QOS label table, which is what is programmed into hardware. Updates to the DSCP or COS to output-q mapping tables are reflected in the QOS label table. Here’s an example of what happens when the DSCP output-q mapping is changed so that DSCP 0 uses tx queue 3 instead of 2, with the same threshold value:

Switch(config)#mls qos srr-queue output dscp-map queue 3 0
Switch(config)# end

Switch#show mls qos maps dscp-output-q
Dscp-outputq-threshold map:
d1 :d2 0 1 2 3 4 5 6 7 8 9
------------------------------------------------------------
0 : 03-01 02-01 02-01 02-01 02-01 02-01 02-01 02-01 02-01 02-01
1 : 02-01 02-01 02-01 02-01 02-01 02-01 03-01 03-01 03-01 03-01
2 : 03-01 03-01 03-01 03-01 03-01 03-01 03-01 03-01 03-01 03-01
3 : 03-01 03-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01
4 : 01-01 01-01 01-01 01-01 01-01 01-01 01-01 01-01 04-01 04-01
5 : 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01
6 : 04-01 04-01 04-01 04-01

Switch#show platform qos label
Label : Rx queue-thr : Tx queue-thr : rewrite dscp-cos
--------------------------------------------------------------
0 2 - 2 3 - 2 FF - FF
1 2 - 0 1 - 0 0 - 0
2 2 - 0 2 - 0 0 - 0
3 2 - 0 1 - 0 1 - 0
4 2 - 0 1 - 0 2 - 0
5 2 - 0 1 - 0 3 - 0
.
.
.

As the output shows, the QOS label table was updated as well: label 2, which corresponds to DSCP 0, now lists tx queue 2, which is tx queue 3 in 1-based terms (remember that QOS label table output is 0-based). Note that the change applies only to DSCP 0 when DSCP is trusted on the ingress interface. If DSCP is not trusted, QOS label 1 is used and the egress tx queue is still tx queue 2.

2.4 Will the packet be dropped by WTD?

The egress logic again references the QOS label assigned at ingress, this time to get the threshold index (1-3) for the packet. Threshold 3 is the “maximum” value: the queue-set section above described a “maximum” value alongside threshold1 and threshold2, and that maximum serves as threshold 3.

The egress logic uses Weighted Tail Drop (WTD) to drop the packet if the port is too congested to enqueue it. Thresholds operate on the current buffer count of a given tx queue: if the buffers the packet requires plus the buffers currently in use by the tx queue exceed the threshold value, the packet is dropped.
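The drop decision can be sketched in a few lines. This is a simplified Python model, not the ASIC logic: the function name wtd_admit is hypothetical, it assumes the threshold is a percentage of the buffers the queue-set allocates to the tx queue, and it ignores whether the common buffer pool actually has free buffers to lend.

```python
def wtd_admit(in_use: int, needed: int, allocated: int, threshold_pct: int) -> bool:
    """Weighted Tail Drop admission check for one tx queue (simplified model).

    in_use        -- buffers currently held by the tx queue
    needed        -- buffers the arriving packet requires
    allocated     -- buffers the queue-set allocates to this tx queue (assumed known)
    threshold_pct -- percentage for the threshold the packet maps to
                     (threshold1, threshold2, or the maximum acting as threshold 3)
    """
    # Assumption: the threshold is a percentage of the queue's allocated buffers.
    limit = allocated * threshold_pct / 100
    # The packet is dropped if it would push the queue past its threshold.
    return in_use + needed <= limit


# Two packets arriving on the same tx queue at the same moment, mapped to
# different thresholds (100% vs 200% of a 100-buffer allocation).
print(wtd_admit(in_use=100, needed=1, allocated=100, threshold_pct=100))  # False: dropped
print(wtd_admit(in_use=100, needed=1, allocated=100, threshold_pct=200))  # True: enqueued
```

The second call shows why mapping traffic to a higher threshold protects it: the same queue depth drops one packet and admits the other.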

Each tx queue has 3 thresholds so that traffic can be further differentiated: higher priority traffic can be mapped to a higher threshold than lower priority traffic. The thresholds are configured per tx queue in the queue-set, so the threshold values for tx queue 1 do not have to match those for tx queue 2 or tx queue 3.

The mapping of DSCP or COS values to a particular threshold uses the same configuration commands as the DSCP or COS to tx queue mapping commands used in the previous section.

2.4.1 Threshold Modification Strategy

By default, all DSCP and COS values are mapped to threshold 1. Modifying threshold values really means granting access to the common buffer pool, and that is how it should be approached: should access be granted to all traffic, or only to special high priority traffic? Because all traffic uses threshold 1 by default, the way to let all traffic use the common buffer pool, and drop as few packets as possible, is to raise the threshold 1 value in the queue-set. To let only certain flows use the common buffer pool, map that traffic to threshold 2, then raise the threshold 2 values for the tx queue(s) carrying it.

To get more information on the QOS Label table and how to modify its configuration see the detail section on QOS label below.

2.5 Where are the packets going

It’s pretty safe to say that the packets are either enqueued to a tx queue or they are dropped. The good news is that it’s possible to get counts for all packets enqueued and all packets dropped.

Use the CLI command “show mls qos interface [interface id] statistics” to see the counts of DSCP- and COS-mapped packets that have ingressed and egressed this interface.

Switch-38#sh mls qos interface gi1/0/25 statistics
GigabitEthernet1/0/25

dscp: incoming
-------------------------------
0 - 4 : 200 0 0 0 0
5 - 9 : 0 0 0 0 0
10 - 14 : 0 0 0 0 0
15 - 19 : 0 0 0 0 0
20 - 24 : 0 0 0 0 0
25 - 29 : 0 0 0 0 0
30 - 34 : 0 0 0 0 0
35 - 39 : 0 0 0 0 0
40 - 44 : 0 0 0 0 0
45 - 49 : 0 0 0 0 0
50 - 54 : 0 0 0 0 0
55 - 59 : 0 0 0 0 0
60 - 64 : 0 0 0 0

dscp: outgoing
-------------------------------
0 - 4 : 3676 0 0 0 0
5 - 9 : 0 0 0 0 0
10 - 14 : 0 0 0 0 0
15 - 19 : 0 0 0 0 0
20 - 24 : 0 0 0 0 0
25 - 29 : 0 0 0 0 0
30 - 34 : 0 0 0 0 0
35 - 39 : 0 0 0 0 0
40 - 44 : 0 0 0 0 0
45 - 49 : 0 0 0 162 0
50 - 54 : 0 0 0 0 0
55 - 59 : 0 0 0 0 0
60 - 64 : 0 0 0 0

cos: incoming
-------------------------------
0 - 4 : 202 0 0 0 0
5 - 7 : 0 0 0

cos: outgoing
-------------------------------
0 - 4 : 4235 0 0 0 0
5 - 7 : 0 0 1748

Policer: Inprofile: 0 OutofProfile: 0

There are some platform level commands that can also be used. The platform level commands will show which threshold was matched before enqueuing the packet into the tx queue.

In the following example, the switch has been configured with QOS enabled, but ports are untrusted. QOS label 1 is being used for all packets. This QOS label defaults to tx queue 2, and threshold 1 value. DSCP is not used unless DSCP trust is explicitly configured on the interface. Again, the output from the “show platform …” command is 0 based.

Switch#show platform port-asic stats enqueue gi1/0/25
Interface Gi1/0/25 TxQueue Enqueue Statistics
Queue 0
Weight 0 Frames 2
Weight 1 Frames 0
Weight 2 Frames 0
Queue 1
Weight 0 Frames 3729
Weight 1 Frames 91
Weight 2 Frames 1894
Queue 2
Weight 0 Frames 0
Weight 1 Frames 0
Weight 2 Frames 0
Queue 3
Weight 0 Frames 0
Weight 1 Frames 0
Weight 2 Frames 577

The user data traffic in this example is all mapped to tx queue 2 (shown as Queue 1) and threshold 1 (shown as Weight 0). The remaining frames are CPU-generated traffic, which uses a different QOS label. This command does not distinguish traffic by source, so packets originating from the CPU are counted too.

When packets are being dropped due to congestion, use the “show platform port-asic stats drop [interface id]” command to see which tx queue and which threshold are dropping them. A packet is discarded when the buffers it needs would push its tx queue past the threshold the packet is mapped to.

Switch-38#show platform port-asic stats drop gi1/0/25
Interface Gi1/0/25 TxQueue Drop Statistics
Queue 0
Weight 0 Frames 0
Weight 1 Frames 0
Weight 2 Frames 0
Queue 1
Weight 0 Frames 5
Weight 1 Frames 0
Weight 2 Frames 0
Queue 2
Weight 0 Frames 0
Weight 1 Frames 0
Weight 2 Frames 0
Queue 3
Weight 0 Frames 0
Weight 1 Frames 0
Weight 2 Frames 0

Note: the command actually displays 8 queues, but only the first 4 are used, so only those are shown here.
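Translating the 0-based “Queue”/“Weight” numbers by hand is error-prone when many ports are involved. Below is a minimal Python sketch that turns the enqueue or drop output into 1-based (tx queue, threshold) counts. It assumes the line layout reproduced in this document (extra spaces are tolerated, other format changes are not), and parse_port_asic_stats is a hypothetical name.

```python
import re

# Drop statistics for Gi1/0/25, copied from the output above.
SAMPLE = """\
Interface Gi1/0/25 TxQueue Drop Statistics
Queue 0
Weight 0 Frames 0
Weight 1 Frames 0
Weight 2 Frames 0
Queue 1
Weight 0 Frames 5
Weight 1 Frames 0
Weight 2 Frames 0
Queue 2
Weight 0 Frames 0
Weight 1 Frames 0
Weight 2 Frames 0
Queue 3
Weight 0 Frames 0
Weight 1 Frames 0
Weight 2 Frames 0
"""


def parse_port_asic_stats(text: str) -> dict[tuple[int, int], int]:
    """Map (tx queue, threshold), translated to 1-based, to frame counts."""
    counts: dict[tuple[int, int], int] = {}
    queue = None
    for raw in text.splitlines():
        line = raw.strip()
        m = re.fullmatch(r"Queue\s+(\d+)", line)
        if m:
            queue = int(m.group(1)) + 1  # "Queue 1" in the output is tx queue 2
            continue
        m = re.fullmatch(r"Weight\s+(\d+)\s+Frames\s+(\d+)", line)
        if m and queue is not None and queue <= 4:  # only the first 4 queues are used
            threshold = int(m.group(1)) + 1  # "Weight 0" is threshold 1
            counts[(queue, threshold)] = int(m.group(2))
    return counts


drops = {key: value for key, value in parse_port_asic_stats(SAMPLE).items() if value}
print(drops)  # {(2, 1): 5}: tx queue 2, threshold 1 dropped 5 frames
```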

2.6 Order of Egress: Shaped, Shared or Both

Not to be outdone by the queue-set concept, the egress servicing logic is also very flexible. The C3750 family of switches supports Shaped Round Robin (SRR): the egress logic can shape a tx queue to a specific percentage of the bandwidth, and it can share the bandwidth among the tx queues. Sharing is based on weights configured on the interface and behaves like Weighted Round Robin. Because the egress logic can both shape and share, it is called Shaped Round Robin, or SRR for short. It can also be thought of as WRR+.

The egress servicing logic is configured per interface, not per ASIC or per queue-set, so every interface can be configured differently.

When a tx queue for an interface is “shaped”, the tx queue is guaranteed a certain percentage of the available bandwidth, and no more. When a tx queue is “shared”, it is also guaranteed a certain percentage of the available egress bandwidth, but it can use more of the available bandwidth if the other tx queues are empty; “shared” is not rate limited. Note the word “available”: it’s possible to limit an interface’s physical bandwidth, so the available bandwidth may be less than the physical link rate. This is explained below.

By default, when QOS is enabled, tx queue 1 is shaped on every physical interface and tx queues 2-4 are shared. The “shape” value for tx queue 1 is 25, and the “shared” value is 25 for each tx queue. If a tx queue has a nonzero value in the shaped configuration, its weight in the shared configuration is not used for sharing; a zero value in the shaped configuration means the tx queue is shared.

To view the egress servicing configuration, use the CLI command “show mls qos interface [intf id] queueing”. Here’s an example:

Switch#show mls qos interface gi1/0/25 queueing
GigabitEthernet1/0/25
Egress Priority Queue : disabled
Shaped queue weights (absolute) : 25 0 0 0
Shared queue weights : 25 25 25 25
The port bandwidth limit : 100 (Operational Bandwidth:100.0)
The port is mapped to qset : 1

There is one interface-level command to configure shaping and one to configure sharing. Here’s an example:

Switch(config)#interface gi1/0/26
Switch(config-if)#srr-queue bandwidth shape 50 4 0 0
Switch(config-if)#srr-queue bandwidth share 25 25 25 25

In the above example, tx queue 1 is shaped to 2% (1/50), tx queue 2 is shaped to 25% (1/4), and shaping is disabled for tx queues 3 and 4. Tx queues 3 and 4 have equal share weights, so each is guaranteed 50% of the available bandwidth. The shaping and sharing configurations above are explained below; if you’re confused, read on.

2.6.1 What does the Shaping value really do

The configuration guide states that the shaping value is an inverse ratio of the actual shaped bandwidth. The default value is 25, which according to the configuration guide means 1/25, or 4%. So, by default, tx queue 1 is shaped to 4% of the available egress bandwidth; on an interface operating at 100 Mb/s, that is 4 Mb/s. The smaller the number, the higher the percentage of bandwidth that is guaranteed: if the shaped value for tx queue 1 is 8, that tx queue is guaranteed 12.5% of the available egress bandwidth[1].

The configuration guide also states that the lowest-numbered tx queues should be used for shaping, because the hardware algorithm that services the queues starts with the lowest-numbered queue. Lower-numbered queues are shaped more smoothly than higher-numbered queues.

The range of shaping weights is 1-65535; a weight of 65535 is a very small percentage of the available egress bandwidth. The same command is used on 1 Gig interfaces and 10 Mb interfaces, and the larger weights are more meaningful on faster interfaces. To shape a tx queue to 64,000 bits/second on a 1 Gig interface, the weight is 15625. If shaping were supported on a 10 Gig interface, the lowest possible shaped rate, with weight 65535, would be 152,590 bits/second. Shaping is not used on 10 Gig interfaces[2].
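The inverse relationship between shape weight and rate is easy to get backwards, so here is the arithmetic from the last three paragraphs as a small Python sketch. It models only the 1/weight rule from the configuration guide; the scheduler’s real granularity (discussed next) is not modeled, and the helper names are hypothetical.

```python
def _check_weight(shape_weight: int) -> None:
    if not 1 <= shape_weight <= 65535:
        raise ValueError("shape weight must be 1-65535 (0 means the queue is shared)")


def shaped_pct(shape_weight: int) -> float:
    """Percent of the available egress bandwidth for a shape weight (1/weight)."""
    _check_weight(shape_weight)
    return 100 / shape_weight


def shaped_rate_bps(shape_weight: int, available_bps: float) -> float:
    """Rate a shaped tx queue is limited to: available bandwidth / weight."""
    _check_weight(shape_weight)
    return available_bps / shape_weight


def shape_weight_for(target_bps: float, available_bps: float) -> int:
    """Nearest shape weight for a target rate."""
    weight = round(available_bps / target_bps)
    _check_weight(weight)
    return weight


print(shaped_pct(25))                      # 4.0  -> default weight 25
print(shaped_rate_bps(25, 100e6))          # 4000000.0 -> 4 Mb/s on a 100 Mb/s port
print(shaped_pct(8))                       # 12.5
print(shape_weight_for(64_000, 1e9))       # 15625 on a 1 Gig interface
print(int(shaped_rate_bps(65535, 10e9)))   # 152590 -> lowest rate at 10 Gig
```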

The shaper’s granularity is not exact. A shaped value of 50 is 2% of the available egress bandwidth, but you can expect that the queue may receive 3%. This applies to all shaped values (and shared values): the actual bandwidth provided by the egress scheduler can be off by 1-3%.

2.6.2 Shared Weights Explained

The “weights” in sharing are the weights of a Weighted Round Robin (WRR), which is how the egress logic shares the interface among all tx queues. Unlike shaping, the share weight entered in the CLI is not an inverse value; share weights are ratios relative to the other share weights on the same interface. The configuration guide gives a good example: if the share weights for an interface are 1, 2, 3, 4 for tx queues 1-4 respectively, then tx queue 1 gets 10% of the interface bandwidth, tx queue 2 gets 20%, tx queue 3 gets 30%, and tx queue 4 gets 40%. For tx queue 4, with a weight of 4, the ratio is 4 / (1+2+3+4) = 4/10 = 0.4, or 40%: tx queue 4 holds 40% of the total weight configured on the interface. Using weights of 1, 2, 3, 4 is therefore equivalent to using weights of 10, 20, 30, 40.

By default, the share weight is 25 for all tx queues, so each tx queue is guaranteed at least 25% (25/100) of the interface bandwidth regardless of current tx queue buffer usage (assuming for now that tx queue 1 is not shaped).
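The ratio arithmetic from the configuration guide example can be checked with a short Python sketch. It assumes no tx queue is shaped and the expedite queue is disabled; share_percentages is a hypothetical name.

```python
def share_percentages(weights: list[int]) -> list[float]:
    """Guaranteed minimum bandwidth (%) per tx queue from SRR share weights.

    Assumes no tx queue is shaped and the expedite queue is disabled.
    """
    if any(w <= 0 for w in weights):
        raise ValueError("the CLI requires a non-zero share weight for every queue")
    total = sum(weights)
    return [100 * w / total for w in weights]


print(share_percentages([1, 2, 3, 4]))      # [10.0, 20.0, 30.0, 40.0]
print(share_percentages([10, 20, 30, 40]))  # same ratios, same result
print(share_percentages([25, 25, 25, 25]))  # [25.0, 25.0, 25.0, 25.0]
```

Because only the ratios matter, scaling every weight by the same factor leaves the guarantees unchanged.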

The tx queue servicing logic maintains the WRR state for all tx queues, and it shares the egress bandwidth whenever the queue due for service is empty: when the service time slot falls to an empty queue, a tx queue with enqueued packets is given the open slot. Thus one tx queue can exceed its guaranteed bandwidth if it has enqueued packets and the other tx queues are empty.

The servicing logic uses bytes transmitted, not packet counts or buffer counts, as the unit for maintaining the weights, and it keeps the mix of packets from each tx queue in line with the configured share weights. If every tx queue has packets waiting, the servicing logic does not drain multiple packets back to back from the tx queue with the highest weight. Instead, it loops through all the tx queues: after sending one packet from a tx queue, it moves on to the next. If that tx queue is not empty and is due to transmit, it is serviced; if it is empty, or its weight means it is not yet its turn, the servicing logic moves on to the next tx queue. Over time, packets from each tx queue egress the interface at the configured weight ratios.
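To make the description concrete, here is a toy simulation in Python. It is a stand-in, not the switch’s actual scheduler: the credit scheme (each non-empty queue earns credit per pass in proportion to its share weight, the highest weight earning one packet’s worth) and the name simulate_shared_rr are assumptions chosen to match the behavior described above: byte-based accounting, at most one packet per queue visit, and empty queues giving up their share.

```python
from collections import deque


def simulate_shared_rr(weights, backlog, packet_bytes=200, passes=400):
    """Toy byte-based round robin with at most one packet per queue visit.

    Each pass visits tx queues 1..4 in order. A non-empty queue earns credit
    in proportion to its share weight and sends one packet when its credit
    covers the packet. Empty queues are skipped and bank no credit, so their
    share goes to queues that have packets waiting.
    Returns the percentage of bytes sent by each queue.
    """
    queues = [deque([packet_bytes] * n) for n in backlog]
    credit = [0.0] * len(weights)
    sent = [0] * len(weights)
    top = max(weights)
    for _ in range(passes):
        for i, weight in enumerate(weights):
            if not queues[i]:
                credit[i] = 0.0
                continue
            credit[i] += weight / top * packet_bytes
            if credit[i] >= queues[i][0]:
                size = queues[i].popleft()
                credit[i] -= size
                sent[i] += size
    total = sum(sent)
    return [round(100 * b / total, 1) for b in sent]


print(simulate_shared_rr([1, 2, 3, 4], backlog=[1000] * 4))             # [10.0, 20.0, 30.0, 40.0]
print(simulate_shared_rr([1, 2, 3, 4], backlog=[0, 1000, 1000, 1000]))  # [0.0, 22.2, 33.3, 44.4]
```

The second run shows the sharing behavior: with tx queue 1 idle, its slots go to tx queues 2-4, which then split the link in the ratio 2:3:4.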

2.6.3 Shaped and Shared Combination

By default, each interface is both shaped and shared: tx queue 1 is shaped to 4% of the bandwidth (shape weight 25), and the other tx queues are guaranteed at least 25% of the available bandwidth. If tx queue 1 congests, it drops packets. When tx queues 2-4 congest, they borrow the unused bandwidth of the other tx queues before they drop packets. Tx queues 2-4 drop packets only when packets are being serviced at link rate for all tx queues and the buffer consumption of tx queues 2-4 exceeds the configured threshold for the packets waiting to be enqueued. As long as at least 1 of the 4 tx queues is configured to share, the egress servicing logic can fill 100% of the available egress bandwidth.

If tx queue 1 has enqueued packets and has not exceeded its shape, it will not be prevented from egressing. Tx queues 2-4 cannot eat into tx queue 1’s guaranteed bandwidth while tx queue 1 has enqueued packets.

When one tx queue is shaped, the shared queues still use a ratio to determine the guaranteed minimum bandwidth of each shared queue, but the share weight of the shaped queue is not included in that ratio. The CLI requires a non-zero share weight for every queue; because the queue is shaped, its share weight is ignored. Configure the weights for the non-shaped queues knowing that the shaped queue’s share weight will not be used: the combined weights of the shared queues alone determine the guaranteed bandwidth of each shared tx queue.

Here’s an example where things work differently than they appear to.

Switch(config)#int gi1/0/26
Switch(config-if)#srr-queue bandwidth shape 4 0 0 0
Switch(config-if)#srr-queue bandwidth share 1 25 25 25

In this example, tx queue 1 is shaped to 25% (1/4) of the available egress bandwidth and is given a share weight of 1. Because tx queue 1 is shaped, its share weight is ignored, so queues 2-4 are guaranteed not 25% but 33% (25/75) of the egress bandwidth each: the share ratio does not include the share weights of shaped queues.
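The rule that shaped queues drop out of the share ratio can be sketched in Python as follows. This is a minimal model of the text’s description; guaranteed_shared_pct is a hypothetical name, and it returns None for shaped queues because their bandwidth comes from the shape weight instead.

```python
def guaranteed_shared_pct(shape: list[int], share: list[int]) -> list:
    """Share-ratio guarantee (%) per tx queue; None for shaped queues.

    A nonzero shape weight means the queue is shaped, so its share weight
    is left out of the ratio.
    """
    shared_total = sum(w for s, w in zip(shape, share) if s == 0)
    if shared_total == 0:
        return [None] * len(shape)  # every queue is shaped
    return [None if s else round(100 * w / shared_total, 1)
            for s, w in zip(shape, share)]


print(guaranteed_shared_pct([4, 0, 0, 0], [1, 25, 25, 25]))   # [None, 33.3, 33.3, 33.3]
print(guaranteed_shared_pct([0, 0, 0, 0], [25, 25, 25, 25]))  # [25.0, 25.0, 25.0, 25.0]
```

The text notes the same exclusion applies to tx queue 1’s share weight when the expedite queue is enabled, so that case can be modeled by putting any nonzero value in the first shape position.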

It’s possible to over-allocate the available egress bandwidth with a combination of shaped and shared weights. Oversubscribing the available egress bandwidth is legal and permitted by IOS. If the switch is configured this way, shaped tx queues take priority over shared tx queues. For example, if tx queues 1 and 2 are each shaped to 33% of the egress bandwidth, and tx queues 3 and 4 share with weights of 25 each, what happens to the guarantee for the shared tx queues? It cannot be met, because more than 100% of the available egress bandwidth has been configured. Here’s how the interface is configured:

Switch(config)#int gi1/0/26
Switch(config-if)#srr-queue bandwidth shape 3 3 0 0
Switch-38(config-if)#srr-queue bandwidth share 1 1 25 25

When this port becomes completely oversubscribed on all tx queues, the tx queues drain like this:

- tx queue 1 and 2 output roughly 33% each

- tx queue 3 and 4 output roughly 15% each

The term “roughly” is used because packet sizes affect overall bandwidth, and packet sizes vary. When all packets across all tx queues are the same size, you can expect bandwidth utilization close to the percentages above.

Based on this example, the egress logic gives shaped queues priority over shared queues when all tx queues are congested.
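A simple way to reason about an oversubscribed configuration is to serve the shaped queues first and split whatever is left among the shared queues by weight. The Python sketch below is an idealized model built on that assumption, not a description of the hardware, and oversubscribed_drain_pct is a hypothetical name. For the configuration above it predicts about 16.7% for tx queues 3 and 4, somewhat above the roughly 15% observed; the text attributes such differences to packet sizes and the scheduler’s 1-3% granularity. The model does not handle shaped queues that alone exceed 100%.

```python
def oversubscribed_drain_pct(shape: list[int], share: list[int]) -> list[float]:
    """Idealized drain percentages when every tx queue is congested.

    Assumed model: shaped queues are served first at 100/weight percent each;
    whatever is left is split among the shared queues by share weight.
    """
    shaped = [100 / s if s else 0.0 for s in shape]
    leftover = max(0.0, 100 - sum(shaped))
    shared_total = sum(w for s, w in zip(shape, share) if s == 0)
    result = []
    for s, w, rate in zip(shape, share, shaped):
        if s:
            result.append(round(rate, 1))
        else:
            result.append(round(leftover * w / shared_total, 1))
    return result


print(oversubscribed_drain_pct([3, 3, 0, 0], [1, 1, 25, 25]))  # [33.3, 33.3, 16.7, 16.7]
```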

2.6.4 What does the Expedite Queue do?

The expedite queue is egress tx queue 1. When the expedite queue is enabled for an interface, tx queue 1 is no longer shaped or shared: whenever a packet is enqueued to tx queue 1, the egress logic services it.

All weights (shaped or shared) for tx queue 1 are ignored, which must be taken into account when configuring the share weights for the other queues: the share weight configured for tx queue 1 is not included in the ratio computation. With the default weights of 25, 25, 25, 25 for tx queues 1-4, tx queues 2-4 are each guaranteed 33% of the egress bandwidth. As a best practice, when the expedite queue is enabled, configure a low share weight for tx queue 1 (e.g., 10)[3].

The shape weights assigned to the other tx queues are not affected by this; only the shape weight for tx queue 1 is ignored. The shape weight of each tx queue is independent of the other shape weights[4], so the bandwidth percentages those weights compute to still hold, provided the traffic from tx queue 1 does not eat into them.

2.6.5 Limiting Bandwidth on an Interface

In some cases, it may be desirable to limit the overall egress bandwidth of an interface to less than 100%. Use the interface-level CLI command “srr-queue bandwidth limit [weight]” to do this. The range of “weight” is 10 – 90, in percent of the egress bandwidth. When this command is configured, the available egress bandwidth is less than the physical bandwidth.
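Because shaping and sharing percentages are applied to the available bandwidth, a limit changes every downstream number. A minimal Python sketch, assuming (as the text’s use of “available” implies) that shape percentages apply to the limited bandwidth; it does not model the scheduler granularity or the QOS enable/disable defect noted in this section, and available_bps is a hypothetical name.

```python
def available_bps(link_bps: float, limit_pct: int = 100) -> float:
    """Egress bandwidth left after 'srr-queue bandwidth limit' (100 = no limit)."""
    if limit_pct != 100 and not 10 <= limit_pct <= 90:
        raise ValueError("srr-queue bandwidth limit accepts 10-90")
    return link_bps * limit_pct / 100


link = 1e9                       # 1 Gig interface
avail = available_bps(link, 50)  # limit 50 -> 500 Mb/s available
print(avail)                     # 500000000.0
print(avail / 25)                # 20000000.0: default shape weight 25 (4%) of what is available
```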

An interesting side note is that this command works even if QOS is disabled on the switch. An even more interesting side note is that the bandwidth limit actually enforced by the scheduler changes slightly when QOS transitions from disabled to enabled. The CLI configuration does not change, but the hardware gets reprogrammed and the bandwidth limit values are somehow modified. As of this writing, this has been identified as a defect and will be corrected. This is the behavior for IOS images 12.2(46)SE and earlier.

2.7 Detailed Description of QOS Label Table

There are some predefined Labels that are of interest. Many of the predefined labels come into play outside the scope of this discussion.

Label          Description
-------------- -------------------------------------
0              Default for QOS disabled
1              Default for QOS enabled (ports untrusted)
2 – 65         DSCP 0 – 63. All the DSCP values map to these labels (2-0x41) (trust dscp)
66 – 74        COS Values 0 – 7 map to these labels (0x42-0x4A) (trust cos)
79             COS0 pass through DSCP
80             Default disabled DSCP (for PBR interaction)
143 – 205      Reserved Disabled DSCP

The QOS label table is not modified directly; it is modified indirectly when the dscp-output-q or cos-output-q tables are modified.

The QOS label table gives the ingress queue mapping as well as the COS – DSCP re-write values. For the purposes of this document only the tx queue and tx thresholds have been discussed.

The entire QOS label table with default values can be seen at the end of this document.

3 Solutions and Strategies

This section shows how to use the egress queue behavior to maximize resources. Each example explains a problem and then shows how to configure the egress logic to remedy it.

The examples also show how to tell whether a port is congested and dropping packets. Sometimes the drops are obvious because packets go missing between the two ends of the connection. The final example in this section shows how to examine the switch behavior to see what type of traffic is being queued, and how to make the most of the available resources.

3.1 Quick and Easy Egress Buffer Optimizing Solution

This is for those seeking a minor tweak to fix a problem with the occasional egress packet drop. The quick answer is to increase the drop thresholds used by WTD.

There are 3 WTD thresholds per tx queue, and 4 tx queues per interface. In this example the WTD values for all 4 tx queues will be modified. By default the WTD values are either 100 or 200. These are percentages. The quick fix is to increase these values to 1000%.

Example:

Switch#config term
Switch(config)#mls qos queue-set output 1 threshold 1 1000 1000 50 1000
Switch(config)#mls qos queue-set output 1 threshold 2 1000 1000 50 1000
Switch(config)#mls qos queue-set output 1 threshold 3 1000 1000 50 1000
Switch(config)#mls qos queue-set output 1 threshold 4 1000 1000 50 1000
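When the same change has to be applied to many switches or to both queue-sets, generating the commands avoids typos in the argument order (threshold1, threshold2, reserved, maximum, the same order as the rows of “show mls qos queue-set”). A minimal Python sketch; queue_set_threshold_cmds is a hypothetical helper, the 3200 upper bound is taken from Run 10 below, and the 1-100 range for reserved is an assumption, so check the command reference for your IOS release.

```python
def queue_set_threshold_cmds(qset: int, settings: dict) -> list[str]:
    """Build 'mls qos queue-set output ... threshold ...' commands.

    settings maps tx queue (1-4) to (threshold1, threshold2, reserved, maximum).
    """
    if qset not in (1, 2):
        raise ValueError("queue-set must be 1 or 2")
    cmds = []
    for queue, (t1, t2, reserved, maximum) in sorted(settings.items()):
        if queue not in (1, 2, 3, 4):
            raise ValueError(f"tx queue must be 1-4, got {queue}")
        if not all(1 <= v <= 3200 for v in (t1, t2, maximum)):
            raise ValueError("thresholds are limited to 1-3200 percent")
        if not 1 <= reserved <= 100:
            raise ValueError("reserved is a percentage, 1-100")
        cmds.append(f"mls qos queue-set output {qset} threshold "
                    f"{queue} {t1} {t2} {reserved} {maximum}")
    return cmds


quick_fix = {q: (1000, 1000, 50, 1000) for q in range(1, 5)}
for cmd in queue_set_threshold_cmds(1, quick_fix):
    print(cmd)  # reproduces the four commands above
```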

3.1.1 Assumptions for this Solution

The following assumptions have been made with this solution:

- QOS has been enabled on the switch with the “mls qos” configuration command.

- The interfaces that are dropping packets are using queue-set 1. By default, queue-set 1 is the configured queue-set for all interfaces. If queue-set 2 is configured, apply the above commands to queue-set 2 instead.

- The WTD threshold values have not already been modified.

3.2 Optimize Single COS Traffic in non-stack

Single COS traffic is when the majority of the egress traffic leaves from a single tx queue across all interfaces, and the other tx queues are unused or lightly used. The output of “show mls qos interface <intf id> statistics” will show that most traffic is egressing with a single COS or DSCP value. Single COS traffic is an excellent case for showing how changes to the queue-set configuration affect throughput.

Goal for this case: show how to make QOS configuration changes that achieve better throughput than QOS disabled. QOS disabled works well for single COS traffic because all traffic is mapped to COS 0 anyway. However, as will be shown, the egress logic with QOS disabled does not take full advantage of the common buffer pool.

3.2.1 Test Case SETUP

To congest the interface, a very simple 2 ingress to 1 egress oversubscription is used. The 2 ingress ports will ingress traffic at line rate, with packets switched to a single egress port. The egress port is the port under test. Two ingress ports at line rate will easily exceed the egress bandwidth causing the port to congest. Then WTD will kick in to drop packets.

All ingress packets will use DSCP value 0. They are untagged packets.

Each ingress interface will simultaneously send a fixed amount of traffic into the switch. Thus depending upon the egress QOS configuration, different numbers of packets will egress the port.

The DSCP to egress tx queue mapping is not altered; the only QOS configuration changes during this test are to the queue-set. The ingress ports are set up to trust DSCP (“mls qos trust dscp”), and the test device sets DSCP 0 on the incoming packets. These packets map to egress tx queue 2 and drop threshold 1, so the queue-set modifications focus on tx queue 2. In the runs below, configuration changes refer to tx queue 2 unless multiple tx queues are explicitly stated to have been modified.

To see the DSCP to output queue mapping use “show mls qos maps dscp-output-q” command.

3.2.2 Execution and Results for Single COS traffic

The execution of this test shows an iterative approach to changing the egress configuration to achieve fewer packet drops than QOS disabled. Each run makes a small configuration change so that its impact on the results can be seen. For each run, the “Port 3 Egress Count” is given: the number of packets received by the tester. The higher this count, the fewer packets were dropped.

3.2.2.1 Test Case Execution Setup

Transmitted packets are 200 bytes (1 buffer each). Port 3 is the port under test. Counts are of packets sent and received by the external tester; the “Port 3 Egress Count” for each run is the number of packets received by the tester. The ingress counts on ports 1 and 2 are always 1000. A switch in the C3750E family was used for this test.

3.2.2.2 Run 1, QOS disabled, setting the benchmark.

Description: No QOS configuration; QOS is disabled. The egress count is the benchmark for this test to beat.

Port 3 Egress count: 1440

3.2.2.3 Run 2, QOS enabled, using defaults

Description: “mls qos” enabled, but nothing else. The ingress port is untrusted.

Port 3 Egress Count: 1099

Summary: Quite a drop off. When “mls qos” is enabled, IOS changes the buffers for the port under test.

3.2.2.4 Run 3, Trust DSCP on ingress

Description: The only change from Run 2 is trusting DSCP on ingress. No change is expected, since the packets map to the same tx queue.

Port 3 Egress Counts: 1099

Summary: just putting the ingressing packets into a trusted state doesn’t really change the buffering. This is expected.

3.2.2.5 Run 4, Increase the WTD thresholds

Description: Increase the WTD threshold value for threshold 1 from 200 to 400.

Port 3 Egress Counts: 1196

Summary: A nice bump, but not good enough. It does show that increasing the WTD value alone has an impact.

3.2.2.6 Run 5, Another Increase in WTD thresholds

Description: Increase the WTD threshold value for threshold 1 from 400 to 1000. Increase the maximum threshold value to 1000 as well.

Port 3 Egress Counts: 1197

Summary: Expected more than just 1 additional packet. This change did not have much impact.

3.2.2.7 Run 6, Yet another increase in WTD thresholds

Description: Increase the WTD threshold value for threshold 1 from 1000 to 2000. Increase the maximum threshold value to 2000 as well. Testing to see if doubling this will have any impact.

Port 3 Egress Counts: 1197

Summary: No impact. Not that surprising.

3.2.2.8 Run 7, Modify the reserved buffers in Queue-set 1

Description: Change the reserved buffers in queue-set 1 for tx queue 2 from 50% (the default) to 100%, and lower the threshold values back from 2000 to 1000.

Port 3 Egress Counts: 1197

Summary: No change in egress counts. Updating the reserved percentage affects the number of buffers given back to the common pool, but it does not change the percentage used for the threshold computation. Updating the reserved percentage by itself does not seem to have much effect.

3.2.2.9 Run 8, Increase the WTD again

Description: Keeping the same configuration for the reserved percentages made in the previous run, this time increase the WTD values from 1000 to 2000 to see if this will impact the egress counts.

Port 3 Egress Counts: 1197

Summary: No increase. The WTD values are having limited impact.

3.2.2.10 Run 9, ReAllocate the buffers amongst the Tx Queues

Description: modify the egress buffer allocation for the port under test. Give tx queue 2 50% of the allocated buffers. The other 3 queues share the other 50%. The WTD thresholds remain at 2000, reserved remains at 100%. See Notes for Run 9 below to see the configuration change.

Port 3 Egress Counts: 1397

Summary: A nice bump. It shows that allocating more buffers to tx queue 2 is a good strategy.

3.2.2.11 Run 10, Increase the WTD Again

Description: Keep the configuration from Run 9, and increase the WTD for threshold1 from 2000 to the maximum of 3200.

Port 3 Egress Counts: 1397

Summary: Consistent. Once again, increasing the WTD had no impact, even though the number of allocated buffers was increased in Run 9.

3.2.2.12 Run 11, Increase the buffers to Tx Queue 2 Again

Description: Increase the allocated percentage for tx queue 2 to 85%. The other tx queues are allocated 5% each. Keep the reserved value at 100%. See the Note for Run 11 below for the queue-set configuration.

Port 3 Egress Counts: 1677

Summary: A nice bump. This is also more than the 1440 benchmark achieved with QOS disabled (Run 1), which shows that egress resources can be allocated more efficiently for traffic mapped to a single tx queue with “mls qos” enabled.

3.2.2.13 Run 12, Decrease the buffers to Tx Queue 2

Description: Decrease the buffers allocated to tx queue 2 to observe the change in egress counts. The idea is to show how the egress counts can be affected by small changes in the allocated percentages. Give tx queue 2 80% of the allocated buffers; the other tx queues get 7%, 7%, and 6% respectively. See the Note for Run 12 below to view the configuration.

Port 3 Egress Counts: 1637

Summary: Still much better than QOS disabled, and it’s kinder to the other tx queues too.

3.2.2.14 Run 13, Further Decrease in buffers allocated to Tx Queue 2

Description: Decrease the buffers allocated to tx queue 2 to 58%, and give 14% to each of the other tx queues. This is much kinder to traffic on the other queues. See the Note for Run 13 below to view the final queue-set configuration.

Port 3 Egress Counts: 1462

Summary: Still better than mls qos disabled.
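The run results are easier to compare as drops and deltas against the Run 1 benchmark. The Python sketch below only re-arranges the counts reported above; it assumes every one of the 2000 offered packets that the tester did not receive was dropped at port 3.

```python
OFFERED = 2 * 1000   # ports 1 and 2 each send 1000 packets
BENCHMARK_RUN = 1    # QOS disabled

# "Port 3 Egress Count" for each run, copied from the results above.
EGRESS = {1: 1440, 2: 1099, 3: 1099, 4: 1196, 5: 1197, 6: 1197, 7: 1197,
          8: 1197, 9: 1397, 10: 1397, 11: 1677, 12: 1637, 13: 1462}

benchmark = EGRESS[BENCHMARK_RUN]
for run, egress in EGRESS.items():
    dropped = OFFERED - egress
    delta = egress - benchmark
    print(f"Run {run:2}: egress {egress}, dropped {dropped}, vs benchmark {delta:+}")

beats = [run for run, egress in EGRESS.items() if egress > benchmark]
print("Runs beating QOS disabled:", beats)  # [11, 12, 13]
```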

Notes for Runs 9 - 13:

Run 9: Here’s the configuration of queue-set 1 after the change. Tx queue 2 was given 50% of the total buffers for the port; all other queue-set items remain the same as in the previous run.

Switch#show mls qos queue-set 1
Queueset: 1
Queue : 1 2 3 4
----------------------------------------------
buffers : 20 50 15 15
threshold1: 100 2000 100 100
threshold2: 100 200 100 100
reserved : 50 100 50 50
maximum : 400 2000 400 400

Run 11: This run allocates the most buffers to a single tx queue. Tx queue 2 reserves 100%; the other tx queues still reserve 50%, which would be the next value to lower.

Switch#show mls qos queue-set 1
Queueset: 1
Queue : 1 2 3 4
----------------------------------------------
buffers : 5 85 5 5
threshold1: 100 3200 100 100
threshold2: 100 200 100 100
reserved : 50 100 50 50
maximum : 400 3200 400 400

Run 12: To show the impact of the buffer allocation, the amount given to tx queue 2 is decreased by 5 percentage points (from 85% to 80%).

Switch#show mls qos queue-set 1
Queueset: 1
Queue : 1 2 3 4
----------------------------------------------
buffers : 7 80 7 6
threshold1: 100 3200 100 100
threshold2: 100 200 100 100
reserved : 50 100 50 50
maximum : 400 3200 400 400

Run 13: This queue-set configuration lets tx queue 2 drop fewer packets than it did with QOS disabled, while taking fewer buffer resources from the other tx queues.

Switch#show mls qos queue-set 1
Queueset: 1
Queue : 1 2 3 4
----------------------------------------------
buffers : 14 58 14 14
threshold1: 100 3200 100 100
threshold2: 100 200 100 100
reserved : 50 100 50 50
maximum : 400 3200 400 400
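The four “buffers” rows above can be checked and turned back into configuration with a short script. A minimal Python sketch: buffers_cmd is a hypothetical helper, and the rule that the four allocations must total 100 is an assumption that all four examples happen to satisfy.

```python
# Buffer allocations (percent of the port's buffers for tx queues 1-4),
# copied from the "buffers" rows of the queue-set outputs above.
ALLOCATIONS = {
    "Run 9": (20, 50, 15, 15),
    "Run 11": (5, 85, 5, 5),
    "Run 12": (7, 80, 7, 6),
    "Run 13": (14, 58, 14, 14),
}


def buffers_cmd(qset: int, alloc: tuple[int, int, int, int]) -> str:
    """Build the matching 'mls qos queue-set output ... buffers' command."""
    if sum(alloc) != 100:
        # Assumption: allocations should total 100%, as every example above does.
        raise ValueError(f"allocations sum to {sum(alloc)}, expected 100")
    return "mls qos queue-set output {} buffers {} {} {} {}".format(qset, *alloc)


for run, alloc in ALLOCATIONS.items():
    others = sum(alloc) - alloc[1]
    print(f"{run}: tx queue 2 gets {alloc[1]}%, queues 1/3/4 share {others}%")
    print("  " + buffers_cmd(1, alloc))
```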

3.2.3 Conclusion and Additional Info

The queue-set configuration can be modified to decrease the number of drops for any tx queue. In this example, the first step toward an incremental increase in throughput (a decrease in drops) was to raise the threshold values (Run 4), which is essentially the same change made in the first example (3.1). Raising the threshold values alone has limited effectiveness: further large increases had no effect on throughput (Runs 5-7). The next big step toward increasing throughput on the port under test was to increase the number of reserved buffers allocated to the tx queue, which meant taking allocated buffers away from the other tx queues. Each port is given only so many buffers, and the queue-set distributes them among the queues, as described in detail in the queue-set section above. Runs 9-13 show different buffer allocations among the tx queues and their effect on throughput.

Depriving the other tx queues of reserved buffers may or may not be acceptable. If no traffic egresses the port(s) on those tx queues, their reserved buffers sit unused while the tx queue in use drops packets because of WTD. This depends on the traffic patterns. The goal of this example was to demonstrate how to modify the egress configuration to optimize for traffic on a single COS. Run 11 was the most aggressive in allocating buffers to a single tx queue, and its configuration comes closest to the goal of dedicating buffer resources to a single type of traffic.

Keep in mind that the CPU generates control plane packets (e.g., STP, CDP, RIP, …). These packets use tx queue 1 and tx queue 2, so some buffers must remain allocated to those tx queues. Under normal conditions, CPU-generated traffic is very small compared to the capacity of a 1 Gb/s link (or a 100 Mb/s link, for that matter). The maximum number of packets the CPU can generate out a physical interface is outside the scope of this document.

Because almost all of the traffic egressed from a single tx queue, the shape and share weight values of the egress servicing logic did not come into play. Tx queue 2 was configured as shared, and it consumed all of the egress bandwidth.

3.2.4 How to configure for traffic not using DSCP 0

In the example above, DSCP 0 was used to keep things simple. Which tx queue should be modified if the majority of the traffic is not DSCP 0? For example, what changes are needed if the DSCP value is 16 rather than 0?

First, determine which tx queue is being used. The preferred way is to view the output of “show mls qos interface Gig1/0/1 statistics”, which shows the ingress and egress counts for every DSCP and COS value on the interface. Find the most heavily used value in the “dscp: outgoing” section. In this case it is 16.

Next, look at the DSCP to output-q table. The command “show mls qos maps dscp-output-q” shows the tx queue for every DSCP value. Assuming the dscp-output-q table has not been modified and is still in its default state, DSCP 16 maps to tx queue 3, and the WTD comparison is against threshold 1.
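The default lookup can be sketched as a small function. It assumes the default dscp-output-q map groups DSCP values the same way the default COS map shown in section 3.3 groups COS values (DSCP 0-15 to tx queue 2, 16-31 to tx queue 3, 40-47 to tx queue 1, and 32-39 and 48-63 to tx queue 4, all on threshold 1). The output of “show mls qos maps dscp-output-q” on the switch is authoritative; the function name is hypothetical.

```python
def default_dscp_output_q(dscp: int) -> tuple[int, int]:
    """Return (tx queue, WTD threshold) for a DSCP value under the assumed
    default dscp-output-q map."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be between 0 and 63")
    if dscp <= 15:
        queue = 2
    elif dscp <= 31:
        queue = 3
    elif 40 <= dscp <= 47:
        queue = 1
    else:  # DSCP 32-39 and 48-63
        queue = 4
    return queue, 1  # the default map uses WTD threshold 1 for every DSCP value


for dscp in (0, 16, 24, 46, 48):
    queue, threshold = default_dscp_output_q(dscp)
    print(f"DSCP {dscp:2d} -> tx queue {queue}, threshold {threshold}")
```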

To give tx queue 3 50% of the port's allocated buffers:

Switch#config term

Switch(config)#mls qos queue-set output 1 buffers 20 15 50 15

To modify the tx queue 3 threshold values:

Switch(config)#mls qos queue-set output 1 threshold 3 2000 200 50 2000
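Two things are easy to get wrong in these commands: the four buffer percentages must add up to 100, and the threshold arguments are positional (threshold 1, threshold 2, reserved, maximum, in the same order as the rows of “show mls qos queue-set”). A hypothetical helper that builds and checks both commands could look like this. The numeric ranges it enforces are an assumption (some IOS releases accept smaller maximum values), so check them against the command reference for the release in use.

```python
def buffers_cmd(qset: int, allocations: list[int]) -> str:
    """Build the queue-set 'buffers' command; the four values are the
    percentages of the port's buffers given to tx queues 1-4."""
    if qset not in (1, 2):
        raise ValueError("queue-set must be 1 or 2")
    if len(allocations) != 4 or sum(allocations) != 100:
        raise ValueError("need four allocations that add up to 100")
    return f"mls qos queue-set output {qset} buffers " + " ".join(map(str, allocations))


def threshold_cmd(qset: int, queue: int, drop1: int, drop2: int,
                  reserved: int, maximum: int) -> str:
    """Build the queue-set 'threshold' command for one tx queue.
    Assumed ranges: drop thresholds and maximum 1-3200, reserved 1-100 (percent)."""
    if qset not in (1, 2) or queue not in (1, 2, 3, 4):
        raise ValueError("queue-set must be 1-2 and tx queue 1-4")
    if not all(1 <= v <= 3200 for v in (drop1, drop2, maximum)):
        raise ValueError("drop and maximum thresholds must be 1-3200")
    if not 1 <= reserved <= 100:
        raise ValueError("reserved threshold must be 1-100")
    return (f"mls qos queue-set output {qset} threshold {queue} "
            f"{drop1} {drop2} {reserved} {maximum}")


print(buffers_cmd(1, [20, 15, 50, 15]))
print(threshold_cmd(1, 3, 2000, 200, 50, 2000))
```

Rejecting a bad allocation before it reaches the switch is the point of the check: an allocation such as 25 25 25 30 fails immediately instead of at the CLI.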

3.3 Strategy for Using 2 WTD Thresholds

By default, every packet that egresses the switch is compared against the threshold 1 WTD value of its target tx queue; the default configuration does not use threshold 2 at all. By default, COS 0 and COS 1 are treated the same by the egress logic: both are enqueued to tx queue 2 and compared against WTD threshold 1.

The two threshold values can be used to differentiate and/or prioritize egressing traffic.

Prioritizing COS 1 traffic over COS 0 is a two-step process requiring two configuration commands. Step 1 is to remap COS 1 to a different WTD threshold than COS 0. In this example, WTD threshold 2 is considered higher priority than WTD threshold 1; which threshold is treated as higher priority is completely arbitrary. Step 2 is to modify the threshold 2 values in the queue-set configuration. By default, queue-set 1 is assigned to all interfaces, so queue-set 1 is modified.

Here is the COS to output queue mapping before the configuration change:

Switch#show mls qos maps cos-output-q

Cos-outputq-threshold map:

cos: 0 1 2 3 4 5 6 7

------------------------------------

queue-threshold: 2-1 2-1 3-1 3-1 4-1 1-1 4-1 4-1

Make the configuration change:

Switch# configure term

Switch(config)#! map COS 1 to threshold 2

Switch(config)#mls qos srr-queue output cos-map queue 2 threshold 2 1

Switch(config)#end

Switch#show mls qos maps cos-output-q

Cos-outputq-threshold map:

cos: 0 1 2 3 4 5 6 7

------------------------------------

queue-threshold: 2-1 2-2 3-1 3-1 4-1 1-1 4-1 4-1
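The effect of the remap can be modelled as a table update. This sketch copies the default map from the output above, applies the same change (COS 1 to tx queue 2, threshold 2), and renders the queue-threshold row the way the switch displays it. The helper names are hypothetical.

```python
# Default cos-output-q map from the output above: COS -> (tx queue, WTD threshold).
DEFAULT_COS_MAP = {0: (2, 1), 1: (2, 1), 2: (3, 1), 3: (3, 1),
                   4: (4, 1), 5: (1, 1), 6: (4, 1), 7: (4, 1)}


def remap_cos(cos_map, queue, threshold, *cos_values):
    """Return a copy of cos_map with the given COS values moved to (queue, threshold)."""
    updated = dict(cos_map)
    for cos in cos_values:
        updated[cos] = (queue, threshold)
    return updated


def render(cos_map):
    """Format the map like the queue-threshold row of 'show mls qos maps cos-output-q'."""
    return " ".join(f"{q}-{t}" for _, (q, t) in sorted(cos_map.items()))


new_map = remap_cos(DEFAULT_COS_MAP, 2, 2, 1)
print("before:", render(DEFAULT_COS_MAP))
print("after: ", render(new_map))
```

Only the COS 1 entry changes; COS 0 and COS 1 still share tx queue 2, which is why the queue-set change that follows only needs to touch tx queue 2.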

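Next, modify the queue-set configuration so that WTD threshold 2 for tx queue 2 is set to a higher percentage than threshold 1, which lets packets compared against threshold 2 occupy more of the queue before they are dropped.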

Here are the default values of queue-set 1:

Switch#show mls qos queue-set 1

Queueset: 1

Queue : 1 2 3 4

----------------------------------------------

buffers : 25 25 25 25

threshold1: 100 200 100 100

threshold2: 100 200 100 100

reserved : 50 50 50 50

maximum : 400 400 400 400

Make the configuration change:

Switch# configure term

Switch(config)#! change the WTD thresholds for tx queue 2

Switch(config)#! Threshold 2 is greater than Threshold 1

Switch(config)#mls qos queue-set output 1 threshold 2 100 400 50 400

Switch(config)#end

Switch#

With the changes, queue-set 1 now looks like:

Switch#show mls qos queue-set 1

Queueset: 1

Queue : 1 2 3 4

----------------------------------------------

buffers : 25 25 25 25

threshold1: 100 100 100 100

threshold2: 100 400 100 100

reserved : 50 50 50 50

maximum : 400 400 400 400

COS 0 traffic will now be dropped before COS 1 traffic, because on tx queue 2, threshold 1 (used by COS 0) is set to a lower value than threshold 2 (used by COS 1): 100% versus 400%.
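A simplified model of the WTD decision for tx queue 2 after these changes is sketched below. It is an illustration, not the switch's actual algorithm: it assumes one packet occupies one buffer, the queue is never serviced, the allocation of 100 buffers is a hypothetical number, and common-pool buffers are always available when a threshold is above 100%.

```python
# Queue-set 1, tx queue 2 after the change: threshold1 = 100, threshold2 = 400 (percent).
THRESHOLDS = {1: 100, 2: 400}
# COS -> WTD threshold on tx queue 2 after the cos-output-q remap.
COS_TO_THRESHOLD = {0: 1, 1: 2}


def simulate_wtd(packets, allocated, thresholds=THRESHOLDS, cos_map=COS_TO_THRESHOLD):
    """Enqueue packets (a list of COS values) into a queue that is never drained.

    A packet is dropped when the queue depth has already reached its WTD
    threshold, expressed as a percentage of the queue's allocated buffers.
    Returns the final queue depth and the number of drops per COS.
    """
    depth = 0
    drops = {cos: 0 for cos in cos_map}
    for cos in packets:
        limit = allocated * thresholds[cos_map[cos]] // 100
        if depth >= limit:
            drops[cos] += 1
        else:
            depth += 1
    return depth, drops


# 600 packets alternating COS 0, COS 1, COS 0, ...
depth, drops = simulate_wtd([i % 2 for i in range(600)], allocated=100)
print("queue depth:", depth, "drops per COS:", drops)
```

Once the queue holds 100 packets, every further COS 0 packet is dropped, while COS 1 packets are still enqueued because their limit is 400 packets; in this run all of the drops are COS 0 packets.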

DSCP and COS work the same way, because each COS or DSCP value maps to a tx queue and a threshold. The same WTD threshold prioritization could have been done for DSCP by modifying the DSCP to output-q mapping with the IOS configuration command “mls qos srr-queue output dscp-map queue <tx queue 1-4> threshold <WTD threshold 1-3> <DSCP values 0-63>”. The queue-set changes would remain the same (provided that the DSCP values map to output queue 2).

This example used a simple COS 0 and COS 1 scenario. A slightly more complex scenario would involve marking out-of-profile packets with the ingress policing logic. The marking process modifies the ingress DSCP value of the packet, and the DSCP value assigned to marked packets is known because it is configured by the user. That marked DSCP value would be mapped to a lower WTD threshold than the DSCP value of unmarked packets.

3.4 Simple Desktop Scenario

This solution scenario focuses on maximizing resources for a switch deployed in a desktop environment. The Ethernet switch is deployed in a closet and connected to end users in an office setting (e.g., a cube farm), at the Access Layer of the network. The end users will “typically” have a desktop workstation and/or an IP phone connected to one Ethernet interface on the switch. The switch has 1 active uplink to an aggregation device (the redundant uplink is not active). In this “typical” scenario there isn’t any internally switched traffic: all traffic should be considered “North/South”. From the switch’s perspective, the most probable congestion point is the uplink. The end user facing interfaces all run at 1 gigabit, and the lone active uplink is also 1 gigabit.

In this scenario, the traffic ingressing from the end users is not policed or marked; the DSCP values are accepted “as is”. The desktop facing interfaces have been put into the “trust dscp” state. Security features such as Port Security and 802.1X have not been enabled. There is badge access to the building, so once inside, an end user is “trusted”.

The goal of this solution example is a configuration that prevents packet drops due to congestion and gives priority to higher priority packets (keeping their latency low). The first step is to understand what types of traffic cross the switch. Since DSCP is trusted on the interfaces, which DSCP values are being sent and received by the end users? The per-DSCP ingress/egress statistics on the uplink port answer that question. Since none of the ingressing packets are modified by ACLs, the per-DSCP counts show packets as marked by the sending end station. When viewing uplink statistics, keep in mind that egressing traffic comes from the end user workstations, and ingressing traffic comes from the network and will be switched to the end user workstations.

Switch#show mls qos interface Gi1/0/24 statistics

GigabitEthernet1/0/24 (All statistics are in packets)

dscp: incoming

-------------------------------

0 - 4 : 2136597 0 0 0 56

5 - 9 : 0 0 0 2767088 0

10 - 14 : 0 0 0 0 0

15 - 19 : 0 3662 0 0 0

20 - 24 : 0 0 0 0 160923

25 - 29 : 0 0 0 0 0

30 - 34 : 0 0 588 0 0

35 - 39 : 0 0 0 0 0

40 - 44 : 1164996 0 0 0 0

45 - 49 : 0 2844335 0 621957 0

50 - 54 : 0 0 0 0 0

55 - 59 : 0 391354 0 0 0

60 - 64 : 0 0 0 0

dscp: outgoing

-------------------------------

0 - 4 : 15479669 0 0 0 0

5 - 9 : 0 4535 0 12 0

10 - 14 : 0 0 0 0 0

15 - 19 : 0 0 0 0 0

20 - 24 : 0 0 0 0 486691

25 - 29 : 0 0 0 0 0

30 - 34 : 0 0 0 0 2729

35 - 39 : 0 0 0 0 0

40 - 44 : 0 0 0 0 0

45 - 49 : 0 4488773 0 21 0

50 - 54 : 0 0 0 0 0

55 - 59 : 0 0 0 0 0

60 - 64 : 0 0 0 0

cos: incoming

-------------------------------

0 - 4 : 18793682 2767088 4542 160923 588

5 - 7 : 4009470 621957 2323394

cos: outgoing

-------------------------------

0 - 4 : 15497474 0 0 486691 0

5 - 7 : 4488773 21 3905417

Policer: Inprofile: 0 OutofProfile: 0

The end user workstations and devices are sending significant numbers of packets with DSCP values 0, 24, and 46. IOS is using DSCP 48 for its control packets. Some other DSCP values show insignificant packet counts; this example does not focus on them, but nothing in the configurations that follow will prevent packets with those DSCP values from being switched successfully.

DSCP 0 is best effort traffic, and more packets are marked with DSCP 0 than with any other DSCP value. DSCP 46 is VoIP traffic: Cisco IP phones set the DSCP value to 46, and it is high priority traffic. Packets with the other DSCP values are associated with other end user applications.
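Picking out the significant DSCP values can be automated by parsing the “dscp: outgoing” rows shown above. This is a sketch: the 1% cut-off for “significant” is an assumption chosen for illustration, the all-zero rows are omitted from the sample text, and the function names are hypothetical.

```python
import re

# "dscp: outgoing" rows from the Gi1/0/24 statistics above
# (all-zero rows omitted; each row lists counts for DSCP first..last).
DSCP_OUTGOING = """
0 - 4 : 15479669 0 0 0 0
5 - 9 : 0 4535 0 12 0
20 - 24 : 0 0 0 0 486691
30 - 34 : 0 0 0 0 2729
45 - 49 : 0 4488773 0 21 0
"""

ROW = re.compile(r"^\s*(\d+)\s*-\s*\d+\s*:\s*(.*)$")


def parse_counts(text: str) -> dict[int, int]:
    """Map each DSCP value to its packet count from 'first - last : n n n' rows."""
    counts: dict[int, int] = {}
    for line in text.splitlines():
        match = ROW.match(line)
        if match is None:
            continue  # skip blank lines and section headers
        first = int(match.group(1))
        for offset, value in enumerate(match.group(2).split()):
            counts[first + offset] = int(value)
    return counts


def significant(counts: dict[int, int], min_share: float = 0.01) -> list[int]:
    """DSCP values carrying at least min_share of all counted packets."""
    total = sum(counts.values())
    if total == 0:
        return []
    return sorted(d for d, n in counts.items() if n / total >= min_share)


counts = parse_counts(DSCP_OUTGOING)
total = sum(counts.values())
for dscp in significant(counts):
    print(f"DSCP {dscp:2d}: {counts[dscp]:>10} packets ({counts[dscp] / total:.1%})")
```

With this cut-off the sketch reports DSCP 0, 24, and 46, matching the values identified above; DSCP 48 (21 packets) falls well below it.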

3.4.1 Building a Configuration from traffic counts

What follows is an example of how to configure the switch based on the DSCP counts of the traffic on a specific switch. The goal of this step is to show how to configure the egress QOS logic to minimize latency and packet drops.

Disclaimer: Using traffic pattern counts from a single switch over a short period of time is not the supported means of configuring the egress QOS logic on a switch. It is done here for instructional purposes only. The egress QOS logic should be consistent across all switches in a network and should implement the QOS policy for that network.

To make this exercise more realistic some additional information is needed about this switch.

1. This is a 24 port switch. Ports 1-22 are connected to end user workstations, and ports 23 & 24 are used as uplinks. On this switch, port 23 is not in the forwarding state; it is probably blocked by STP.

2. Before any changes are made, all ports are using Queue-set 1. This is the default Queue-set for all ports.

3. “mls qos trust dscp” has been configured on all interfaces.

4. Since Cisco VOIP phones are present, the expedite queue will be enabled.

5. The Auto QOS configuration commands are a recommended way to easily configure a switch that has Cisco VOIP phones connected. This example will not use Auto QOS.

6. Since the traffic counts above for this switch show per-DSCP and per-COS counts, “mls qos” has already been enabled.

Because the uplink interfaces (Gi1/0/23 & Gi1/0/24) have a different role than interfaces Gi1/0/1 – Gi1/0/22, this example uses the two queue-sets to define the egress resources for interfaces with different roles. Queue-set 1 will be used for the desktop facing interfaces (Gi1/0/1 – Gi1/0/22), and queue-set 2 will be used for the network facing interfaces (Gi1/0/23 & Gi1/0/24).
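The role-based plan can be expressed as a small generator for the interface-level commands. It assumes the interface configuration command “queue-set 2” and the GigabitEthernet1/0/x naming seen in the statistics output; the function names are hypothetical. Because queue-set 1 is the default for every port, only the uplinks need a command.

```python
DEFAULT_QSET = 1  # every port uses queue-set 1 unless configured otherwise


def role_to_qset(port: int) -> int:
    """Ports 1-22 face desktops (queue-set 1); ports 23-24 are uplinks (queue-set 2)."""
    return 2 if port in (23, 24) else 1


def interface_config(ports, prefix="GigabitEthernet1/0/"):
    """Emit the 'queue-set' interface commands needed for the plan above.
    Ports that stay on the default queue-set need no command."""
    lines = []
    for port in ports:
        qset = role_to_qset(port)
        if qset != DEFAULT_QSET:
            lines += [f"interface {prefix}{port}", f" queue-set {qset}"]
    return lines


print("\n".join(interface_config(range(1, 25))))
```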

3.4.2 Egress Queue Strategy, What’s the plan