SD-WAN – VPN0 routing & control connections

01-21-2019 11:29 AM - edited 03-08-2019 05:33 PM

Hello community,

I have a question regarding underlay network routing (VPN0) and how it affects control connections and, ultimately, the ability to build IPsec tunnels.

Explanation of my current setup:

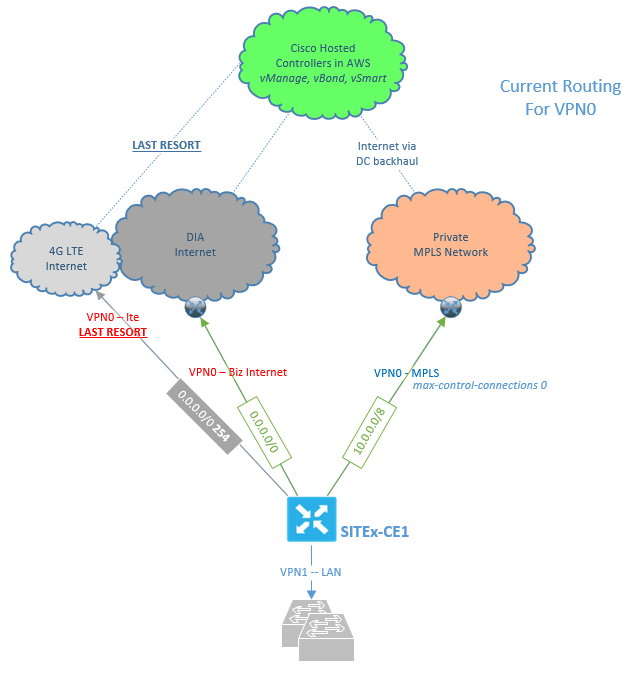

I currently have MPLS, Biz-Internet, and LTE connections on my remote-office ISR1K cEdge. The LTE connection is configured as last resort and is used only when both the MPLS and Biz-Internet connections are down. I have two static routes configured: 0.0.0.0/0 points at the Biz-Internet next-hop PER, and 10.0.0.0/8 points at my MPLS next-hop PER. I use a Cisco-hosted controller solution, so my controllers live in AWS and are reachable only via the public internet. MPLS can reach the internet via backhaul to our datacenter locations. Because of the problem explained below, I currently have the MPLS interface set to “max-control-connections 0” (I don't fully understand why, but I was previously told I needed “max-control-connections 0” to get these tunnels up).
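To make the routing consequence of this setup concrete, here is a minimal Python sketch of a longest-prefix-match lookup over the two static routes described above. The next-hop labels and the controller address (203.0.113.10, a documentation range) are hypothetical placeholders. It only shows that a public controller address matches nothing but the default route, so with this table the MPLS side has no route toward the controllers.

```python
import ipaddress

# Hypothetical model of the two VPN0 static routes described above.
# Next-hop labels are placeholders, not real PE addresses.
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "biz-internet-pe"),
    (ipaddress.ip_network("10.0.0.0/8"), "mpls-pe"),
]


def lookup(destination: str) -> str:
    """Return the next hop of the longest matching prefix."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in ROUTES if addr in net]
    # The most specific prefix (longest prefix length) wins.
    _, hop = max(matches, key=lambda m: m[0].prefixlen)
    return hop


if __name__ == "__main__":
    # 203.0.113.10 stands in for a cloud-hosted controller (hypothetical).
    print(lookup("203.0.113.10"))  # biz-internet-pe
    print(lookup("10.1.1.1"))      # mpls-pe
```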

Issue(s) I am experiencing:

When I lose my Biz-Internet connection, I also lose control connections to my hosted controllers and cannot make changes in this failure scenario (the edges are in vManage mode). The first issue I see is that VPN0 has a shared routing table, so adding multiple default routes to cover the need for both Biz-Internet and MPLS to reach the internet does not seem feasible. I have tried adding both default routes with equal cost, and this breaks one connection or the other. I have also tried a default route with a higher administrative distance (a.k.a. a floating static) on the MPLS connection, which does allow control connections to form on the MPLS link when Biz-Internet is down. However, when Biz-Internet is up, control connections will not form on MPLS and, as a result, tunnels won't build (the max-control-connections 0 command was removed for this test).

Just wondering what others are doing to cover this scenario. Are there any best practices for underlay routing when multiple connections need access to the internet? Am I just missing something obvious (very possible 😊)?


Labels: Other SD-WAN

Accepted Solutions

01-23-2019 10:08 AM - edited 01-23-2019 10:08 AM

Update on this: it appears that the reboot does not actually put the device in a good state; it just so happens that 8.8.8.8 worked after a reload:

```
TEST-CE1#ping 8.8.8.8 source 192.168.1.201
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 8.8.8.8, timeout is 2 seconds:
Packet sent with a source address of 192.168.1.201
!!!!!
Success rate is 100 percent (5/5), round-trip min/avg/max = 8/9/12 ms
TEST-CE1#ping 8.8.8.8 source 10.229.10.201
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 8.8.8.8, timeout is 2 seconds:
Packet sent with a source address of 10.229.10.201
!!!!!
Success rate is 100 percent (5/5), round-trip min/avg/max = 8/14/24 ms
==============================================================================
TEST-CE1#ping 1.1.1.1 source 192.168.1.201
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 1.1.1.1, timeout is 2 seconds:
Packet sent with a source address of 192.168.1.201
.....
Success rate is 0 percent (0/5)
TEST-CE1#ping 1.1.1.1 source 10.229.10.201
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 1.1.1.1, timeout is 2 seconds:
Packet sent with a source address of 10.229.10.201
.....
Success rate is 0 percent (0/5)
==============================================================================
TEST-CE1#ping 9.9.9.9 source 192.168.1.201
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 9.9.9.9, timeout is 2 seconds:
Packet sent with a source address of 192.168.1.201
.....
Success rate is 0 percent (0/5)
TEST-CE1#ping 9.9.9.9 source 10.229.10.201
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 9.9.9.9, timeout is 2 seconds:
Packet sent with a source address of 10.229.10.201
!!!!!
Success rate is 100 percent (5/5), round-trip min/avg/max = 48/48/48 ms
==============================================================================
TEST-CE1#ping 192.16.31.23 source 192.168.1.201
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 192.16.31.23, timeout is 2 seconds:
Packet sent with a source address of 192.168.1.201
!!!!!
Success rate is 100 percent (5/5), round-trip min/avg/max = 312/337/348 ms
TEST-CE1#ping 192.16.31.23 source 10.229.10.201
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 192.16.31.23, timeout is 2 seconds:
Packet sent with a source address of 10.229.10.201
!!!!!
Success rate is 100 percent (5/5), round-trip min/avg/max = 48/50/56 ms
```
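A rough Python sketch of the failure mode named in the bug title (locally sourced packets using the wrong interface with ECMP). It assumes, hypothetically, that 192.168.1.201 belongs to Biz-Internet and 10.229.10.201 to MPLS, and that the egress is picked by hashing the flow while ignoring which interface owns the source address; zlib.crc32 is only a stand-in for the real IOS XE load-sharing hash, which is not modeled. It is not meant to reproduce the exact ping results; it only shows how a packet can leave via the circuit that does not own its source IP.

```python
import zlib
from typing import Iterable

# Hypothetical model of two equal-cost 0.0.0.0/0 routes in VPN0.
# Mapping the source IPs to interfaces is an assumption for illustration.
ECMP_PATHS = ["biz-internet", "mpls"]
SOURCE_OWNER = {"192.168.1.201": "biz-internet", "10.229.10.201": "mpls"}


def ecmp_egress(src: str, dst: str) -> str:
    """Pick an egress by hashing the flow, ignoring who owns src.

    crc32 is a stand-in; real CEF load-sharing hashes differ.
    """
    key = f"{src}->{dst}".encode()
    return ECMP_PATHS[zlib.crc32(key) % len(ECMP_PATHS)]


def expected_egress(src: str) -> str:
    """Expected behavior for locally sourced traffic: leave via the
    interface that owns the source address."""
    return SOURCE_OWNER[src]


def report(destinations: Iterable[str]) -> None:
    for dst in destinations:
        for src in SOURCE_OWNER:
            got = ecmp_egress(src, dst)
            status = "ok" if got == expected_egress(src) else "WRONG INTERFACE"
            print(f"{src:>15} -> {dst:<15} egress={got:<12} {status}")


if __name__ == "__main__":
    report(["8.8.8.8", "1.1.1.1", "9.9.9.9"])
```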

TAC pointed me to this bug, which I think is our issue: CSCvn55971 - cedge: Locally sourced packets using wrong interface with ECMP

01-21-2019 12:25 PM

>>First issue I see is in VPN0 I have a shared routing table, so adding multiple default routes to cover the potential need for both Biz-Internet and MPLS to access the internet does not seem to be feasible.

It is feasible, and fine, to have more than one default route.

>>I have tried adding both default routes equal cost and this results in breaking one or the other connection.

That should not happen.

>>I have also tried a default route with a higher distance (a.k.a. floating static) on the MPLS connection which does allow the control connections to form on the MPLS link when Biz-Internet is down.

So do you actually have internet connectivity via your MPLS circuit as well? I didn't quite follow that.

01-22-2019 06:22 AM

What you are doing seems correct.

So "max-control-connections 0" allows the MPLS TLOC to be advertised to the vSmart via the OMP peering on biz-internet. This in turn allows IPsec connections to other branches to form over MPLS. Normally, control connections are expected to form on all colors. In this case you can't reach the controllers, because they are in the cloud and require internet access, which is not easily available from the MPLS circuit. The command lets MPLS work properly without the otherwise required control connections.
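A simplified Python model of the behavior described above, built only from this explanation and not from Cisco documentation. The `Tloc` class and `advertised_colors` function are hypothetical: a color is advertised if it has its own control connection, or if it is set to "max-control-connections 0" and some other color already has an OMP peering to vSmart. The second example shows the side effect discussed next: with biz-internet down, nothing is advertised from this edge.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Tloc:
    color: str
    reaches_controllers: bool
    # Stand-in for "max-control-connections"; None = not configured.
    max_control_connections: Optional[int] = None

    def has_own_control(self) -> bool:
        """Forms its own control connections if controllers are reachable
        and the limit has not been set to 0."""
        return self.reaches_controllers and self.max_control_connections != 0


def advertised_colors(tlocs: List[Tloc]) -> List[str]:
    """Simplified model of which TLOC colors reach vSmart via OMP."""
    omp_up = any(t.has_own_control() for t in tlocs)
    colors = []
    for t in tlocs:
        if t.has_own_control():
            colors.append(t.color)
        elif omp_up and t.max_control_connections == 0:
            # No control connection of its own; advertised over the
            # OMP peering that another color already established.
            colors.append(t.color)
    return colors


if __name__ == "__main__":
    biz_up = Tloc("biz-internet", reaches_controllers=True)
    biz_down = Tloc("biz-internet", reaches_controllers=False)
    mpls = Tloc("mpls", reaches_controllers=False, max_control_connections=0)
    print(advertised_colors([biz_up, mpls]))    # ['biz-internet', 'mpls']
    print(advertised_colors([biz_down, mpls]))  # []
```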

This behavior does have a side effect: if biz-internet is down, the device is not manageable. However, the data plane stays up for 12 hours by default, in what is called graceful restart, so even if you lose control connections, users are not affected. The control plane is effectively frozen during this time, though, so no configuration changes can be made.
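The 12-hour figure above works out to 43,200 seconds. A minimal Python sketch of the resulting check, assuming (as a simplification) that existing tunnels keep forwarding only as long as the control outage is shorter than the graceful-restart timer; `data_plane_survives` is a hypothetical helper, not a Cisco API.

```python
from datetime import timedelta

# 12 hours, the default mentioned above, expressed in seconds.
GRACEFUL_RESTART_DEFAULT = timedelta(seconds=12 * 60 * 60)  # 43200 s


def data_plane_survives(control_outage: timedelta,
                        gr_timer: timedelta = GRACEFUL_RESTART_DEFAULT) -> bool:
    """Simplified: existing tunnels keep forwarding if control connections
    come back before the graceful-restart timer runs out."""
    return control_outage < gr_timer


if __name__ == "__main__":
    print(int(GRACEFUL_RESTART_DEFAULT.total_seconds()))  # 43200
    print(data_plane_survives(timedelta(hours=4)))        # True
    print(data_plane_survives(timedelta(hours=13)))       # False
```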

Hopefully biz-internet being down is somewhat rare. However, if this becomes a problem, you would need to provide some sort of internet breakout on the MPLS path to reach the controllers. That way, if you form control connections over both paths, you keep manageability when either path is down or can't reach the controllers.

As for the testing: the typical routing design has multiple default routes via every available path. On MPLS you don't need a default route if you are not forming control connections to vManage and vSmart; however, you still need routes to reach the other vEdges. The concept here is that VPN 0 carries only IPsec, TLS, and routing-protocol traffic. Apart from direct internet access, there is no transit traffic in VPN 0: IPsec and TLS terminate in VPN 0, and transit traffic is forwarded in the service VPNs. You can't use a floating static the way you did in the test; IPsec tunnels will not form over MPLS because there is no route via the MPLS interface unless biz-internet is down.
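The floating-static point above can be sketched in Python as plain administrative-distance selection: for each prefix, only the usable routes with the lowest distance are installed, and equal distances give ECMP. The distances 1 and 10 and the interface labels are hypothetical, and withdrawing a route simply because its interface is "down" is a simplification of real static-route tracking.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set


@dataclass(frozen=True)
class StaticRoute:
    prefix: str
    interface: str
    distance: int  # administrative distance


def installed(routes: Iterable[StaticRoute], up: Set[str]) -> List[StaticRoute]:
    """For each prefix, install every usable route that has the lowest
    administrative distance (equal distances give ECMP)."""
    by_prefix: Dict[str, List[StaticRoute]] = {}
    for r in routes:
        if r.interface in up:  # routes via a down interface are withdrawn
            by_prefix.setdefault(r.prefix, []).append(r)
    result: List[StaticRoute] = []
    for candidates in by_prefix.values():
        best = min(c.distance for c in candidates)
        result.extend(c for c in candidates if c.distance == best)
    return result


def default_exits(routes: Iterable[StaticRoute], up: Set[str]) -> List[str]:
    """Interfaces that currently carry a 0.0.0.0/0 route."""
    return sorted(r.interface for r in installed(routes, up)
                  if r.prefix == "0.0.0.0/0")


if __name__ == "__main__":
    floating = [StaticRoute("0.0.0.0/0", "biz-internet", 1),
                StaticRoute("0.0.0.0/0", "mpls", 10)]
    ecmp = [StaticRoute("0.0.0.0/0", "biz-internet", 1),
            StaticRoute("0.0.0.0/0", "mpls", 1)]
    both = {"biz-internet", "mpls"}
    print(default_exits(floating, both))      # ['biz-internet']
    print(default_exits(floating, {"mpls"}))  # ['mpls']
    print(default_exits(ecmp, both))          # ['biz-internet', 'mpls']
```

With the floating static, MPLS only gets a default route once biz-internet is gone, which matches why control connections (and tunnels) would not come up over MPLS while biz-internet is healthy.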

01-22-2019 12:38 PM - edited 01-22-2019 12:42 PM

@ekhabaro & @David Aicher Thank you for the replies and the explanation. The "max-control-connections 0" behavior makes complete sense to me now. In my case I do have internet connectivity via my MPLS path, and I'm adding the second default route so that control connections stay up if biz-internet fails. Not having this has already bitten me, which is why I am changing it. I did end up opening a TAC case because I was seeing too much variability in behavior (partly my fault, partly not; read below).

One thing I want to mention first: I was missing a source address in my firewall rules allowing the MPLS interface to reach the internet on the TLS/DTLS ports. This added to my confusion, but was not the overall issue.

After a ~2-hour TAC call, this is what we came up with:

When I add the second equal-cost default route to a running cEdge that currently has only one, there are issues. I end up in one of three states:

1. I can ping 8.8.8.8 out of only one of the two interfaces.

2. I can't ping 8.8.8.8 out of either interface.

3. In some cases, the cEdge loses its control connections and can't reconnect.

* In states 1 and 2, control connections do seem to build on both paths most of the time.

But if I add the second default route and then reboot the cEdge, it comes back up and everything looks healthy (able to ping 8.8.8.8 out of both interfaces, with control connections built from both).

The current theory is that the second default route is not being "programmed" correctly when it is added while the device is running, whereas a fresh boot with the dual-default-route config programs it correctly. It seems specific to the ISR platform (ISR1K in my case). I uploaded logs and they are still looking into it.

Also, for clarity: I am on IOS XE 16.10.1, and the hardware is a C1111-8PLTEEA.

01-31-2019 06:36 AM

Thanks for posting that bug. Default routing in VPN0 confused me as well. My understanding is that both underlay interfaces will be in VPN0. While VPN0 does share a common routing table, each interface will only use routes learned on its own interface.

Say you have gi 0 and gi 1 in VPN0. You will never see gi 1 use a route learned on gi 0, even if it's a more specific route.
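A minimal Python sketch of the model described in this post, in which a lookup for traffic sent out of an interface only considers routes learned on that same interface. This reflects the poster's stated understanding rather than verified platform behavior (the reply that follows frames it in terms of colors), and the interface names and 203.0.113.0/24 prefix are hypothetical.

```python
import ipaddress
from typing import Optional

# Hypothetical VPN0 routes, each tagged with the interface it was learned on.
ROUTES = [
    ("0.0.0.0/0", "gi0"),
    ("0.0.0.0/0", "gi1"),
    ("203.0.113.0/24", "gi0"),  # more specific, but learned on gi0 only
]


def lookup_from(interface: str, destination: str) -> Optional[str]:
    """Longest-prefix match restricted to routes learned on `interface`."""
    addr = ipaddress.ip_address(destination)
    candidates = [ipaddress.ip_network(prefix) for prefix, ifc in ROUTES
                  if ifc == interface and addr in ipaddress.ip_network(prefix)]
    if not candidates:
        return None
    return str(max(candidates, key=lambda net: net.prefixlen))


if __name__ == "__main__":
    print(lookup_from("gi0", "203.0.113.5"))  # 203.0.113.0/24
    print(lookup_from("gi1", "203.0.113.5"))  # 0.0.0.0/0
```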

01-31-2019 11:38 PM - edited 01-31-2019 11:40 PM

@Will Kerr, please don't be confused by this bug. As I explained in this topic, routing via default routes and building data-plane tunnels follow "colors" logic.
