
vPC and MAC Address Learning Riddle


01-11-2014 01:50 PM

I've been working through an interesting scenario in my head, and I'm convinced the answer is a simple one that I'm overlooking. However, I've been at it for a while, so I decided to post the concept here and get more eyes on it.

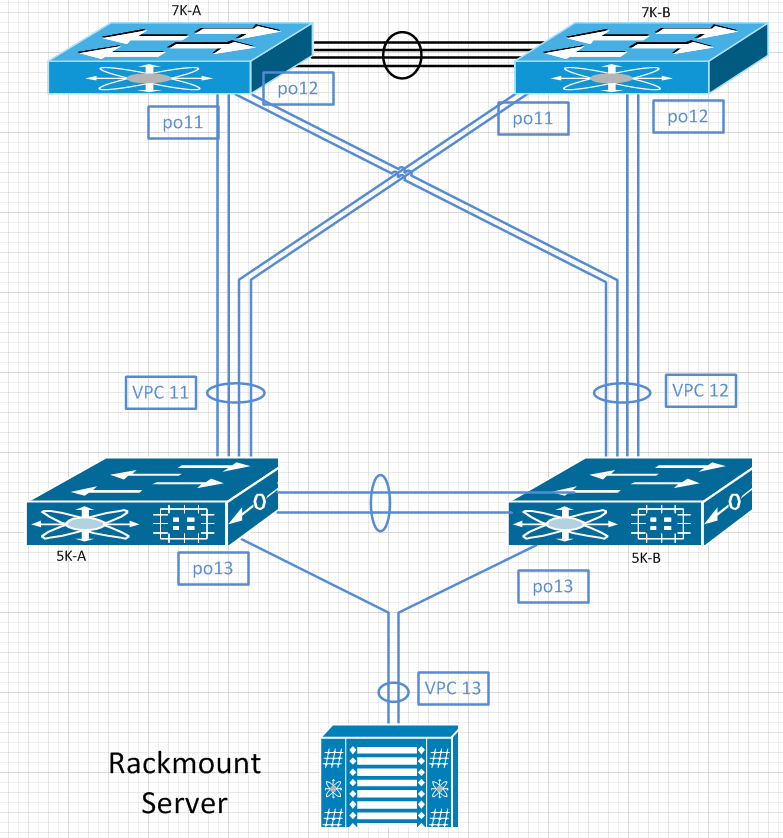

Let's say your topology includes a pair of Nexus 7Ks, a pair of Nexus 5Ks, and a single server running VMware ESXi (let's say the vSwitch is the Nexus 1000v). This is just a simple rackmount server without anything special like a Cisco VIC: just a pair of NICs connected to the same vSwitch.

Because of this, we can choose between essentially two vSwitch load-sharing policies. In vSphere, the default policy is "route based on originating virtual port ID", which isn't identical to source MAC hashing but results in the same general behavior. Let's say we're using the same policy on our Nexus 1000v. Effectively, a single source in the hypervisor will only send traffic up one link. This means that traffic from this virtual machine should only ever go to one Nexus 5K in the pair.
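To make the pinning behavior concrete, here is a minimal Python sketch. Both functions are simplified stand-ins (a modulo on the port ID and a CRC32 of the MAC), not the actual vSphere or Nexus 1000v algorithms, and the NIC names, port ID, and MAC address are hypothetical. What they share with the real policies is that the destination never enters the decision, so every flow from one VM leaves on the same uplink.

```python
import zlib

UPLINKS = ("vmnic0", "vmnic1")  # hypothetical names for the server's two NICs


def pick_by_port_id(virtual_port_id: int) -> str:
    """Simplified 'originating virtual port ID' policy: depends only on the port."""
    return UPLINKS[virtual_port_id % len(UPLINKS)]


def pick_by_src_mac(src_mac: str) -> str:
    """Simplified source-MAC hash: depends only on the source MAC address."""
    mac_bytes = bytes.fromhex(src_mac.replace(":", ""))
    return UPLINKS[zlib.crc32(mac_bytes) % len(UPLINKS)]


vm_port, vm_mac = 7, "00:50:56:aa:bb:cc"  # hypothetical VM port ID and MAC
destinations = [f"10.0.0.{i}" for i in range(1, 50)]

# The destination is never an input, so the set of uplinks used has one member.
port_id_links = {pick_by_port_id(vm_port) for _ in destinations}
mac_links = {pick_by_src_mac(vm_mac) for _ in destinations}
print(port_id_links, mac_links)
```

Because the uplink is a function of the source alone, the upstream 5K that owns that NIC is the only one that ever receives this VM's frames.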

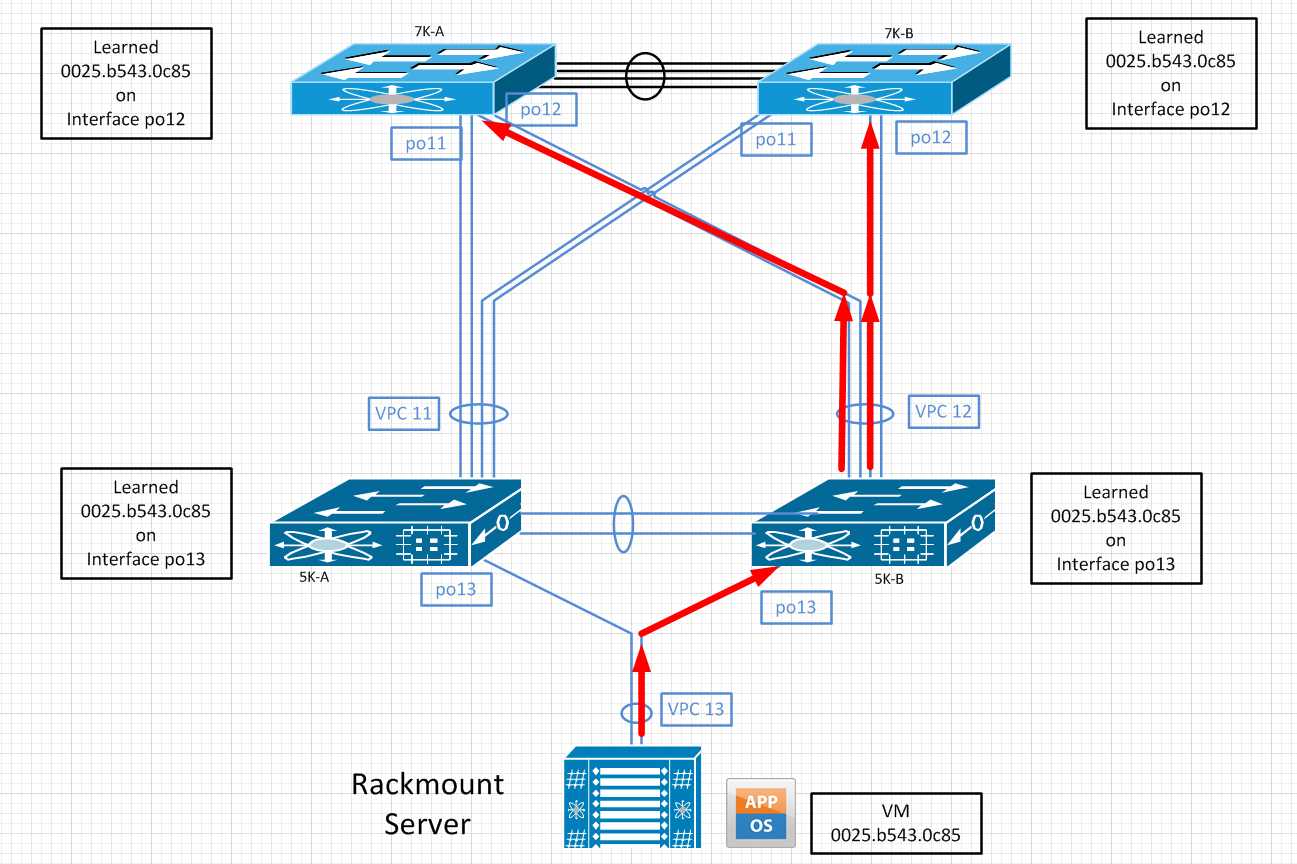

The result, as shown above, is that this virtual machine's MAC address is learned on po13 on both 5Ks (synchronized to the other switch because of the vPC peer-link). Traffic from the 5K to the 7K pair is load balanced based on IP hash, so it could go to either 7K from there, but that doesn't matter because, again, vPC synchronization will result in that MAC address being learned on port channel 12 in any case. I don't see a problem in this topology because each switch only ever learns the MAC address on one interface (even if that interface is a port channel).
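The synchronization argument above can be modeled in a few lines of Python. This is a deliberately simplified sketch, not NX-OS behavior in detail: a vPC pair is treated as two MAC tables where an entry learned on a vPC port channel is copied to the peer under the same port-channel number. The switch names, the MAC address, and the assumption that the VM is pinned to the NIC cabled to N5K-B (the 5K that the 7K pair sees on po12) are all hypothetical.

```python
class VpcPeerPair:
    """Two vPC peers whose MAC tables are kept in sync over the peer-link (simplified)."""

    def __init__(self, names):
        self.tables = {name: {} for name in names}  # switch name -> {MAC: interface}
        self.moves = 0  # times the receiving peer saw the MAC on a different interface

    def learn(self, receiving_switch, mac, port_channel):
        old = self.tables[receiving_switch].get(mac)
        if old is not None and old != port_channel:
            self.moves += 1
        # Learn locally, then sync to the peer, which installs the entry
        # against its own member of the same vPC (same port-channel number).
        for table in self.tables.values():
            table[mac] = port_channel


n5k = VpcPeerPair(["N5K-A", "N5K-B"])
n7k = VpcPeerPair(["N7K-1", "N7K-2"])
vm_mac = "00:50:56:aa:bb:cc"  # hypothetical VM MAC

for flow in range(8):
    # Source-based policy: the VM stays pinned to the NIC cabled to N5K-B (assumed).
    n5k.learn("N5K-B", vm_mac, "po13")
    # N5K-B's IP hash may send a flow to either N7K, but both N7Ks
    # receive it on the same vPC, which the post numbers po12.
    n7k.learn("N7K-1" if flow % 2 == 0 else "N7K-2", vm_mac, "po12")

print(n5k.tables, n5k.moves)
print(n7k.tables, n7k.moves)
```

Every table ends up pointing at a single interface (po13 on the 5Ks, po12 on the 7Ks) and no moves are recorded, which matches the "no problem" conclusion for the source-pinned case.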

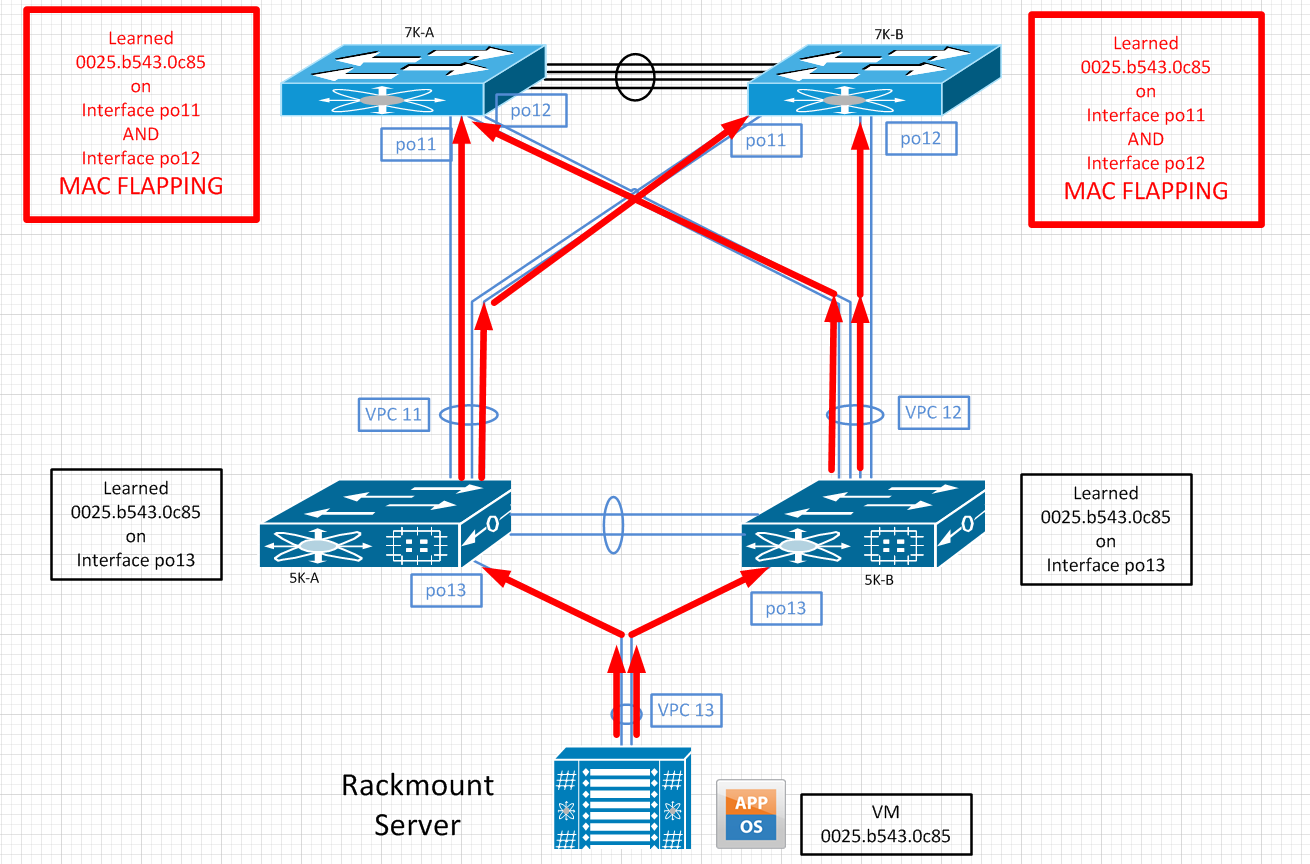

However, things get more interesting when we start load-balancing based on IP hash on the server as well. In this scenario, traffic from a single virtual machine (and therefore single MAC address) could egress out of either link - it all depends on the IP source and destination.
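The difference with IP hashing is that the destination now feeds into the uplink choice. The sketch below uses a plain XOR of the source and destination addresses modulo the number of uplinks; this is an assumed simplification, not the exact vSphere or Nexus 1000v hash, and the addresses and NIC names are hypothetical.

```python
import ipaddress

UPLINKS = ("vmnic0", "vmnic1")  # hypothetical names for the server's two NICs


def pick_by_ip_hash(src_ip: str, dst_ip: str) -> str:
    """Simplified IP hash: XOR the two addresses, then take it modulo the uplink count."""
    src = int(ipaddress.ip_address(src_ip))
    dst = int(ipaddress.ip_address(dst_ip))
    return UPLINKS[(src ^ dst) % len(UPLINKS)]


vm_ip = "10.1.1.10"  # one VM, so one source MAC for every flow below
for dst in ("10.2.2.20", "10.2.2.21"):
    print(dst, pick_by_ip_hash(vm_ip, dst))
# 10.2.2.20 vmnic0
# 10.2.2.21 vmnic1
```

Two flows from the same VM (and therefore the same source MAC) leave on different NICs, so both 5Ks receive frames sourced from that one MAC address.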

From a 5K perspective, we don't have a problem - even if we send traffic up both links to the 5K pair, vPC synchronization once again saves the day and the MAC address tables on both 5Ks read correctly that the MAC address for our virtual machine has been learned on po13.

This gets to be a problem when you realize that both 5K switches are connected to the 7K pair on separate vPCs (and separate port channel IDs). Since traffic from our virtual machine will be entering the 7K pair from both 5Ks, this MAC address will be learned on po11 and po12 alternately - a less brutal way of describing a MAC flap. vPC synchronization doesn't help us here because the MAC address is being learned on multiple interfaces - vPC synchronization only helps us if the other switch is receiving frames from the same source on the same interface name, which is not the case here.
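The flap described above can be reproduced with a small Python model. It is a sketch under stated assumptions: the server uses the same simplified XOR IP hash as a stand-in for the real policy, N5K-A is assumed to reach the 7K pair on po11 and N5K-B on po12, the addresses are hypothetical, and the 7K pair is collapsed into one synchronized MAC table because vPC sync keeps both 7Ks identical here.

```python
import ipaddress

FIVE_KS = ("N5K-A", "N5K-B")
# Assumed mapping: the N7K pair sees N5K-A on po11 and N5K-B on po12.
PO_ON_7K = {"N5K-A": "po11", "N5K-B": "po12"}


def server_uplink(src_ip, dst_ip):
    """Simplified server-side IP hash choosing which N5K receives the frame."""
    h = int(ipaddress.ip_address(src_ip)) ^ int(ipaddress.ip_address(dst_ip))
    return FIVE_KS[h % len(FIVE_KS)]


class N7KPair:
    def __init__(self):
        self.mac_table = {}  # MAC -> port channel (kept identical on both peers by vPC sync)
        self.moves = 0

    def learn(self, mac, port_channel):
        old = self.mac_table.get(mac)
        if old is not None and old != port_channel:
            self.moves += 1  # same MAC now seen on a different interface: a MAC move
        self.mac_table[mac] = port_channel


vm_mac, vm_ip = "00:50:56:aa:bb:cc", "10.1.1.10"  # hypothetical VM
n7k = N7KPair()
for dst in ["10.2.2.20", "10.2.2.21", "10.2.2.22", "10.2.2.23"]:
    n5k = server_uplink(vm_ip, dst)  # flows alternate between N5K-A and N5K-B
    n7k.learn(vm_mac, PO_ON_7K[n5k])

print(n7k.mac_table[vm_mac], n7k.moves)  # po12 3
```

Four flows produce three moves between po11 and po12. Synchronization cannot merge the entries because the two port-channel numbers differ.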

As a result of this, I'm concerned I'm missing something fundamental to how these components work. Anyone who can help and point out my gap in knowledge is certainly welcome to do so.


01-11-2014 03:58 PM

Hello Matt,

I understand the issue. Why not use a back-to-back vPC topology?

In your scenario, you are connecting a "single switch" (the N5K vPC domain) to two different switches above that are also talking to each other.

Although the vPC loop-prevention mechanism in the N5K vPC domain protects you from L2 loops, this design can still cause MAC issues and duplicate frames.

Regards.

Richard


01-11-2014 04:04 PM

I had considered back-to-back vPC but was interested to know if that's the right direction. So in this topology, the only proper configuration is a back-to-back vPC, and my concerns about MAC flapping are valid?

EDIT: Better yet, what if the two switches on top weren't Nexus switches - or even capable of any kind of MLAG? Wouldn't we have the same problem?


01-11-2014 06:21 PM

Hello Matt,

I can see a lot of problems here, not just MAC flapping but L2 inconsistency in general.

If the upstream switches are a different kind of box, you cannot connect them in a loop: either the upstream box will learn the same MAC from two different sources, or spanning tree will block some of the links.

A back-to-back vPC topology is definitely the best option, whether or not VLANs overlap between the boxes. It keeps all paths active with no L2 issues.
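For contrast with the flapping case, here is the same simplified model with a back-to-back vPC, sketched in Python. The assumption is that all links between the two pairs form a single vPC on each side, so the 7K pair sees either 5K on the same port channel; "po10", the addresses, and the XOR hash are hypothetical stand-ins.

```python
import ipaddress

FIVE_KS = ("N5K-A", "N5K-B")
# Back-to-back vPC: links from both N5Ks join one vPC on the N7K side.
# "po10" is a hypothetical port-channel number.
PO_ON_7K = {"N5K-A": "po10", "N5K-B": "po10"}


def server_uplink(src_ip, dst_ip):
    """Simplified server-side IP hash choosing which N5K receives the frame."""
    h = int(ipaddress.ip_address(src_ip)) ^ int(ipaddress.ip_address(dst_ip))
    return FIVE_KS[h % len(FIVE_KS)]


mac_table, moves, n5ks_used = {}, 0, set()
vm_mac, vm_ip = "00:50:56:aa:bb:cc", "10.1.1.10"  # hypothetical VM
for dst in ["10.2.2.20", "10.2.2.21", "10.2.2.22", "10.2.2.23"]:
    n5k = server_uplink(vm_ip, dst)
    n5ks_used.add(n5k)  # traffic still spreads across both N5Ks
    port_channel = PO_ON_7K[n5k]
    if mac_table.get(vm_mac, port_channel) != port_channel:
        moves += 1
    mac_table[vm_mac] = port_channel

print(sorted(n5ks_used), mac_table[vm_mac], moves)  # ['N5K-A', 'N5K-B'] po10 0
```

Both 5Ks still carry the VM's traffic (all paths active), but the 7K pair learns the MAC on one interface only, so no moves occur.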

Regards,

Richard


01-13-2014 08:35 AM

Thanks, Richard. So what I'm hearing is that when you have two pairs of vPC-capable switches like this, and you're not using anything like FabricPath, then back-to-back vPC is really the way to go. Right?

Not that I don't believe you (because I do), but I'm very interested in any documentation you know of that discusses this scenario and recommends a back-to-back topology. I'm going to use this example in a few teaching exercises, and it'd be nice to have material to cite.

Thanks!


01-13-2014 10:28 AM

Hello Matt,

Back-to-back vPC is definitely the best option for L2 without FabricPath.

As for documentation, the only related point I know of is that back-to-back vPC is fully supported, as long as the two vPC domains use different domain numbers.

You can also find some documentation on DCI (Data Center Interconnect) that mentions back-to-back vPC.

Please rate useful answers.

Best Regards.

Richard


01-13-2014 10:47 AM

Interesting discussion. And great that you took the time to produce diagrams to clearly explain the problem.

Nothing to add over and above what Richard has already stated, but on the documentation side of things, it may be worth reviewing the Design and Configuration Guide: Best Practices for Virtual Port Channels (vPC) on Cisco Nexus 7000 Series Switches.

If you look at the section "vPC Deployment Scenarios" starting on page 8, it discusses single-sided and double-sided (back-to-back) vPC. For the latter, it lists a number of benefits that double-sided vPC offers over single-sided vPC.

Regards


01-13-2014 11:00 AM

Thanks for adding that, Steve.

It's always great to see a well-explained question.


02-10-2015 01:51 AM

Hi,

I have a question about a similar setup too.

Can you please help me?

https://learningnetwork.cisco.com/thread/80886

Thanks


02-27-2015 07:46 AM

The normal spanning-tree process would put either Po11 or Po12 into blocking, assuming the N7K is the root. The vPC peer-link is ALWAYS forwarding, but that doesn't mean both uplinks would be forwarding.

Dan


10-17-2018 07:34 AM

You are simply not considering spanning tree.

STP will block either po11 or po12 and prevent the loop from occurring.
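The last two replies attribute the fix to spanning tree. As a rough model of that claim, taking the premise that (because the peer-link always forwards) the N5K pair reaches the root through either po11 or po12, the sketch below applies STP's root-port tie-breakers in order: lowest root path cost, then lowest sender bridge ID, then lowest sender port ID. It omits the root bridge ID (the same root is assumed on both uplinks) and the receiving port ID. Every cost, bridge ID, and port ID is hypothetical, and this is not a full 802.1D implementation.

```python
from __future__ import annotations

from typing import NamedTuple


class Bpdu(NamedTuple):
    """Fields STP compares when choosing a root port; lower wins, field by field."""
    root_path_cost: int
    sender_bridge_id: int
    sender_port_id: int


def elect_root_port(received: dict[str, Bpdu]) -> tuple[str, list[str]]:
    """Return (root port, blocked ports) given the best BPDU heard on each uplink."""
    root_port = min(received, key=lambda port: received[port])  # tuple comparison
    blocked = sorted(port for port in received if port != root_port)
    return root_port, blocked


# Hypothetical values: equal costs, and both vPC uplinks hear BPDUs from the
# same N7K bridge ID, so the sender port ID breaks the tie.
uplinks = {
    "po11": Bpdu(root_path_cost=500, sender_bridge_id=0x8000_0000_0000_0001, sender_port_id=0x900A),
    "po12": Bpdu(root_path_cost=500, sender_bridge_id=0x8000_0000_0000_0001, sender_port_id=0x900B),
}

root_port, blocked = elect_root_port(uplinks)
print(root_port, blocked)  # po11 ['po12']
```

Under this model, only one uplink forwards, so the VM's frames reach the 7K pair through a single port channel and the MAC address cannot alternate between po11 and po12. The trade-off is that the blocked uplink carries no traffic.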
