
Nexus: Unable to ping SVI over vPC


03-22-2016 04:52 AM - edited 03-08-2019 05:03 AM

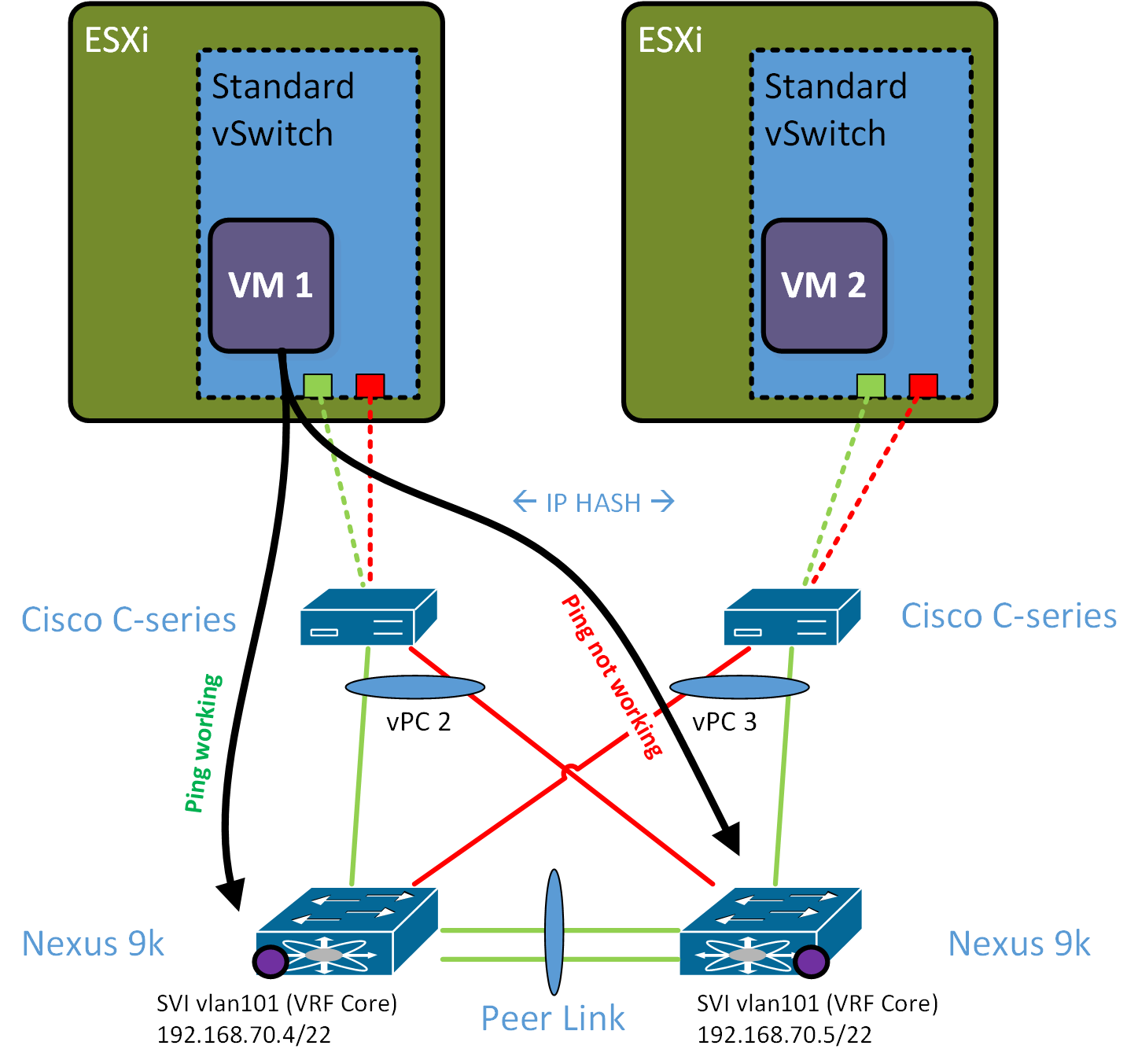

I am struggling a bit with a problem in a small data center environment. I drew a visual representation of the problem (see the image above).

The DC has two ESXi hosts connected to a Nexus 9K pair running vPC. On the ESXi side there is a standard vSwitch with two uplink NICs teamed active/active, with the load-balancing policy set to IP hash, so it should effectively handle traffic as a port channel. On the Nexus side, the link towards each ESXi host is a vPC whose underlying port channel on each Nexus is static (channel-group X mode on).

This setup works fine for east-west L2 traffic between the ESXi hosts, and between the ESXi hosts and a storage system. It also works fine for L2 traffic going up to the rest of the network.

In preparation for migrating the gateway function to the Nexus pair, we have set up an SVI for the VM VLAN 101 on each Nexus switch.

We are now seeing L3 connectivity issues when trying to reach these SVIs. For example:

We can ping from VM1 to the VLAN 101 SVI on the left Nexus switch, but not to the corresponding SVI on the right Nexus switch. The same goes for VM2, which can reach the SVI on the right Nexus switch but not the SVI on the left Nexus switch.

My first thought was that this was related to the load balancing (IP hash), and I expected odd-numbered IPs to be able to reach one Nexus switch and even-numbered IPs to reach the other. However, it looks as if it's only possible to reach the left Nexus from the left ESXi host, and likewise on the right-hand side.
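The odd/even expectation above can be checked against a simplified model of IP-hash uplink selection. This sketch assumes the vSwitch XORs the full 32-bit source and destination addresses and takes the result modulo the number of active uplinks; VMware's exact implementation may differ, and the VM and SVI addresses below are hypothetical.

```python
import ipaddress


def ip_hash_uplink(src_ip: str, dst_ip: str, num_uplinks: int) -> int:
    """Pick an uplink index for a src/dst IPv4 pair.

    Simplified model (assumption): XOR the two 32-bit addresses and take the
    result modulo the number of active uplinks.
    """
    src = int(ipaddress.IPv4Address(src_ip))
    dst = int(ipaddress.IPv4Address(dst_ip))
    return (src ^ dst) % num_uplinks


if __name__ == "__main__":
    vm1 = "10.1.101.10"  # hypothetical VM1 address
    svis = {"left SVI": "10.1.101.2", "right SVI": "10.1.101.3"}  # hypothetical
    for name, ip in svis.items():
        # With 2 uplinks only the lowest bit of the XOR decides the uplink.
        print(name, "-> uplink", ip_hash_uplink(vm1, ip, 2))
```

With two uplinks, only the lowest bit of the XOR matters, so a flow's uplink depends on the combined odd/even parity of the two addresses. This only models the VM-to-switch direction; the return path is chosen independently on the Nexus side.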

I also wondered whether this could be related to the vPC loop-prevention mechanism, but I don't think it should be: I am pinging the SVI on the Nexus switch itself, so the reply is a new packet generated by the pinged Nexus, which should be able to send it back to the pinging VM through its local vPC member port.
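The loop-prevention rule referred to above can be reduced to a simplified predicate on a frame leaving a vPC member port. This is a sketch under stated assumptions: the function and parameter names are hypothetical, and real Nexus hardware enforces the rule in the forwarding ASIC, possibly with exceptions not modelled here.

```python
def may_egress_vpc_member(ingress_via_peer_link: bool, peer_member_port_up: bool) -> bool:
    """Simplified vPC loop-avoidance check (hypothetical model).

    A frame that arrived over the peer link is not sent out a vPC member port
    while the peer switch's member port in the same vPC is up, because the
    peer is expected to forward it on its own member port.
    """
    return not (ingress_via_peer_link and peer_member_port_up)


if __name__ == "__main__":
    # ICMP echo reply generated by the pinged switch's own SVI: it never
    # crossed the peer link, so the rule does not block it locally.
    print(may_egress_vpc_member(ingress_via_peer_link=False, peer_member_port_up=True))
    # Frame bridged in over the peer link while the peer's member port is up.
    print(may_egress_vpc_member(ingress_via_peer_link=True, peer_member_port_up=True))
    # Same frame after the peer's member port has failed: the rule is relaxed.
    print(may_egress_vpc_member(ingress_via_peer_link=True, peer_member_port_up=False))
```

Under this model, a reply sourced from the local SVI is never caught by the rule, which matches the poster's reasoning.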

I also noticed that pinging in the other direction fails too: I can't ping VM1 from the right Nexus. When I check the ARP table, the entry shows as incomplete.

Should I be able to ping the SVIs from a server connected over a vPC in this manner? Forget ESXi for a moment: is the setup sound?

Could this be an ESXi issue, where the host drops packets because it sees them as coming in on the wrong interface? ARP is one example I had in mind here.


03-22-2016 01:24 PM

Hello.

As far as I understand, there is no problem reaching outside networks.

If so, did you try assigning a different MAC address to each SVI on the vPC peers?


03-22-2016 01:43 PM

Hi, and thank you for your input.

You are correct about reaching outside networks: the VMs can reach other hosts within the same subnet, and they can get out of the subnet. They are currently routed by an ASA firewall.

I don't follow you on the MAC address assignment. Are you suggesting that I manually set the MAC addresses used by the SVIs? I've never done that before, and I'm not sure what difference it would make.

//Clayton


11-29-2017 10:22 AM - edited 11-29-2017 10:25 AM

Hi Clayton,

Did you manage to resolve this issue? I’m seeing the exact same thing on a pair of 9ks.

In the debugs we've run, we can see ARP requests reaching the switch, but the switch doesn't respond to them!

Thanks


11-29-2017 10:31 AM

Does the ping work if you shut down the straight link between the UCS and the N9k and leave only the cross link up? Also, are you able to ping from one N9k to the other N9k's SVI? And from the N9k switches to the host VM?

Also, what is the software version that you are running on N9k?

Could you also share the following outputs from both N9ks:

- `show ip arp vlan 101`

- `show mac address-table vlan 101`
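Once the two outputs above are captured from both peers, a quick diff shows which host resolves on one peer but is INCOMPLETE (or missing) on the other. This sketch assumes a simplified, whitespace-separated layout (IP address, age, MAC address or `INCOMPLETE`, interface); real `show ip arp` output carries extra header and flag text, so the parser simply skips any line that does not start with an IPv4 address. The sample data and function names are hypothetical.

```python
import ipaddress
from typing import Dict, List

# Hypothetical, simplified captures from the two vPC peers.
SAMPLE_LEFT = """IP ARP Table
Address         Age       MAC Address     Interface
10.1.101.10     00:01:12  0050.5601.0001  Vlan101
10.1.101.11     00:00:40  0050.5601.0002  Vlan101
"""
SAMPLE_RIGHT = """IP ARP Table
Address         Age       MAC Address     Interface
10.1.101.10     00:00:03  INCOMPLETE      Vlan101
10.1.101.11     00:00:40  0050.5601.0002  Vlan101
"""


def parse_arp(text: str) -> Dict[str, str]:
    """Map IP -> lower-case MAC, or "incomplete", from simplified ARP output."""
    entries: Dict[str, str] = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # blank or truncated line
        try:
            ipaddress.IPv4Address(fields[0])
        except ValueError:
            continue  # header or summary line, not an ARP entry
        entries[fields[0]] = fields[2].lower()  # "INCOMPLETE" -> "incomplete"
    return entries


def arp_mismatches(left_output: str, right_output: str) -> List[str]:
    """List IPs whose ARP resolution differs between the two vPC peers."""
    left, right = parse_arp(left_output), parse_arp(right_output)
    problems = []
    for ip in sorted(set(left) | set(right), key=ipaddress.IPv4Address):
        l_mac, r_mac = left.get(ip, "missing"), right.get(ip, "missing")
        if l_mac != r_mac:
            problems.append(f"{ip}: left={l_mac} right={r_mac}")
    return problems


if __name__ == "__main__":
    for problem in arp_mismatches(SAMPLE_LEFT, SAMPLE_RIGHT):
        print(problem)
```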

--Vinit


11-29-2017 10:38 AM - edited 11-29-2017 10:39 AM

Hi Vinit,

In my case it is a Windows host running LACP. The host's MAC address is shown on the correct interface on both 9ks; however, the ARP table on one of the 9ks shows the host as incomplete.

If I shut down the link from the offending switch to the server, so that traffic has to traverse the peer link, the switch then responds!

When the link comes back up, the ARP entry disappears and the host again can't ping the SVI.

Further testing: if we shut down the other link instead, leaving a single link between the host and the offending switch, we STILL can't ping the SVI, but we can still ping the OTHER 9k across the peer link.

At all times, Ethanalyzer shows that the offending switch is receiving the ARP request; it just doesn't reply to it when the host is connected directly to it.

Been working with TAC today and will post our resolution if we get one.


11-29-2017 10:43 AM

You should be able to ping all devices from the VM servers. Do you have a Layer 3 routed link between the 9ks?

Can you post the HSRP or VRRP config for VLAN 101 from the 9ks?

Which switch is the vPC primary, and does your HSRP/VRRP config match it? For example, if 9k-1 is the vPC primary, it should also be the VRRP master.
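The recommendation above (the vPC primary should also be the HSRP active router / VRRP master) can be written as a simple consistency check. This sketch assumes you have already collected each switch's state by hand, for example from `show vpc role` and `show hsrp brief` or `show vrrp`, and normalised it into the hypothetical dictionaries shown; the function name is illustrative. Because both vPC peers forward traffic sent to the FHRP virtual MAC, treat the alignment as a convention that keeps roles predictable rather than a strict forwarding requirement.

```python
from typing import Dict, List


def misaligned_switches(vpc_role: Dict[str, str], fhrp_state: Dict[str, str]) -> List[str]:
    """Return switches whose vPC role and FHRP state disagree.

    Assumed normalisation: vpc_role values are "primary" or "secondary";
    fhrp_state values are "active" (HSRP active / VRRP master) or "standby".
    """
    problems = []
    for switch, role in sorted(vpc_role.items()):
        is_primary = role == "primary"
        is_active = fhrp_state.get(switch) == "active"
        if is_primary != is_active:
            problems.append(switch)
    return problems


if __name__ == "__main__":
    roles = {"9k-1": "primary", "9k-2": "secondary"}  # hypothetical
    fhrp = {"9k-1": "standby", "9k-2": "active"}      # hypothetical
    print(misaligned_switches(roles, fhrp))
```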

HTH


11-29-2017 10:48 AM

We also have peer-gateway and ARP sync (ip arp synchronize) configured under the vPC domain, so I would expect the ARP tables to be accurate.
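What peer-gateway changes can be modelled as a routing decision on the destination MAC. In this simplified sketch (function, parameter names, and MAC values are hypothetical), a vPC peer routes a frame if it is addressed to its own router MAC or, when peer-gateway is enabled, to its peer's router MAC, instead of bridging it across the peer link. This targets hosts that reply to the source MAC of the packet they received rather than to the FHRP virtual MAC; it does not model ARP handling.

```python
def routes_locally(dst_mac: str, own_router_mac: str, peer_router_mac: str,
                   peer_gateway: bool) -> bool:
    """Would this vPC peer route (rather than bridge) a frame sent to dst_mac?"""
    dst = dst_mac.lower()
    if dst == own_router_mac.lower():
        return True  # addressed to this switch's own SVI MAC
    # With peer-gateway, the switch also acts as gateway for frames addressed
    # to its vPC peer's router MAC instead of sending them over the peer link.
    return peer_gateway and dst == peer_router_mac.lower()


if __name__ == "__main__":
    own, peer = "00de.fb00.0001", "00de.fb00.0002"  # hypothetical router MACs
    print(routes_locally(peer, own, peer, peer_gateway=True))
    print(routes_locally(peer, own, peer, peer_gateway=False))
```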


01-30-2018 09:07 AM

Did you ever fix this issue? We are seeing the same thing with a pair of N9ks running 7.0(3)I2(2a).

Cheers,

Nigel


01-30-2018 12:10 PM

Yeah, ours was a FEX programming bug. The fix was to recreate the port channel and upgrade NX-OS. I'll update with the bug ID that TAC provided when I get onto my laptop.


03-15-2019 12:21 PM

Hello, can you provide the bug ID number? We are facing the same issue on a pair of Nexus 56128 switches in vPC, with dual-homed FEXes connected.

Symptom: SW1 cannot ping the HSRP address of SW2 until we do a shut/no shut on interface vlan xxx on SW2.
