- Cisco Community

- Technology and Support

- Networking

- Switching

- (Possibly) spanning tree problem: Some ports stop forwarding aft

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

(Possibly) spanning tree problem: Some ports stop forwarding after blocking for long time

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-12-2012 08:07 AM - edited 03-07-2019 09:59 AM

This may or may not be a spanning tree problem but it's the first thing I'm thinking of.

We're growing a pretty large switch network with a combination of managed and unmanaged switches (yes I know, bad idea, tell that to Finance) that use Catalyst switches at the core and distribution layers, and a combination of unmanaged or managed Cisco SF series switches at the access layer. The unmanaged ones are there because of their physical size, and replacing them all with managed switches would require some major construction work to make room for them.

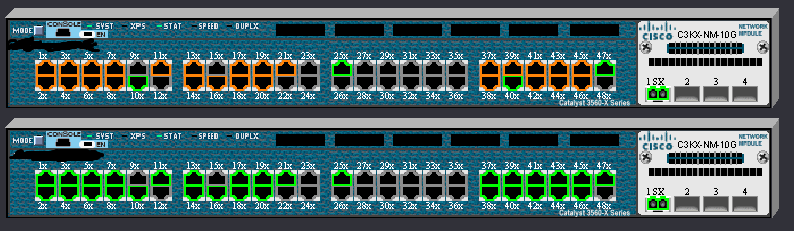

In one distribution closet I have two 3560-X switches cabled to the cores:

Ports gi1/1 are cabled to the cores. Ports 0/47 are cabled to each other so they're reachable in case one core line drops. And as you can see from the LED colours, the majority of the ports on one of the switches is in a blocking state; these ports are cabled to unmanaged switches at the access layer, and the corresponding ports on the other switch are in a forwarding state; this is my intended design and disconnecting one will cause the other to take over and start forwarding after a few seconds. I have rapid-pvst enabled too.

So for the most part I have a pair of fwd / blk ports for each unmanaged access switch. Some of the switches are managed and support rapid-pvst; these ones have a pair of fwd / fwd ports, Gi0/25 and Gi0/40 in this case, and the managed switch at the access layer is doing the blocking. This too is my intended design and, for the most part, works like the unmanaged switch ports do.

However, if I try to replace an unmanaged switch with a managed switch at the access layer, and I reconfigure the affected ports to use the managed switch, both ports will stay in a fwd state but disconnecting the working port will break the connection to the access-layer. I can't ping anything. Even more interesting is the display from the affected SBS switch:

25 GE1 Enabled Disabled STP Designated 20000 128 Forwarding 32768-44:e4:d9:31:e0:64 128-49 20007 1

26 GE2 Enabled Disabled STP Root 20000 128 Forwarding 16385-f8:66:f2:44:0a:00 128-10 7

Ports 25 and 26 on the SBS (in this case a SBS24) are showing incorrect values for bridge IDs. By comparison, a working SBS will have these values:

49 GE1 Enabled Disabled STP Alternate 20000 128 Discarding 16385-f8:66:f2:44:0a:00 128-40 7 0

50 GE2 Enabled Disabled STP Root 20000 128 Forwarding 12289-f8:66:f2:58:94:80 128-40 4

(Yes, I changed the priority on the 3560-X switches and that's why you see 16384 and 12288 as the priority values. The cores have priorities 0 and 4096.)

In the working SF200-48, I'm seeing one port as root and one as alternate as I should. It even shows being connected to ports Gi0/40 (128-40) on the 3560-X switches. I can disconnect one cable and the other takes over as I intended after a few seconds. If I move the non-working SF200-24 to ports Gi0/40, it works properly. If I move it to any other pair of ports that haven't been used, it works properly. If I put it back on ports Gi0/10 it stops working and shows the previous state.

I checked and rechecked the configs for ports Gi0/10 and they are identical to those for Gi0/40, Gi0/26 and others I've tested. For the unmanaged switches I'm using static access VLANs, and for the managed switches I'm using Dot1Q trunks, and having the managed switches provide the static access ports.

Is this happening because I've had an unmanaged switch connected to the affected ports for a long time? Is there some counter or something I have to clear before this will work again? Do I have to reload the 3560-X switches?

--

(13 NOV 2012) Colour me confused. The MAC address that the affected SF200 switch sees on its GE1 port (44:e4:*) belongs to the affected SF200 switch. It should be seeing the 3560-X switch at MAC address f8:66:f2:58:94:80 with RSTP priority 12289. This doesn't happen when connected to a different set of ports. I wonder why the switch is seeing itself instead of the 3560-X.

- Labels:

-

Other Switching

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-12-2012 06:18 PM

Can you provide a diagram of your network showing how all devices are connected together?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-13-2012 05:37 AM

All right, first, an overall view of the Catalyst switch network:

Switch names redacted. The core is a pair of 4900M switches.The distribution switches are either pairs of 3560-X switches or pairs of 3750G switches set up as stacks. The 3560-X switches are not set up as stacks, virtual or otherwise. The pair of switches on the bottom are the 3560-X pair I'm dealing with. The standalone switches are not using any redundant links so, in theory, they shouldn't be concerned about spanning-tree protocol.

I configured rapid-pvst such that the cores are priorities 0 and 4096, and the 3750 stacks and one of two 3560-X are priority 16384. I changed the other 3560-X to 12288, but I realize it doesn't make a difference because whichever switch has the lower value MAC address would take priority over the other. I'll do some VLAN priority changes later, to do load balancing, but right now all VLANs have the same priority values.

Now at my pair of 3560-X switches, I have this:

Not including the fiber links back to the cores (the Visio templates don't have the SFP modules), I have a combination of SF100D unmanaged switches and SF200 managed switches.

A SF100D will cause one link to forward and the other to block, like the first image shows. A SF200 will cause both links to forward, because the SF200 is supposed to do the blocking. This works well unless I use a pair of ports for the SF200 that were recently used by a SF100D; the SF200 shows the display I posted earlier, and exhibits the behaviour I described.

With the SF100D, the 3560-X port pair is an access port on a specific VLAN. With the SF200 the port pair is a Dot1Q trunk pair with a specific set of allowed VLANs, and the SF200 presents access ports instead.

--

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-15-2012 08:14 AM

Reloading the affected switch -- the switch that had higher RSTP priority -- allowed the affected ports to work again. Both the SF100D and SF200 access switches worked correctly and disconnecting one line would keep the access switches accessible. Reconvergence would take from 15 to 30 seconds with portfast disabled, but this should be fine for our application.

I don't consider reloading (rebooting) a switch a solution, however. Is there a register or a log kept somewhere in the switch's memory that tells it to stop servicing a given port? I had already tried shutdown / no shutdown and clear interface without any effect. If reloading is the only fix, then it was a good thing the other switch took over instantly -- I guess that's the "rapid" part of "rapid-pvst."

I had given up on other possible solutions when I tried connecting a host PC to one of the affected ports. The link light was green, but it wouldn't pass packets. It was like the affected ports were completely catatonic until I reloaded the switch.

Discover and save your favorite ideas. Come back to expert answers, step-by-step guides, recent topics, and more.

New here? Get started with these tips. How to use Community New member guide