Shared Subnets | Campus In-house Data Centers

09-29-2017 07:08 AM - edited 03-08-2019 12:12 PM

Gents,

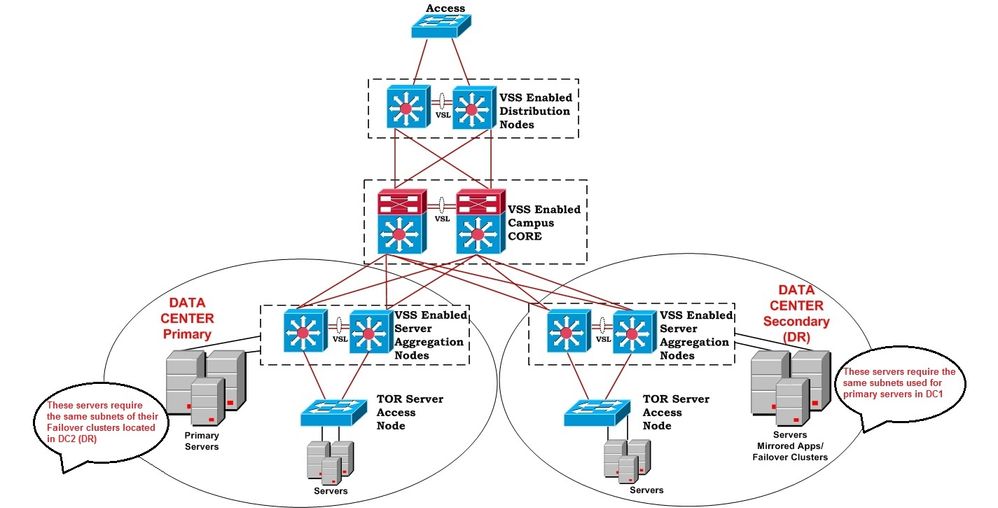

I'm working on a campus network that has two data centers (within the same premises) hosting primary and secondary servers to ensure smooth failover.

Today, our client's server team came up with a new requirement: two subnets shared between both data centers, so that some servers can mirror data with their corresponding failover clusters.

Please have a look at the attached topology and let me know if we can avoid running VRRP to share the subnets between both data centers.

- Labels: Other Switching

09-29-2017 07:22 AM

Hi,

Where does the inter-VLAN routing take place for the VLANs in both data centers?

If it is the core VSS, then you can simply trunk all VLANs to both data centers and they will all be in the same subnets/VLANs. BTW, are you using Catalyst switches in the data centers? If you have not purchased these yet, Nexus is a better choice for data center use.
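As a rough sketch of the trunking option above: assuming hypothetical shared VLANs 100 and 200 and a hypothetical uplink `Port-channel10` from a server aggregation switch toward the core VSS (none of these values come from the thread), the uplink on each data center side might look something like this:

```
! Hypothetical VLAN IDs and interface name, for illustration only
vlan 100,200
!
interface Port-channel10
 description Uplink to core VSS
 switchport
 ! Encapsulation line is only needed on platforms that also support ISL
 switchport trunk encapsulation dot1q
 switchport mode trunk
 switchport trunk allowed vlan add 100,200
```

This only stretches the subnets if the uplinks are Layer 2 and the gateway SVIs for those VLANs live on the core; it does not apply as-is to routed uplinks.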

HTH

09-29-2017 07:27 AM

Thanks, Reza, for the prompt response!

All the SVIs reside on the Distribution/Aggregation nodes, and between them and the Core we run OSPF.

Both data centers are in different OSPF areas, similar to the distribution block.

Yes, these are Catalyst boxes that already exist in the topology; otherwise Nexus would have been the better choice.

BR,

Umer

09-29-2017 07:47 AM

Hi Umer,

Since you are routing between the 2 data centers, this is a little harder to do. One option could be to add a separate physical Layer 2 link between the 2 data centers through the core, carry only that one specific VLAN on that link, and deploy the SVI for it on the core. All other VLANs are then still routed on the aggregation switches, only that VLAN has its gateway on the core, and traffic between that VLAN and the rest of the VLANs is routed through the core. Are these physical servers or VMs?

Is the server team sure that these servers have to be on the same subnet for clustering to work?

Maybe you want to have a talk with the vendor of the app they use.

HTH

09-29-2017 07:54 AM - edited 09-29-2017 07:58 AM

Hello Reza,

I tried proposing this to the client but their network team doesn't allow any SVIs on the campus Core.

Yes, their server team has already communicated with the vendor, and the vendor confirmed the requirement of two shared subnets for their newly deployed virtual machines.

The other option is to run VRRP between the Server Aggs of both DCs, but that would require additional interfaces.

Any other ideas, Reza?

Thanks again for the usual support.

Kind Regards,

Umer

09-29-2017 08:17 AM

Hi Umer,

I have never done VRRP or HSRP between 2 sets of VSS. If it is possible, how about a simple physical Layer 2 link carrying only that VLAN between the aggregation switches, running VRRP or HSRP?

If HSRP and VRRP are not possible, then do the same thing but with one SVI on one set of aggregation switches acting as the gateway for both data centers. No matter how you do this, it gets messy.
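To make the first option more concrete, here is a minimal sketch of HSRP on a shared VLAN across a dedicated Layer 2 link. The VLAN ID (100), the 10.10.100.0/24 addressing, the HSRP group number, and the interface names are all assumptions for illustration; nothing in the thread specifies them, and this has not been validated on a pair of VSS systems.

```
! --- Server Agg VSS in DC1 (all values hypothetical) ---
vlan 100
 name SHARED-SRV
!
interface TenGigabitEthernet1/1/1
 description Dedicated L2 link to DC2 Server Agg
 switchport
 ! Encapsulation line is only needed on platforms that also support ISL
 switchport trunk encapsulation dot1q
 switchport mode trunk
 switchport trunk allowed vlan 100
!
interface Vlan100
 ip address 10.10.100.2 255.255.255.0
 ! Virtual gateway address that the servers in both DCs would use
 standby 100 ip 10.10.100.1
 standby 100 priority 110
 standby 100 preempt
!
! --- Server Agg VSS in DC2 (all values hypothetical) ---
interface Vlan100
 ip address 10.10.100.3 255.255.255.0
 standby 100 ip 10.10.100.1
 ! Default priority (100) is kept, so DC1 is the active gateway
```

With this arrangement the DC1 aggregation pair would normally be the active gateway, and servers in DC2 reach it over the dedicated link. For the single-SVI fallback, the DC2 side would carry VLAN 100 at Layer 2 only, with no `interface Vlan100` at all.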

HTH

09-30-2017 04:23 AM - edited 09-30-2017 04:31 AM

Hello Reza,

I'm thinking of arranging transceivers to add L2 links to carry the shared VLANs.

Moreover, the plan is to keep the networks of these shared SVIs in a separate OSPF area (shared by both server aggs), which are already connected to the campus Core through the backbone area.
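A possible shape for that OSPF piece, assuming a hypothetical process ID 1, a hypothetical area 50, and illustrative prefixes for the two shared subnets (none of these are given in the thread):

```
! On both server aggregation VSS pairs (all values hypothetical)
router ospf 1
 network 10.10.100.0 0.0.0.255 area 50
 network 10.10.200.0 0.0.0.255 area 50
```

Whether the shared SVIs should form OSPF adjacencies across the new L2 links or be made passive is a design decision the thread does not settle, so it is left out of this sketch.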

I'll keep you posted on the final outcome.

Kind Regards,

Umer
