Cisco Community > Collaboration > Unified Communications Infrastructure

6.1.5 to 9.1.2 Jump Upgrade Fails


01-16-2014 05:47 PM - edited 03-19-2019 07:46 AM

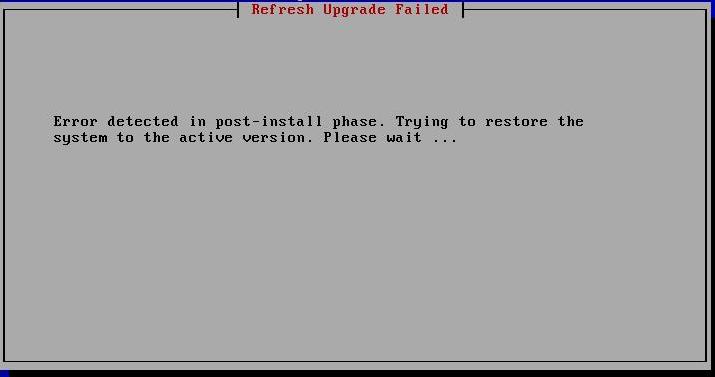

The 6.1.5 to 9.1.2 jump upgrade fails. I receive the refresh upgrade failure screen, and then a file system error after it attempts to roll back to the previous version.

Here is a summary of the environment and the steps so far.

6.1.5 SU1 cluster: Pub, 2 Subs

- Installed three 6.1.5 SU1 servers using the v8 OVA on a UCS C240 M3 running VMware ESXi 5.0.

- Changed the VM guest OS to RHEL 3 (32-bit).

- Hostnames, IP addresses, DNS, domain, and certificate settings entered during install all match the current cluster. The NTP server has a different IP address but is reachable in the lab; the DNS servers are not.

- Restored the entire production cluster DRS backup to the new VMs. The NTP server on the VM Pub kept the lab IP address entered when the VMs were installed from bootable media in the first step; it did not revert to the production IP. Since it didn't revert, I assume the NTP server setting isn't part of the DRS backup?

- Installed the latest version (v3) of the refresh COP file.

- Attempted the refresh upgrade to 9.1.2 on the Pub.

Any ideas? Is the lack of DNS reachability during the refresh upgrade an issue? Does the old NTP server IP still exist in the database somewhere? Under NTP Server in OS Administration, it shows my lab NTP server IP, and that server is reachable.
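One way to double-check the reachability side of these questions is to test from a workstation on the same lab segment as the VMs. The sketch below is a minimal Python 3 example under that assumption: it sends a standard SNTP client request (RFC 4330) to UDP port 123 and asks the local resolver to look up a hostname. The function names are hypothetical helpers, not part of CUCM, and a passing result only shows that the lab network can reach those services; it says nothing about what the refresh upgrade itself checks.

```python
import socket
import struct

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01).
NTP_EPOCH_OFFSET = 2208988800


def build_sntp_request() -> bytes:
    """Return a 48-byte SNTP client request: LI=0, VN=3, Mode=3 (0x1B)."""
    return b"\x1b" + b"\x00" * 47


def parse_transmit_time(packet: bytes) -> float:
    """Return the server's transmit timestamp (bytes 40-47) as Unix time."""
    if len(packet) < 48:
        raise ValueError("NTP packet shorter than 48 bytes")
    seconds, fraction = struct.unpack("!II", packet[40:48])
    return seconds - NTP_EPOCH_OFFSET + fraction / 2**32


def ntp_reachable(server: str, timeout: float = 2.0) -> bool:
    """True if `server` answers an SNTP request on UDP 123 with a server-mode reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        try:
            sock.sendto(build_sntp_request(), (server, 123))
            data, _ = sock.recvfrom(1024)
        except OSError:  # covers timeouts, unreachable hosts, and unresolvable names
            return False
    # Mode is the low three bits of the first byte; 4 means "server".
    return len(data) >= 48 and (data[0] & 0x07) == 4


def dns_resolves(hostname: str) -> bool:
    """True if the local resolver can resolve `hostname` to any address."""
    try:
        socket.getaddrinfo(hostname, None)
    except socket.gaierror:
        return False
    return True
```

For example, call ntp_reachable with the lab NTP server's IP and dns_resolves with the Pub's hostname; both values are placeholders you would substitute from your own lab.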

Thanks in advance.

Labels: UC Migration


01-30-2014 05:45 AM

Joseph,

Thanks for the reply.

There are no Tandberg devices on the cluster.

The production cluster shows only cmterm-7936-sccp-3-3-16.cop and cmterm-7936-sccp-3-3-20.cop as COP files in the output of "show version active".


02-06-2014 10:57 AM

I have the same problem.

1) I cannot upgrade the production cluster, so I replicated it in the lab: installed CUCM 6.1.3a on the lab MCS server, installed all COP files, and restored the database.

2) Upgraded the MCS server to CUCM 6.1.4 and created a backup.

3) Created a VM on UCS using the OVA template, changed the Red Hat version (to v3), installed 6.1.4, and restored the configuration.

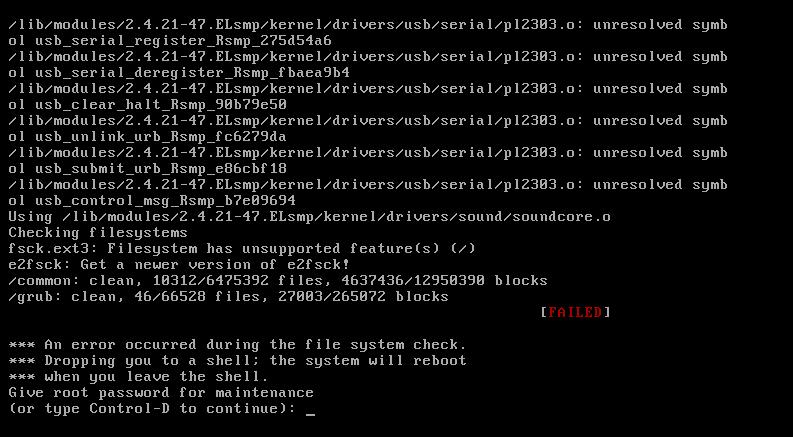

Upgrade to CUCM 9.1.2 failed with the following error:

e2fsck: Get a newer version of e2fsck

*** An error occurred during the file system check.

*** Dropping you to a shell: the system will reboot

*** when you leave the shell.

Give root password for maintenance

(or type Control-D to continue):

I tried to fix the problem with the recovery disk, but without success.

Thanks


02-06-2014 12:45 PM

Upgrade 6.1.4 to 7.1.5 in the lab before upgrading to 9.1.2. Then, when your 9.1 upgrade fails, you can at least gather logs. 6.1.4 cannot recover from a refresh upgrade failure, which is why you receive the 'e2fsck: Get a newer version of e2fsck' error. Once you figure out the root cause, you can address it on 6.1.4 and at that point go straight to 9.1.2.

-Aaron
