Implement Red Hat OpenShift Container Platform 4.5 on Cisco UCS Infrastructure

Overview

In today’s fast-paced digital landscape, Kubernetes enables enterprises to rapidly deploy new updates and features at scale while maintaining environmental consistency across test, dev, and prod. Kubernetes lays the foundation for cloud-native apps, which can be packaged in container images and ported to diverse platforms. Containers with a microservice architecture, managed and orchestrated by Kubernetes, help organizations adopt a modern development pattern. Moreover, Kubernetes has become the de facto standard for container orchestration and provides the core of an on-premises container cloud for enterprises. It is a single cloud-agnostic infrastructure with a rich open source ecosystem. It allocates, isolates, and manages resources across many tenants at scale and elastically as needed, which results in efficient use of infrastructure resources.

OpenShift Container Platform (OCP) with its core foundation in Kubernetes is a platform for developing and running containerized applications. It is designed to allow applications and the data centers that support them to expand from just a few machines and applications to thousands of machines that serve millions of clients. OCP helps organizations use the cloud delivery model and simplify continuous delivery of applications and services on OCP, the cloud-native way.

In this document, I outline a step-by-step guide to deploying OCP on Cisco UCS servers. In the reference topology, I started the deployment with 3 x controllers and 1 x worker node on Cisco UCS C240 M5 servers connected to a Cisco UCS Fabric Interconnect 6332. The controllers run Red Hat CoreOS; the worker node, which I added after installing the controllers, runs Red Hat Enterprise Linux. By following the same steps, more worker nodes can be added as you scale the environment.

Deployment

Logical Topology

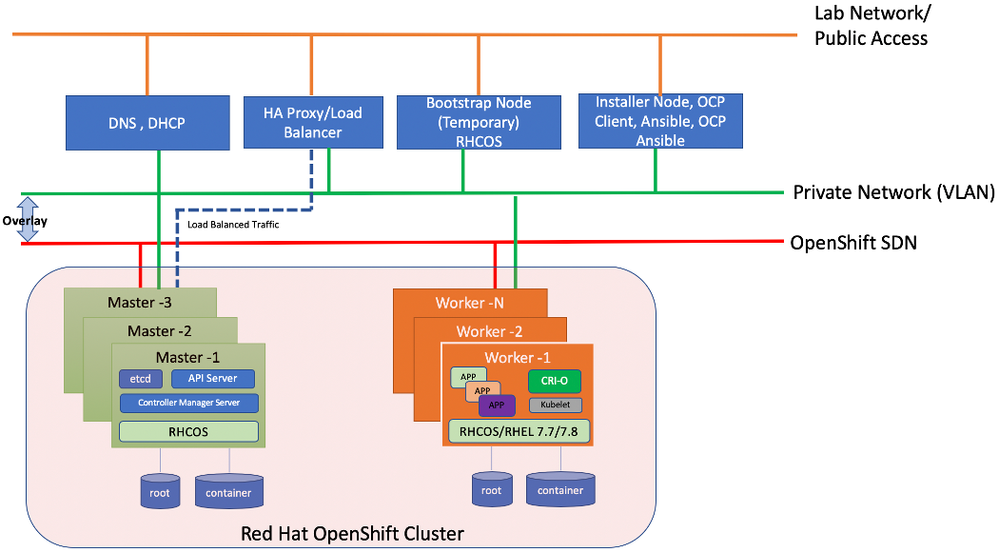

Figure 1 shows the logical topology of this reference design.

Figure 1: Logical Topology

Software Versions

| Software | Version |
|---|---|
| Red Hat OpenShift | 4.5 |
| Red Hat CoreOS | 4.5.2 |
| Red Hat Enterprise Linux | 7.8 |
| Ansible | 2.9.10 |
| OpenShift Installation Program on Linux | 4.5.2 |
| OpenShift CLI on Linux | 4.5.2 |
| Kubernetes | 1.16 with CRI-O runtime |

Prerequisites

- Configure DHCP or set static IP addresses on each node. This document sets static IP addresses to the nodes.

- Provision the required load balancers.

- DNS is an absolute must. Without proper DNS configuration, the installation will not continue.

- Ensure network connectivity among nodes, DNS, DHCP (if used), HAProxy, Installer or Bastion node.

- Red Hat Subscription with access to OpenShift repositories.

Note: It is recommended to use the DHCP server to manage the machines for the cluster long-term. Ensure that the DHCP server is configured to provide persistent IP addresses and host names to the cluster machines.

Minimum Required Machines

The smallest OpenShift Container Platform clusters require the following hosts:

- 1 x temporary bootstrap machine

- 3 x Control plane, or master machines

- 2+ compute machines, also known as worker machines. At least two worker nodes are recommended. However, for simplicity, I set up 1 x RHEL worker (compute) node in this reference design. Another node can be added by repeating the procedure outlined in this document.

Note: The cluster requires the bootstrap machine to deploy the OCP cluster on the three control plane machines. The bootstrap machine can be removed after the install is completed.

Note: For control plane high availability, it is recommended to use separate physical machines.

Note: The bootstrap and control plane machines must use Red Hat Enterprise Linux CoreOS (RHCOS) as the operating system. Compute (worker) nodes can use either RHCOS or RHEL.

Lab Setup

| Server | IP | Specs | OS | Role |
|---|---|---|---|---|
| Lab Jump Host | 10.16.1.6, Public IP | Virtual Machine | Windows Server | DNS, NAT (DNS server and default gateway for all the nodes) |
| rhel1.hdp3.cisco.com | 10.16.1.31 | Cisco UCS C240 M5 | RHEL 7.6 | RHEL install host, also termed the “Bastion” node. Runs the OpenShift installation program, oc client, HAProxy, web server (httpd), Ansible, and the OpenShift Ansible playbook |
| bootstrap.ocp4.sjc02.lab.cisco.com | 10.16.1.160 | Cisco UCS C240 M5 | CoreOS 4.5.2 | Bootstrap node (temporary) |
| master0.ocp4.sjc02.lab.cisco.com | 10.16.1.161 | Cisco UCS C240 M5 | CoreOS 4.5.2 | OpenShift control plane master node + worker node |
| master1.ocp4.sjc02.lab.cisco.com | 10.16.1.162 | Cisco UCS C240 M5 | CoreOS 4.5.2 | OpenShift control plane master node + worker node |
| master2.ocp4.sjc02.lab.cisco.com | 10.16.1.163 | Cisco UCS C240 M5 | CoreOS 4.5.2 | OpenShift control plane master node + worker node |
| worker0.ocp4.sjc02.lab.cisco.com | 10.16.1.164 | Cisco UCS C240 M5 | RHEL 7.8 | OpenShift worker or compute node |

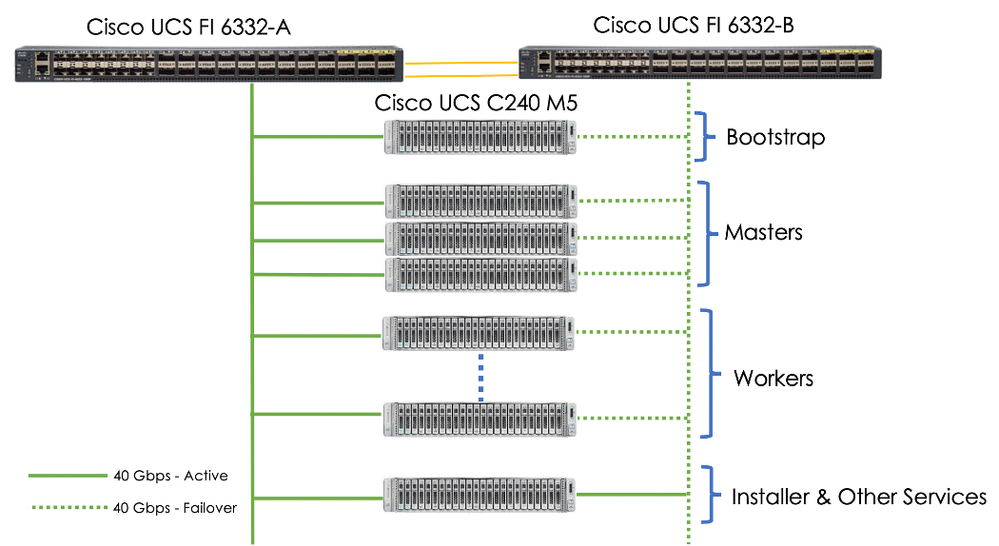

Reference Physical Topology

The figure below shows the physical setup of the reference design.

Note: The bootstrap node is temporary and will be removed once the installation is completed.

- For simplicity, a single vNIC is configured on each node in Cisco UCS Manager. In a large-scale design, multiple vNICs are recommended to separate different kinds of traffic, such as control traffic and OpenShift SDN traffic.

- vNIC high availability is maintained at the Cisco UCS Fabric Interconnect level by mapping the vNIC to Fabric-A with failover to Fabric-B.

- This solution does not implement Linux bonding with multiple active vNICs. However, the CoreOS installation does offer bond configuration at first boot.

- The bootstrap node is used only at install time. Its main purpose is to run bootkube, which provides a temporary single-node Kubernetes control plane for bootstrapping. After installation, this node can be repurposed.

- Master nodes provide the control plane services to the cluster: the API server, scheduler, controller manager, etcd, CoreDNS, and more. Three master nodes are required, front-ended by the load balancer. Master nodes run control plane services inside containers. Masters can also be made schedulable for pods and containers.

- Workloads run on compute nodes. The infrastructure can be scaled by adding more compute nodes when additional resources are needed. Compute nodes can have specialized hardware such as NVMe, SSDs, GPUs, and FPGAs.

High level install check list

There are two approaches for OCP deployment.

- Installer Provisioned Infrastructure (IPI)

- User Provisioned Infrastructure (UPI)

To learn more about these installation types, please refer to Red Hat documentation.

This OCP deployment is based on UPI.

A UPI-based install involves the following high-level steps:

- Acquire appropriate hardware

- Configure DNS

- Configure the load balancer

- Set up a non-cluster host to run a web server reachable by all nodes

- Set up the OCP installer program

- Set up DHCP (optional if using static IP addresses)

- Create install-config.yaml for your environment

- Use the openshift-install command to generate the RHEL CoreOS ignition files, and host these files on the web server

- PXE or ISO boot the cluster members to start the RHEL CoreOS and OpenShift install

- Monitor install progress with the openshift-install command

- Validate the install using the OpenShift client tool or launch the web console

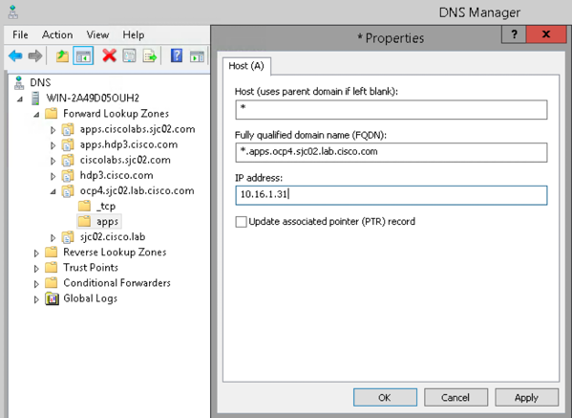

Setup DNS

In this deployment, I used DNS Manager to configure DNS records on Windows Server. However, a Linux server can also be configured for DNS.

Note: Pay special attention to the DNS records. Most unsuccessful installs arise from incorrect DNS entries.

|

Component |

DNS A/AAA Record |

IP (For Reference Only) |

Description |

|

Kubernetes API |

api.ocp4.sjc02.lab.cisco.com |

10.16.1.31 |

IP address of the load balancer. |

|

api-int.ocp4.sjc02.lab.cisco.com |

10.16.1.31 |

||

|

Bootstrap |

bootstrap.ocp4.sjc02.lab.cisco.com |

10.16.1.160 |

IP address for bootstrap node |

|

Master<index> |

master0.ocp4.sjc02.lab.cisco.com |

10.16.1.161 |

IP address of nodes dedicated for master’s role in OpenShift cluster |

|

master1.ocp4.sjc02.lab.cisco.com |

10.16.1.162 |

||

|

master2.ocp4.sjc02.lab.cisco.com |

10.16.1.163 |

||

|

Worker<index> |

worker0.ocp4.sjc02.lab.cisco.com |

10.16.1.164 |

IP address of worker node. For each worker node, add A/AAA DNS record. |

|

Etcd-<index> |

etcd-0.ocp4.sjc02.lab.cisco.com |

10.16.1.161 |

Each etcd instance to point to the control plane machines that host the instances. Etcd will be running in respective master nodes |

|

etcd-1.ocp4.sjc02.lab.cisco.com |

10.16.1.162 |

||

|

etcd-2.ocp4.sjc02.lab.cisco.com |

10.16.1.163 |

||

|

|

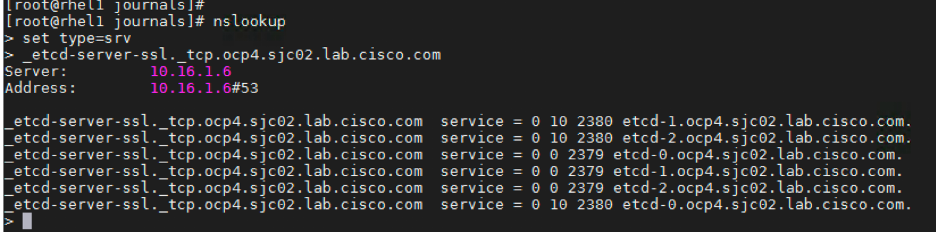

_etcd-server-ssl._tcp.ocp4.sjc02.lab.cisco.com |

etcd-0.ocp4.sjc02.lab.cisco.com |

For each control plane machine, OpenShift Container Platform also requires an SRV DNS record for etcd server on that machine with priority 0, weight 10 and port 2380. A cluster that uses three control plane machines requires the following records:

|

|

_etcd-server-ssl._tcp.ocp4.sjc02.lab.cisco.com |

etcd-1.ocp4.sjc02.lab.cisco.com |

||

|

_etcd-server-ssl._tcp.ocp4.sjc02.lab.cisco.com |

etcd-2.ocp4.sjc02.lab.cisco.com |

||

|

Routes |

*.apps.ocp4.sjc02.lab.cisco.com |

10.16.1.31 |

IP address of load balancer |

DNS A/AAAA Record

Etcd SRV Record

Routes DNS Record

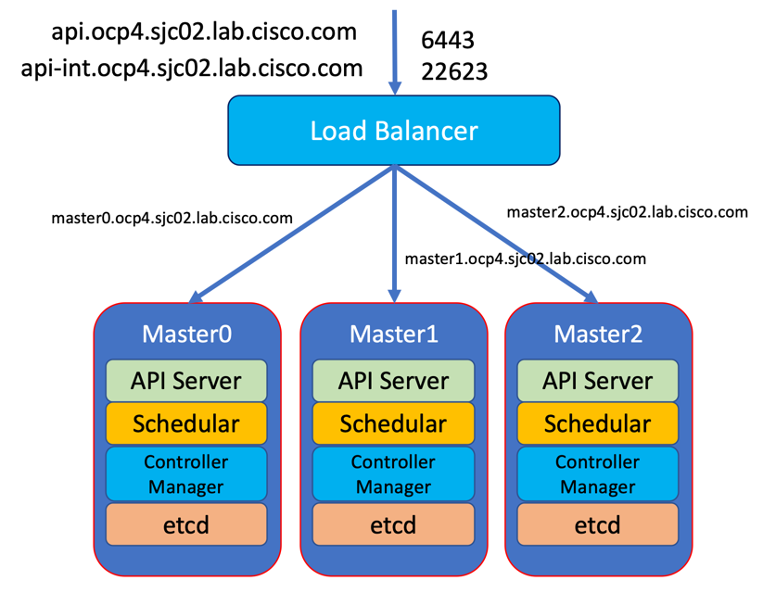
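Because most failed installs trace back to DNS, it can help to test every record from the table before booting any node. The following is a minimal sketch, assuming `dig` (from the bind-utils package) is available on the bastion node and that 10.16.1.6 is the DNS server, as in this lab. The function names, the `RUN_DNS_CHECK` switch, and the `test.apps` sample name used to exercise the wildcard record are illustrative choices, not part of the original setup.

```shell
#!/usr/bin/env bash
# Sketch: verify the DNS records listed in the table above.
# Assumptions: dig is installed; DNS_SERVER and CLUSTER_DOMAIN match this lab.
CLUSTER_DOMAIN="${CLUSTER_DOMAIN:-ocp4.sjc02.lab.cisco.com}"
DNS_SERVER="${DNS_SERVER:-10.16.1.6}"

# Print every host name that needs an A record.
# "test.apps" is an arbitrary name used to exercise the *.apps wildcard.
expected_a_records() {
  local name
  for name in api api-int bootstrap master0 master1 master2 worker0 \
              etcd-0 etcd-1 etcd-2 test.apps; do
    echo "${name}.${CLUSTER_DOMAIN}"
  done
}

# Query each A record plus the etcd SRV records; return non-zero on any miss.
check_dns() {
  local host answer srv_count failed=0
  for host in $(expected_a_records); do
    answer="$(dig +short "@${DNS_SERVER}" "${host}" A | tr '\n' ' ')"
    if [ -n "${answer}" ]; then
      echo "OK   ${host} -> ${answer}"
    else
      echo "FAIL ${host} (no A record)"
      failed=1
    fi
  done
  # Three SRV answers on port 2380 are expected, one per control plane machine.
  srv_count="$(dig +short "@${DNS_SERVER}" "_etcd-server-ssl._tcp.${CLUSTER_DOMAIN}" SRV | grep -c ' 2380 ')"
  if [ "${srv_count}" -eq 3 ]; then
    echo "OK   etcd SRV records (${srv_count})"
  else
    echo "FAIL etcd SRV records (found ${srv_count}, expected 3)"
    failed=1
  fi
  return "${failed}"
}

# Set RUN_DNS_CHECK=1 to actually query the DNS server.
if [ "${RUN_DNS_CHECK:-0}" = "1" ]; then
  check_dns
fi
```

Saved as a script (the file name is up to you), it could be run as `RUN_DNS_CHECK=1 bash check-dns.sh`; any FAIL line points at a record to fix before continuing.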

Setup Load Balancer

A load balancer is required for the Kubernetes API server, both internal and external, as well as for the OpenShift router.

In this deployment, for simplicity, we installed and configured HAProxy on a Linux server. However, an existing load balancer can also be used as long as it can reach all OpenShift nodes. This document does not make any official recommendation for any specific load balancer.

In this reference design, we installed HAProxy on the rhel1 server by running the following command.

[root@rhel1 ~]# yum install haproxy

After the install, configure the /etc/haproxy/haproxy.cfg file. Ports 6443 and 22623 must point to the bootstrap and master nodes. Ports 80 and 443 must point to the worker nodes. Below is the HAProxy configuration used in this reference design.

[root@rhel1 ~]# vi /etc/haproxy/haproxy.cfg

#---------------------------------------------------------------------

# Example configuration for a possible web application. See the

# full configuration options online.

#

# http://haproxy.1wt.eu/download/1.4/doc/configuration.txt

#

#---------------------------------------------------------------------

#---------------------------------------------------------------------

# Global settings

#---------------------------------------------------------------------

global

# to have these messages end up in /var/log/haproxy.log you will

# need to:

#

# 1) configure syslog to accept network log events. This is done

# by adding the '-r' option to the SYSLOGD_OPTIONS in

# /etc/sysconfig/syslog

#

# 2) configure local2 events to go to the /var/log/haproxy.log

# file. A line like the following can be added to

# /etc/sysconfig/syslog

#

# local2.* /var/log/haproxy.log

#

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

#option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

frontend openshift-api-server

bind *:6443

default_backend openshift-api-server

mode tcp

option tcplog

backend openshift-api-server

balance source

mode tcp

# comment out or delete bootstrap entry after the successful install and restart haproxy

server bootstrap.ocp4.sjc02.lab.cisco.com 10.16.1.160:6443 check

server master0.ocp4.sjc02.lab.cisco.com 10.16.1.161:6443 check

server master1.ocp4.sjc02.lab.cisco.com 10.16.1.162:6443 check

server master2.ocp4.sjc02.lab.cisco.com 10.16.1.163:6443 check

frontend machine-config-server

bind *:22623

default_backend machine-config-server

mode tcp

option tcplog

backend machine-config-server

balance source

mode tcp

# comment out or delete bootstrap entry after the successful install and restart haproxy

server bootstrap.ocp4.sjc02.lab.cisco.com 10.16.1.160:22623 check

server master0.ocp4.sjc02.lab.cisco.com 10.16.1.161:22623 check

server master1.ocp4.sjc02.lab.cisco.com 10.16.1.162:22623 check

server master2.ocp4.sjc02.lab.cisco.com 10.16.1.163:22623 check

frontend ingress-http

bind *:80

default_backend ingress-http

mode tcp

option tcplog

backend ingress-http

balance source

mode tcp

server worker0.ocp4.sjc02.lab.cisco.com 10.16.1.164:80 check

# Specify master nodes as they are also acting as worker node in this setup

# Master node entries are not required if masters are not acting as worker node as well.

server master0.ocp4.sjc02.lab.cisco.com 10.16.1.161:80 check

server master1.ocp4.sjc02.lab.cisco.com 10.16.1.162:80 check

server master2.ocp4.sjc02.lab.cisco.com 10.16.1.163:80 check

frontend ingress-https

bind *:443

default_backend ingress-https

mode tcp

option tcplog

backend ingress-https

balance source

mode tcp

server worker0.ocp4.sjc02.lab.cisco.com 10.16.1.164:443 check

# Specify master nodes as they are also acting as worker node in this setup

server master0.ocp4.sjc02.lab.cisco.com 10.16.1.161:443 check

server master1.ocp4.sjc02.lab.cisco.com 10.16.1.162:443 check

server master2.ocp4.sjc02.lab.cisco.com 10.16.1.163:443 check

Restart the HAProxy service and verify that it is running without any issues.

[root@rhel1 ~]# systemctl restart haproxy

[root@rhel1 ~]# systemctl status haproxy

A line-by-line explanation of the haproxy.cfg file is beyond the scope of this document; refer to the HAProxy documentation available online for details. Make sure all the ports specified in the HAProxy configuration are open on the server where it is running.
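If firewalld is active on the HAProxy host, the four frontend ports have to be opened explicitly. The sketch below only prints the commands so they can be reviewed first. It assumes firewalld is the firewall in use on rhel1; the original setup does not say which firewall, if any, was running. The function name is illustrative.

```shell
#!/usr/bin/env bash
# Sketch: print the firewalld commands that open the HAProxy frontend ports.
# Assumption: firewalld manages the firewall on the load balancer host.
HAPROXY_PORTS="6443 22623 80 443"   # ports bound by the frontends in haproxy.cfg

firewall_commands() {
  local port
  for port in ${HAPROXY_PORTS}; do
    echo "firewall-cmd --permanent --add-port=${port}/tcp"
  done
  echo "firewall-cmd --reload"
}

# Print the commands for review; nothing is changed on the system.
firewall_commands
```

After reviewing the output, it can be applied with `firewall_commands | sh`. Running `haproxy -c -f /etc/haproxy/haproxy.cfg` before the restart checks the configuration file for syntax errors. On hosts with SELinux in enforcing mode, HAProxy may additionally need permission to use non-standard ports such as 22623, for example through the `haproxy_connect_any` boolean.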

Setup Webserver

A web server is also required to host the ignition configurations and installation images for Red Hat CoreOS. The web server must be reachable by the bootstrap, master, and worker nodes during the install. In this design, we set up the Apache web server (httpd).

If a web server is not already installed, run the following command on the installer server.

[root@rhel1 ~]# yum install httpd

Create a folder for ignition files and CoreOS image.

[root@rhel1 ~]# mkdir -p /var/www/html/ignition-install

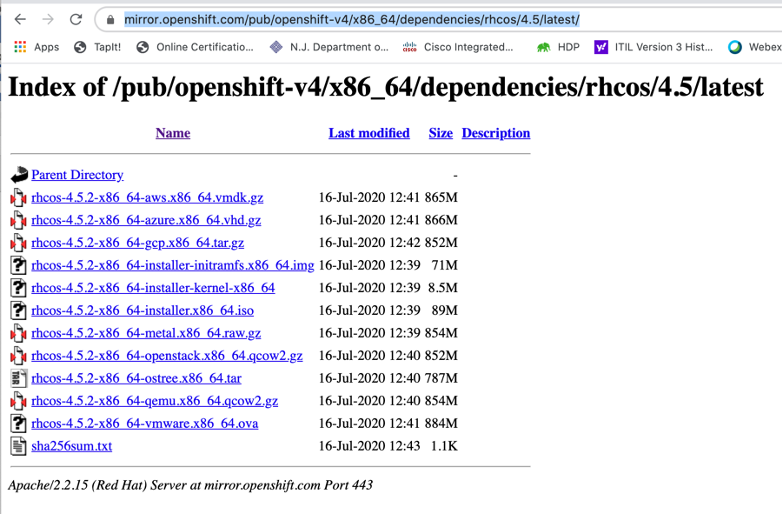

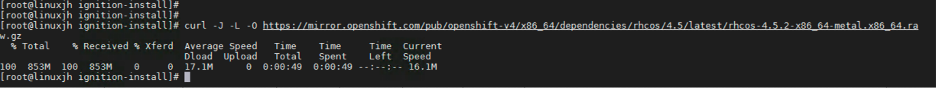

Download the Red Hat CoreOS image to this folder.

[root@rhel1 ~]# cd /var/www/html/ignition-install

[root@rhel1 ignition-install]# curl -J -L -O https://mirror.openshift.com/pub/openshift-v4/x86_64/dependencies/rhcos/4.5/latest/rhcos-4.5.2-x86_64-metal.x86_64.raw.gz

Setup DHCP (Optional)

DHCP is recommended for large-scale, production-grade deployments to provide persistent IP addresses and host names to all the cluster nodes. Use IP reservations so that addresses do not change across node reboots.

However, for simplicity I used static IP addresses in this deployment for all the nodes.

Installation

This installation comprises 3 x master nodes, 1 x worker node, and 1 x bootstrap node. The install is performed from a Linux host, which we also call the “Bastion” node. This install uses static IP addresses.

Create an install folder on the bastion node as follows:

# mkdir ocp-install

# cd ocp-install

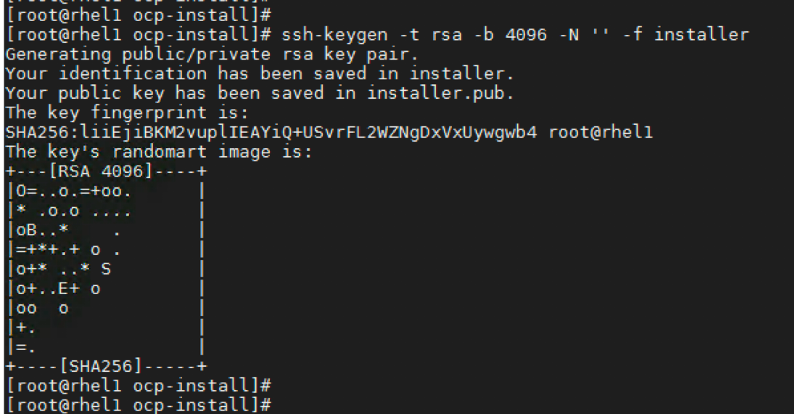

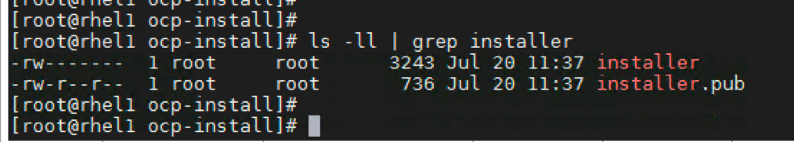

Generate SSH private key and add it to the agent

Create an SSH private key, which will be used to SSH into the master and bootstrap nodes as the user core. The public key is added to the core user’s ~/.ssh/authorized_keys list via the ignition files.

# ssh-keygen -t rsa -b 4096 -N '' -f installer

Verify that ssh-agent is running, or start it as a background task.

# eval "$(ssh-agent -s)"

Add the SSH private key to the ssh-agent

[root@linuxjh ocp-install]# ssh-add installer

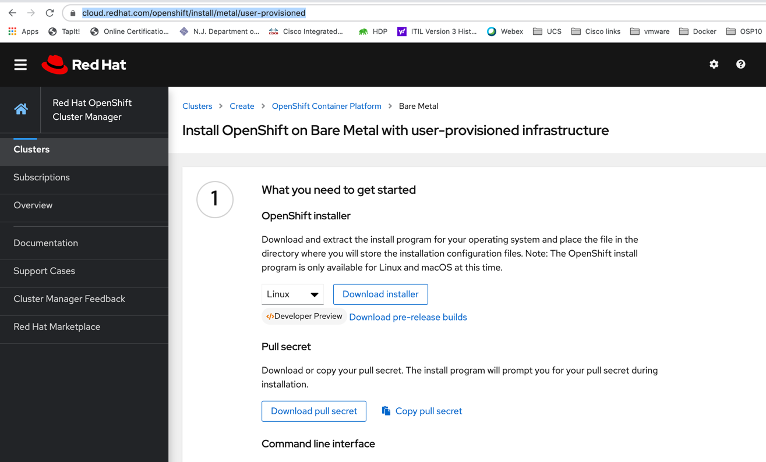

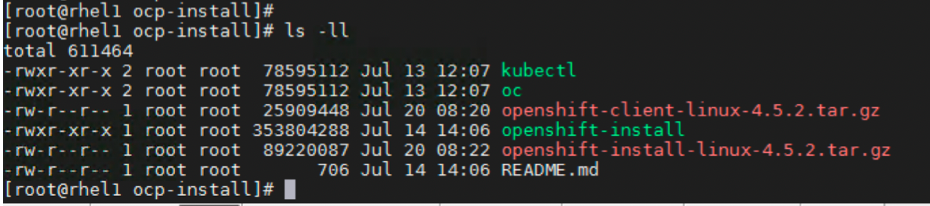

Obtain Installation Program

Before you begin installing OpenShift Container Platform, download the OpenShift installer and set it up on the bastion host.

# curl -J -L -O https://mirror.openshift.com/pub/openshift-v4/clients/ocp/4.5.2/openshift-install-linux-4.5.2.tar.gz

# tar -zxvf openshift-install-linux-4.5.2.tar.gz

# chmod 777 openshift-install

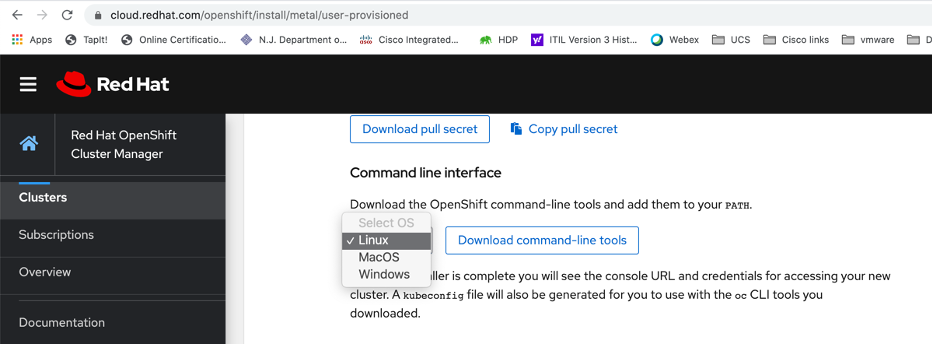

The OpenShift installer can also be obtained from the following URL. This requires a Red Hat login registered with the Red Hat Customer Portal.

https://cloud.redhat.com/openshift/install/metal/user-provisioned

At this time, the OpenShift installer is available for macOS and Linux.

Download Command Line Interface (CLI) Tool

You can install the OpenShift CLI (oc) binary on Linux by using the following procedure.

[root@rhel1 ocp-install]# curl -J -L -O https://mirror.openshift.com/pub/openshift-v4/clients/ocp/4.5.2/openshift-client-linux-4.5.2.tar.gz

Unpack the archive

[root@rhel1 ocp-install]# tar -zxvf openshift-client-linux-4.5.2.tar.gz

Place the oc binary in a directory that is on your PATH.

# echo $PATH

# export PATH=$PATH:/root/ocp-install/

The CLI can also be downloaded from

https://cloud.redhat.com/openshift/install/metal/user-provisioned

At the time of this writing, the OpenShift command line interface is available for Linux, macOS, and Windows.

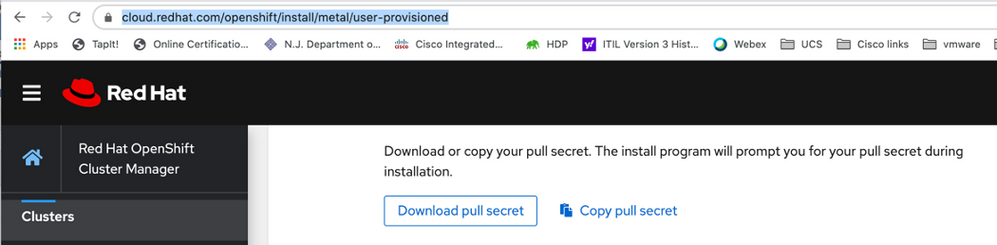

Download pull secret

The installation program requires a pull secret. The pull secret allows you to authenticate with the services provided by the included authorities, including Quay.io, which serves the container images for OpenShift Container Platform components.

Without a pull secret, the installation will not continue. It is specified in the install config file in a later section of this document.

From the OpenShift Cluster Manager site (https://cloud.redhat.com/openshift/install/metal/user-provisioned), the pull secret can either be downloaded as a .txt file or copied to the clipboard.

Get the pull secret and save it in the pullsecret.txt file.

# cat pullsecret.txt

Create install configuration

Run the following command to create the install-config.yaml file

# cat <<EOF > install-config.yaml
apiVersion: v1
baseDomain: sjc02.lab.cisco.com
compute:
- hyperthreading: Enabled
  name: worker
  replicas: 0
controlPlane:
  hyperthreading: Enabled
  name: master
  replicas: 3
metadata:
  name: ocp4
networking:
  clusterNetworks:
  - cidr: 10.254.0.0/16
    hostPrefix: 24
  networkType: OpenShiftSDN
  serviceNetwork:
  - 172.30.0.0/16
platform:
  none: {}
pullSecret: '$(< /root/ocp-install/pullsecret.txt)'
sshKey: '$(< /root/ocp-install/installer.pub)'
EOF

Note: Make a backup of install-config.yaml - as this file is deleted once the ignition-config are created. Or it can be recreated by running the above command.
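Since the installer consumes install-config.yaml, a timestamped copy makes it easy to regenerate the ignition configs later. This is a minimal sketch; the function name and the `.bak` naming scheme are illustrative, and /root/ocp-install is the install folder used in this document.

```shell
#!/usr/bin/env bash
# Sketch: keep a timestamped copy of install-config.yaml before it is consumed.
INSTALL_DIR="${INSTALL_DIR:-/root/ocp-install}"

# Copy DIR/install-config.yaml to DIR/install-config.yaml.<timestamp>.bak
# and print the path of the copy. Returns non-zero if the file is missing.
backup_install_config() {
  local dir="${1:-${INSTALL_DIR}}"
  local src="${dir}/install-config.yaml"
  local dest
  if [ ! -f "${src}" ]; then
    echo "missing ${src}" >&2
    return 1
  fi
  dest="${src}.$(date +%Y%m%d%H%M%S).bak"
  cp -p "${src}" "${dest}" && echo "${dest}"
}

# Back up only if the file exists in the install directory.
if [ -f "${INSTALL_DIR}/install-config.yaml" ]; then
  backup_install_config "${INSTALL_DIR}"
fi
```

To restore, copy the most recent `.bak` file back to install-config.yaml before running the installer again.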

Note or change the following according to your environment:

- baseDomain - This is the domain in your environment. In this example, the base domain is sjc02.lab.cisco.com.

- metadata.name - This is the cluster ID, ocp4 in this example.

Note: Together, these two values effectively place all FQDNs under ocp4.sjc02.lab.cisco.com.

- pullSecret - This pull secret can be obtained by going to cloud.redhat.com

- Log in with your Red Hat account

- Click on "Bare Metal"

- Either "Download Pull Secret" or "Copy Pull Secret"

- sshKey - This is your public SSH key. The install-config.yaml above reads installer.pub, the key generated earlier; another public key (e.g. id_rsa.pub, shown with # cat ~/.ssh/id_rsa.pub) can be used instead.

Generate Ignition Configuration Files

Ignition is a tool for manipulating configuration during early boot, before the operating system starts. This includes things like writing files (regular files, systemd units, networkd units, etc.) and configuring users. Think of it as a cloud-init that runs once (during first boot).

The OpenShift 4 installer generates these ignition configs to prepare each node as an OpenShift bootstrap, master, or worker node. From within your working directory (in this example, ~/ocp-install), generate the ignition configs.

[root@linuxjh ocp-install]# ./openshift-install create ignition-configs --dir=/root/ocp-install

Note: Make sure the install-config.yaml file is in the working directory, /root/ocp-install in this case.

Note: Creating the ignition configs removes the install-config.yaml file. Make a backup of install-config.yaml before creating the ignition configs. Otherwise, you will have to create a new one if you need to regenerate the ignition config files.

This will create the following files.

.
├── auth
│   ├── kubeadmin-password
│   └── kubeconfig
├── bootstrap.ign
├── master.ign
├── metadata.json
└── worker.ign

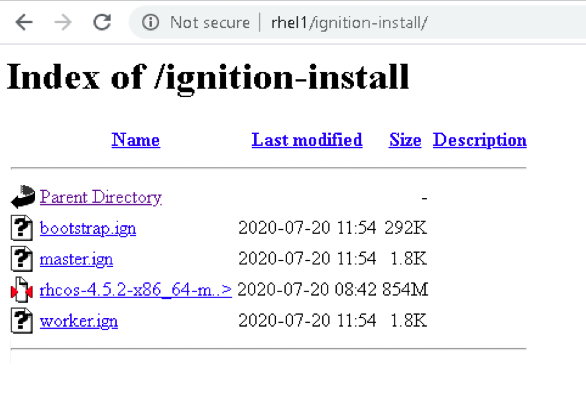

The figure below shows the contents of the install folder.

Copy the .ign files to your web server.

# cp *.ign /var/www/html/ignition-install/

Set appropriate permissions; otherwise, the nodes will not be able to fetch the files.

# chmod o+r /var/www/html/ignition-install/*.ign

Before installing RHCOS on the bootstrap and master nodes, make sure the following files are available on your web server, as shown in the figure below.
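A quick file check can catch a missing or unreadable file before a node fails to fetch it at boot. The sketch below assumes the expected files are the three ignition configs plus the RHCOS raw image downloaded earlier, which is inferred from the steps above rather than stated as a definitive list. The function name is illustrative.

```shell
#!/usr/bin/env bash
# Sketch: confirm the web server directory holds everything the nodes will fetch.
WEB_DIR="${WEB_DIR:-/var/www/html/ignition-install}"
RHCOS_IMAGE="rhcos-4.5.2-x86_64-metal.x86_64.raw.gz"

# Report each expected file; return non-zero if any file is absent or is not
# readable by "other" (the permission granted by chmod o+r).
check_web_files() {
  local dir="${1:-${WEB_DIR}}" f failed=0
  for f in bootstrap.ign master.ign worker.ign "${RHCOS_IMAGE}"; do
    if [ ! -f "${dir}/${f}" ]; then
      echo "MISSING      ${f}"
      failed=1
    elif [ -z "$(find "${dir}/${f}" -perm -o=r)" ]; then
      echo "NOT READABLE ${f}"
      failed=1
    else
      echo "OK           ${f}"
    fi
  done
  return "${failed}"
}

# Run the check only where the web directory exists.
if [ -d "${WEB_DIR}" ]; then
  check_web_files "${WEB_DIR}" || echo "Fix the files above before booting the nodes." >&2
fi
```

Fetching one file from another machine, for example with `curl -I http://10.16.1.31/ignition-install/bootstrap.ign`, additionally confirms that httpd itself is serving the directory.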

Download RHCOS iso

Download the RHCOS 4.5.2 iso from the following link.

Install RHCOS

Use the ISO to start the RHCOS installation. PXE can also be set up for RHCOS installs; however, it is beyond the scope of this document.

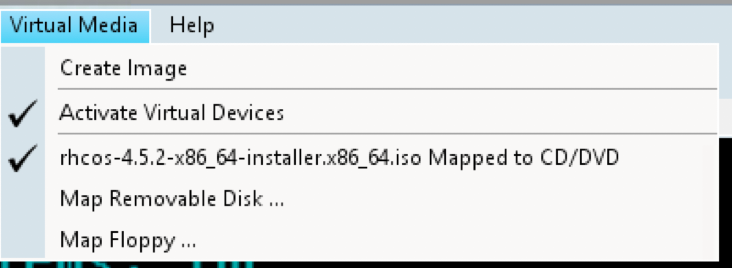

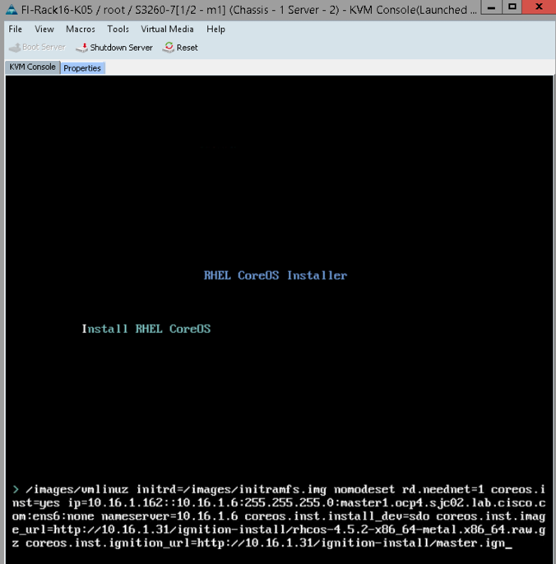

Activate virtual media in the Cisco UCS Manager KVM and map this ISO as shown in the figure.

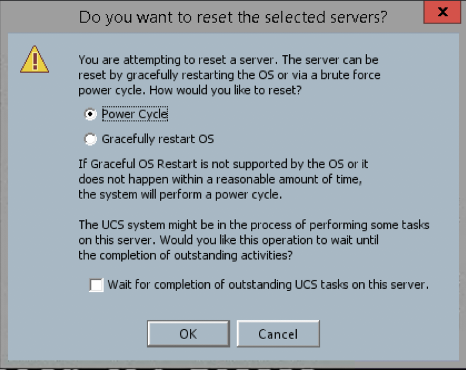

Power cycle the server from UCSM

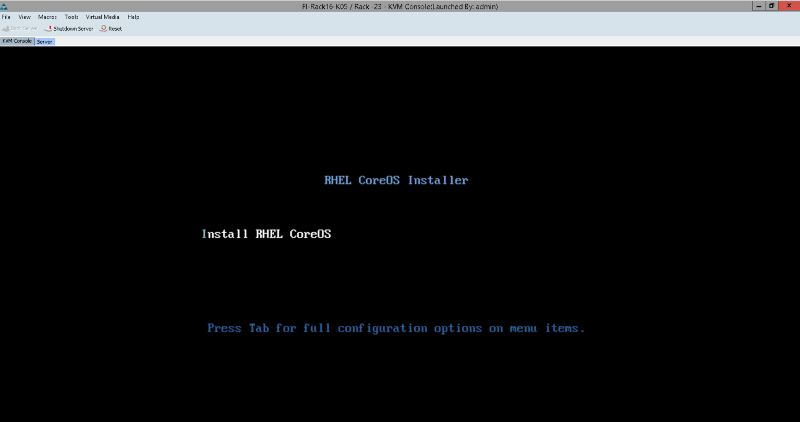

After the instance boots, press the “TAB” or “E” key to edit the kernel command line.

Add the following parameters to the kernel command line:

coreos.inst=yes

coreos.inst.install_dev=sda

coreos.inst.image_url=http://10.16.1.31/ignition-install/rhcos-4.5.2-x86_64-metal.x86_64.raw.gz

coreos.inst.ignition_url=http://10.16.1.31/ignition-install/bootstrap.ign

ip=10.16.1.160::10.16.1.6:255.255.255.0:bootstrap.ocp4.sjc02.lab.cisco.com:enp63s0:none

nameserver=10.16.1.6 nameserver=8.8.8.8

bond=<bonded_interface> (if any)

Note: Supply the above parameters on a single line (without pressing the Enter key). They are written on separate lines above for readability.

The syntax for a static IP is ip=$IPADDR::$DEFAULTGW:$NETMASK:$HOSTNAME:$INTERFACE:none:$DNS; for DHCP it is ip=dhcp.

If you provide DNS names for image_url and ignition_url, make sure the CoreOS ISO can resolve them when it boots. That is, either DHCP must hand out the name server you are using, or you must specify it statically.

In Cisco UCS KVM, you can also paste it from the clipboard. From KVM window, click File-->Paste Text From Clipboard
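Typing the kernel parameters by hand is error-prone, so it may help to generate the single line per node and paste it into the KVM. The following is a minimal sketch that reuses the lab values from this document; the function and variable names are illustrative, and the optional bond parameter is left out.

```shell
#!/usr/bin/env bash
# Sketch: build the single-line RHCOS kernel arguments for one node.
# Values mirror this lab; adjust them for your environment.
WEB_URL="http://10.16.1.31/ignition-install"
RHCOS_IMAGE="rhcos-4.5.2-x86_64-metal.x86_64.raw.gz"
INSTALL_DEV="sda"
GATEWAY="10.16.1.6"
NETMASK="255.255.255.0"
NIC="enp63s0"

# usage: rhcos_kernel_args ROLE IP FQDN
# ROLE selects the ignition file: bootstrap, master, or worker.
rhcos_kernel_args() {
  local role="$1" ip="$2" fqdn="$3"
  echo "coreos.inst=yes" \
       "coreos.inst.install_dev=${INSTALL_DEV}" \
       "coreos.inst.image_url=${WEB_URL}/${RHCOS_IMAGE}" \
       "coreos.inst.ignition_url=${WEB_URL}/${role}.ign" \
       "ip=${ip}::${GATEWAY}:${NETMASK}:${fqdn}:${NIC}:none" \
       "nameserver=10.16.1.6" \
       "nameserver=8.8.8.8"
}

# Print one line per node, ready to paste at the boot prompt.
rhcos_kernel_args bootstrap 10.16.1.160 bootstrap.ocp4.sjc02.lab.cisco.com
rhcos_kernel_args master 10.16.1.161 master0.ocp4.sjc02.lab.cisco.com
```

Each call prints exactly one line, which avoids accidental line breaks when pasting.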

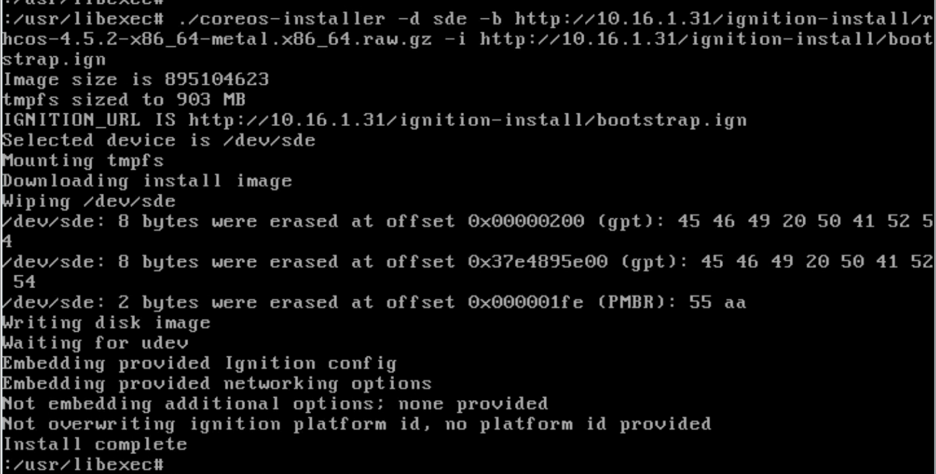

If the RHCOS install does not go through because of a typo or incorrect parameter values, you can perform the following, as shown in the figure, once the system boots from the ISO.

You can run coreos-installer from the /usr/libexec folder as below:

/usr/libexec/coreos-installer -d sdi -b http://10.16.1.31/ignition-install/rhcos-4.5.2-x86_64-metal.x86_64.raw.gz -i http://10.16.1.31/ignition-install/master.ign

Unmap the media mount in UCSM KVM

Reboot the bootstrap node after the install.

After the reboot, SSH to the bootstrap node:

# ssh core@bootstrap.ocp4.sjc02.lab.cisco.com

Monitor the install

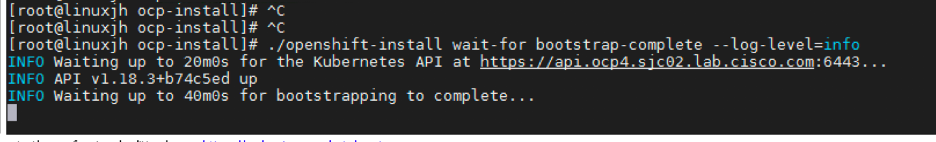

Once the bootstrap server is up and running, the install is actually already in progress. First, the masters "check in" to the bootstrap server for their configuration. After the masters are configured, the bootstrap server "hands off" responsibility to the masters. You can track the bootstrap process with the following command.

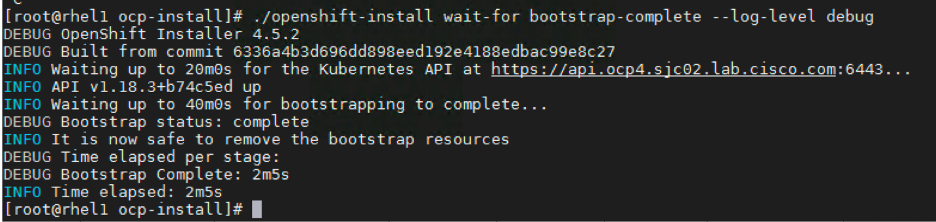

./openshift-install wait-for bootstrap-complete --log-level debug

You can monitor the detailed installation progress by connecting to the bootstrap node over SSH and running the following:

# ssh core@bootstrap.ocp4.sjc02.lab.cisco.com

# journalctl -b -f -u release-image.service -u bootkube.service

Install RHCOS in Master Nodes

Repeat the steps outlined for installing RHCOS on the bootstrap node. For each master, specify the master's IP address and FQDN and the master.ign ignition config file, as shown in the example.

Reboot server for ignition to be loaded.

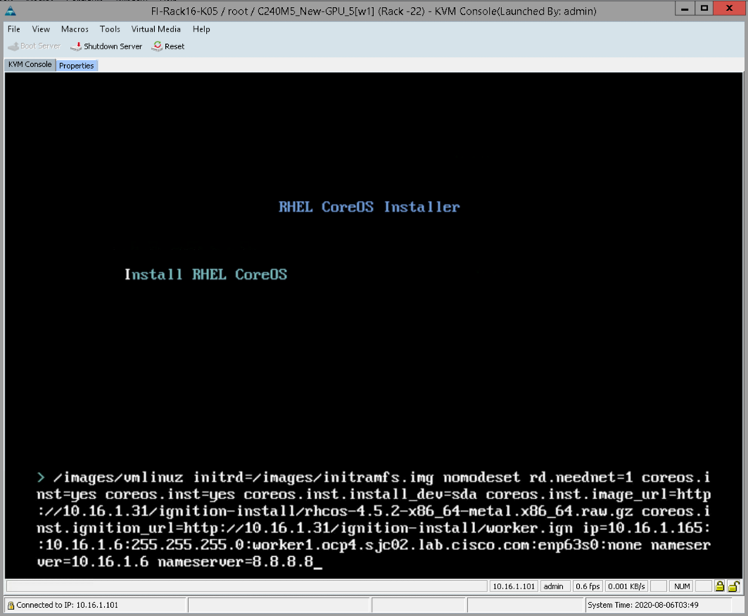

Install RHCOS in Worker Nodes

In this setup, I'll be deploying RHEL in the worker node which will be covered in the subsequent section of this document. If you decide to deploy RHCOS in the worker node, follow the steps as outlined for installing the bootstrap node. Make sure to specify worker.ign ignition file in coreos.inst.ignition_url parameter. For example, coreos.inst.ignition_url=http://10.16.1.31/ignition-install/worker.ign

For example, apply the following configuration parameters after pressing the Tab key:

coreos.inst=yes

coreos.inst.install_dev=sda

coreos.inst.image_url=http://10.16.1.31/ignition-install/rhcos-4.5.2-x86_64-metal.x86_64.raw.gz

coreos.inst.ignition_url=http://10.16.1.31/ignition-install/worker.ign

ip=10.16.1.165::10.16.1.6:255.255.255.0:worker1.ocp4.sjc02.lab.cisco.com:enp63s0:none

nameserver=10.16.1.6 nameserver=8.8.8.8

Note: The line breaks in the above parameters are added for readability. Type them as one line.

The figure below shows the RHCOS install on a worker node.

Once the worker node is up, you can SSH to it and watch the status by running the following commands.

# ssh core@worker0.ocp4.sjc02.lab.cisco.com

# journalctl -b -f

Troubleshooting

The following troubleshooting tips may help if you experience issues during the install.

Pull debug logs from the installer machine. Log files help in finding issues during the install.

# ./openshift-install gather bootstrap --bootstrap bootstrap.ocp4.sjc02.lab.cisco.com --master master0.ocp4.sjc02.lab.cisco.com --master master1.ocp4.sjc02.lab.cisco.com --master master2.ocp4.sjc02.lab.cisco.com

# tar xvf log-bundle-20200720162221.tar.gz

Look for container logs in /root/ocp-install/log-bundle-20200720162221/bootstrap/containers

Look for bootstrap logs in /root/ocp-install/log-bundle-20200720162221/bootstrap

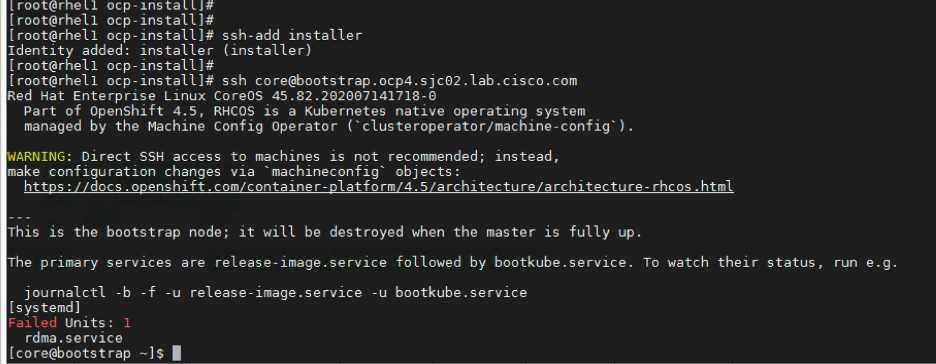

You can also ssh to the node such as bootstrap as shown below and look for any issues by running the following command

[root@rhel1 ocp-install]# ssh core@bootstrap.ocp4.sjc02.lab.cisco.com
Red Hat Enterprise Linux CoreOS 45.82.202007141718-0
  Part of OpenShift 4.5, RHCOS is a Kubernetes native operating system
  managed by the Machine Config Operator (`clusteroperator/machine-config`).

WARNING: Direct SSH access to machines is not recommended; instead,
make configuration changes via `machineconfig` objects:
  https://docs.openshift.com/container-platform/4.5/architecture/architecture-rhcos.html

---
This is the bootstrap node; it will be destroyed when the master is fully up.

The primary services are release-image.service followed by bootkube.service. To watch their status, run e.g.

  journalctl -b -f -u release-image.service -u bootkube.service
Last login: Tue Jul 21 00:14:00 2020 from 10.16.1.31
[systemd]
Failed Units: 1
  rdma.service
[core@bootstrap ~]$ journalctl -b -f -u release-image.service -u bootkube.service

If you are experiencing DNS issues, run nslookup to verify that the records resolve. Pay special attention to the etcd SRV records.

If you are running into etcd-related issues, check the clocks on the nodes. Master clocks are usually in sync with each other, but the bootstrap clock could be out of sync with the masters. Use the following command to verify; if the clocks are out of sync, fix them.

[root@rhel1 ocp4.3-install]# for i in {bootstrap,master0,master1,master2}; do ssh core@$i.ocp4.sjc02.lab.cisco.com date; done;

If a worker node is not getting registered with the cluster, or you see forbidden user "system:anonymous" errors while connecting to the API server, check whether there are any pending CSRs in the queue. If so, approve them using the following commands.

[root@rhel1 ocp-install]# oc get csr -o name

[root@rhel1 ocp-install]# oc get csr -o name | xargs oc adm certificate approve
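The command above approves every CSR it lists. A narrower variant approves only requests that have no status yet, which is how pending requests appear. This is a minimal sketch, assuming `oc` is on the PATH and `KUBECONFIG` points at the cluster; the function name is illustrative.

```shell
#!/usr/bin/env bash
# Sketch: approve only the CSRs that have no status yet (i.e. are pending).
# Assumptions: oc is on PATH and KUBECONFIG points at the cluster.

approve_pending_csrs() {
  local csr
  # The go-template prints the name of each CSR whose status is empty.
  oc get csr -o go-template='{{range .items}}{{if not .status}}{{.metadata.name}}{{"\n"}}{{end}}{{end}}' |
  while read -r csr; do
    if [ -n "${csr}" ]; then
      oc adm certificate approve "${csr}"
    fi
  done
}

# Run only when oc is installed and a kubeconfig has been exported.
if command -v oc >/dev/null 2>&1 && [ -n "${KUBECONFIG:-}" ]; then
  approve_pending_csrs
fi
```

A newly added node typically raises a second request after its first one is approved, so it may be necessary to run this more than once until `oc get csr` shows nothing pending.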

If you experience certificate-related issues, check whether the certificate has expired. The following command comes in handy for this purpose.

[core@bootstrap ~]$ echo | openssl s_client -connect api-int.ocp4.sjc02.lab.cisco.com:6443 | openssl x509 -noout -text

Finishing up the install

Once the bootstrap process is finished, you'll see the following message.

Remove the bootstrap entries from the /etc/haproxy/haproxy.cfg file and restart the haproxy service.

Validate Install

# export KUBECONFIG=auth/kubeconfig

# oc get nodes

# oc get pods --all-namespaces

Web console

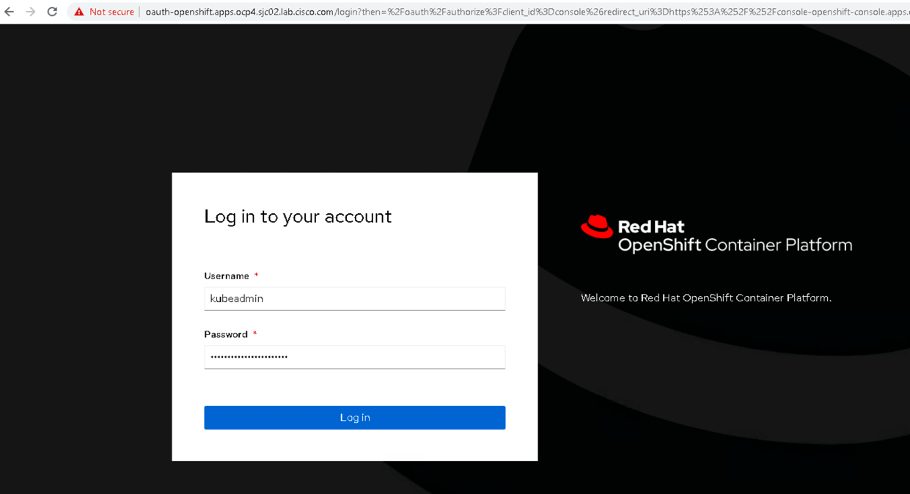

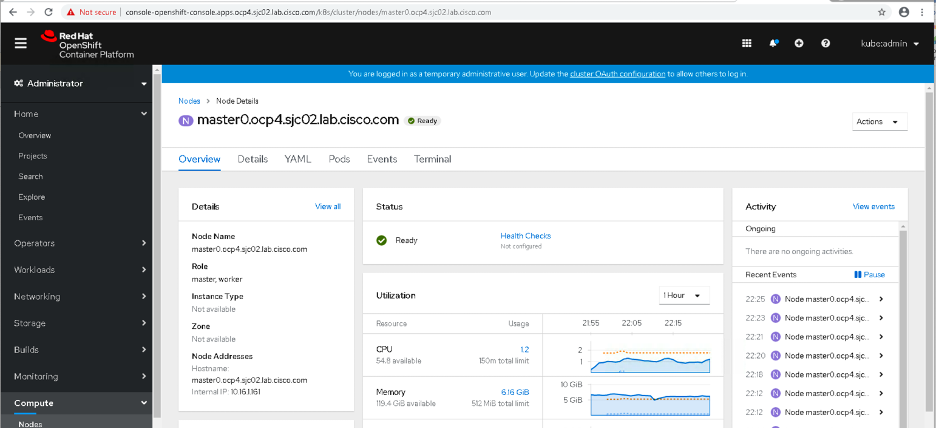

The OpenShift Container Platform web console is a user interface accessible from a web browser. Developers can use the web console to visualize, browse, and manage the contents of projects.

The web console runs as a pod on the master. The static assets required to run the web console are served by the pod. Once OpenShift Container Platform is successfully installed, you can find the URL for the web console and the login credentials for your cluster in the CLI output of the installation program.

To launch the web console, get the kubeadmin password. It is stored in the auth/kubeadmin-password file.

Launch the OpenShift console by entering the following URL in the browser.

https://console-openshift-console.apps.ocp4.sjc02.lab.cisco.com

Specify the username and password. The username is kubeadmin, and the password is the one retrieved from the auth/kubeadmin-password file.

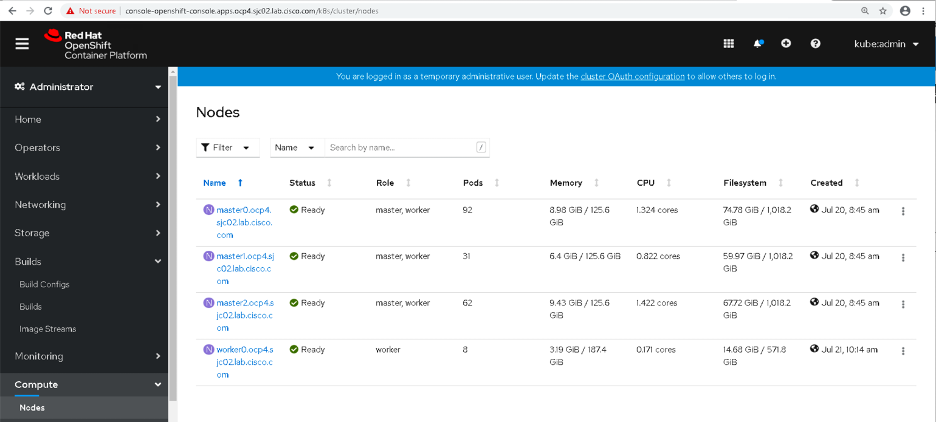

Click Compute-->Nodes

Click on one of the nodes

Adding RHEL compute machines to an OpenShift Container Platform cluster

In OpenShift Container Platform 4.5, you have the option of using Red Hat Enterprise Linux (RHEL) machines as compute machines, which are also known as worker machines, in your cluster if you use a user-provisioned infrastructure installation. You must use Red Hat Enterprise Linux CoreOS (RHCOS) machines for the control plane, or master, machines in your cluster.

Note: If you choose to use RHEL compute machines in your cluster, you take responsibility for all OS lifecycle management and maintenance, including performing upgrades, applying patches, and all other OS tasks.

Prerequisites

- You must have an active OpenShift Container Platform subscription on your Red Hat account

- The base OS should be RHEL 7.7 - 7.8

- Networking requirement: the RHEL compute host must be accessible from the control plane

Note: Only RHEL 7.7-7.8 is supported in OpenShift Container Platform 4.5. You must not upgrade your compute machines to RHEL 8.

Install RHEL

- Download Red Hat Enterprise Linux 7.8 Binary DVD iso from access.redhat.com

- Activate the virtual media in Cisco UCS Manager KVM and map this iso file. Power cycle the server for it to boot from virtual media.

- Follow the instructions to install RHEL 7.8

- Under Network & Host Name, specify the settings as shown in the figure

- Reboot to finish the install.

Before you add a Red Hat Enterprise Linux (RHEL) machine to your OpenShift Container Platform cluster, you must register each host with Red Hat Subscription Manager (RHSM), attach an active OpenShift Container Platform subscription, and enable the required repositories.

# subscription-manager register --username <username> --password <password>

# subscription-manager list --available --matches '*openshift*'

# subscription-manager attach --pool=<pool id>

# subscription-manager repos --disable="*"

# yum repolist

# subscription-manager repos --enable="rhel-7-server-rpms" --enable="rhel-7-server-extras-rpms" --enable="rhel-7-server-ose-4.5-rpms"
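After enabling the repositories, it can be useful to confirm that all three are active. The sketch below assumes the output of subscription-manager repos --list-enabled contains one "Repo ID:" line per enabled repository. The helper name missing_repos is hypothetical.

```shell
#!/bin/sh
# Read `subscription-manager repos --list-enabled` output on stdin and
# print each required repository that is not enabled.
missing_repos() {
    # Collect the IDs from lines of the form "Repo ID:   <id>".
    enabled=$(awk '$1 == "Repo" && $2 == "ID:" { print $3 }')
    for repo in rhel-7-server-rpms rhel-7-server-extras-rpms \
                rhel-7-server-ose-4.5-rpms; do
        # -x matches whole lines, -F treats the name as a fixed string.
        printf '%s\n' "$enabled" | grep -qxF "$repo" || echo "$repo"
    done
}

# Example (run on the RHEL host):
# subscription-manager repos --list-enabled | missing_repos
```

No output means every required repository is enabled.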

Stop and disable firewall on the host

# systemctl disable --now firewalld.service

Note: You must not enable firewalld later. Doing so will block your access to OCP logs on the worker node.

Prepare the installer/bastion node to run the playbook

Perform the following steps on the machine that you prepared to run the playbook. In this deployment, I used the same installer host to setup Ansible.

Make sure you have Ansible installed. This document does not cover installing Ansible.

Note: Ansible 2.9.5 or later is required. You can run # yum update ansible if required.
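The minimum version can be checked with a short script. This is a sketch under two assumptions: the first line of ansible --version looks like "ansible 2.9.10" (as it does for the 2.9 series), and sort -V from GNU coreutils is available. The function names are hypothetical.

```shell
#!/bin/sh
# Succeed if version $1 is greater than or equal to version $2.
# Relies on `sort -V` (GNU coreutils) for version-aware ordering.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Extract the version from the first line of `ansible --version`
# (read on stdin), assumed to look like "ansible 2.9.10".
ansible_version() {
    awk 'NR == 1 { print $2 }'
}

# Example (run on the installer host):
# version_ge "$(ansible --version | ansible_version)" 2.9.5 \
#     || echo "Ansible is older than 2.9.5" >&2
```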

Verify ansible by running the following command.

[root@rhel1 ocp-install]# ansible localhost -m ping
localhost | SUCCESS => {
    "changed": false,
    "ping": "pong"
}

Set up openshift-ansible by cloning the repository:

# git clone https://github.com/openshift/openshift-ansible.git

Create an inventory (hosts) file for the newly added worker nodes

[root@rhel1 ocp-install]# cat openshift-ansible/inventory/hosts
[all:vars]
ansible_user=root
#ansible_become=True
openshift_kubeconfig_path="/root/ocp-install/auth/kubeconfig"

[new_workers]
worker0.ocp4.sjc02.lab.cisco.com
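When several workers are added at once, the inventory can be generated rather than edited by hand. The following sketch reproduces the layout of the hosts file above. The function name make_inventory and the second hostname in the example are hypothetical.

```shell
#!/bin/sh
# Print an inventory for the scaleup playbook.
# $1: path to the cluster kubeconfig; remaining arguments: worker hostnames.
make_inventory() {
    kubeconfig="$1"
    shift
    printf '[all:vars]\n'
    printf 'ansible_user=root\n'
    printf '#ansible_become=True\n'
    printf 'openshift_kubeconfig_path="%s"\n' "$kubeconfig"
    printf '\n[new_workers]\n'
    for host in "$@"; do
        printf '%s\n' "$host"
    done
}

# Example (worker1 is a made-up second node):
# make_inventory /root/ocp-install/auth/kubeconfig \
#     worker0.ocp4.sjc02.lab.cisco.com \
#     worker1.ocp4.sjc02.lab.cisco.com > openshift-ansible/inventory/hosts
```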

Copy the installer's SSH key to the RHEL host for passwordless login

# ssh-copy-id -i ~/.ssh/id_rsa.pub root@worker0.ocp4.sjc02.lab.cisco.com

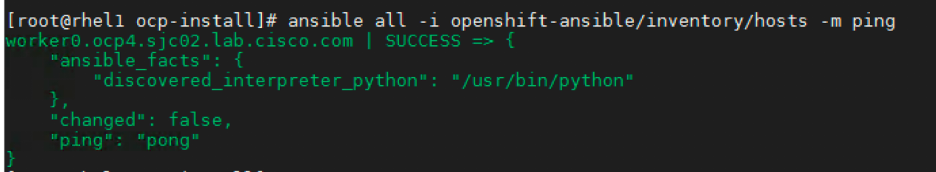

Before running the playbook, make sure you can reach the worker node with an Ansible ping.

# ansible all -i openshift-ansible/inventory/hosts -m ping

Run the playbook

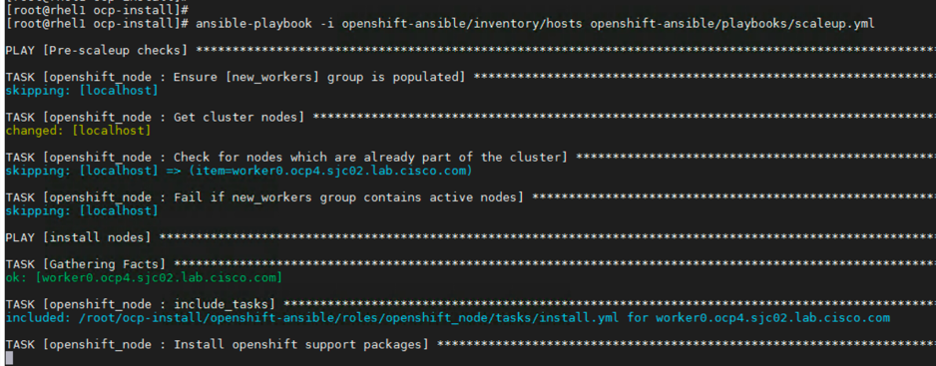

[root@rhel1 ocp-install]# ansible-playbook -i openshift-ansible/inventory/hosts openshift-ansible/playbooks/scaleup.yml

The installation will begin as shown.

You can also SSH to the worker node and watch the progress, or look for issues, with the following commands:

# journalctl -b -f

# tail -f /var/log/messages

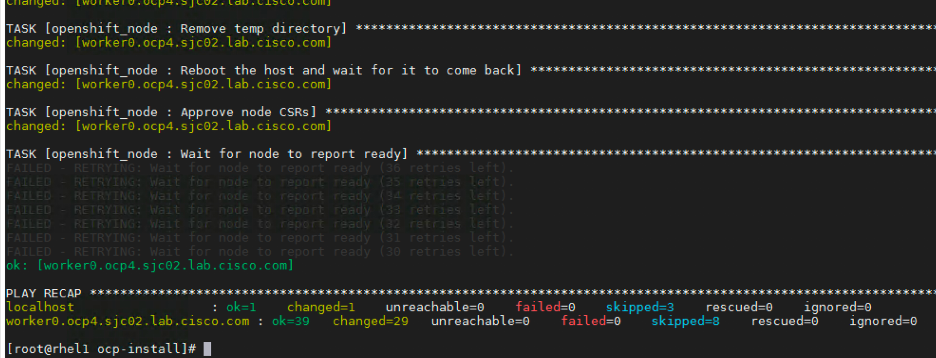

The newly added worker node will reboot at some point.

If no issues occur, the installation completes successfully, as shown below.

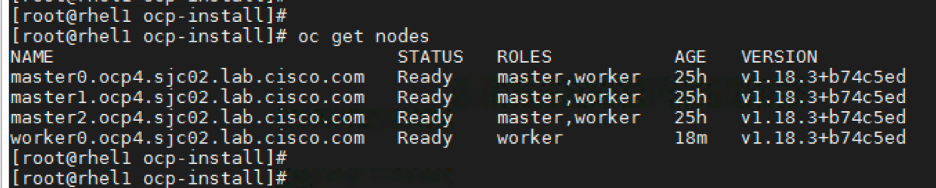

Run the following to verify that the worker node joined the cluster

# oc get nodes
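The check can also be scripted. The sketch below assumes the column layout of oc get nodes --no-headers (node name first, status second). The function name node_is_ready is hypothetical.

```shell
#!/bin/sh
# Read `oc get nodes --no-headers` output on stdin and succeed only if
# the node named $1 is listed with a status of exactly "Ready".
node_is_ready() {
    awk -v node="$1" '
        $1 == node && $2 == "Ready" { found = 1 }
        END { exit !found }
    '
}

# Example (run on the installer host):
# oc get nodes --no-headers | node_is_ready worker0.ocp4.sjc02.lab.cisco.com \
#     && echo "worker0 has joined the cluster"
```

A status such as NotReady or Ready,SchedulingDisabled is treated as not ready.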

Launch the web console and verify that the worker node is added.

Sample Application Deployment in OpenShift

MySQL

In this example, I will deploy MySQL and expose the service to create a route to validate the install.

- Log in to OCP and create a new project

# oc new-project p02

- Create a service by using the oc new-app command

# oc new-app \
    --name my-mysql-rhel8-01 \
    --env MYSQL_DATABASE=opdb \
    --env MYSQL_USER=user \
    --env MYSQL_PASSWORD=pass \
    registry.redhat.io/rhel8/mysql-80

Run the following command to verify that the service is created

# oc get svc

- Expose the service to create the route.

[root@rhel1 ocp-install]# oc expose svc/my-mysql-rhel8-01
route.route.openshift.io/my-mysql-rhel8-01 exposed

- You can get the route information with the following command

# oc get route my-mysql-rhel8-01

- Log in to the worker node and run the following command.

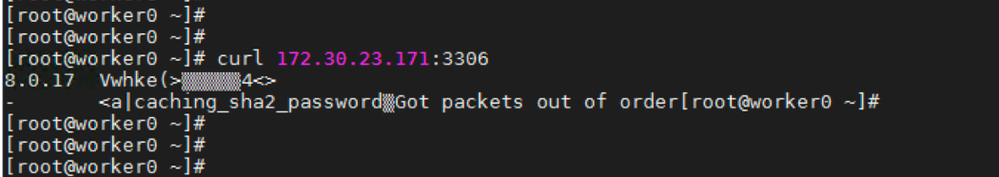

# curl 172.30.23.171:3306

The examples in this section use a MySQL service, which requires a client application. If you get a string of characters containing the “Got packets out of order” message, as shown in the figure below, you are connected to the service.

# mysql -h 172.30.23.171 -u user -p
Enter password:
Welcome to the MariaDB monitor. Commands end with ; or \g.
MySQL [(none)]>

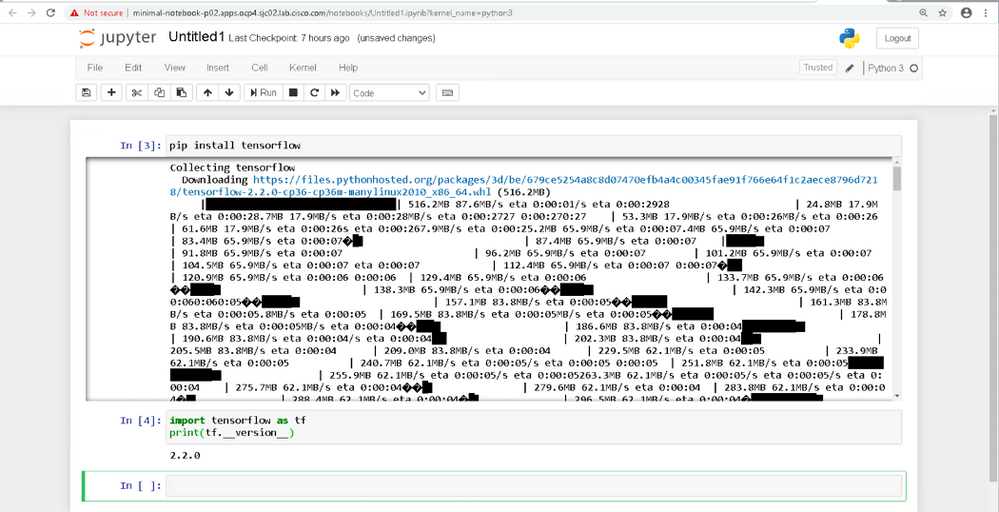
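If neither curl nor a MySQL client is installed on the node, the port can be probed with bash alone. This is a rough sketch, assuming bash and the coreutils timeout command are available. The function name port_open is hypothetical, and the address in the example is the one used above in this deployment.

```shell
#!/bin/sh
# Succeed if a TCP connection to host $1, port $2 opens within 3 seconds.
# Uses bash's /dev/tcp pseudo-device, so bash must be installed.
port_open() {
    # Inside the bash -c script, $0 is the host and $1 is the port.
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Example (run on the worker node):
# port_open 172.30.23.171 3306 && echo "MySQL port is reachable"
```

This only confirms that the port accepts connections. It does not verify credentials or the database itself.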

Jupyter Notebook

Create a notebook image using the supplied image stream definition as below

# oc create -f https://raw.githubusercontent.com/jupyter-on-openshift/jupyter-notebooks/master/image-streams/s2i-minimal-notebook.json

Once the image stream definition is loaded, the project it is loaded into should have the tagged image:

s2i-minimal-notebook:3.6

Deploy the minimal notebook in the project created earlier (“p02”) using the following command:

# oc new-app s2i-minimal-notebook:3.6 --name minimal-notebook --env JUPYTER_NOTEBOOK_PASSWORD=mypassword

The JUPYTER_NOTEBOOK_PASSWORD environment variable will allow you to access the notebook instance with a known password.
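Outside of a lab, a generated password may be preferable to a fixed one such as mypassword. The following is a minimal sketch, assuming /dev/urandom is available. The function name gen_password is hypothetical, and it is intended for short lengths (it draws from 1024 random bytes, so very long passwords may come out shorter than requested).

```shell
#!/bin/sh
# Print a random alphanumeric password of length $1 (default 20).
gen_password() {
    # Keep only letters and digits from 1024 random bytes, then
    # cut the result down to the requested length.
    head -c 1024 /dev/urandom | LC_ALL=C tr -dc 'A-Za-z0-9' \
        | cut -c "1-${1:-20}"
}

# Example (store the value so you can log in to the notebook later):
# pw=$(gen_password)
# echo "$pw"
# oc new-app s2i-minimal-notebook:3.6 --name minimal-notebook \
#     --env JUPYTER_NOTEBOOK_PASSWORD="$pw"
```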

Expose the notebook by creating a route with the following command:

# oc create route edge minimal-notebook --service minimal-notebook --insecure-policy Redirect

To see the hostname, which is assigned to the notebook instance, run:

# oc get route/minimal-notebook

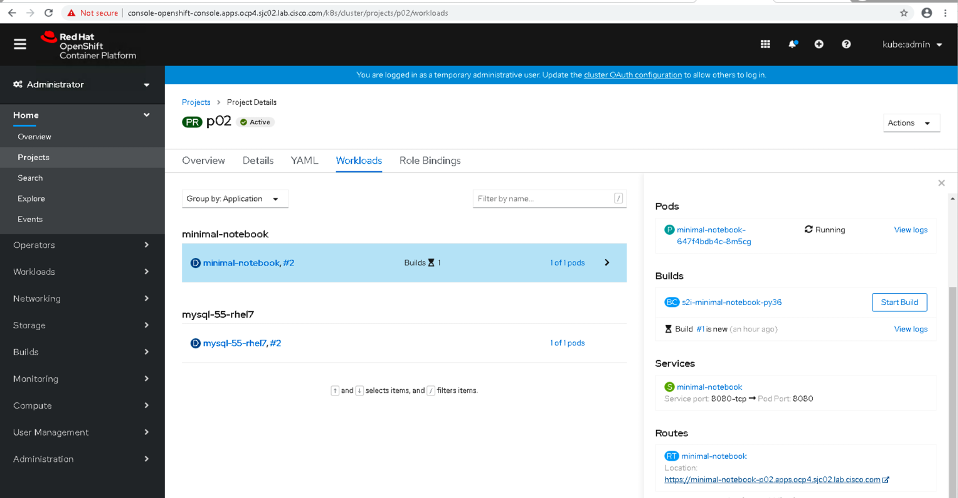

You can also verify the successful creation of the notebook in the web console UI.

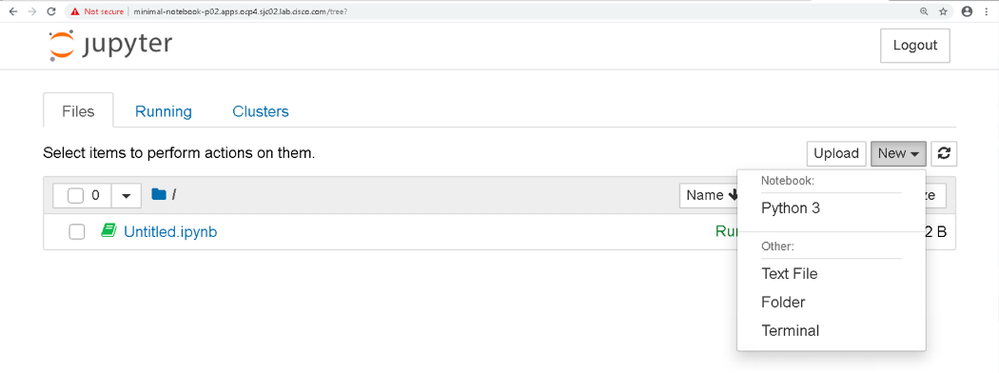

Click the location URL under the Routes section, or open a browser and enter https://minimal-notebook-p02.apps.ocp4.sjc02.lab.cisco.com/. The notebook will launch in the browser as shown.
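The hostname in that URL follows the default OpenShift pattern of route name, project name, and the cluster's apps domain. The sketch below illustrates how it is composed. The function name default_route_host is hypothetical, and the authoritative value is always the one reported by oc get route.

```shell
#!/bin/sh
# Build the default hostname OpenShift assigns to a route:
# <route name>-<project>.<apps domain>.
default_route_host() {
    printf '%s-%s.%s\n' "$1" "$2" "$3"
}

# Example:
# echo "https://$(default_route_host minimal-notebook p02 apps.ocp4.sjc02.lab.cisco.com)/"
# prints https://minimal-notebook-p02.apps.ocp4.sjc02.lab.cisco.com/
#
# To read the actual host from the cluster instead:
# oc get route minimal-notebook -o jsonpath='{.spec.host}'
```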

Enter the password, such as “mypassword”, that you set when creating this application.

Now you can start coding in the Jupyter notebook. For example, click New and then Python 3 as shown below.

You are now ready to code in Python, as shown below.
