Anyone else like pioneering? Trying new things? Tackling problems head-on? Let's be honest, if it were easy, it wouldn't be worth it. I love my job, and being able to overcome new challenges (almost daily) is extremely rewarding. Yesterday was no different.

A counterpart of mine was setting up a Cisco C240 M5 (rack-mount server) with an NVIDIA Tesla P40 GPU for several upcoming trade show demos.

After running into a couple of snags, he reached out describing the issues he was facing. I dug into the hardware and software configurations, and after several hours of banging my head against the keyboard, I started to make some progress.

A Little Bit of Background

With Windows 10 and Server 2016, the host requirements for running virtual desktops and applications have changed significantly. Out of the box, Windows 10 needs ~30% more GPU than Windows 7. The same is true of the applications a typical knowledge or task worker uses day to day (Microsoft Office components can consume upwards of 80% more GPU than previous versions). Websites have also become much more visually rich, and the resources needed to deliver that content have grown accordingly.

I typically attend industry shows like Citrix Synergy, VMware's VMworld, and NVIDIA's GTC to speak with customers/partners about these new challenges and how to overcome them. I am a "visual learner" so I love being able to show the end user experience at these shows.

To demonstrate the benefits, we usually build a hardware appliance with the appropriate hardware/software to support the demo (hence the C240 M5 and NVIDIA P40 mentioned earlier). The end user experience is what drives a successful VDI deployment; "if your end users ain't happy, ain't nobody happy...".

The Problem

As I mentioned earlier, we were having some challenges with the demo server. We tried different versions of ESXi, different drivers, even different VM hardware versions. Nothing worked! The VM would not power on with a shared PCI device (i.e., vGPU), and there were no descriptive errors to help identify why. I was at the point where I wanted to bring up a different host to test, but I held off, knowing this was something we could fix if we spent a little more time on it.

The Solution

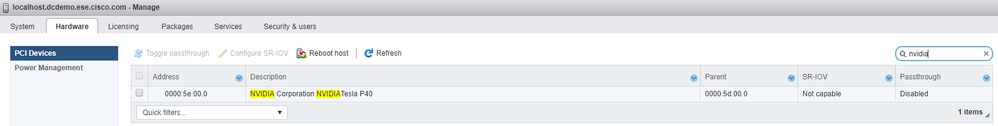

First, you want to make sure passthrough is disabled on the GPU; this is set in the ESXi web console. Ours was showing "not capable". Having deployed vGPU-enabled environments in the past, I thought this was very odd. The GPU supports passthrough, so you would typically see "Disabled" under Hardware > PCI Devices in the host's configuration. See below:

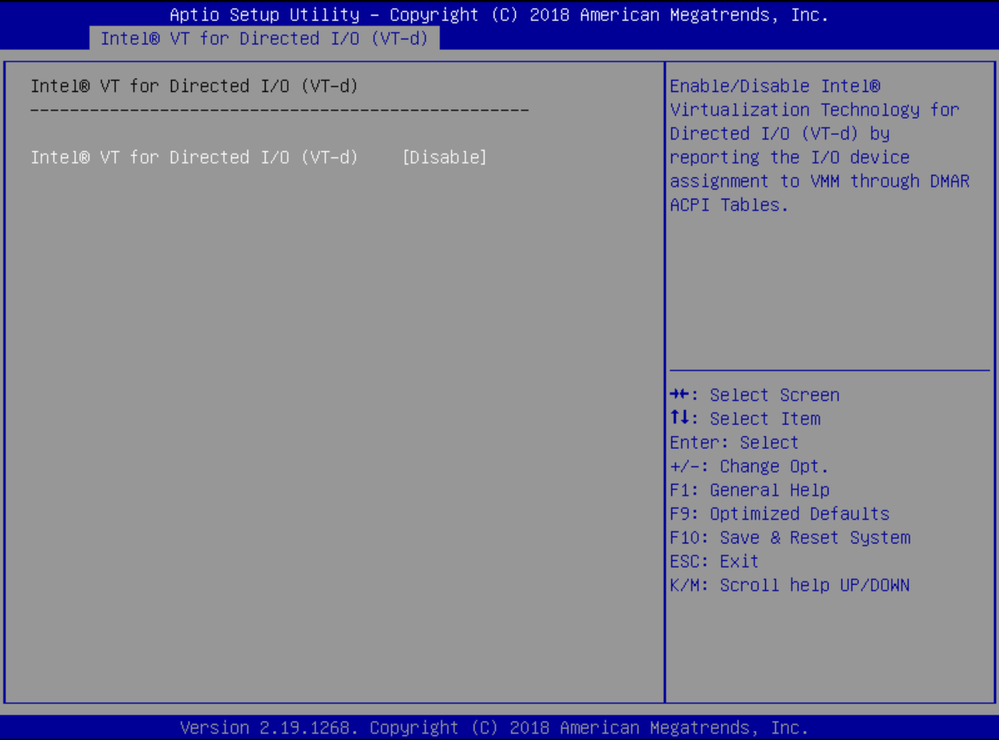

That led me to believe we had the wrong VIB. Not the case. After uninstalling and reinstalling the VIB and testing several versions of it, I decided to go down a different path. While digging through the BIOS, I noticed VT-d was somehow disabled. Cisco's UCS Manager would have made this MUCH easier to deploy at scale with a BIOS policy, but we were working on a standalone server for the appliance:

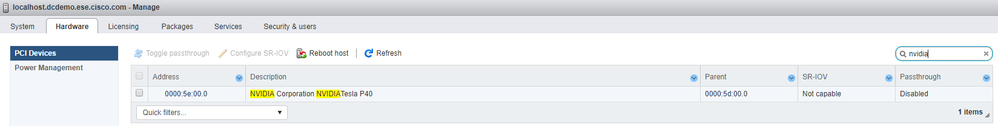
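Before (or instead of) swapping VIBs, it can help to confirm from the ESXi shell which NVIDIA VIB is installed and whether its kernel module actually loaded. Here's a minimal sketch: `esxcli software vib list` and `vmkload_mod -l` are standard ESXi commands, but the assumption that the vGPU manager's module name contains "nvidia" is mine, and the helper names `mentions_nvidia` and `check_nvidia_vib` are hypothetical.

```shell
#!/bin/sh
# Sketch: check that an NVIDIA VIB is installed and its kernel module loaded.
# Assumption: the vGPU manager's kernel module name contains "nvidia".
# mentions_nvidia and check_nvidia_vib are hypothetical helper names.

# Succeeds if any line on stdin mentions NVIDIA (case-insensitive)
mentions_nvidia() {
    grep -qi nvidia
}

check_nvidia_vib() {
    echo "Installed NVIDIA VIBs:"
    esxcli software vib list | grep -i nvidia || echo "  (none found)"
    if vmkload_mod -l | mentions_nvidia; then
        echo "NVIDIA kernel module: loaded"
    else
        echo "NVIDIA kernel module: NOT loaded"
    fi
}

# Only run on an ESXi host, where esxcli and vmkload_mod exist
if command -v esxcli >/dev/null 2>&1; then
    check_nvidia_vib
fi
```

If the VIB is listed but the module is not loaded, that points at something other than the VIB version (in our case, it turned out to be the BIOS).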

Enabling VT-d brought us one step closer to success, but we weren't out of the woods yet. ESXi now allowed us to toggle passthrough. For vGPU, make sure passthrough is disabled (if it is currently enabled, the host requires a reboot after you toggle it off):

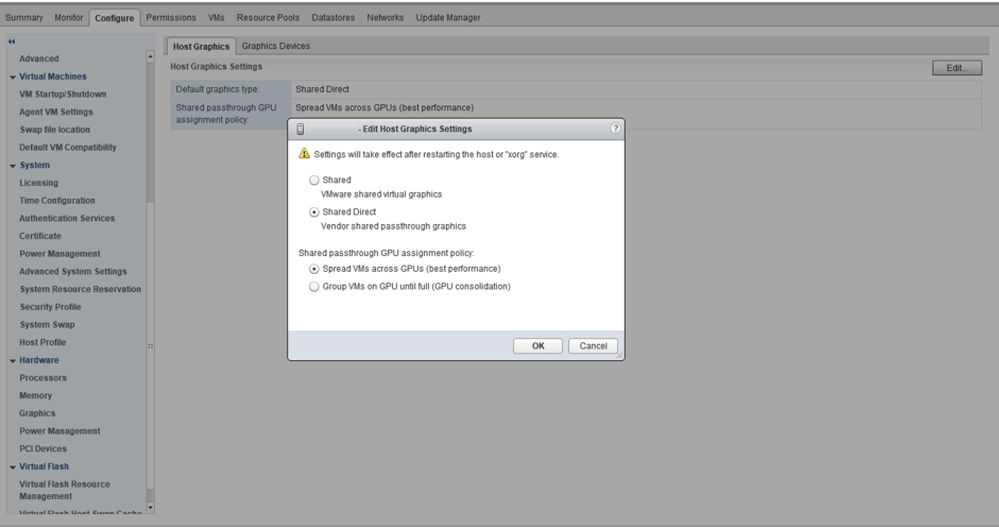

Next, I wanted to make sure vCenter was set to use "Shared Direct" for the host graphics. This setting is in the Graphics section of the host's hardware configuration in vCenter.
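If you prefer the ESXi shell, ESXi 6.5 and later expose the same host graphics setting through `esxcli graphics host`; as far as I know, "Shared Direct" corresponds to the `SharedPassthru` default type. This is a sketch rather than what we ran on the demo box: the function name `set_shared_direct` is hypothetical, and you should confirm the subcommand exists on your build (run `esxcli graphics host` with no arguments) before relying on it. The change typically applies only after an Xorg restart or a host reboot.

```shell
#!/bin/sh
# Sketch: set the host's default graphics type to Shared Direct
# ("SharedPassthru" in esxcli). set_shared_direct is a hypothetical name;
# verify the esxcli subcommand on your ESXi build first.

set_shared_direct() {
    # Show the current host graphics configuration
    esxcli graphics host get || return 1
    # Switch the default graphics type to Shared Direct (vGPU)
    esxcli graphics host set --default-type SharedPassthru || return 1
    # Print the configuration again to confirm the change
    esxcli graphics host get
}

# Only run when esxcli is available, i.e. on an ESXi host
if command -v esxcli >/dev/null 2>&1; then
    set_shared_direct
fi
```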

Even though everything looked good from a configuration standpoint, the vGPU-enabled VM would still not power on. vCenter now generated the error "could not initialize plugin '/usr/lib64/vmware/plugin/libnvidia-vgx.so' for vgpu". Hey! That is something I can Google!

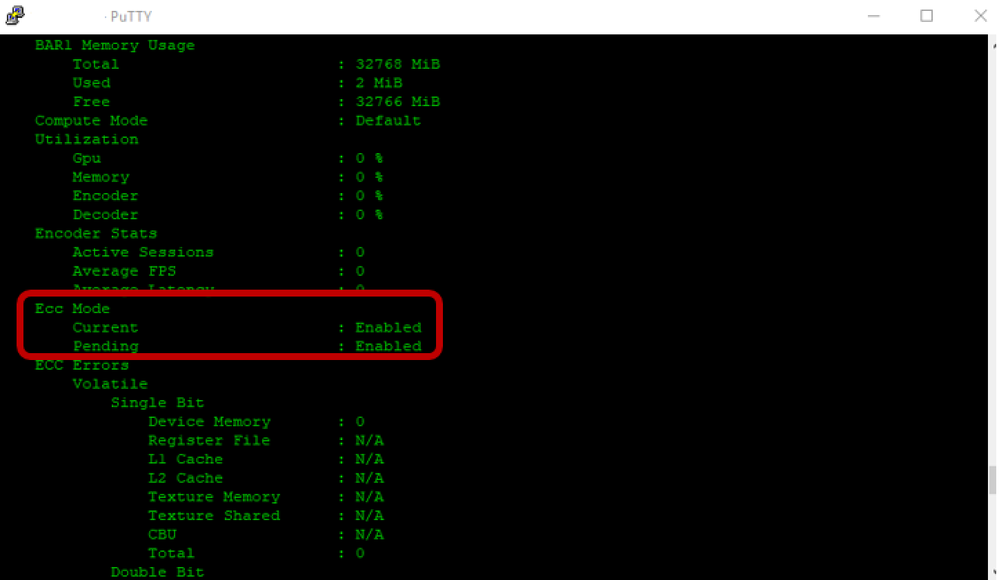
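vCenter's message is terse; the VM's own vmware.log (in the VM's folder on its datastore) usually carries more context around a failed vGPU power-on. A minimal sketch that filters that log for vGPU-related lines; the default path, datastore name, and VM folder are placeholders I made up, and `vgpu_log_lines` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: print vGPU-related lines from a VM's vmware.log.
# The default path is a hypothetical placeholder (datastore and VM folder);
# pass your VM's log path as the first argument instead.
LOG="${1:-/vmfs/volumes/datastore1/my-vgpu-vm/vmware.log}"

# Case-insensitive filter for vGPU / NVIDIA plugin keywords read from stdin
vgpu_log_lines() {
    grep -i -E 'vgpu|nvidia|vgx'
}

if [ -r "$LOG" ]; then
    vgpu_log_lines < "$LOG"
else
    echo "Cannot read $LOG; pass the VM's vmware.log path as an argument." >&2
fi
```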

Further investigation pointed to ECC being enabled on the GPU. For vGPU, ECC must be disabled on Pascal-based GPUs. To check the status, SSH into the host (if you're on a Windows device, download PuTTY; it rocks for saving multiple SSH sessions) and run nvidia-smi -q:

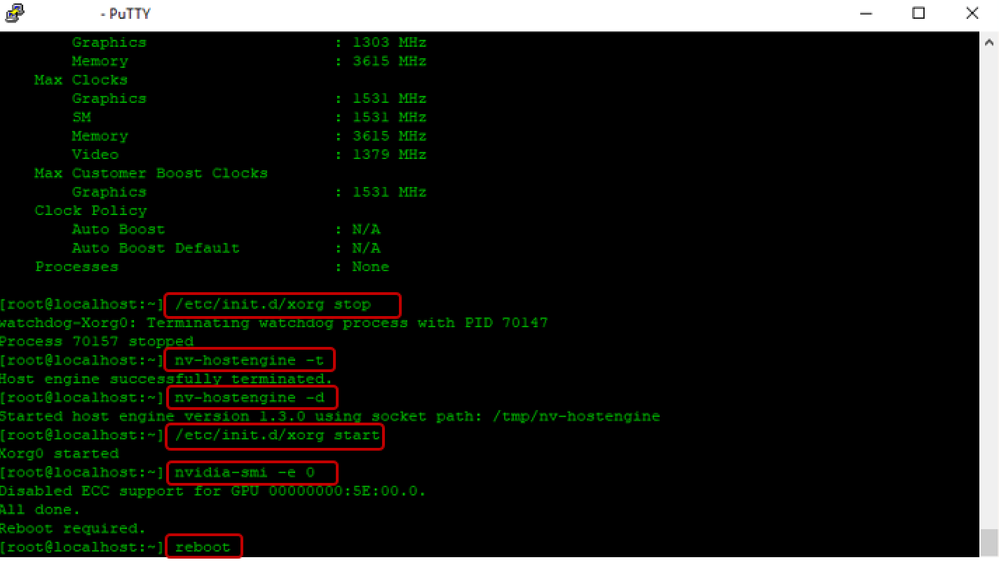
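If you'd rather not scroll through the full nvidia-smi -q output, nvidia-smi can also report just the ECC fields as CSV. The sketch below assumes the host's nvidia-smi supports `--query-gpu` with the `ecc.mode.current` and `ecc.mode.pending` fields (check `nvidia-smi --help-query-gpu` on your vGPU manager build); the `ecc_report` helper is hypothetical and reads the CSV on stdin so it can be tried without a GPU.

```shell
#!/bin/sh
# Sketch: summarize ECC mode per GPU from nvidia-smi's CSV query output.
# Assumes this nvidia-smi build supports --query-gpu with the
# ecc.mode.current and ecc.mode.pending fields. ecc_report is hypothetical.

# Reads "index, current, pending" lines on stdin and prints a verdict per GPU
ecc_report() {
    awk -F',' 'NF >= 3 {
        # Trim the spaces nvidia-smi puts after each comma
        for (i = 1; i <= 3; i++) gsub(/^[ \t]+|[ \t]+$/, "", $i)
        if ($2 == "Disabled" && $3 == "Disabled") status = "OK for vGPU"
        else if ($3 == "Disabled") status = "reboot to apply"
        else if ($3 == "[N/A]") status = "ECC not reported"
        else status = "disable ECC"
        printf "GPU %s: current=%s pending=%s -> %s\n", $1, $2, $3, status
    }'
}

# Only query the hardware when nvidia-smi is present (i.e. on the host)
if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi --query-gpu=index,ecc.mode.current,ecc.mode.pending \
        --format=csv,noheader | ecc_report
fi
```

Running it again after the reboot is a quick way to confirm every GPU reads "OK for vGPU".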

Now you may be asking, "How the heck do I disable ECC?" (I know I was...). Thanks to a blog post by @citrixguyblog, the process is really quite simple. It comes down to a few commands run over SSH (make sure you vMotion any powered-on VMs off the host BEFORE rebooting):

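```shell
# Stop the Xorg service on the host
/etc/init.d/xorg stop

# Terminate the NVIDIA host engine, then start it again
nv-hostengine -t
nv-hostengine -d

# Start the Xorg service again
/etc/init.d/xorg start

# Disable ECC on all GPUs (the new mode stays pending until the next reboot)
nvidia-smi -e 0

# Reboot the host so the change takes effect (vMotion running VMs off first!)
reboot
```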
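Since the last command reboots the host, a small pre-flight check can save you from taking down VMs you forgot to move. A minimal sketch, assuming `esxcli vm process list` prints one "World ID:" line per running VM; the helper names `count_running_vms` and `preflight` are hypothetical.

```shell
#!/bin/sh
# Sketch: pre-flight check before running the ECC commands and rebooting.
# Assumes `esxcli vm process list` prints one "World ID:" line per running VM.
# count_running_vms and preflight are hypothetical helper names.

# Counts "World ID:" lines in esxcli-style output read from stdin
count_running_vms() {
    grep -c 'World ID:'
}

preflight() {
    running=$(esxcli vm process list | count_running_vms)
    if [ "$running" -gt 0 ]; then
        echo "Refusing to continue: $running VM(s) still running. vMotion them off first." >&2
        return 1
    fi
    echo "No running VMs found; OK to run the ECC commands and reboot."
    # Optionally put the host in maintenance mode before the reboot:
    # esxcli system maintenanceMode set --enable true
}

if command -v esxcli >/dev/null 2>&1; then
    preflight || exit 1
fi
```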

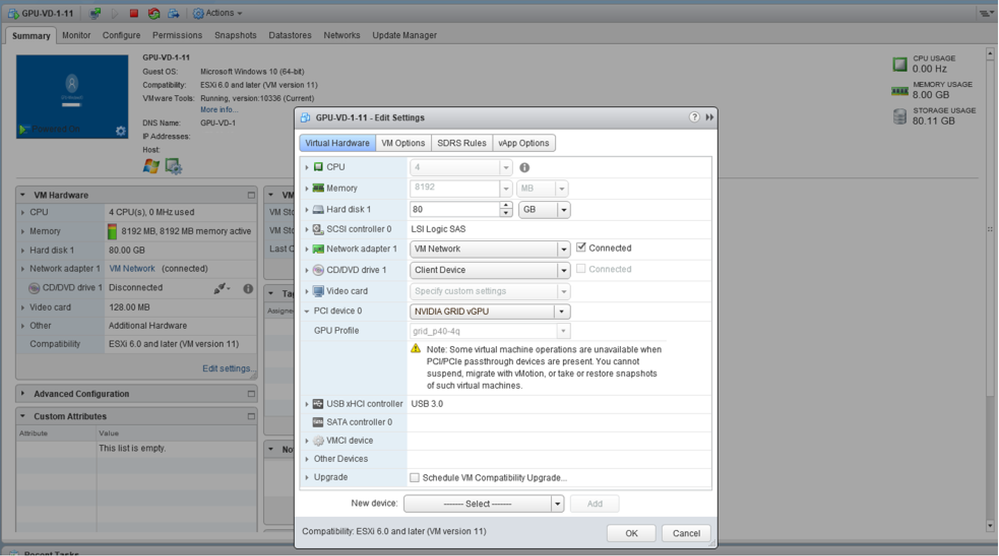

Eureka! We NOW have a vGPU-enabled VM that powers on!

The Reason

Hopefully this post will help folks who run into similar scenarios. As I mentioned earlier, graphics-accelerated VDI adoption is growing significantly. Having a global responsibility has given me an incredible opportunity to work with customers all around the world, and hearing their stories and use cases for VDI has been eye-opening.

Some may say VDI has been around for a while and not much has changed, but if you consider the way you work (the devices you carry, the peripherals you use, and the applications that enable you to perform your business functions), you can see that the end user experience has changed drastically. We walk around with 4K-capable phones in our pockets, we have 4K monitors sitting on our desks, training videos are delivered in HD, we leverage video conferencing daily, and now we have Windows 10, which has an extremely elegant UI. The business end user environment demands excellence; our desktop delivery model must follow suit.