- Cisco Community

ASR 9000 series PPP session limit

01-24-2014 10:37 AM

Hello!

Guys, could you please tell me how many PPP sessions an ASR 9000 can terminate? We are planning to build an SP network. I know 7600 series routers can terminate 64k PPP sessions per box (16K per ESP+ module, 8K per SIP module).

Unfortunately I could not find any information regarding this matter anywhere.

I believe the A9K-SIP-700 module can do that.

Thanks a lot

01-25-2014 02:10 PM

Hi Sergei,

I also responded to your email, but I'm posting this response so that everyone who might be interested in this topic can see it:

There is no compatibility between the SIP-700 and the 99xx chassis (and none is planned either).

If TDM is a requirement, you are probably best off using the SIP-700 with the 9010/9006, or using the 99xx chassis with a satellite based on the ASR901/903. (Note that this is different from the ASR9001: the 90x platforms are smaller chassis that can be used as satellites of the ASR9000 series.)

cheers!

xander

01-25-2014 02:26 PM

Thank you Alexander,

So if we go with the 9010 platform and use the SIP-700, how many PPP sessions can it terminate?

And for the 9922/9912, is there perhaps a different module for PPP (and what are its limitations)?

Actually, can any Cisco router terminate 256k PPPoE sessions and also support 40 and 100 GE cards? We are looking for a BRAS solution and are currently considering 4 x 7606, each of which can terminate up to 64k PPP sessions.

Can you advise on that, please?

Really appreciated

01-26-2014 04:35 AM

Hi Sergei,

So if you are looking at pure PPP, in a sort of leased line mode (no subscribers), then you need the SIP-700 to support channelized and POS-based interfaces.

With the SIP-700 and, say, a 2xOC12 SPA (which can also do STM), you can create 1000 interfaces per SPA, and since the SIP-700 can carry 2 of those SPAs, that gives 2000 per SIP.

An interface is really the equivalent of a channel group here, so each of those interfaces can have a number of DS0s in it, depending on the speed required.
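As a rough illustration of that sizing, here is a minimal Python sketch. It assumes only the figures quoted above (1000 interfaces per SPA, 2 SPAs per SIP-700) plus the standard 64 kbit/s per DS0; the function names are hypothetical, and it ignores per-SPA controller limits and the fact that a channel group cannot exceed the DS0s available in its T1/E1.

```python
import math

# Figures quoted in the reply above (assumed; check the SIP-700/SPA documentation
# for your exact SPA model and software release).
INTERFACES_PER_SPA = 1000
SPAS_PER_SIP = 2
DS0_KBPS = 64  # one DS0 timeslot carries 64 kbit/s


def ds0s_needed(required_kbps: int) -> int:
    """DS0 timeslots a channel group needs to carry at least required_kbps."""
    if required_kbps <= 0:
        raise ValueError("required_kbps must be positive")
    return math.ceil(required_kbps / DS0_KBPS)


def ppp_interfaces_per_sip() -> int:
    """Channelized PPP interfaces (channel groups) per SIP, using the quoted figures."""
    return INTERFACES_PER_SPA * SPAS_PER_SIP


if __name__ == "__main__":
    print("PPP interfaces per SIP:", ppp_interfaces_per_sip())
    for kbps in (64, 256, 1984):
        print(f"{kbps} kbit/s needs {ds0s_needed(kbps)} DS0(s)")
```

For example, a 256 kbit/s circuit maps to a channel group of 4 DS0s, and a full 1984 kbit/s (31 timeslots) uses every data timeslot of an E1.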

If you are looking at PPPoE sessions for subscriber termination, we support that on the Typhoon linecards.

We can currently do 128k sessions per chassis, 64k per linecard and 32k per NPU.

(Each LC has a number of NPUs: the MOD80 has 2 NPUs, the 24x10 has 8 NPUs, etc.)

This is being built out to 256k, and there is a roadmap to go even further than that too!

I just want to make sure we separate channelized PPP circuits (requiring the SIP) from PPPoE subscribers (aka BNG).

It can all coexist in the same chassis, although, as you know (now), the SIP is not supported in the 99xx chassis.

Am I making things easier to understand, or is it still tricky?

regards

xander

01-26-2014 05:36 AM

Thank you, great explanation.

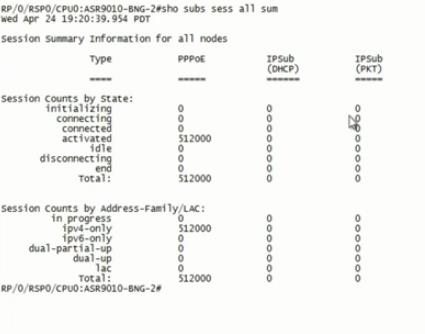

Considering the PPPoE limit of 512K.

01-26-2014 07:09 AM

Hi Sergei, thanks, and yeah, the subscriber scale for PPPoE and IPoE sessions is massive.

With the upcoming XR 5.1.1 we will also support linecard-based subscribers, which means that the whole control plane runs completely distributed over the LCs. That would multiply the subscriber scale even further in a single chassis, so we're not done there yet.

cheers!

xander

02-03-2014 02:02 AM

Hi Alexander,

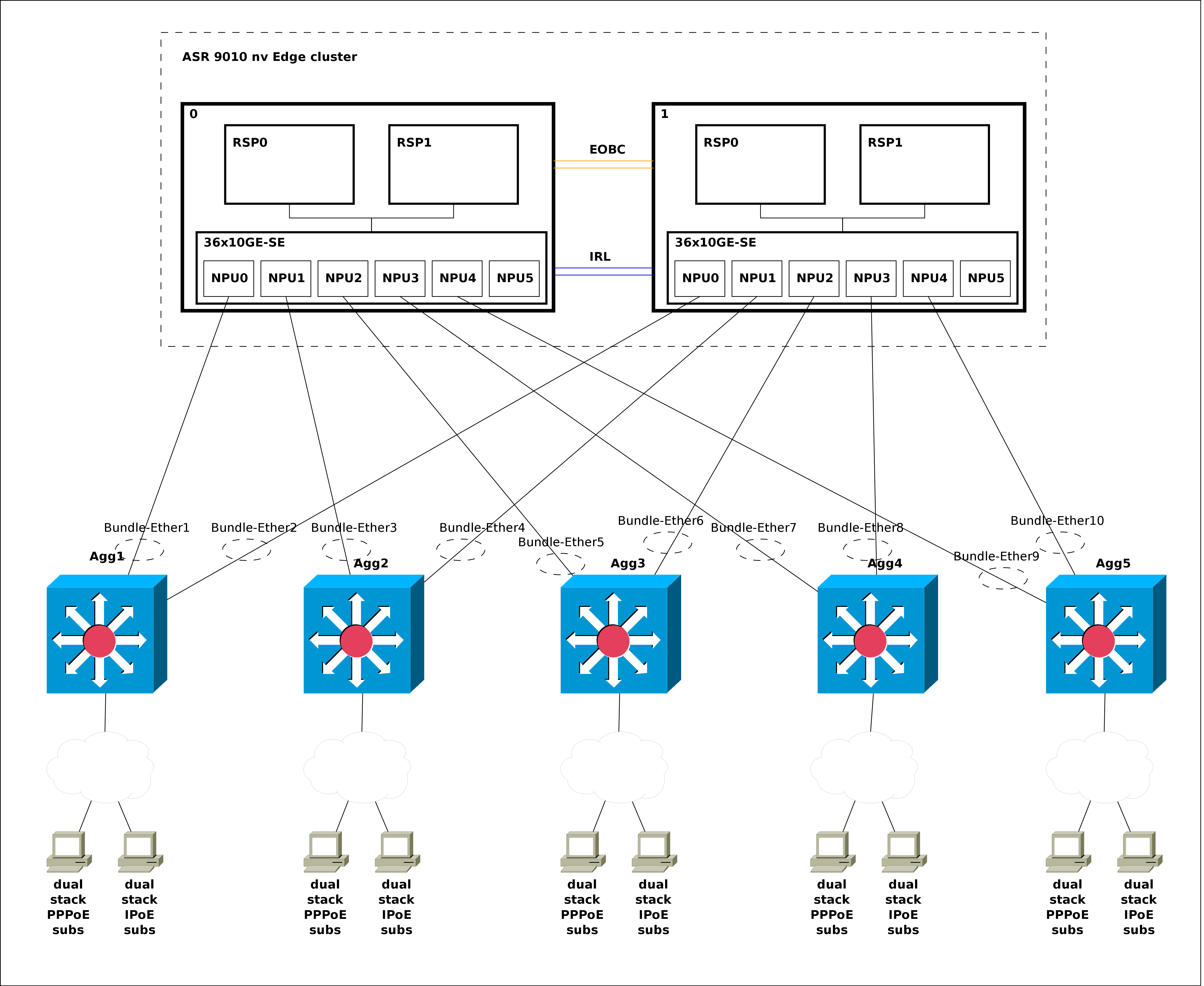

We are considering the following design for BNG solution in our network.

As shown in the diagram, we have a cluster of two ASR9010s, each chassis with a 36x10G LC and two RSP440s. We are going to terminate subscriber sessions on the RSP only, because we need pQoS and service accounting for each customer.

What will the limits be in terms of subscriber sessions for this design? We believe that we can achieve 128K in total and 32K per aggregation. Are we right? Does the limitation of 64K subs per LC apply in our case?

Thank you.

02-03-2014 04:11 AM

Hi olegch,

- There is a limitation of 32k subs per NPU.

- 32k per physical interface.

- A limitation of 8k per VLAN exists when shaped QoS is in use, but with 4 VLANs you can still reach the 32k.

- 64k per linecard (so you need at minimum 2 NPUs).

- 128k per system today (which requires at minimum 2 linecards terminating subscribers).

These per-LC and system-wide numbers will increase as we go forward. The 32k per NPU is a hard resource limit of the NPU.

So as long as you keep it within these limits in whatever favorite spread you'd like, things will be fine.
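Since these limits all apply at the same time, a plan has to satisfy every one of them. As a hedged illustration, the following Python sketch checks a hypothetical per-VLAN subscriber plan against the numbers quoted in this reply. The `VlanLoad` record, `check_plan` function and interface names are made up for this example, and it models plain (non-bundle) interfaces only: each VLAN is assumed to sit on exactly one NPU.

```python
from collections import defaultdict
from dataclasses import dataclass

# Limits as quoted in this reply (Typhoon LCs, early 2014); assumed, and expected to grow.
MAX_PER_NPU = 32_000
MAX_PER_INTERFACE = 32_000
MAX_PER_SHAPED_VLAN = 8_000
MAX_PER_LC = 64_000
MAX_PER_SYSTEM = 128_000


@dataclass(frozen=True)
class VlanLoad:
    """Planned subscribers on one VLAN (hypothetical planning record, not an XR object)."""
    lc: str          # linecard location, e.g. "0/0"
    npu: int         # NPU on that linecard that owns the port
    interface: str   # physical port name
    vlan: int
    subs: int
    shaped_qos: bool = False


def check_plan(loads):
    """Return a list of limit violations; an empty list means the plan fits."""
    problems = []
    per_npu = defaultdict(int)
    per_if = defaultdict(int)
    per_lc = defaultdict(int)
    for load in loads:
        if load.shaped_qos and load.subs > MAX_PER_SHAPED_VLAN:
            problems.append(f"VLAN {load.interface}.{load.vlan}: {load.subs} subs with shaped QoS")
        per_npu[(load.lc, load.npu)] += load.subs
        per_if[load.interface] += load.subs
        per_lc[load.lc] += load.subs
    for counts, limit, kind in (
        (per_npu, MAX_PER_NPU, "NPU"),
        (per_if, MAX_PER_INTERFACE, "interface"),
        (per_lc, MAX_PER_LC, "linecard"),
    ):
        for key, n in counts.items():
            if n > limit:
                problems.append(f"{kind} {key}: {n} > {limit}")
    total = sum(per_lc.values())
    if total > MAX_PER_SYSTEM:
        problems.append(f"system: {total} > {MAX_PER_SYSTEM}")
    return problems


if __name__ == "__main__":
    # Four shaped VLANs of 8k on one 10G port: exactly the 32k NPU/interface limit.
    plan = [VlanLoad("0/0", 0, "TenGigE0/0/0/0", v, 8_000, True) for v in range(100, 104)]
    print(check_plan(plan))  # []
```

Adding a fifth 8k VLAN to the same port in this model trips both the per-interface and the per-NPU limit, which is why the 8k-per-VLAN and 32k-per-NPU numbers line up at exactly 4 VLANs.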

regards

xander

02-03-2014 07:50 AM

Hi xander,

In our design we have one LC in each cluster node, and we terminate subs on the RSP (not the LC). And according to your BNG deployment guide:

"When you are using bundle interfaces, all features enabled on the subscriber are programmed to the NP's where the bundle has members on.

So running a bundle with 2 members effectively creates the subscriber on both NPU's for those members, because of the phenomenal failover support it has."

So in our particular design, will we get only 64K total subs (because we are restricted by the "64K per LC" limitation and every session takes resources on both LCs) or 128K?

02-03-2014 08:37 AM

Correct: when you are using a bundle, the resources are replicated and programmed on all NPUs that have members in that bundle.

So, for instance, if you have a MOD80 linecard with 2 members in a bundle, and each member is located on a different NPU, then you will be limited to 32k subs TOTAL on that linecard, because the first limit ("There is a limitation of 32k subs per NPU") is already met.

And well stated indeed! The disadvantage is that we burn the same structures on multiple NPUs, which duplicates the programming, but it does provide the advantage of seamless failover between bundle members.

To continue with your design: if I had a 36x10 card, which has 6 NPUs, and 2-member bundles, I could easily reach 64k even if the bundle members are spread over 2 NPUs.

I would need 2 linecards for this, obviously.

With a MOD80, which has 2 NPUs, and 2-member bundles whereby one member connects to each NPU, I am limited to 32k subs on that LC because of the first limit. But if I have 4 linecards like this, I can have 4x32k subs, no problemo, because then we hit the system scale limit (4x32k = 128k).

Not sure if this makes it clearer or whether I am confusing you more, but let me know if this makes it more complex and I'll try again.

regards

Xander Thuijs CCIE #6775

Principal Engineer

ASR9000, CRS, NCS6000 & IOS-XR

02-04-2014 12:45 AM

So the only way to get 128K is to change the bundles as shown in diagram 2 (actually making 2 bundles from every aggregation, each bundle with only one member). Right?

PS: But we lose some of the seamless failover in this case and will have some additional headaches with IPoE subs.

PPS: The second way, of course, is adding two more LCs.

02-04-2014 04:48 AM

Hi Olegh,

you are correct.

In this mode you lose the seamless failover, and in this model the cluster doesn't buy you much...

If you want to make the utmost use of redundancy, you probably want to add 2 linecards so you can bundle over the 2 racks.

Or limit this design to 64k subs, whereby you bundle to each LC in each rack.

If those are not options, you might as well remove the cluster from this and keep the design as in your picture, as you'll save some cluster licenses and IRL ports in that case.

regards!!

xander
