ECMP over EVPN VXLAN fabric with vPC

12-13-2020 11:10 PM

Hi!

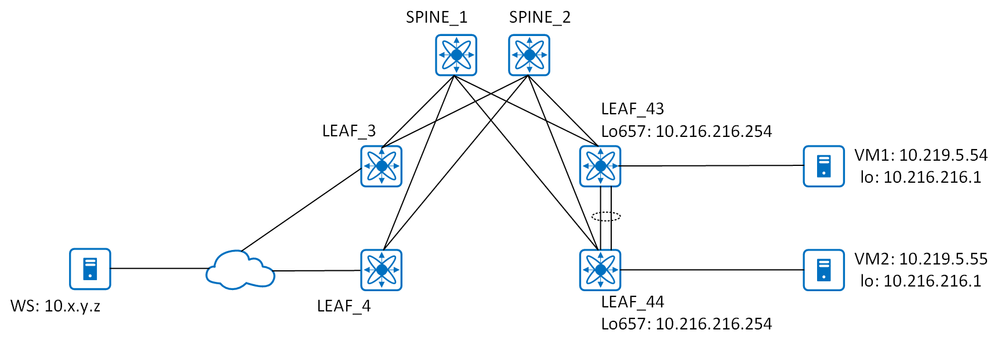

I have the network shown in the diagram above.

The initial task is to make a service deployed on both hosts (VM1 and VM2), each exposing it on a loopback interface with the address 10.216.216.1, available from any other host within the same VRF in the EVPN fabric and from networks behind the cloud (let's say legacy data centers).

What I did: the Nexus 9000-based EVPN fabric is up and running. In a nutshell: iBGP, the spines are route reflectors, and all leaf pairs form vPC peerings. On LEAF_43 and LEAF_44 I configured eBGP peering from loopback interfaces to the physical addresses of the VMs. Then I ran into these problems:

Everything works as expected when only one eBGP session is active, with whichever VM and whichever leaf, that is, when only one route is installed throughout the whole fabric. But when I enable the eBGP session for the second VM and leaf pair, traffic gets stuck and I can't figure out why or where. All necessary routes are in place and look OK. Inside the EVPN fabric, a traceroute from any network shows just the default gateway. From external locations, the traceroute stops at LEAF_4.
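For readers following along, here is a minimal sketch of what the leaf-side peering described above might look like on NX-OS. It is not the actual configuration from this fabric: the AS numbers, VRF name, loopback number, and neighbor address are all hypothetical placeholders, and the `maximum-paths` lines are an assumption about how ECMP would be enabled rather than something stated in the post.

```
! Hypothetical values throughout: AS 65001 (fabric), AS 65100 (VM),
! VRF TENANT-A, loopback216, and neighbor 10.10.10.11 are placeholders.
router bgp 65001
  vrf TENANT-A
    address-family ipv4 unicast
      ! Export VRF routes (including 10.216.216.1/32 learned from the VM) into EVPN
      advertise l2vpn evpn
      ! Assumed: allow more than one equal-cost path for eBGP and iBGP routes
      maximum-paths 2
      maximum-paths ibgp 2
    ! eBGP session from the leaf loopback to the VM's physical address
    neighbor 10.10.10.11
      remote-as 65100
      update-source loopback216
      ebgp-multihop 2
      address-family ipv4 unicast
```

With a session like this on each leaf of the vPC pair, both leafs would learn 10.216.216.1/32 locally over eBGP and also receive it from the vPC peer via EVPN, which is the situation where the problem shows up.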

It looks like I am hitting a platform limitation, and perhaps not just one but several, but I could not find anything in the Config Guides or the available Design Guides on how this specific case should be implemented. If anyone has a link to such a document, please share it. I want to understand what is going wrong in this scenario and how to get this setup working.

A little background. I have already implemented something similar in legacy data centers based on 3-tier vPC fabrics, and everything runs smoothly there. I also have exactly the same setup in the same EVPN fabric with a slight difference: it so happened that both VMs (for another project, with different addresses) ended up on different leafs in different racks, that is, in different vPC pairs. In that setup the eBGP peering is also done not with loopback addresses on the leafs but with the anycast gateway address, and everything works OK. I tried configuring my current problem VMs to peer with the anycast gateway address, but that does not help either. The only thing that makes it work is moving the VMs apart to different vPC pairs. Then everything works fine, no matter where the eBGP connection point is configured on the leafs: loopback interface or anycast gateway SVI.

What am I doing wrong, why, and what is the best way to solve this task (even if it requires rebuilding the network in some way)?

If needed, I can share any config part or 'show' outputs.

12-24-2021 01:44 AM

Better late than never.

After further investigation it turned out to be a bug: https://bst.cloudapps.cisco.com/bugsearch/bug/CSCvt76173/?rfs=iqvred

Workaround: rebooting both border leafs helped.

01-09-2022 09:51 AM

Well, quite an interesting development of the situation, I must admit.

I have seen similar things on N9K-C9396PX and N3K-C3164Q. In my case I had been using a vPC pair as a spine for the underlay OSPF network towards a downstream layer of switches. That is, I didn't have a pure spine layer doing only routing; the switches acting as spines also had an NVE VTEP, and some of them were vPC nodes. This was a nightmare that always led to part of the traffic being blackholed with no pattern to it: random blackholing of random traffic in random VXLAN-bridged VLANs between VTEPs. I only managed to fix this by dedicating a spine layer to the spine function alone, and it has been stable so far. I have even built two or three different spine layers because my topology is relatively big (say, a data center main spine, then a cage spine, then a ToR spine in each rack, and then vPC nodes connected to the spines, and so on). Even that kind of multi-spine deployment works far better than the downstream routing through vPC that I had been doing until then.
