Ask the Expert: Troubleshooting Nexus 5000/2000 series switches

06-18-2012 08:27 AM - edited 03-01-2019 07:07 AM

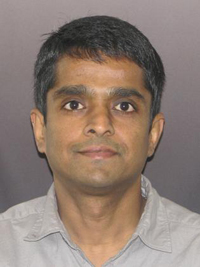

With Prashanth Krishnappa

Welcome to the Cisco Support Community Ask the Expert conversation. This is an opportunity to learn about how to troubleshoot the Nexus 5000/2000 series switches.

Prashanth Krishnappa is an escalation engineer for data center switching at the Cisco Technical Assistance Center in Research Triangle Park, North Carolina. His current responsibilities include escalations in which he troubleshoots complex issues related to the Cisco Catalyst, Nexus, and MDS product lines, as well as providing training and authoring documentation. He joined Cisco in 2000 as an engineer in the Technical Assistance Center. He holds a bachelor's degree in electronics and communication engineering from Bangalore University, India, and a master's degree in electrical engineering from Wichita State University, Kansas. He also holds CCIE certification (#18057).

Remember to use the rating system to let Prashanth know if you have received an adequate response.

Prashanth might not be able to answer each question due to the volume expected during this event. Remember that you can continue the conversation on the Data Center sub-community discussion forum shortly after the event. This event lasts through June 29, 2012. Visit this forum often to view responses to your questions and the questions of other community members.

06-19-2012 09:26 AM

Hi Prashanth,

I have migrated my FCoE setup from a pair of 5020s to a pair of Nexus 5500s, and my vFCs are not coming up. The configurations have been triple-checked and they are identical. Can you help?

Thanks.

06-19-2012 02:25 PM

Hello Sarah

Unlike the 50x0, on the 5500s running releases earlier than 5.1(3)N1(1), the FCoE queues are not created by default when you enable "feature fcoe".

Make sure you have the following policies under system qos:

```
system qos
  service-policy type queuing input fcoe-default-in-policy
  service-policy type queuing output fcoe-default-out-policy
  service-policy type qos input fcoe-default-in-policy
  service-policy type network-qos fcoe-default-nq-policy
```

Thanks

-Prashanth

03-02-2014 12:47 AM

Hi,

I have a pair of Nexus 5Ks configured as vPC peers. I connected a 3750 switch to this pair with a trunk port, and I've configured the same VLANs on the vPC peers as on the 3750.

I run EIGRP on the 3750 and I want to migrate all routing from the 3750 to the new Nexus switches. The 3750 can see the Nexus switches' HSRP IP address on one of the VLANs, and vice versa.

Can I use this VLAN interface on both sides for the EIGRP neighborship, or do I have to create L3 interfaces on the Nexus switches instead of using the existing trunk port?

03-03-2014 12:17 AM

Hi Kamin-ganji.

I believe the same restrictions apply to the N5K as to the N7K, but check the design guides on cisco.com. The following is from the N7K guide:

Layer 3 and vPC: Guidelines and Restrictions

Attaching an L3 device (for instance, a router or a firewall configured in routed mode) to a vPC domain using a vPC is not a supported design because of the vPC loop-avoidance rule.

To connect an L3 device to a vPC domain, simply use L3 links from the L3 device to each vPC peer device.

The L3 device will then be able to establish L3 routing protocol adjacencies with both vPC peer devices.

One or more L3 links can be used to connect the L3 device to each vPC peer device. The Nexus 7000 series supports L3 Equal Cost Multipathing (ECMP) with up to 16 hardware load-sharing paths per prefix. Traffic from a vPC peer device to the L3 device can be load-balanced across all the L3 links interconnecting the two devices.

Using Layer 3 ECMP on the L3 device makes effective use of all Layer 3 links from this device to the vPC domain. Traffic from the L3 device to the vPC domain (i.e., vPC peer device 1 and vPC peer device 2) can be load-balanced across all the L3 links interconnecting the two entities.
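Applied to the 3750 migration, a minimal sketch of one routed link on one Nexus 5500 vPC peer could look like the following. It assumes the 5500 has the Layer 3 daughter card and the Layer 3 license your release requires; the interface (Ethernet1/20), the /30 subnet, and EIGRP AS 100 are hypothetical placeholders, not values from this thread. The second vPC peer would get its own routed link and subnet to the 3750, and the 3750 side needs a matching routed port (`no switchport`) in the same EIGRP AS.

```
! Hypothetical values: Ethernet1/20, 10.255.0.0/30, EIGRP AS 100
feature eigrp

router eigrp 100

interface Ethernet1/20
  description Routed link to 3750 (hypothetical)
  no switchport
  ip address 10.255.0.1/30
  ip router eigrp 100
  no shutdown
```

This keeps the EIGRP adjacency on dedicated point-to-point routed links rather than on an SVI reached over the vPC trunk.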

06-21-2012 08:20 AM

Hi Prashanth,

Thanks for hosting this session. My first question is whether jumbo MTU is supported across the vPC peer link on N5Ks. Below is the output when I tried to configure this on an N7K; I presume the N5K may show the same symptom. Thanks.

```
RCS-WG1(config-if)# int po10
RCS-WG1(config-if)# mtu 9216
ERROR: port-channel10: Cannot configure port MTU on Peer-Link.
RCS-WG1(config-if)# sh int po10
port-channel10 is up
Hardware: Port-Channel, address: 70ca.9bf8.eef5 (bia 70ca.9bf8.eef5)
Description: *** vPC Peer Link ***
MTU 1500 bytes, BW 20000000 Kbit, DLY 10 usec
reliability 255/255, txload 1/255, rxload 1/255
Encapsulation ARPA
Port mode is trunk
full-duplex, 10 Gb/s
Input flow-control is off, output flow-control is off
Switchport monitor is off
EtherType is 0x8100
Members in this channel: Eth3/1, Eth3/2
Last clearing of "show interface" counters never
30 seconds input rate 23120 bits/sec, 34 packets/sec
30 seconds output rate 23096 bits/sec, 34 packets/sec
Load-Interval #2: 5 minute (300 seconds)
input rate 23.05 Kbps, 32 pps; output rate 23.06 Kbps, 31 pps
RX
12 unicast packets 60639 multicast packets 6 broadcast packets
60657 input packets 5024553 bytes
0 jumbo packets 0 storm suppression packets
0 runts 0 giants 0 CRC 0 no buffer
0 input error 0 short frame 0 overrun 0 underrun 0 ignored
0 watchdog 0 bad etype drop 0 bad proto drop 0 if down drop
0 input with dribble 0 input discard
0 Rx pause
TX
12 unicast packets 60688 multicast packets 340 broadcast packets
61040 output packets 5568549 bytes
0 jumbo packets
0 output error 0 collision 0 deferred 0 late collision
0 lost carrier 0 no carrier 0 babble 0 output discard
0 Tx pause
2 interface resets
RCS-WG1(config-if)#
```

06-22-2012 05:22 AM

Hello YapChinHoong

On the Nexus 5000, jumbo MTU is configured per QoS class through the QoS configuration (a network-qos policy), not per interface. When applied, the setting takes effect on all Ethernet interfaces, including the peer link.

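Note that when you configure jumbo MTU on switches that are also configured for FCoE, use a policy like the following, which keeps the FCoE class at no-drop with a 2158-byte MTU while raising class-default to 9216: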

```
policy-map type network-qos fcoe+jumbo-policy
  class type network-qos class-fcoe
    pause no-drop
    mtu 2158
  class type network-qos class-default
    mtu 9216
    multicast-optimize
system qos
  service-policy type network-qos fcoe+jumbo-policy
```

06-21-2012 08:25 AM

Hi Prashanth,

My second question is about the effectiveness of storm control on the Nexus platforms, particularly the N5K and N7K.

My concern is about a storm-control falling threshold like the one on the mid-range Catalyst platforms (e.g., the C3750). On those platforms, the port blocks traffic when the rising threshold is reached and remains blocked until the traffic rate drops below the falling threshold (if one is specified), after which it resumes normal forwarding. The graph below is excerpted from O'Reilly's Network Warrior.

The N7K and N5K documentation never mentions a falling-threshold mechanism, so the graph looks like this (taken from the N7K configuration guide).

During a broadcast storm, the graph would theoretically look like this.

This means only 50% of the broadcast packets would be suppressed or dropped. Assuming 300,000 broadcast packets arrive within one second, 150,000 would still hit the SVI, which is often enough to cause high CPU. The switch then stops responding to UDLD from its peers, the peer devices block ports because of UDLD, and the result is a disastrous network meltdown.

I'd appreciate your comments on this. Thanks.

06-22-2012 05:26 AM

Hello YapChinHoong

In addition to any user-configured storm control, the SVI/CPU is also protected by the default control plane policing (CoPP). In the lab, I tested sending 10-Gigabit line-rate broadcast traffic into a 5500 and saw that the switch CPU and other control plane protocols were not affected. The output below shows the default CoPP class policing at 2048 kbps and dropping the excess:

```
F340.24.10-5548-1# sh policy-map interface control-plane class copp-system-class-default
control Plane
service-policy input: copp-system-policy-default
class-map copp-system-class-default (match-any)
match protocol default
police cir 2048 kbps , bc 6400000 bytes
conformed 45941275 bytes; action: transmit
violated 149875654008 bytes; action: drop<<<---------
```
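For comparison, the user-configured storm control discussed in the question is set per interface as a percentage of the interface bandwidth, separately from CoPP. A minimal sketch follows; the interface and the 1.00 percent broadcast level are hypothetical values chosen for illustration, not a recommended setting.

```
interface Ethernet1/1
  ! Drop broadcast traffic exceeding 1% of link bandwidth (hypothetical level)
  storm-control broadcast level 1.00
```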

06-25-2012 12:31 PM

Hello Prashanth,

I have a few questions about the Nexus 5000s and 5500s.

Hardware port-channel resources:

According to the document below, 16 hardware port channels is the limit on the 5020 and 5010 switches. Does the 5548 with the Layer 3 daughter card have any limitation on hardware port-channel resources? Does a FEX (2248 or 2232) dual-homed to a 5548 with L3 consume a hardware port channel?

Can you please confirm the designs below? The 5548s are running 5.1(3)N1(1), and the 5010s are on 5.0(3)N2(1).

06-25-2012 02:53 PM

Hello Siddhartham

The 55xx supports 48 local port channels, but only port channels with more than one member interface count against this limit of 48. Since your FEX has only one interface to each Nexus 5K, it does not use up a resource.

Regarding your topologies, you are referring to Enhanced vPC (E-vPC). E-vPC is supported only on the 55xx platforms, so your second topology is not supported because it uses the Nexus 5010 as the parent switch for the FEX.
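For the 5548-based design, a minimal sketch of the fabric side of one dual-homed (active-active) FEX, applied identically on both 5548 vPC peers, might look like this. The FEX number 100, port-channel and vPC number 100, and interface Ethernet1/1 are hypothetical, and the sketch assumes feature vpc, the vPC domain, and the peer link are already configured.

```
! Apply on both Nexus 5548 vPC peers; FEX 100, Po100, vPC 100, Eth1/1 are hypothetical
! Assumes feature vpc, the vPC domain, and the peer link already exist
feature fex

fex 100
  description dual-homed-fex-100

interface port-channel100
  switchport mode fex-fabric
  fex associate 100
  vpc 100

interface Ethernet1/1
  switchport mode fex-fabric
  fex associate 100
  channel-group 100
```

With a single fabric link per parent, Po100 has only one member on each 5548, so it does not count against the 48 hardware port channels.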

Thanks

-Prashanth

06-26-2012 07:20 AM

Thanks Prashanth.

Since the number of FEXs supported by a 5548 with the L3 card is eight, even if I use two 10-Gigabit links between each FEX and each 5548, this will consume only 8 hardware port channels out of the 48 available.

Is there any limit on the 2248/2232 FEXs? Will a port channel on a FEX count against the limit of the 5500?

Siddhartha

06-26-2012 09:25 AM

The FEX port-channels do not count against the limit of the 5500.

06-26-2012 04:25 PM

> Since the number of FEXs supported by 5548 with L3 card is eight,

"Up to 24 fabric extenders per Cisco Nexus 5548P, 5548UP, and 5596UP switch (8 fabric extenders for Layer 3 configurations)" - This line was taken from the data sheet of the B22 (Table 2).

"Up to 24 fabric extenders per Cisco Nexus 5548P, 5548UP, 5596UP switch (16 fabric extenders for L3 configurations): up to 1152 Gigabit Ethernet servers and 768 10 Gigabit Ethernet servers per switch" - This line was taken from the data sheet of the Nexus 2000 (Table 2).

06-26-2012 05:59 PM

FEX support with Layer 3 has now been increased to 16.
