Whether or not memory leak occurs with snmp trap all.

01-05-2022 12:01 AM (last edited on 02-22-2022 04:25 AM by Translator)

Hi.

Is there a difference between enabling and disabling

snmp-server enable traps all

on the switch?

We suspect that the above configuration is causing a memory leak.

I found the following bug report for the C4500:

https://bst.cloudapps.cisco.com/bugsearch/bug/CSCud55965

Fix memory leak by excluding CISCO-PROCESS-MIB

Could a memory leak also be caused by the NMS requesting too much information over SNMP, as in the bug above?

Please help.

- Labels: LAN Switching

Accepted Solutions

01-05-2022 02:07 AM (last edited on 02-22-2022 04:43 AM by Translator)

Hello @inb,

SNMP traps are unsolicited messages that the switch sends to the NMS when an event occurs or a threshold is crossed.

The bug, by contrast, is about SNMP polling, that is, GET messages sent from the NMS to the switch:

Conditions:

- Sup7E, Sup7L-E or 4500X
- switches running 3.3.0SG, 3.3.1SG, 3.3.2SG or 3.4.0SG
- switches being polled for OIDs under CISCO-PROCESS-MIB

Workaround: Exclude polling of the CISCO-PROCESS-MIB using an SNMP view:

snmp-server view restrict iso included

snmp-server view restrict ciscoProcessMIB excluded

snmp-server community cisco view restrict RO

However, as noted, you may want to avoid enabling all SNMP traps, to reduce the SNMP load on the CPU of the switch.
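The SNMP view workaround quoted from the bug can be templated when the same exclusion has to go onto many switches. The Python sketch below only builds the three command strings in the form shown in the bug text; the function name and its parameters are hypothetical, and it does not connect to any device.

```python
def snmp_view_commands(view, excluded_subtrees, community, access="RO"):
    """Build IOS config lines for an SNMP view that includes `iso`
    but excludes the given MIB subtrees (hypothetical helper)."""
    if access not in ("RO", "RW"):
        raise ValueError("access must be 'RO' or 'RW'")
    # Start from the whole tree, then carve out the subtrees to skip.
    lines = [f"snmp-server view {view} iso included"]
    lines += [f"snmp-server view {view} {subtree} excluded"
              for subtree in excluded_subtrees]
    # Bind the community string to the restricted view.
    lines.append(f"snmp-server community {community} view {view} {access}")
    return lines


if __name__ == "__main__":
    # Values taken from the workaround text: view "restrict", community "cisco".
    for line in snmp_view_commands("restrict", ["ciscoProcessMIB"], "cisco"):
        print(line)
```

Whether excluding further subtrees helps on other platforms is not something the bug text confirms, so any extra entries in `excluded_subtrees` should be treated as an experiment.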

As @Leo Laohoo asked, can you post the output of

show version

and

show module

from the affected switch?

Hope to help

Giuseppe

01-05-2022 12:08 AM

What is the model of the platform and what is the exact IOS version?

01-05-2022 04:12 PM (last edited on 02-19-2022 04:51 AM by Translator)

C4500E 3.4.0SG, 3.11.3ae

C9200 16.11.1

C3650 16.3.8, 16.9.6

etc...

This is happening on many switch series.

As standard practice, the customer has enabled all SNMP traps on every switch and router by entering the

snmp-server enable traps

command.

The problems started after a new NMS was introduced. Measures have already been taken to stop the new NMS from collecting unnecessary information.

Yet the problem is still ongoing.

TAC has not been able to identify the cause and only recommends upgrading to the latest OS.

01-05-2022 04:46 PM (last edited on 02-19-2022 04:51 AM by Translator)

Memory leak?

For the 9200/9200L and 3650, post the complete output of the command

sh platform software status control-processor brief

01-05-2022 05:16 PM (last edited on 02-19-2022 04:53 AM by Translator)

C9200 16.11.1

    c9200#show platform software status control-processor brief
    Load Average
     Slot  Status  1-Min  5-Min 15-Min
    1-RP0 Healthy   0.34   0.45   0.47

    Memory (kB)
     Slot  Status    Total     Used (Pct)     Free (Pct) Committed (Pct)
    1-RP0 Healthy  4060196  2370248 (58%)  1689948 (42%)   2840652 (70%)

    CPU Utilization
     Slot  CPU   User System   Nice   Idle    IRQ   SIRQ IOwait
    1-RP0    0   2.45   0.81   0.00  96.72   0.00   0.00   0.00
             1   2.75   0.40   0.00  96.83   0.00   0.00   0.00
             2   2.15   0.61   0.00  97.22   0.00   0.00   0.00
             3   2.27   0.72   0.00  96.90   0.00   0.10   0.00

C9200 log:

https://bst.cloudapps.cisco.com/bugsearch/bug/%20CSCvn30230/?reffering_site=dumpcr

C3650 16.3.6, 16.3.8, 16.9.6

    C3650#show platform software status control-processor brief
    Load Average
     Slot  Status  1-Min  5-Min 15-Min
    1-RP0 Healthy   0.21   0.52   0.54
    2-RP0 Healthy   0.15   0.20   0.16

    Memory (kB)
     Slot  Status    Total     Used (Pct)     Free (Pct) Committed (Pct)
    1-RP0 Healthy  3977740  1706068 (43%)  2271672 (57%)   2526208 (64%)
    2-RP0 Healthy  3977740  1672148 (42%)  2305592 (58%)   2501076 (63%)

    CPU Utilization
     Slot  CPU   User System   Nice   Idle    IRQ   SIRQ IOwait
    1-RP0    0   6.80   2.50   0.00  90.60   0.00   0.10   0.00
             1  10.10   2.90   0.00  87.00   0.00   0.00   0.00
             2  10.47   2.79   0.00  86.62   0.00   0.09   0.00
             3   6.38   2.29   0.00  91.21   0.00   0.09   0.00
    2-RP0    0   2.60   1.00   0.00  96.20   0.00   0.20   0.00
             1   2.60   0.60   0.00  96.70   0.00   0.10   0.00
             2   3.40   0.30   0.00  96.30   0.00   0.00   0.00
             3   2.50   0.50   0.00  97.00   0.00   0.00   0.00

C3650 log:

https://bst.cloudapps.cisco.com/bugsearch/bug/CSCvj16271

https://bst.cloudapps.cisco.com/bugsearch/bug/CSCvh56835

C9200/C3650

The devices above are typically the ones where we have identified the most memory leak bugs.

A few months after each OS upgrade, the memory leak occurs again with a similar log.

Other customers have no problems even on older versions, so an SNMP or NMS problem is suspected.
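When this output is collected repeatedly from many switches, the memory rows can be pulled out with a small script instead of being compared by eye. The Python sketch below assumes the row layout shown in the output above (slot, status, total, then three "value (pct%)" pairs); the function name and dictionary keys are hypothetical, and other IOS-XE releases may format the table differently.

```python
import re

# Memory rows copied from the C3650 output in this post.
SAMPLE = """\
Memory (kB)
Slot Status Total Used (Pct) Free (Pct) Committed (Pct)
1-RP0 Healthy 3977740 1706068 (43%) 2271672 (57%) 2526208 (64%)
2-RP0 Healthy 3977740 1672148 (42%) 2305592 (58%) 2501076 (63%)
"""

# slot, status, total kB, then used / free / committed as "kB (pct%)".
MEMORY_ROW = re.compile(
    r"^\s*(?P<slot>\S+)\s+(?P<status>\S+)\s+(?P<total>\d+)"
    r"\s+(?P<used>\d+)\s+\((?P<used_pct>\d+)%\)"
    r"\s+(?P<free>\d+)\s+\((?P<free_pct>\d+)%\)"
    r"\s+(?P<committed>\d+)\s+\((?P<committed_pct>\d+)%\)\s*$"
)


def parse_memory_rows(text):
    """Return {slot: memory figures} for every memory row found in `text`.

    Header, load-average and CPU rows do not match the pattern and are skipped.
    """
    rows = {}
    for line in text.splitlines():
        m = MEMORY_ROW.match(line)
        if m:
            rows[m["slot"]] = {
                "status": m["status"],
                "total_kb": int(m["total"]),
                "used_kb": int(m["used"]),
                "used_pct": int(m["used_pct"]),
                "committed_pct": int(m["committed_pct"]),
            }
    return rows


if __name__ == "__main__":
    for slot, mem in parse_memory_rows(SAMPLE).items():
        print(slot, mem["used_pct"], mem["committed_pct"])
```

Storing one such record per switch per day gives the trend data needed to tell a slow leak from normal fluctuation.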

02-15-2022 05:20 PM

@inb wrote:

    Memory (kB)
     Slot  Status    Total     Used (Pct)     Free (Pct) Committed (Pct)
    1-RP0 Healthy  4060196  2370248 (58%)  1689948 (42%)   2840652 (70%)

Apologies for not seeing this earlier.

58% is abnormally high.

Post the complete output of the following commands:

show version

show process memory platform sorted location switch active r0

NOTE: I only need to see the "first page" of the second command's output (do not post the entire output).

02-15-2022 05:24 PM

Thank you for your answer.

The C9200 is confirmed to have a memory leak.

I currently have 2-30 switches in operation, and I've been monitoring them for the last year or so.

Memory usage is increasing by 1-2% every month.

This customer's network is the only one with the problem, so we want to cut down on NMS polling and SNMP traps and keep monitoring.

That is all we can try.

No issues with the rest of the settings or network configuration.
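To put the 1-2% monthly growth in perspective, here is a rough Python sketch of how long a switch has before it reaches a chosen memory threshold. It assumes the growth is linear and measured in percentage points of total memory, starts from the 58% used figure in the C9200 output posted earlier, and uses 90% as an arbitrary example threshold; none of these assumptions come from Cisco.

```python
import math


def months_until(current_pct, threshold_pct, growth_points_per_month):
    """Whole months until `threshold_pct` is reached, assuming linear growth
    in percentage points per month (hypothetical helper)."""
    if growth_points_per_month <= 0:
        raise ValueError("growth must be positive")
    remaining = threshold_pct - current_pct
    if remaining <= 0:
        return 0  # already at or above the threshold
    return math.ceil(remaining / growth_points_per_month)


if __name__ == "__main__":
    # 58% used today, example threshold of 90%.
    print(months_until(58, 90, 1))  # slow end of the observed range
    print(months_until(58, 90, 2))  # fast end of the observed range
```

Under these assumptions the window is 16 to 32 months, which leaves time to plan a reload or upgrade instead of waiting for a crash.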

Thank you.

02-15-2022 05:38 PM - edited 02-15-2022 05:43 PM

Because the 9200 is on 16.11.1 firmware, big trouble is assured. Guaranteed.

NOTE: On the 3650/3850, 9200 and 9300 platforms, 16.10.X and 16.11.X are very widely known to be (the start of) memory leaks, CPU hogs and stacking-mgr process crashes that Cisco cannot fix.

For 9200/9200L, upgrade to 16.12.3 (do not go over this version).

Finally, stacked switches running IOS-XE are more prone to crashes due to memory leaks and CPU hogs.

02-15-2022 05:48 PM

Thanks for your comments.

We already know from Cisco TAC's answers that all of the above devices have memory leaks.

What we need is a root-cause analysis.

Cisco TAC only ever replies that it is an OS bug.

As a result, customers are losing trust in Cisco.

Is there any documentation that says the stack is more problematic?

Thanks for your help.

02-15-2022 06:37 PM - edited 02-15-2022 06:39 PM

@inb wrote:

Is there any documentation that says the stack is more problematic?

Read some of the bugs, and learn to "read between the lines". A lot of bugs get triggered when the switch is in a stack. Always.

Aside from the Google results, have a look at CSCuz57493 (>5400 TAC Cases) & CSCuo14511 (>500 TAC Cases).

And since the 9200 is on 16.11.1, please have a look at CSCvq56135 & CSCvq48005.

I can go on forever. There is a long list. An endless list of crashes due to stack-mgr and/or "stack merge".

Classic IOS never had these issues, but IOS-XE is simply a magnet for them.

What I am trying to say is this: stacked switches running IOS-XE need to be constantly monitored for CPU and/or memory leaks.

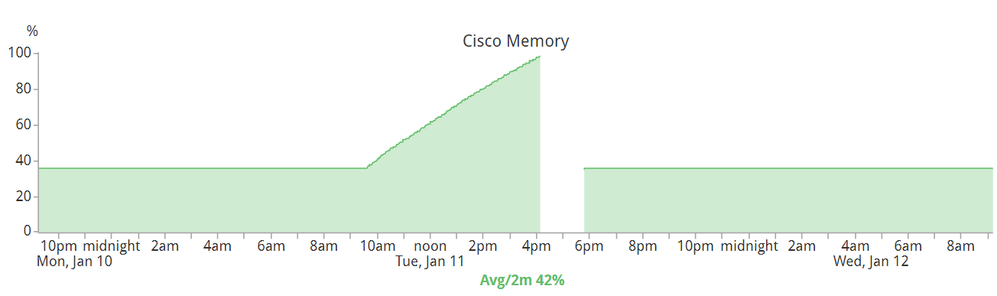

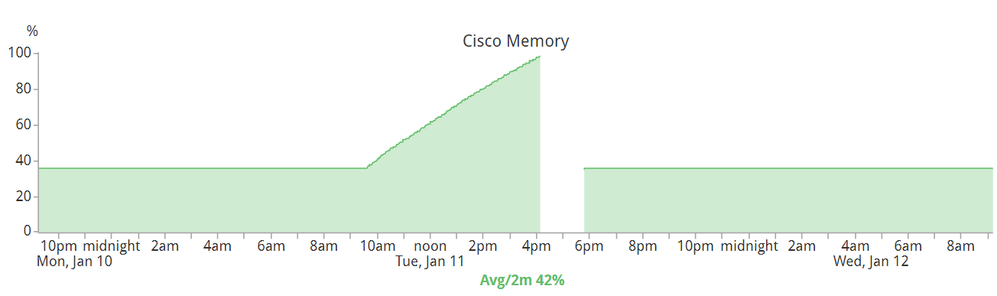

A friend shared with me a picture of an incident that occurred on 11 January 2022:

The picture shows the memory utilization of a 3850 stack running IOS-XE version 16.12.6 (the latest version at the time). The memory leak was caused by a loose stacking cable.

Cisco IOS-XE has an EEM script that writes a log entry when memory and/or CPU usage is creeping up. In this scenario, however, the EEM script probably did not have time to do anything, because memory usage went through the roof very quickly.

02-15-2022 07:20 PM

Thanks for the detailed reply and examples.

Some of the switches are stacked, but many are not.

The above customer is a financial company.

It is difficult to do an OS upgrade every time.

I'll think about it a little more.

Thanks for your support.

02-15-2022 07:57 PM

@inb wrote:

It is difficult to do an OS upgrade every time.

I get this response very regularly and I have but one answer: When I upgrade the firmware, I do so on MY own terms and in MY own time. However, when a switch (or a stack) crashes, the crash always occurs at the time you need it the most.

01-05-2022 04:14 PM

Hi.

I also checked that bug, and I want to disable all SNMP traps and enable only the ones I need.

I'm struggling because I have no evidence that this change would reduce CPU and memory load.
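One way to make the "disable everything, enable only what is needed" change repeatable is to generate the commands from an allow-list. The Python sketch below is only an illustration: the function name is hypothetical, and the trap families and types in the example are assumptions that should be checked against `snmp-server enable traps ?` on each platform and release.

```python
def selective_trap_commands(wanted):
    """Build IOS config lines that turn all traps off, then re-enable an allow-list.

    `wanted` maps a trap family to a list of trap types;
    an empty list enables the whole family (hypothetical helper).
    """
    # The bare "no" form is assumed to disable every trap type at once.
    lines = ["no snmp-server enable traps"]
    for family, types in wanted.items():
        lines.append(" ".join(["snmp-server enable traps", family, *types]))
    return lines


if __name__ == "__main__":
    # Example allow-list (assumption): link and restart traps plus config changes.
    allow = {
        "snmp": ["linkdown", "linkup", "coldstart", "warmstart"],
        "config": [],
    }
    for line in selective_trap_commands(allow):
        print(line)
```

Comparing memory and CPU trends for a few weeks before and after such a change would provide the missing evidence either way.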

02-15-2022 05:02 PM

Thank you for your answer.

I haven't run the tests yet.

But it seems to be the above problem.

Thank you.
