Cisco Community > Technology and Support > Networking > SD-WAN and Cloud Networking


Ask the Expert- SD-WAN fundamentals and implementation


08-19-2019 06:28 PM - last edited on 08-20-2019 05:16 PM by Hilda Arteaga


This topic is a chance to discuss SD-WAN in more depth: its foundations and inner mechanisms, as well as its correct design and implementation to achieve the desired business outcomes. Software-Defined WAN (SD-WAN) is a popular technology, and this event aims to help engineers, customers and partners understand the benefits and potential advantages its implementation can bring.

To participate in this event, please use the button below to ask your questions.

Ask questions from Monday 19th to Friday 30th of August, 2019

Featured expert

David might not be able to answer each question due to the volume expected during this event. Remember that you can continue the conversation on the SD-WAN community.

Find other events https://community.cisco.com/t5/custom/page/page-id/Events?categoryId=technology-support

**Helpful votes encourage participation!**

Please be sure to rate the answers to questions.


08-20-2019 12:43 PM

Are there any best practices for MSP design, specifically for access into the SP cloud for privately addressed services, such as a CUCM cluster within a customer VRF? Is it possible to use something such as a vEdge 5000 to aggregate multiple customers on the public side and split them into VRFs back toward the SP MPLS, while still keeping a separate control plane instance (vManage) per customer?


08-21-2019 11:15 AM - edited 08-27-2019 03:57 AM

Hello Seth,

Common SD-WAN deployments in SP networks can be divided into the following two groups:

- Customer dedicated vManage

- Shared vManage - Multitenancy

Each has its own advantages and drawbacks. One advantage of the shared vManage is having a centralized platform with all customers properly segmented within the controller (i.e. multitenancy). This translates into fewer devices/servers/controllers deployed and, thus, fewer resources used. It fits low-scale deployments with several customers, each having a handful of sites to support, and it might even be ideal for PoCs.

Its main drawback is scalability: each vSmart controller supports a limit of around 5400 control connections (shared between tenants when deployed in multitenancy mode). Note that each TLOC establishes its own control connection, so increasing the number of TLOCs on each vEdge cuts down the number of supported vEdges substantially:

- One TLOC - 5400 vEdges

- Two TLOCs - 2700 vEdges

- Three TLOCs - 1800 vEdges

Each vManage controller supports a limit of around 2700 vEdge routers.
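The arithmetic above is simply the vSmart connection budget divided by the TLOCs per vEdge. The Python sketch below reproduces it and, as an added assumption, caps the result with the quoted vManage limit for a deployment with a single vSmart and a single vManage instance. The constants are the approximate 2019 figures from this thread, not official scale numbers; the function names are hypothetical.

```python
# Approximate limits quoted in this 2019 thread; verify against current Cisco docs.
VSMART_CONTROL_CONNECTIONS = 5400
VMANAGE_MAX_VEDGES = 2700


def max_vedges_per_vsmart(tlocs_per_vedge: int) -> int:
    """Each TLOC opens its own control connection to the vSmart."""
    if tlocs_per_vedge < 1:
        raise ValueError("a vEdge needs at least one TLOC")
    return VSMART_CONTROL_CONNECTIONS // tlocs_per_vedge


def max_vedges_one_vsmart_one_vmanage(tlocs_per_vedge: int) -> int:
    """Assumed deployment: one vSmart and one vManage; the tighter limit wins."""
    return min(max_vedges_per_vsmart(tlocs_per_vedge), VMANAGE_MAX_VEDGES)


if __name__ == "__main__":
    for tlocs in (1, 2, 3):
        print(tlocs, max_vedges_per_vsmart(tlocs),
              max_vedges_one_vsmart_one_vmanage(tlocs))
```

With one TLOC per vEdge, the vSmart could handle 5400 vEdges, but under this assumption the vManage limit of 2700 becomes the bottleneck. From three TLOCs onward, the vSmart is the constraint.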

Regarding the services: this is usually done via L3VPNs in the underlay, leaked into the transport VPN (0) through a vEdge deployed as a CE device in the MPLS network. That vEdge sits in a management tenant, which could also be called the control center (vManage, vSmart, vBond and the management vEdge). It could be implemented specifically for one customer (like the case you mentioned, with services existing only in a customer VPN) or as shared services offered to all customers (a "services" VPN hosting services like DNS or NTP, for instance).
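To make the per-customer versus shared split concrete, here is a hypothetical Python model of which prefixes would be leaked into a customer's transport VPN 0. It is a planning sketch only, not router configuration. The prefixes (from the 198.51.100.0/24 documentation range and a 10.10.10.0/24 CUCM subnet), the `Customer` class and `prefixes_to_leak` are all illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

# Hypothetical shared-services VPN prefixes (documentation range).
SHARED_SERVICES: Dict[str, str] = {
    "dns": "198.51.100.53/32",
    "ntp": "198.51.100.123/32",
}


@dataclass
class Customer:
    name: str
    # Services that exist only in this customer's VPN (e.g. a CUCM cluster).
    dedicated_services: Dict[str, str] = field(default_factory=dict)
    # Names of shared services this customer has requested.
    shared_services: Set[str] = field(default_factory=set)


def prefixes_to_leak(customer: Customer) -> Set[str]:
    """Prefixes to leak (e.g. via BGP) into this customer's transport VPN 0."""
    leaked = {SHARED_SERVICES[name] for name in customer.shared_services}
    leaked |= set(customer.dedicated_services.values())
    return leaked


acme = Customer("acme", {"cucm": "10.10.10.0/24"}, {"dns"})
print(sorted(prefixes_to_leak(acme)))
```

Only the services a customer actually requests are leaked, which matches the idea of using the shared underlay purely as transport.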

"Is it possible to use something such as a vEdge 5000 to aggregate multiple customers on the public side and split them into VRFs back toward the SP MPLS, while still keeping a separate control plane instance (vManage) per customer?"

This would imply deploying a device that works in the overlay to aggregate that traffic, similar to what a PE currently does in the underlay. In all honesty, I have not seen this deployment. Usually the overlay (either per customer or per group of customers) is deployed to operate as separately from the underlay (MPLS/DIA transport) as possible. The goal is to provide each customer with their own dedicated environment, using vSmart, vManage and vEdges as your segmentation tools (vBond can be shared between customers in a multitenancy deployment), while using the underlay (which is already shared between customers) purely as a transport, and leaking prefixes into the transport VPN (using BGP) as needed to provide a service or set of services to the customers requesting them.

Hope this is useful!

David


08-21-2019 05:23 PM

Hi @David Samuel Penaloza Seijas, thanks for sharing your knowledge on this session.

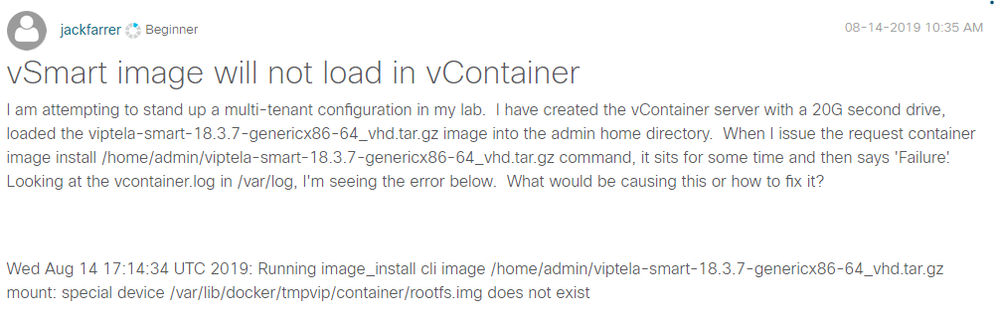

Could you please help answer the following question from @jackfarrer?

Official post link.


08-22-2019 04:01 AM - edited 08-22-2019 04:28 AM

Hello @jackfarrer, I am not a Docker expert.

It seems to me that, although you have created the 20G drive, the storage driver cannot see it and therefore cannot mount it when you try to install the image. I could be completely wrong on this (the special device raises some questions), so apologies in advance.

Are you able to provide more logs? Is there anything else you have observed?

I will try to replicate your intent and come back with my results.

Thanks.


08-22-2019 07:14 AM

David,

I'm not a Docker expert either, so I'm not sure where to go to get any additional logs or information. I did have issues with the second drive on the vManage server I spun up. I used qemu-img to create the image, but the vManage server was not able to find a partition and would constantly reboot. I ended up attaching the image to a basic Linux VM, partitioning it with fdisk, and then attaching it back to the vManage, which was then able to format it. I tried the same process with the vContainer, but the result was the same: the image install failed. When I drop to vshell on the vContainer and try to run any of the normal Docker commands, I get a message that it cannot connect to the Docker daemon, even though the messages log shows the daemon was started.

local7.info: Aug 16 17:55:45 vcontainer SYSMGR[329]: %Viptela-vcontainer-SYSMGR-6-INFO-200017: Started daemon docker @ pid 725 in vpn 0

Permissions on the path for the second drive are locked down to root with execute for everyone else, so I'm not sure if that's an issue or not.

drwx--x--x 11 root root 4096 Aug 16 17:58 docker
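To decode the listing above, Python's standard `stat` module can translate between the octal mode and the `ls` string. This sketch only confirms what the permissions mean (owner root has full access; group and others can traverse the directory but not list or write to it). It does not establish whether the permissions are related to the install failure.

```python
import stat

# Mode of the docker directory as reported by `ls -l` on the vContainer.
mode = stat.S_IFDIR | 0o711

print(stat.filemode(mode))  # the ls-style string for this mode

owner_full = (mode & stat.S_IRWXU) == stat.S_IRWXU  # root: read, write, execute
others_can_list = bool(mode & stat.S_IROTH)          # read bit for "others"
others_can_traverse = bool(mode & stat.S_IXOTH)      # execute bit for "others"
print(owner_full, others_can_list, others_can_traverse)
```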

I'm also not sure whether the standard Docker commands are applicable here, since the image install is done via the Viptela CLI.


08-22-2019 02:40 AM

Hi @David Samuel Penaloza Seijas,

I'm trying to figure out how to use service insertion, and the main difficulty here is the redirection policy itself, as well as how the FW should be placed.

I have the hub router announcing two different services, netsvc1 and netsvc2, which are the inside and outside interfaces of the firewall. I'm doing it this way because you cannot have multiple "FW" type services in the same VPN, so only one IP can be announced, and I confess that I don't know how to have the FW inspect or apply rules on one interface and send the traffic back out the same interface.

The problem is that this policy is a custom control policy, and such a policy needs to coexist with the custom topology policies already in place for the hub-and-spoke topology.

Do you have any information or experience with implementations like this? And how should the FW work: routed or transparent?

I've watched David Klebanov's videos on this, but it's not clear how the FW is placed here.

Also, even though the vSmart receives the services, they are not reflected back to the routers participating in that service VPN: the label towards the destination remains the same, even though on the vSmart the label for that specific service is different.

Thank you for any help you may provide,

I salute you for this initiative

Best Regards,

Please rate helpful posts,

Ruben Carvalho CCIE#57952


08-22-2019 12:15 PM - edited 08-22-2019 12:30 PM

Hello @rbncarvalho

The approach is right: you differentiate the interfaces by advertising them as separate services. You would then link those services to site lists, so you can advertise the respective service either to the remote offices (untrusted interface/zone) or towards the DC or HQ (trusted interface/zone). That way, remote offices will only see the service pointing to the right interface, so the traffic does not get dropped. Please note that the site lists need to be planned carefully for this to work.
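The site-list planning described above can be sanity-checked offline. In the hypothetical Python model below, each site list maps to exactly one FW-interface service, and a check rejects any site that appears in more than one list, because that site's service choice would be ambiguous. The site IDs are made up. Mapping netsvc1 to the inside (trusted) interface and netsvc2 to the outside (untrusted) interface follows the order in the question, but it is still an assumption.

```python
from typing import Dict, Optional, Set

# Hypothetical site lists; real site IDs come from your own numbering plan.
SITE_LISTS: Dict[str, Set[int]] = {
    "remote_offices": set(range(100, 200)),
    "dc_hq": {1, 2},
}

# Assumption: netsvc1 = inside (trusted) FW interface, netsvc2 = outside (untrusted).
SERVICE_FOR_LIST: Dict[str, str] = {
    "remote_offices": "netsvc2",
    "dc_hq": "netsvc1",
}


def check_site_lists_disjoint(site_lists: Dict[str, Set[int]]) -> None:
    """Raise if a site appears in more than one list (ambiguous service choice)."""
    owner: Dict[int, str] = {}
    for list_name, sites in site_lists.items():
        for site_id in sites:
            if site_id in owner:
                raise ValueError(
                    f"site {site_id} is in both {owner[site_id]} and {list_name}")
            owner[site_id] = list_name


def service_for_site(site_id: int) -> Optional[str]:
    """Return the FW-interface service a site should be steered to, if any."""
    for list_name, sites in SITE_LISTS.items():
        if site_id in sites:
            return SERVICE_FOR_LIST[list_name]
    return None


check_site_lists_disjoint(SITE_LISTS)
print(service_for_site(150), service_for_site(1), service_for_site(500))
```

Sites that belong to no list get `None`, meaning no service is enforced for them. In a real policy, that gap might be intentional or might be a planning mistake.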

The ability to advertise services via OMP allows you to place the firewall at any point in the network, as long as it's within the SD-WAN fabric. That being said, the advantage of this approach is that you can designate any specific place (a regional hub or a colo facility, so delay and jitter are reduced and the impact on application performance is minimized) to deploy the firewall appliances, or any other device providing the service you are advertising, thereby reducing the expense of dedicated firewalls in remote offices. It's a sweet spot between a centralized FW in a DC (consuming the DC's compute and bandwidth resources) and a dedicated FW per remote office (high appliance expenses).

In a hub-and-spoke topology, it would be advantageous to place the FW in the hub: it's the natural place where the traffic flow is headed, and it does not incur awkward or complex redirection.

The firewall can be either transparent or routed. I personally have had bad experiences with transparent firewalls. They are stateful devices anyway (regardless of the mode they operate in), and it's important to know where they are so you can pinpoint problems and troubleshoot efficiently.

Could you provide outputs? What do you see when you issue the "show omp services" command?

Thanks!

David


08-22-2019 10:13 PM

To add to @David Samuel Penaloza Seijas's excellent explanation, here are some more considerations for you, @rbncarvalho.

As David mentioned, the firewall could be placed anywhere. However, remember that service chaining is a special form of forwarding where we bypass the normal forwarding logic. For this reason, the firewall, or the service that is provided, needs to be "directly connected" to a vEdge. What does that mean? It doesn't mean that the service needs to be physically connected to the vEdge but that the vEdge must be in the same subnet as the service. There can be no L3 hops between the vEdge and the service. You can either stretch L2 by having a switch in between or you can tunnel traffic, for example by using IPSec towards the FW. I recommend you read my blog if you want to see an example of a service chaining topology: http://lostintransit.se/2019/08/20/the-tale-of-the-mysterious-traceroute/
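The "directly connected" rule above reduces to a subnet check: the service's address must fall inside the subnet of the vEdge's service-side interface. The sketch below uses Python's standard `ipaddress` module and hypothetical addresses. It covers the L2 case (a switch in between still keeps both in one subnet) but does not model the IPsec-tunnel alternative.

```python
import ipaddress


def service_is_directly_connected(vedge_interface: str, service_ip: str) -> bool:
    """True if service_ip lies in the subnet of the vEdge's service-side interface."""
    iface = ipaddress.ip_interface(vedge_interface)  # e.g. "10.1.1.1/24"
    return ipaddress.ip_address(service_ip) in iface.network


# Hypothetical addressing: FW inside interface next to the vEdge, then one behind a router.
print(service_is_directly_connected("10.1.1.1/24", "10.1.1.10"))
print(service_is_directly_connected("10.1.1.1/24", "10.2.2.10"))
```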

When you advertise a service from a vEdge, it gets advertised to the vSmart(s). Services are NOT reflected to the other vEdges in the network. Instead, you create a control or data policy that, based on your match criteria, modifies the vRoutes that get advertised from the vSmart to the vEdges. Keep in mind that the service VPNs used are actually also services. VPNs are identified by labels, and packets sent in the data plane are encapsulated with an MPLS label. Let's say that we have a route 10.0.0.0/24 in VPN 10 with label 1003. When you enforce the service for traffic destined to 10.0.0.0/24, the label changes to, say, 1005. This also means that the TLOC (next hop) changes, for example, to a DC instead of a branch.
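The label and TLOC rewrite described above can be modeled in a few lines of Python. `VRoute`, `Service` and `enforce_service` are hypothetical names, not a Cisco API. TLOCs are plain strings, and matching on a prefix within a VPN stands in for whatever match criteria your policy actually uses. The numbers reuse the 10.0.0.0/24, VPN 10, label 1003 to 1005 example.

```python
import ipaddress
from dataclasses import dataclass, replace


# Hypothetical model of what the vSmart advertises; not a Cisco API.
@dataclass(frozen=True)
class VRoute:
    prefix: str
    vpn: int
    label: int
    tloc: str  # next hop; names used below are made up


@dataclass(frozen=True)
class Service:
    name: str
    vpn: int
    label: int
    tloc: str  # TLOC of the vEdge the service is attached to


def enforce_service(route: VRoute, match_prefix: str, service: Service) -> VRoute:
    """Rewrite label and TLOC of a matching vRoute before it is advertised."""
    matches = (
        route.vpn == service.vpn
        and ipaddress.ip_network(route.prefix).subnet_of(
            ipaddress.ip_network(match_prefix))
    )
    return replace(route, label=service.label, tloc=service.tloc) if matches else route


branch_route = VRoute("10.0.0.0/24", vpn=10, label=1003, tloc="branch")
fw = Service("FW", vpn=10, label=1005, tloc="dc")
print(enforce_service(branch_route, "10.0.0.0/24", fw))
```

The original vRoute is left untouched. Only the copy advertised to the other vEdges carries the service label and the DC TLOC, which is why the service itself never needs to be reflected to them.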

How does this all work under the covers? When the traffic flow starts, normal forwarding is used, with the service label applied. When traffic reaches the vEdge where the FW is located, we can't use normal forwarding, as that would just send the traffic onwards. Instead, the vEdge looks at the label and sends the traffic towards the FW; when the traffic returns, normal forwarding according to the IP route lookup occurs.
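The forwarding decision on the vEdge hosting the FW, as described above, is sketched below in Python. It is a simplified, hypothetical model (`choose_next_hop`, the label table and the lookup callback are illustrative, not vEdge internals). Traffic arriving from the overlay with a local service label bypasses the routing table, while traffic coming back from the FW takes a normal IP route lookup.

```python
from typing import Callable, Dict, Optional


def choose_next_hop(
    dst: str,
    label: Optional[int],
    from_service: bool,
    service_labels: Dict[int, str],
    route_lookup: Callable[[str], str],
) -> str:
    """Pick the next hop on the vEdge that hosts the service (e.g. a FW)."""
    # Overlay traffic carrying a local service label bypasses the routing
    # table and is handed straight to the attached service.
    if not from_service and label in service_labels:
        return service_labels[label]
    # Everything else, including traffic returning from the FW, follows a
    # normal IP route lookup.
    return route_lookup(dst)


services = {1005: "firewall"}  # hypothetical service label table


def lookup(dst: str) -> str:
    return "branch TLOC"  # stand-in for the real route table


print(choose_next_hop("10.0.0.5", 1005, False, services, lookup))
print(choose_next_hop("10.0.0.5", None, True, services, lookup))
```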

Can you hairpin traffic on a FW? Absolutely, depending on the FW, of course. You don't necessarily need two interfaces; it depends on the design. Are you just filtering traffic between branches, or between branches and, say, a DC? If you have two interfaces, you need to advertise two services. As you said yourself, you could do this by advertising netsvc1 and netsvc2.

If you have access to the SD-WAN mastery course, I recommend you check David Klebanov's videos on service chaining. I also recommend you read through the following links:

I hope this helps.

Best regards,

Daniel

CCIE #37149

CCDE #20160011

Please rate helpful posts.


08-22-2019 11:37 PM

Thanks a ton for complementing! Fantastic contribution!


08-26-2019 02:08 AM

Hi @David Samuel Penaloza Seijas and @daniel.dib.

Thank you for your feedback.

I've managed to get the service insertion to work, but I had to do it without the custom control policy, because I have a custom topology in place and the site IDs were already in use.

However, I used a data policy for this, and thanks to your input it started to work.

Thank you guys for your support.

Best Regards,

Rúben

Please rate helpful posts,

Ruben Carvalho CCIE#57952


08-27-2019 02:28 AM


08-23-2019 08:58 AM

Hi @daniel.dib and @David Samuel Penaloza Seijas

Thanks for helping to solve this question.


08-23-2019 09:00 AM

Hi all,

I have a multi-tenant lab, and I'm trying to work with Ansible, but I'm getting errors because of the multi-tenancy. I would like to know whether anyone has been able to use Ansible with a multi-tenant setup.

Thanks.


08-23-2019 09:36 AM

Hello @juraj.papic

Could you please elaborate on your statement? What kind of error are you getting? What are you trying to achieve? Are you able to provide logs?

Thanks!
