- Cisco Community


C3850 output discards


05-03-2022 03:55 AM

We are experiencing a large number of output discards between two C3850 stacks (software version 16.12.05b).

Both are (collapsed) core switches in two different data centers, connected by a 10G LR single-mode fiber. It’s a Layer 2 connection. Almost all of these discards are in one direction, A->B (not B->A).

There is a “backup” link via another switch between these sites. If we change the path costs so the traffic takes the backup path, the output discards “shift” to the new port/path A->C (10G multimode SR). So the problem has nothing to do with the fiber or the SFP module, but rather with switch A.

The traffic between those two switches is mainly NetApp cluster data, vMotion data and surveillance camera data.

As you can see from the screenshots, the data rate is way below 10G, even below 1G.

We don’t have any QoS configured.

I tested the connection A->B with iperf3 and see some TCP retransmits, but no UDP loss:

```
Interval          Transfer      Bitrate          Retr
0.00-10.00  sec   1.09 GBytes   940 Mbits/sec    75      sender
0.00-10.04  sec   1.09 GBytes   935 Mbits/sec            receiver

Interval          Transfer      Bitrate          Jitter    Lost/Total Datagrams
0.00-10.00  sec   1.25 MBytes   1.05 Mbits/sec   0.000 ms  0/906 (0%)  sender
0.00-10.04  sec   1.25 MBytes   1.05 Mbits/sec   0.005 ms  0/906 (0%)  receiver
```

With iperf3 B->A, I see almost no retransmits:

```
Interval          Transfer      Bitrate          Retr
0.00-10.00  sec   1.09 GBytes   940 Mbits/sec    2       sender
0.00-10.04  sec   1.09 GBytes   935 Mbits/sec            receiver
```
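As a rough sanity check on the iperf3 numbers, the sketch below turns the retransmit counts into a percentage of segments sent. It assumes iperf3's "GBytes" are binary gigabytes and that every segment carries 1448 bytes of payload (a typical value for a 1500-byte MTU with TCP timestamps); neither is stated in the post. It also shows how light the UDP run was: iperf3 uses a default UDP target bitrate of 1 Mbit/s unless a bitrate is set with -b, so "no UDP loss" at 1.05 Mbit/s says little about behaviour under bursts.

```python
# Rough retransmit rates for the iperf3 TCP runs above.
# Assumptions (not stated in the post): iperf3 "GBytes" are binary (1024**3 bytes)
# and each TCP segment carries 1448 bytes of payload.
GIB = 1024 ** 3
ASSUMED_MSS = 1448


def retransmit_pct(transfer_gib: float, retransmits: int, mss: int = ASSUMED_MSS) -> float:
    """Retransmits as a percentage of the estimated number of segments sent."""
    segments = transfer_gib * GIB / mss
    return 100.0 * retransmits / segments


a_to_b = retransmit_pct(1.09, 75)  # A->B run: 75 retransmits
b_to_a = retransmit_pct(1.09, 2)   # B->A run: 2 retransmits

# The UDP run only moved 1.05 Mbit/s; compare that with a 10 Gbit/s link.
udp_share_pct = 100.0 * 1.05e6 / 10e9

print(f"A->B: ~{a_to_b:.4f}% of segments retransmitted")
print(f"B->A: ~{b_to_a:.5f}% of segments retransmitted")
print(f"UDP test load: ~{udp_share_pct:.4f}% of 10 Gbit/s")
```

Both TCP rates are tiny in absolute terms; the interesting part is the asymmetry between the two directions, which matches where the discards are counted.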

I’ve read some articles about output discards on C3850 and “microbursts”.

I tried “qos queue-softmax-multiplier 600” and “qos queue-softmax-multiplier 1200”, as recommended in these articles. Unfortunately, neither made any difference.

Can it be microbursts?

Is there a way to tell what packets/data is being discarded?

Do we need to bother with it at all, as we don't experience any problems?

Any other recommendations?

Labels: Catalyst 3000, LAN Switching


05-05-2022 04:52 AM

Cisco has a document covering exactly this output-drop case; let me explain it:

https://www.cisco.com/c/en/us/support/docs/switches/catalyst-3850-series-switches/200594-Catalyst-3850-Troubleshooting-Output-dr.html

```
show platform qos queue conf gigabitEthernet xxx
…
----------------------------------------------------------
    DTS  Hardmax   Softmax   PortSMin  GlblSMin  PortStEnd
    ---  --------  --------  --------  --------- ---------
 0   1   6   39    8   156   7   104   0    0    0  1200
 1   1   4    0    9   600   8   400   3  150    0  1200   <-**
 2   1   4    0    8   156   7   104   4   39    0  1200
 3   1   4    0   10   144   9    96   5   36    0  1200
 4   1   4    0   10   144   9    96   5   36    0  1200

** qos queue-softmax-multiplier 1200
```

1200 is the sum of the buffers of all the queues you configure:

queue 0 + 1 + 2 + 3 + 4 = 1200

156 + 600 + 156 + 144 + 144 = 1200

But we see drops in one queue, because queue 1 gets 50% of the total buffer. Reduce that share and monitor the output drops.

So increasing the total buffer while one queue keeps 50% of it is not a solution.
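The arithmetic in this reply can be reproduced in a few lines. The softmax values are copied from the table above, and each queue's share is simply its value divided by the total; this restates the numbers shown and is not a model of how the platform actually allocates buffers.

```python
# Softmax per queue, copied from the "show platform qos queue conf" output above
# (with qos queue-softmax-multiplier 1200).
softmax = {0: 156, 1: 600, 2: 156, 3: 144, 4: 144}

total = sum(softmax.values())
share_pct = {q: 100.0 * v / total for q, v in softmax.items()}

print(total)         # 1200
print(share_pct[1])  # 50.0
```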


05-06-2022 01:28 AM

I tried the following, according to another community post:

```
qos queue-softmax-multiplier 1200
!
class-map match-any TACTEST
 match access-group name TACTEST
!
policy-map TACTEST
 class class-default
  bandwidth percent 100
!
ip access-list extended TACTEST
 permit ip any any
!
interface TenGigabitEthernet 2/0/17
 service-policy output TACTEST
```

before:

```
stwl_core.stack2#$rm software fed switch 2 qos queue stats interface te2/0/17
----------------------------------------------------------------------------------------------
AQM Global counters
GlobalHardLimit:  2947 | GlobalHardBufCount: 0
GlobalSoftLimit: 10365 | GlobalSoftBufCount: 0
----------------------------------------------------------------------------------------------
Asic:0 Core:0 Port:4 Software Enqueue Counters
----------------------------------------------------------------------------------------------
 Q Buffers          Enqueue-TH0          Enqueue-TH1          Enqueue-TH2             Qpolicer
   (Count)              (Bytes)              (Bytes)              (Bytes)              (Bytes)
-- ------- -------------------- -------------------- -------------------- --------------------
 0       0           4529132031          65293396339          13877910435                    0
 1       0                    0                    0       41746826048425                    0
 2       0                    0                    0                    0                    0
 3       0                    0                    0                    0                    0
 4       0                    0                    0                    0                    0
 5       0                    0                    0                    0                    0
 6       0                    0                    0                    0                    0
 7       0                    0                    0                    0                    0
Asic:0 Core:0 Port:4 Software Drop Counters
--------------------------------------------------------------------------------------------------------------------------------
 Q             Drop-TH0             Drop-TH1             Drop-TH2             SBufDrop              QebDrop         QpolicerDrop
                (Bytes)              (Bytes)              (Bytes)              (Bytes)              (Bytes)              (Bytes)
-- -------------------- -------------------- -------------------- -------------------- -------------------- --------------------
 0                    0                    0                    0                    0                    0                    0
 1                    0                    0          23798323885                    0                    0                    0
 2                    0                    0                    0                    0                    0                    0
 3                    0                    0                    0                    0                    0                    0
 4                    0                    0                    0                    0                    0                    0
 5                    0                    0                    0                    0                    0                    0
 6                    0                    0                    0                    0                    0                    0
 7                    0                    0                    0                    0                    0                    0
```

after:

```
stwl_core.stack2#$rm software fed switch 2 qos queue stats interface te2/0/17
----------------------------------------------------------------------------------------------
AQM Global counters
GlobalHardLimit:  2447 | GlobalHardBufCount: 0
GlobalSoftLimit: 10865 | GlobalSoftBufCount: 0
----------------------------------------------------------------------------------------------
Asic:0 Core:0 Port:4 Software Enqueue Counters
----------------------------------------------------------------------------------------------
 Q Buffers          Enqueue-TH0          Enqueue-TH1          Enqueue-TH2             Qpolicer
   (Count)              (Bytes)              (Bytes)              (Bytes)              (Bytes)
-- ------- -------------------- -------------------- -------------------- --------------------
 0       0                    0          62653922770             19278407                    0
 1       0                    0                    0                    0                    0
 2       0                    0                    0                    0                    0
 3       0                    0                    0                    0                    0
 4       0                    0                    0                    0                    0
 5       0                    0                    0                    0                    0
 6       0                    0                    0                    0                    0
 7       0                    0                    0                    0                    0
Asic:0 Core:0 Port:4 Software Drop Counters
--------------------------------------------------------------------------------------------------------------------------------
 Q             Drop-TH0             Drop-TH1             Drop-TH2             SBufDrop              QebDrop         QpolicerDrop
                (Bytes)              (Bytes)              (Bytes)              (Bytes)              (Bytes)              (Bytes)
-- -------------------- -------------------- -------------------- -------------------- -------------------- --------------------
 0                    0             16514687                    0                    0                    0                    0
 1                    0                    0                    0                    0                    0                    0
 2                    0                    0                    0                    0                    0                    0
 3                    0                    0                    0                    0                    0                    0
 4                    0                    0                    0                    0                    0                    0
 5                    0                    0                    0                    0                    0                    0
 6                    0                    0                    0                    0                    0                    0
 7                    0                    0                    0                    0                    0                    0
```
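To compare the before/after counters without eyeballing long rows, a small parser can pick out the queues with non-zero drops. This is a hypothetical helper, not a Cisco tool: it assumes each data row of the drop table is the queue number followed by six integer counters, as in the outputs above, and the values are bytes unless 'qos queue-stats-frame-count' is configured.

```python
from __future__ import annotations


def nonzero_drops(table: str) -> dict[int, int]:
    """Return {queue: total dropped} for queues with any non-zero drop counter.

    Expects rows shaped like the 'Software Drop Counters' table above:
    queue number followed by six integer counters. Header, unit and dash
    lines (and the six-column enqueue rows) are skipped.
    """
    result: dict[int, int] = {}
    for line in table.splitlines():
        fields = line.split()
        if len(fields) == 7 and all(f.isdigit() for f in fields):
            queue = int(fields[0])
            counters = [int(f) for f in fields[1:]]
            if any(counters):
                result[queue] = sum(counters)
    return result


# Drop rows copied from the "before" and "after" outputs above.
before = """
0 0 0 0 0 0 0
1 0 0 23798323885 0 0 0
"""
after = """
0 0 16514687 0 0 0 0
1 0 0 0 0 0 0
"""

print(nonzero_drops(before))  # {1: 23798323885}
print(nonzero_drops(after))   # {0: 16514687}
```

Run against the two outputs, it shows the drops moving from Q1 to Q0 once all traffic is mapped to the single class-default queue.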

I'm not fully getting this...

All the drops that previously occurred in the second queue (Q1) now occur in the first queue (Q0), because I now put all traffic into one queue and give it all the available buffer. Is that right?

Why is 100% of the buffer not enough to handle the data? We have 200 Mbit/s of traffic and the port has 10 Gbit/s. It should be able to transmit that fast enough, shouldn't it?! What is the problem here? Packet count? Microbursts?

Is the only solution for this to replace the 3K switch with a 9K switch?

Sorry if these are stupid questions.
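A back-of-the-envelope sketch of why a low average rate does not rule out buffer overflow. Only the 10 Gbit/s port and the roughly 200 Mbit/s average come from the post; the two converging 10 Gbit/s ingress bursts and the 1 MB buffer are hypothetical values, not the 3850's actual allocation.

```python
# Only the 10 Gbit/s port and ~200 Mbit/s average come from the post; the rest is hypothetical.
LINK_BPS = 10e9   # egress port speed, bits per second
AVG_BPS = 200e6   # average offered rate, bits per second

# 1) Sent at line rate, a 200 Mbit/s average keeps the port busy only this share of the time.
busy_fraction = AVG_BPS / LINK_BPS

# 2) Hypothetical burst: two ingress ports send at 10 Gbit/s toward the same 10 Gbit/s egress,
#    so the egress queue grows by the difference between arrival and departure rates.
arrival_bps = 2 * LINK_BPS
growth_bps = arrival_bps - LINK_BPS

# 3) Hypothetical buffer of 1 MB for the queue (not the 3850's real allocation).
BUFFER_BYTES = 1_000_000
time_to_overflow_s = BUFFER_BYTES * 8 / growth_bps

print(f"port busy about {busy_fraction:.0%} of the time on average")
print(f"buffer full after about {time_to_overflow_s * 1e6:.0f} microseconds")
```

Under these assumptions the buffer fills in well under a millisecond, far too short to show up in a per-second or per-minute utilization graph.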


05-06-2022 06:28 AM - edited 05-06-2022 06:35 AM

```
policy-map TACTEST
 class class-default
  bandwidth percent 100   <- change this to: queue-buffers ratio 80
```

Then check with:

```
show platform qos queue conf gigabitEthernet xxx
```

The queue's buffer should now be around 900. Then check the drops again.


05-09-2022 12:15 AM

I've changed the policy map accordingly:

```
stwl_core.stack2#$ hardware fed switch 2 qos queue stats interface te2/0/17
----------------------------------------------------------------------------------------------
AQM Global counters
GlobalHardLimit:  2447 | GlobalHardBufCount: 0
GlobalSoftLimit: 10865 | GlobalSoftBufCount: 0
----------------------------------------------------------------------------------------------
Asic:0 Core:0 Port:4 Hardware Enqueue Counters
----------------------------------------------------------------------------------------------
 Q Buffers          Enqueue-TH0          Enqueue-TH1          Enqueue-TH2             Qpolicer
   (Count)             (Frames)             (Frames)             (Frames)             (Frames)
-- ------- -------------------- -------------------- -------------------- --------------------
 0       0                    0          19328844381             14172561                    0
 1       0                    0                    0                    0                    0
 2       0                    0                    0                    0                    0
 3       0                    0                    0                    0                    0
 4       0                    0                    0                    0                    0
 5       0                    0                    0                    0                    0
 6       0                    0                    0                    0                    0
 7       0                    0                    0                    0                    0
Asic:0 Core:0 Port:4 Hardware Drop Counters
--------------------------------------------------------------------------------------------------------------------------------
 Q             Drop-TH0             Drop-TH1             Drop-TH2             SBufDrop              QebDrop         QpolicerDrop
               (Frames)             (Frames)             (Frames)             (Frames)             (Frames)             (Frames)
-- -------------------- -------------------- -------------------- -------------------- -------------------- --------------------
 0                    0              8680921                    0                    0                    0                    0
 1                    0                    0                    0                    0                    0                    0
 2                    0                    0                    0                    0                    0                    0
 3                    0                    0                    0                    0                    0                    0
 4                    0                    0                    0                    0                    0                    0
 5                    0                    0                    0                    0                    0                    0
 6                    0                    0                    0                    0                    0                    0
 7                    0                    0                    0                    0                    0                    0
```
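With the counters now in frames, the queue 0 drop rate can be expressed as a percentage. This sketch assumes the counters are cumulative since the last clear and treats enqueued plus dropped frames as the frames offered to the queue; if dropped frames were also counted as enqueued, the denominator would change only marginally.

```python
from __future__ import annotations


def drop_pct(enqueued: list[int], dropped: list[int]) -> float:
    """Drops as a percentage of frames offered to a queue (enqueued + dropped)."""
    offered = sum(enqueued) + sum(dropped)
    return 100.0 * sum(dropped) / offered


# Queue 0 hardware counters from the output above, per threshold TH0, TH1, TH2.
q0_enqueued = [0, 19328844381, 14172561]
q0_dropped = [0, 8680921, 0]

pct = drop_pct(q0_enqueued, q0_dropped)
print(f"queue 0 dropped about {pct:.3f}% of offered frames")
```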


05-06-2022 06:33 AM

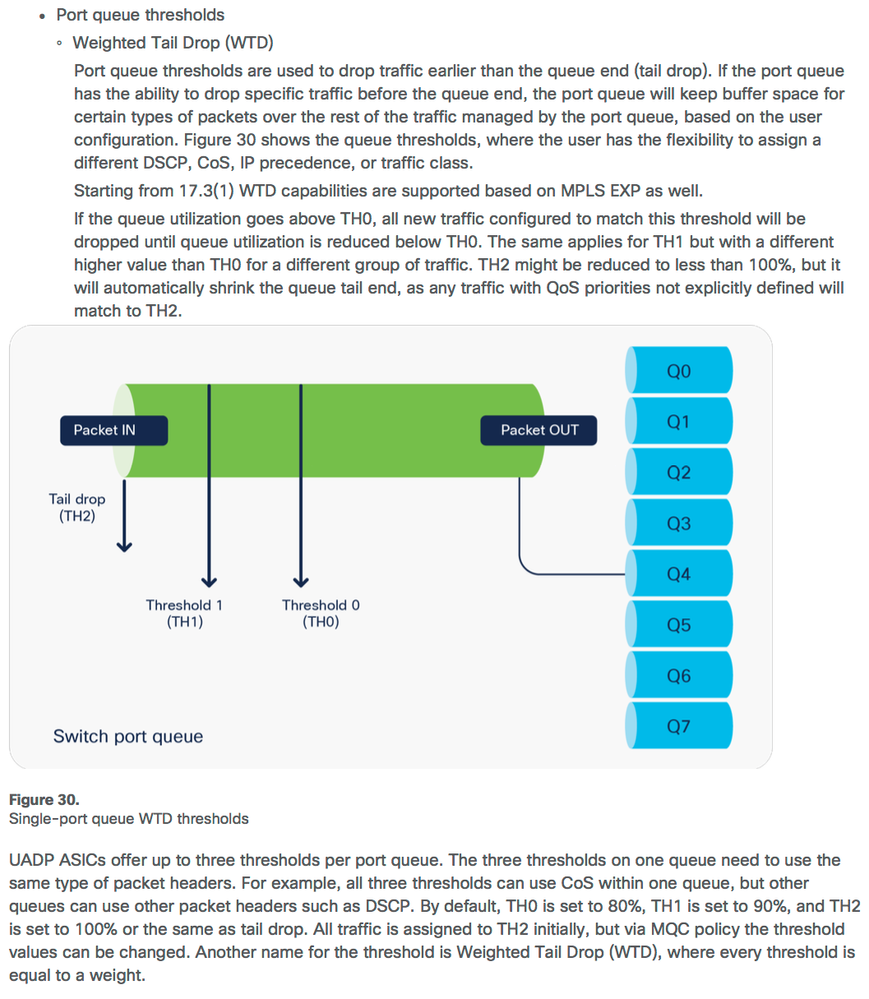

What are Drop-TH0, Drop-TH1 and Drop-TH2?


05-06-2022 04:15 PM

"Is the only solution for this to replace the 3k switch with a 9k switch?"

With a 9K, only? Probably not. With a "better" switch? Probably.

BTW, 9Ks come in different model series; if you were going to use one, which model series you select might be very important.

However, in case I was unclear in my prior posting: QoS might mitigate your problem, but as you're not doing QoS now, I didn't believe you wanted to go "down the rabbit hole" (or, to use a more current expression, take the red pill; laugh).

There's often much you can do with advanced QoS, but it also needs much care and feeding. Something like "qos queue-softmax-multiplier 1200" is about as simple a QoS configuration change as you can make, and on 3650/3850 platforms it often has a beneficial effect without creating negative side effects. Advanced QoS, though, can be "complicated", and done incorrectly, it can make some things, or all things, worse.

In the prior generation of 3K switches (3560/3750), many noted "new" performance issues (almost always increased drop rates) when QoS was enabled. (NB: on the 3560/3750, QoS is disabled by default; on the 3650/3850, QoS is [I believe] enabled by default and cannot be disabled.) The first easy fix was to disable QoS unless you really believed you needed it. The second easy fix was to configure the equivalent of the "qos queue-softmax-multiplier 1200" command. If neither of those corrected the problem, either look for a "better" switch or determine whether advanced QoS might mitigate the issue.

As an example of the latter, I had a case where a 3750G, with QoS disabled, was dropping multiple packets per second on two ports. Each of those ports had only one host, an Ethernet SAN device.

By enabling QoS and making some very significant QoS changes, I decreased the drop rate (again, multiple drops per second) down to a few drops per day!!! It didn't seem to impair operations on any other ports, yet the QoS changes I made left that 3750 very "fragile", in the sense that even a minor change in traffic on that particular switch could really "upset the apple cart". (Long-term solution: we budgeted for a "better" switch.)

BTW, when Cisco had the 3560/3750, they offered the 4948 in about the same time frame. A "G" variant of the 3560/3750 could be had with 48 gig copper ports, which the 4948 provided too. Under the hood, however, they were very different switches.

For an analogy, consider a VW Beetle vs. a Porsche. Both have four tires, right? Those tires might even be identical between the two cars, but would you expect that, with exactly the same tires, the two cars will perform just the same?

If you want to further pursue a possible QoS mitigation solution, I might be able to help there, somewhat, but I expect the rabbit hole to be rather deep.


05-03-2022 04:07 PM

"Can it be microbursts?"

Certainly possible, even likely. One reason likely . . .

"As you can see from the screenshots, the data rate is way below 10G, even below 1G."

Extreme bursting can drive effective TCP data transfer rates way, way down. The usual cause is timeouts waiting on ACKs for data packets that were sent but dropped.

"Is there a way to tell what packets/data is being discarded?"

Unsure on a 3850 platform, beyond directing different traffic types to different egress queues and checking egress queue stats.

"Do we need to bother with it at all as we don't experience any problems??"

Ah, (laugh) that's the 64 bit question. If you truly don't believe it's adverse to your service requirements, then what you have is only an "academic" problem.

I've written on these forums that I consider an interface congested whenever a frame/packet cannot be immediately transmitted. Yet whether one frame/packet is waiting, or a thousand, such "congestion" doesn't define whether you have a problem or not. If congestion is a problem, then it might be mitigated with additional bandwidth and/or QoS. (BTW, you can also have issues w/o any "congestion". In such cases, QoS generally won't help, while additional bandwidth may, or may not, help.)
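The definition of congestion above (a frame that cannot be sent immediately) can be illustrated with a toy slot-based queue model. Everything here is hypothetical: one frame can leave per time slot, at most 20 frames can wait, and the traffic is idle except for a five-slot burst. Even at an average load of 5% of capacity, the burst overflows the buffer.

```python
from __future__ import annotations


def simulate(arrivals: list[int], service_per_slot: int, buffer_slots: int) -> tuple[int, int]:
    """Toy FIFO egress queue, one step per time slot.

    arrivals[i]       frames arriving in slot i
    service_per_slot  frames the port can transmit per slot
    buffer_slots      frames that can wait in the buffer
    Returns (congested_slots, dropped_frames).
    """
    queue = congested = dropped = 0
    for arriving in arrivals:
        queue += arriving
        # Frames beyond what can be sent now plus what the buffer holds are tail-dropped.
        overflow = max(0, queue - service_per_slot - buffer_slots)
        dropped += overflow
        queue -= overflow
        # Congested in the sense above: at least one frame cannot be sent immediately.
        if queue > service_per_slot:
            congested += 1
        queue = max(0, queue - service_per_slot)
    return congested, dropped


# Hypothetical traffic: 995 idle slots followed by a 5-slot burst of 10 frames each.
traffic = [0] * 995 + [10] * 5
print(sum(traffic) / len(traffic))  # average load 0.05 frames/slot, i.e. 5% of capacity
print(simulate(traffic, service_per_slot=1, buffer_slots=20))  # (5, 25)
```

Half of the burst is lost even though the long-run average would look almost idle on a utilization graph.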

In cases where congestion isn't considered a problem, QoS and/or plans to add bandwidth might be made to possibly avoid/mitigate future congestion (e.g. possibly QoS configured now) or quickly mitigate future congestion (e.g. SFP to SFP+, Etherchannel, multiple ECMP L3 paths, etc.).

"Any other recommendations?"

If what you're seeing doesn't appear to be adverse to your service requirements, continue to monitor your service levels and address the issue then. However, that approach might lead you into being "slow" to mitigate a future service issue as you, yet, don't really understand the current cause of what you're seeing now.

In other words, it's probably worthwhile to get to the "cause now", so you can mitigate it, if needed, in the future, but as you don't have a "problem" now, this could be a low priority project.

Notwithstanding the foregoing, the most likely cause is the 3850 having insufficient buffer resources for your traffic (not an uncommon issue with Catalyst 3Ks, including earlier generations).

There's a reason Cisco has much (much) more expensive switches in their product lineup, and/or why they generally recommend their Nexus series for data centers. Different switches all seem to have FE, gig, 10g, etc. ports, but what's "behind" those ports can be quite different, as are their "performance" capabilities.

An independent benchmark for most switches might show a switch capable of "wire-speed/line-rate" on all ports concurrently (e.g. port1=>port2, p2=>p3, . . . , p48=>p1), but that's very rarely a real-world usage pattern.

I cannot say regarding resource allocations on a 3850, but on the prior 3750 there was a fixed (and equal) buffer RAM allocation per bank of 24 copper ports and for the "uplink" ports. I.e., balancing port usage across these banks can better share buffer RAM.

Or, again I cannot say regarding the 3850s, but on the first-gen 3750, ring usage was rather wasteful (e.g., traffic between two ports on the same physical switch member was also transmitted on the ring, and traffic sent between stack members was removed from the ring by the original sending switch, not the receiving switch). Besides offering more stack ring bandwidth, the 3850 likely uses the ring better than a 3750 too, yet it is unlikely to be as efficient as a fabric within a chassis switch.

Or, in other words again, you may find the "best" long-term solution is to replace your 3K switches with "better" switches. (Also again, there really is a reason they cost more, beyond increased profit margins.)

(BTW, on the old Catalyst 6500s/7600s, much of the platform's overall "performance" also depended on the line card[s {plural because even the mix of line cards impacted overall performance}], and what optional modules it might have, that hosted its gig, 10g, etc., ports.)


05-05-2022 03:23 AM - edited 05-05-2022 03:25 AM

Hi Joseph,

Thank you very much for your detailed answer.

I appreciate that.

I get your point to solve the issue as quickly as possible, even if it ain't a "problem" yet.

I will continue to try to find the cause, even if time is short.

I'd love to replace the 3K switches, but I have to convince the boss first.

Last time he said "if I don't notice a difference on my PC afterwards, we don't need new switches"

Maybe I should limit his access port to 10Mbit


05-06-2022 06:05 AM

Hi @reccon

This looks like microbursts.

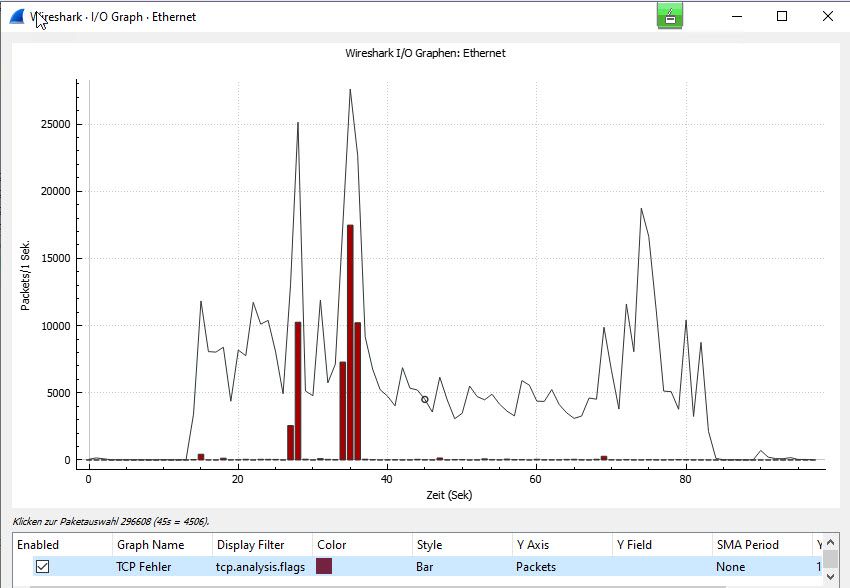

You can confirm this using packet captures. If the drops are frequent or happen at fixed intervals, you can simply SPAN the port and then generate an I/O graph in Wireshark.
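The I/O graph idea can be sketched in code: sum the bytes per 1 ms window and look at the tallest bars. The capture below is hypothetical (a light background flow plus a 400-frame burst at 2-microsecond spacing); in practice the timestamps and frame sizes would come from the SPAN capture. The point is that the 1-second average stays in single-digit Mbit/s while one 1 ms window runs at several Gbit/s.

```python
from collections import Counter


def busiest_windows(frames, window_us=1000, top=3):
    """frames: iterable of (timestamp_us, frame_bytes) pairs.

    Sums bytes per fixed window (1 ms by default), like an I/O graph with a 1 ms
    interval, and returns [(window_start_us, Mbit/s)] for the busiest windows.
    """
    per_window = Counter()
    for ts_us, size in frames:
        per_window[ts_us // window_us] += size
    # Bits per microsecond is numerically the same as Mbit/s.
    return [(w * window_us, n * 8 / window_us) for w, n in per_window.most_common(top)]


# Hypothetical capture: one 1500-byte frame every 10 ms for 1 second ...
frames = [(i * 10_000, 1500) for i in range(100)]
# ... plus 400 frames of 1500 bytes, 2 microseconds apart, starting at t = 500 ms.
frames += [(500_000 + 2 * k, 1500) for k in range(400)]

average_mbps = sum(size for _, size in frames) * 8 / 1e6  # over the 1-second capture
print(f"1-second average: {average_mbps:.1f} Mbit/s")
print(busiest_windows(frames, top=1))  # [(500000, 4812.0)]
```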

Refer to this guide for step by step instructions-

On the 3850, egress packet drops are displayed in bytes, not in number of packets. If you want to see the number of packets, you can use the CLI command 'qos queue-stats-frame-count'.

Once the drops are counted in packets, you can also use EEM to configure SPAN. Embedded captures may not be useful, since the platform throttles them to a certain maximum number of packets per second.

You can try the following EEM trigger-

event interface name interface-id parameter output_packets_dropped entry-op ge entry-val # entry-type increment poll-interval #
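EEM's interface event detector can act on the change of a counter between polls (entry-type increment) rather than on its absolute value. The sketch below only models that comparison in Python to show when such an applet would fire; the counter readings are made up, and the exact trigger semantics are defined by Cisco's EEM documentation, not by this code.

```python
def increment_triggers(readings, threshold):
    """Return the poll indexes where a counter grew by at least `threshold`
    since the previous poll (a model of an 'increment'-style check)."""
    return [
        i for i in range(1, len(readings))
        if readings[i] - readings[i - 1] >= threshold
    ]


# Hypothetical output_packets_dropped readings, one per poll interval.
readings = [1000, 1003, 1003, 1080, 1081, 1150]
print(increment_triggers(readings, threshold=50))  # [3, 5]
```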

Thanks,

Ashish


05-09-2022 12:59 AM - edited 05-09-2022 06:59 AM

Hi @ashishr

Thanks for your answer.

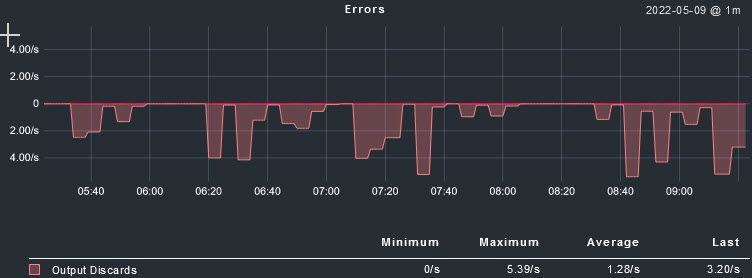

I configured 'qos queue-stats-frame-count' to see the number of packets dropped.

The graph is much less intimidating now. The drop rate is around 0.02-0.04%.

I captured some of the traffic while experiencing packet drops, which seems to confirm the suspicion of a bursty-traffic problem.

I used an EEM to write a syslog entry every time more than 50 packets are dropped.

This seems to happen quite regularly. Now I need to find out the source...

```
002256: May 9 2022 14:25:16.949 CEST: %HA_EM-4-LOG: output-drop: TenGigabitEthernet2/0/17 packets dropped
002257: May 9 2022 14:30:22.437 CEST: %HA_EM-4-LOG: output-drop: TenGigabitEthernet2/0/17 packets dropped
002258: May 9 2022 14:50:13.549 CEST: %HA_EM-4-LOG: output-drop: TenGigabitEthernet2/0/17 packets dropped
002259: May 9 2022 14:55:19.078 CEST: %HA_EM-4-LOG: output-drop: TenGigabitEthernet2/0/17 packets dropped
002260: May 9 2022 15:00:14.343 CEST: %HA_EM-4-LOG: output-drop: TenGigabitEthernet2/0/17 packets dropped
002261: May 9 2022 15:05:14.910 CEST: %HA_EM-4-LOG: output-drop: TenGigabitEthernet2/0/17 packets dropped
002905: May 9 2022 15:15:20.899 CEST: %HA_EM-4-LOG: output-drop: TenGigabitEthernet2/0/17 packets dropped
003451: May 9 2022 15:25:15.139 CEST: %HA_EM-4-LOG: output-drop: TenGigabitEthernet2/0/17 packets dropped
003452: May 9 2022 15:25:20.140 CEST: %HA_EM-4-LOG: output-drop: TenGigabitEthernet2/0/17 packets dropped
003581: May 9 2022 15:30:30.489 CEST: %HA_EM-4-LOG: output-drop: TenGigabitEthernet2/0/17 packets dropped
003945: May 9 2022 15:35:30.922 CEST: %HA_EM-4-LOG: output-drop: TenGigabitEthernet2/0/17 packets dropped
```
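Listing how far each event falls after a 5-minute wall-clock boundary makes the regularity easier to see. The timestamps are those from the log above; a consistent offset of under a minute after each 5-minute mark might point to something running on a 5-minute schedule, but that is only a hint and would still need to be confirmed in the capture.

```python
from datetime import datetime

# Timestamps of the EEM syslog messages above (all on May 9, 2022, CEST).
events = ["14:25:16.949", "14:30:22.437", "14:50:13.549", "14:55:19.078",
          "15:00:14.343", "15:05:14.910", "15:15:20.899", "15:25:15.139",
          "15:25:20.140", "15:30:30.489", "15:35:30.922"]


def seconds_past_5min(ts: str) -> float:
    """Seconds elapsed since the most recent 5-minute wall-clock boundary."""
    t = datetime.strptime(ts, "%H:%M:%S.%f")
    return (t.minute % 5) * 60 + t.second + t.microsecond / 1e6


offsets = [seconds_past_5min(ts) for ts in events]
print([round(o) for o in offsets])  # [17, 22, 14, 19, 14, 15, 21, 15, 20, 30, 31]
```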


05-10-2022 06:41 AM - edited 05-10-2022 06:43 AM

Hi @reccon

Try the Conversations option in Wireshark (Statistics > Conversations). If you find anything out of the ordinary, you can monitor that flow for a pattern, or perhaps a change in application behaviour. It will not identify the source exactly, but if you know the typical traffic pattern, it should help in identifying any out-of-the-ordinary behaviour.
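Wireshark's Statistics > Conversations view is essentially a group-by over the capture. The sketch below does the same for a handful of hypothetical (source, destination, bytes) records, folding both directions of a host pair into one conversation; the IP addresses and sizes are made up for illustration.

```python
from collections import defaultdict


def top_conversations(packets, top=3):
    """packets: iterable of (src, dst, frame_bytes).

    Adds up bytes per host pair, counting both directions as one conversation,
    and returns the `top` pairs by bytes, largest first.
    """
    totals = defaultdict(int)
    for src, dst, size in packets:
        totals[tuple(sorted((src, dst)))] += size
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:top]


# Hypothetical records; real ones would come from the SPAN capture.
packets = [
    ("10.0.0.1", "10.0.1.1", 9000),
    ("10.0.1.1", "10.0.0.1", 64),
    ("10.0.0.5", "10.0.1.9", 1500),
    ("10.0.0.1", "10.0.1.1", 9000),
]
print(top_conversations(packets, top=1))  # [(('10.0.0.1', '10.0.1.1'), 18064)]
```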

Thanks,

Ashish Ranjan
