Cisco Community (Switching)

Re: L3 Routing problem with Nexus VPC pair in typical core position


03-18-2022 02:45 PM

This is a standard configuration: two Nexus 9Ks running vPC between them, and a Catalyst access switch trunked at L2 redundantly to both Nexus switches. All L3 SVIs live on the Nexus core and are configured with HSRP on each core box.

```
N9K-1 -----+
  ||       |
  ||       +== CAT3k-1 (VLANs 100/200)
  ||       |
N9K-2 -----+
```

The CAT3k switch is port-channeled to the two N9Ks and trunked for VLANs 100/200. The vPC peer link carries VLANs 100/200 and uses the same native VLAN as the CAT3k port-channel.

I noticed an odd problem: not all traceroutes go through the primary HSRP interfaces (N9K-1) when I test back and forth between VLAN 100 and VLAN 200. In other words, N9K-1 has the higher HSRP and vPC priority, yet traffic goes through N9K-2 when routing to VLAN 100:

N9K-1:

```
interface vlan100
  ip address 192.168.100.2
  hsrp version 2
  hsrp 1
    preempt
    priority 150
    ip 192.168.100.1
```

N9K-2:

```
interface vlan100
  ip address 192.168.100.3
  hsrp version 2
  hsrp 1
    preempt
    priority 125
    ip 192.168.100.1
```
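The HSRP settings above decide which peer owns the virtual IP in the control plane. Below is a minimal Python sketch of that election, assuming `preempt` on both peers: highest priority wins, and a tie goes to the highest interface address. The class and function names are hypothetical. One caveat: Cisco's vPC design guidance describes HSRP as active/active in the data plane, meaning the standby peer also forwards frames sent to the HSRP virtual MAC, so a traceroute can show N9K-2 even while N9K-1 is the active router.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address
from typing import List, Optional


@dataclass
class HsrpPeer:
    name: str
    svi_ip: str          # real SVI address, used only as the tie-breaker
    priority: int        # configured HSRP priority for the group
    svi_up: bool = True  # a shut or down SVI does not take part in the election


def elect_active(peers: List[HsrpPeer]) -> Optional[str]:
    """Return the HSRP active router for one group (control plane only).

    Highest priority wins; on a tie, the highest SVI IP address wins.
    With `preempt`, a recovered higher-priority peer takes over again,
    so the election is simply re-run after every state change.
    """
    candidates = [p for p in peers if p.svi_up]
    if not candidates:
        return None
    best = max(candidates, key=lambda p: (p.priority, IPv4Address(p.svi_ip)))
    return best.name


n9k1 = HsrpPeer("N9K-1", "192.168.100.2", 150)
n9k2 = HsrpPeer("N9K-2", "192.168.100.3", 125)
print(elect_active([n9k1, n9k2]))  # N9K-1
n9k1.svi_up = False                # e.g. Vlan100 shut on N9K-1
print(elect_active([n9k1, n9k2]))  # N9K-2
```

The sketch says nothing about which peer actually forwards a given packet; that is decided by which uplink a frame arrives on.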

Here is the vPC config (`role priority` is 200 on N9K-1, the primary, and 300 on N9K-2, the secondary):

```
vpc domain 1
  peer-switch
  role priority 200
  peer-keepalive destination 1.1.1.1 source 1.1.1.2 vrf vpckeepalive
  peer-gateway
  fast-convergence
  ip arp synchronize
```
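For comparison with HSRP, here is a minimal sketch of the initial vPC role election: the lower `role priority` value becomes primary, and a tie goes to the lower system MAC. The MAC addresses and names are hypothetical. As far as we understand it, the vPC role governs behaviors such as which peer suspends its vPC member ports when the peer link fails; it does not choose which peer routes a given packet.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class VpcPeer:
    name: str
    role_priority: int  # `role priority` under `vpc domain`; the LOWER value wins
    system_mac: str     # hypothetical MAC, used only to break a priority tie


def mac_to_int(mac: str) -> int:
    # Accept Cisco dotted (aaaa.bbbb.cccc) or colon-separated notation.
    return int(mac.replace(".", "").replace(":", ""), 16)


def elect_roles(a: VpcPeer, b: VpcPeer) -> Dict[str, str]:
    """Return the vPC role of each peer (initial election only)."""
    primary = min((a, b), key=lambda p: (p.role_priority, mac_to_int(p.system_mac)))
    secondary = b if primary is a else a
    return {primary.name: "primary", secondary.name: "secondary"}


roles = elect_roles(
    VpcPeer("N9K-1", 200, "0000.0000.00a1"),
    VpcPeer("N9K-2", 300, "0000.0000.00a2"),
)
print(roles)  # {'N9K-1': 'primary', 'N9K-2': 'secondary'}
```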

When I traceroute between VLANs 100 and 200, the hop that responds is sometimes N9K-2's SVI, even though N9K-1 has the higher HSRP and vPC domain priority. When I shut one of the HSRP interfaces (VLAN 100 or 200) on only one of the N9Ks, connectivity between the subnets goes on and off intermittently. So I suspect traffic is arriving on the CAT3k uplink to N9K-1 (for example) while N9K-1's VLAN 100 interface is down, and the vPC peer link is not carrying that traffic over to N9K-2's VLAN 100 interface. There is no routing between N9K-1 and N9K-2 over the vPC peer link. All VLANs are L2, with the L3 SVIs on each N9K, and VLANs 100/200 are allowed on the vPC peer link.

Does anyone have a clue? I suspect it's related to the peer-gateway or peer-switch command. Essentially, to make it work I have to shut all SVIs on one N9K or the other. My port-channel hash algorithm is ip-src-dst load balancing.
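Why the first hop alternates can be illustrated with a toy src-dst IP hash. This is a sketch under stated assumptions: the XOR-modulo hash is hypothetical and is not the real Catalyst or Nexus algorithm, and the VLAN 200 address 192.168.200.20 is made up. What it shows is that the access switch picks an uplink per flow, deterministically, without regard to HSRP priority, so different source/destination pairs land on different Nexus peers.

```python
from ipaddress import IPv4Address
from typing import List

# Members of the CAT3k port-channel, one uplink to each Nexus.
UPLINKS: List[str] = ["N9K-1", "N9K-2"]


def pick_uplink(src: str, dst: str) -> str:
    """Toy src-dst IP hash: XOR the two addresses, then take the result
    modulo the member count. NOT the real Catalyst/Nexus hash; it only
    illustrates that the choice is per flow and independent of HSRP."""
    h = int(IPv4Address(src)) ^ int(IPv4Address(dst))
    return UPLINKS[h % len(UPLINKS)]


# Hypothetical hosts: two VLAN 100 workstations talking to one VLAN 200 host.
for src in ("192.168.100.10", "192.168.100.11"):
    print(src, "->", pick_uplink(src, "192.168.200.20"))
# 192.168.100.10 -> N9K-1
# 192.168.100.11 -> N9K-2
```

Because XOR is symmetric, this toy hash sends both directions of a flow to the same member; real hashes and the two different port-channels involved (access switch and firewall) need not behave that way.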


Labels: Catalyst 3000


03-21-2022 11:47 AM - edited 03-21-2022 11:51 AM

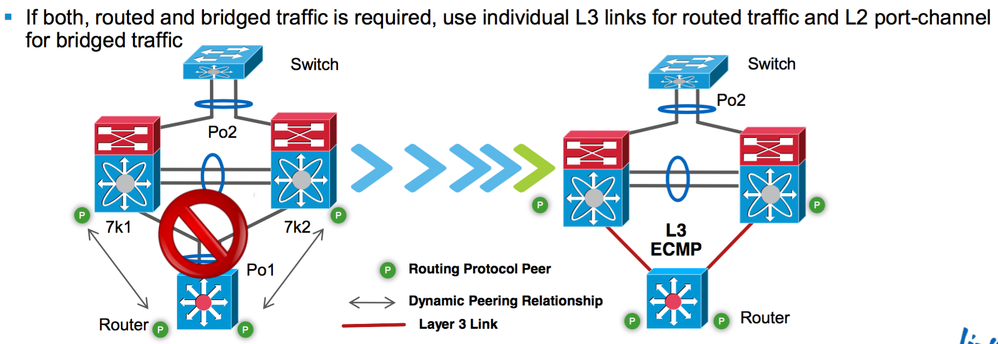

I did some more testing today, and here is my initial conclusion: Nexus switches with L3 interfaces that do any sort of routing need a routing protocol between them to handle all the failure scenarios. I had suspected this. The forward path from the workstation doesn't seem to be the broken piece; it's the return path that breaks. It's also probably made worse by the Nexus peer-gateway command. Here is what appears to be happening:

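In the steps below, the "down-v100 Nexus" is the switch whose VLAN 100 SVI is shut, and the "up-v100 Nexus" is the one whose VLAN 100 SVI is up.

1. The workstation sends the frame over either port-channel link, so it reaches either Nexus.
2. If the down-v100 Nexus receives the frame, it bridges it over the peer link to the up-v100 Nexus SVI.
3. If the up-v100 Nexus receives the frame, it processes it directly.
4. In both cases, the up-v100 Nexus routes the packet out its VLAN 200 SVI.
5. The packet is then forwarded to the firewall.
6. The firewall sends it to the DMZ, where the DMZ host replies.
7. The firewall sends the reply back over either port-channel link, to either Nexus.
8. Both Nexus switches have VLAN 200 up, so either one can receive the IP packet.
9. If the up-v100 Nexus receives it, it routes the packet out its VLAN 100 SVI.
10. If the down-v100 Nexus receives it, it tries to route the packet and fails, because it has no up VLAN 100 interface and no routed path to its peer.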
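The asymmetry described above can be captured in a small model. This is a sketch, assuming Vlan100 is shut on N9K-1 only and no routed link exists between the peers; the dictionary layout and function names are hypothetical. On the forward path a switch without the VLAN 100 SVI can still bridge the frame to its peer at L2, but on the return path the receiving switch must route into VLAN 100 itself.

```python
from typing import Dict, Set

# Failure case: Vlan100 is shut on N9K-1 only, and there is no
# routed link between the two Nexus switches.
SVIS_UP: Dict[str, Set[int]] = {"N9K-1": {200}, "N9K-2": {100, 200}}


def up_peer(vlan: int) -> str:
    """Return a Nexus whose SVI for `vlan` is up."""
    return next(sw for sw, vlans in SVIS_UP.items() if vlan in vlans)


def forward_path(ingress: str) -> str:
    """Workstation (VLAN 100) -> gateway. A Nexus without an up Vlan100
    SVI bridges the frame over the peer link, so the up-v100 Nexus always
    does the routing. Returns the switch that routes the packet."""
    return ingress if 100 in SVIS_UP[ingress] else up_peer(100)


def return_path(ingress: str) -> str:
    """Firewall reply (VLAN 200) -> VLAN 100 host. The receiving Nexus
    must route into VLAN 100 itself; it has no route via its peer."""
    return "delivered" if 100 in SVIS_UP[ingress] else "dropped"


for sw in ("N9K-1", "N9K-2"):
    print(f"request lands on {sw}: routed by {forward_path(sw)}")
    print(f"reply lands on {sw}: {return_path(sw)}")
```

Which switch the reply lands on is picked by the firewall-side port-channel hash, which would explain why connectivity appears to flap per flow rather than fail outright.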

The peer-gateway command only compounds this: it basically lets either vPC peer respond at L3 on behalf of the other, so the packet can be processed by either one, effectively at random (whichever peer the port-channel hash selects).

So I think the fix is to create another L3 "peer link" between the two core Nexus switches. This is not really a peer link but a dog-leg routed subnet that can send the packet to the Nexus with the up VLAN 100 SVI, regardless of which Nexus it arrives on. It would look something like this:

```
                   +------- N9K-1 -------+
                   |        ||  ||       |
DMZ --- Firewall --+    vPC ||  || L3 PO +--- CAT3k-1 (VLAN 100)
     (VLAN 200)    |        ||  ||       |
                   +------- N9K-2 -------+
```

Now, with a routing protocol, the down-v100 N9K can send the packet to the correct place.
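What the routed link changes is the lookup on the down-v100 switch. Below is a minimal longest-prefix-match sketch, assuming a /24 mask on VLAN 100, a hypothetical 192.168.200.0/24 on VLAN 200, and a hypothetical 10.255.255.0/30 transfer subnet on the new L3 port-channel, with N9K-2 at 10.255.255.2 advertising its connected VLAN 100 subnet through a routing protocol.

```python
from ipaddress import IPv4Address, IPv4Network
from typing import List, Optional, Tuple

# (prefix, next hop or exit interface)
Route = Tuple[IPv4Network, str]


def lookup(table: List[Route], dst: str) -> Optional[str]:
    """Longest-prefix match, as a router's forwarding lookup does."""
    addr = IPv4Address(dst)
    matches = [r for r in table if addr in r[0]]
    if not matches:
        return None
    return max(matches, key=lambda r: r[0].prefixlen)[1]


# N9K-1 with Vlan100 shut: only VLAN 200 (hypothetical /24) is connected.
n9k1_today: List[Route] = [
    (IPv4Network("192.168.200.0/24"), "Vlan200"),
]

# Same switch after adding the routed port-channel (hypothetical /30)
# and a routing protocol: N9K-2 advertises its connected VLAN 100 subnet.
n9k1_with_l3_link: List[Route] = n9k1_today + [
    (IPv4Network("10.255.255.0/30"), "port-channel to N9K-2"),
    (IPv4Network("192.168.100.0/24"), "via 10.255.255.2"),
]

print(lookup(n9k1_today, "192.168.100.50"))         # None -> dropped
print(lookup(n9k1_with_l3_link, "192.168.100.50"))  # via 10.255.255.2
```

A static route over the same link would cover this single failure, but a routing protocol also withdraws the route if N9K-2 loses its VLAN 100 SVI, which is presumably why the post reaches for one.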

When I originally tested, the peer-gateway command was doing something odd to the L3 port-channel, so I had to turn it off. I think I'll have to try it again. If anyone knows how peer-gateway affects the non-vPC SVI on the inter-core L3 link, please advise. Many thanks to those who spent some brainpower on this.

My conclusion is that you always need both an L2 (peer link) and an L3 port-channel between the Nexus switches when they run SVIs. If anyone disagrees, please advise on how to work around this routing issue. The only workaround I can see is to force all HSRP SVIs to fail over when one SVI is sensed down, and to turn off the peer-gateway command. But it seems rather intrusive to fail over all L3 interfaces when only one SVI is down on one Nexus.
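The "fail over everything" workaround resembles HSRP object tracking with a priority decrement. Below is a minimal sketch of that decision, assuming each switch tracks a hypothetical "all my SVIs are up" object and lowers every group's priority by a hypothetical 30 when it goes down (enough to drop 150 below 125). It models only the HSRP control plane; with vPC's active/active forwarding for the virtual MAC, moving the active role does not by itself control which peer routes a given packet.

```python
from typing import Dict, Optional

BASE_PRIORITY: Dict[str, int] = {"N9K-1": 150, "N9K-2": 125}  # from the HSRP configs
DECREMENT = 30  # hypothetical decrement applied by object tracking


def active_for_group(vlan: int, svis_up: Dict[str, Dict[int, bool]]) -> Optional[str]:
    """HSRP active router for one VLAN's group when each switch tracks
    'all of my SVIs are up' and lowers every group's priority if not.
    Ignores the IP-address tie-breaker for brevity."""
    priorities: Dict[str, int] = {}
    for sw, state in svis_up.items():
        if not state[vlan]:
            continue  # this switch's SVI for the VLAN is down: not a candidate
        prio = BASE_PRIORITY[sw]
        if not all(state.values()):
            prio -= DECREMENT  # some other local SVI is down
        priorities[sw] = prio
    if not priorities:
        return None
    return max(priorities, key=lambda sw: priorities[sw])


healthy = {"N9K-1": {100: True, 200: True}, "N9K-2": {100: True, 200: True}}
v100_down_on_n9k1 = {"N9K-1": {100: False, 200: True}, "N9K-2": {100: True, 200: True}}

for vlan in (100, 200):
    print(vlan, active_for_group(vlan, healthy), active_for_group(vlan, v100_down_on_n9k1))
# 100 N9K-1 N9K-2
# 200 N9K-1 N9K-2
```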


03-21-2022 12:04 PM

Hi mhm, I think your answer is in line with what I described in my latest post about the routing behavior, so thank you for reaching out!


03-21-2022 10:19 AM


03-21-2022 12:19 PM

Thanks mhm. The diagram you recently uploaded doesn't work or apply in all cases. For example, our firewall does not support routing, so it cannot form an L3 relationship with the Nexus switches. Additionally, running a routing protocol on all the access switches is a burden and adds complexity. What I will try is to establish routing between the two 9Ks (7Ks in your diagram) over another port-channel between the two Nexus peers. Then, if one peer's SVI for an access VLAN is down, it can route the L3 traffic to the peer whose SVI is up.
