
Nexus - VPC and ESX


06-13-2019 02:32 AM - edited 06-13-2019 06:17 AM

Hello all,

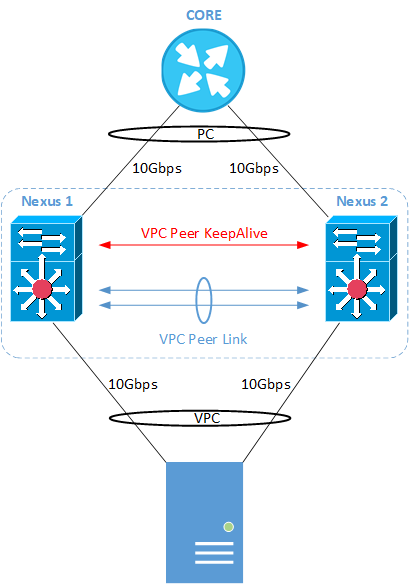

I have two Nexus 93180YC-FX switches in vPC mode. One ESX host is currently connected through a vPC with two 10 Gbps links, one on each Nexus.

The load-balancing method on the Nexus side is: port-channel load-balance src-dst ip

The Nexus switches are used only at Layer 2; Layer 3 is handled by devices such as the ESX hosts.

My problem is that only one link between the Nexus switches and the ESX host carries the traffic, and it is always the same one. I know there is a role priority between the two Nexus switches, but I would like both Nexus switches and both links to carry the traffic to the ESX host 50/50. Is this possible in vPC mode?
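Why one link can end up carrying everything: a hash-based port-channel chooses the member link per flow from the fields named in the load-balance method, so with src-dst ip every packet of a given source/destination IP pair takes the same link, and the split follows the IP pairs rather than a per-packet 50/50. A minimal Python sketch, assuming a hypothetical XOR-based toy hash and made-up addresses (this is not the hash the Nexus ASIC actually uses):

```python
import ipaddress
from functools import reduce


def pick_member(src_ip: str, dst_ip: str, members: int) -> int:
    """Choose a member link from the source/destination IPs only.

    Hypothetical toy hash (XOR of the address bytes, modulo the link count);
    real switch hashing differs, but it is likewise deterministic per flow.
    """
    key = ipaddress.ip_address(src_ip).packed + ipaddress.ip_address(dst_ip).packed
    return reduce(lambda acc, b: acc ^ b, key, 0) % members


# Hypothetical traffic: 900 packets for one IP pair, 100 for another.
packets = [("10.0.74.1", "10.0.74.10")] * 900 + [("10.0.74.2", "10.0.74.10")] * 100

per_link = [0, 0]
for src, dst in packets:
    per_link[pick_member(src, dst, 2)] += 1

print(per_link)  # [100, 900]: the split follows the IP pairs, not the packets
```

If most of the traffic belongs to one or two IP pairs, one member link will dominate even though the bundle itself is healthy.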

Nexus settings:

Nexus 1

- role priority 100

- vpc domain 20

- vpc role primary

Nexus 2

- role priority 200

- vpc domain 20

- vpc role secondary

Regards,

Labels: LAN Switching


06-13-2019 04:40 AM

I'm set up like that as well. Looking at the packet counters on both switches, traffic from the ESX ROIB servers is using both links with the load-balance setting below. Also check that the teaming is configured correctly on the ESX side; our systems team looks after that for me, but maybe you have access to check it.

```
SH RUN | I load
port-channel load-balance ethernet source-dest-port

interface port-channel3
  description VPC for xxxxxxxxxxxxxxxxxxxxx
  no lacp suspend-individual
  switchport access vlan 74
  logging event port link-status
  logging event port trunk-status
  vpc 3
```

SW1

```
XXXXXXX# sh int e1/3 | sEC TX
TX
6361229910 unicast packets 1088086069 multicast packets 421158334 broadcast packets
7870474313 output packets 3785465685698 bytes
1880947130 jumbo packets
0 output error 0 collision 0 deferred 0 late collision
0 lost carrier 0 no carrier 0 babble 0 output discard
0 Tx pause

XXXXXXX# sh int e1/3 | sEC TX
TX
6361233307 unicast packets 1088086225 multicast packets 421158391 broadcast packets
7870477923 output packets 3785466416349 bytes
1880947161 jumbo packets
0 output error 0 collision 0 deferred 0 late collision
0 lost carrier 0 no carrier 0 babble 0 output discard
0 Tx pause
```

SW2

```
XXXXXXX# sh int e1/3 | Sec TX
TX
27362921686 unicast packets 1087363315 multicast packets 418516069 broadcast packets
28868801070 output packets 6296658032011 bytes
1386303909 jumbo packets
0 output error 0 collision 0 deferred 0 late collision
0 lost carrier 0 no carrier 0 babble 0 output discard
0 Tx pause

XXXXXXX# sh int e1/3 | Sec TX
TX
27362924912 unicast packets 1087363469 multicast packets 418516132 broadcast packets
28868804513 output packets 6296658496978 bytes
1386303909 jumbo packets
0 output error 0 collision 0 deferred 0 late collision
0 lost carrier 0 no carrier 0 babble 0 output discard
```
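The two snapshots taken on each switch can be compared by subtracting the TX counters: a non-zero delta on e1/3 of both switches means both vPC member links were transmitting between the two runs. A minimal Python sketch with the values copied from the outputs above; the dictionary key names are our own labels, not NX-OS field names, and since the snapshots on each switch were taken independently this shows that both links carry traffic, not an exact ratio:

```python
# Counter values copied from the two "sh int e1/3 | sec TX" runs on each switch.
# The key names are our own labels for the NX-OS output fields.
SNAPSHOTS = {
    "SW1": (
        {"unicast": 6361229910, "multicast": 1088086069, "broadcast": 421158334,
         "output_packets": 7870474313, "bytes": 3785465685698},
        {"unicast": 6361233307, "multicast": 1088086225, "broadcast": 421158391,
         "output_packets": 7870477923, "bytes": 3785466416349},
    ),
    "SW2": (
        {"unicast": 27362921686, "multicast": 1087363315, "broadcast": 418516069,
         "output_packets": 28868801070, "bytes": 6296658032011},
        {"unicast": 27362924912, "multicast": 1087363469, "broadcast": 418516132,
         "output_packets": 28868804513, "bytes": 6296658496978},
    ),
}


def counter_deltas(before: dict, after: dict) -> dict:
    """Return how much each TX counter grew between two snapshots."""
    return {name: after[name] - before[name] for name in before}


results = {sw: counter_deltas(b, a) for sw, (b, a) in SNAPSHOTS.items()}

for switch, d in results.items():
    # Sanity check on these snapshots: unicast + multicast + broadcast
    # adds up to the output packet count.
    assert d["unicast"] + d["multicast"] + d["broadcast"] == d["output_packets"]
    print(f"{switch}: {d['output_packets']} packets, {d['bytes']} bytes sent on e1/3")
# SW1: 3610 packets, 730651 bytes sent on e1/3
# SW2: 3443 packets, 464967 bytes sent on e1/3
```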


06-13-2019 11:00 PM

Hello,

The ESX configuration seems good, because on a standalone Nexus (no vPC) the traffic uses both 10 Gbps links.

Could the primary/secondary role be the problem, behaving like an active/standby role?

Regards


06-17-2019 12:48 AM

No, your roles look right. Yes, that's what I would expect on a standalone switch too, and the server side is teamed to load balance as well, yes?

Try the source-destination port load-balance method and see if it makes a difference. I remember we tested a lot of these globally during rollout, and we now use this one widely; it may give you a more even flow over both channels.
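Adding Layer 4 ports to the hash helps when many sessions share the same IP pair: each session usually has a different ephemeral source port, so sessions that would all hash to one link on IPs alone can spread across both. A minimal Python sketch comparing the two hash inputs, assuming a hypothetical XOR-based toy hash, made-up addresses and ports (Cisco's real hash algorithm differs; only the effect of the extra key fields carries over):

```python
import ipaddress
from functools import reduce


def xor_fold(data: bytes) -> int:
    """Hypothetical toy hash: XOR all bytes together (not Cisco's algorithm)."""
    return reduce(lambda acc, b: acc ^ b, data, 0)


def pick_member(src_ip, dst_ip, members, src_port=None, dst_port=None):
    """Pick a member link from the IPs, plus the L4 ports when they are given."""
    key = ipaddress.ip_address(src_ip).packed + ipaddress.ip_address(dst_ip).packed
    if src_port is not None and dst_port is not None:
        key += src_port.to_bytes(2, "big") + dst_port.to_bytes(2, "big")
    return xor_fold(key) % members


# Hypothetical traffic: 200 TCP sessions between one client and one ESX address,
# each session using a different ephemeral source port.
sessions = [("10.0.74.20", "10.0.74.10", 49152 + i, 443) for i in range(200)]

ip_only = [0, 0]
ip_and_ports = [0, 0]
for src, dst, sport, dport in sessions:
    ip_only[pick_member(src, dst, 2)] += 1
    ip_and_ports[pick_member(src, dst, 2, sport, dport)] += 1

print("IP only:      ", ip_only)       # [200, 0]: every session on one link
print("IP + L4 ports:", ip_and_ports)  # [100, 100]: sessions spread over both
```

The trade-off is that a single large session still maps to one link; port-based hashing only helps when the traffic is made of many sessions.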


06-18-2019 10:41 AM

Hello,

OK, I'll try it soon and give you feedback.


07-31-2019 02:43 AM

Hello,

I forgot to give you feedback. The settings are correct and everything works.

Thanks for your support.


07-31-2019 03:14 AM


11-20-2020 02:42 PM

Hello,

This post is relevant to what I would like to ask about.

We have a pair of NX5Ks configured in a vPC doing L2 only. The core switches, to which these two NX5Ks uplink, do the L3 routing, HSRP, etc.

We have several ESX servers uplinked to the vPC peers (NX5K_A-Primary & NX5K_B-Secondary). The systems team required some of these ESX hosts to be uplinked to the NX5Ks using individual (orphan) links. I don't quite understand why the ESX systems can't be configured in a port-channel (vPC). From the switches' standpoint, this setup doesn't provide any redundancy, load balancing, or failover mechanism.

Can you offer any explanation as to why ESX servers don't require their NICs to be configured in a LAG/port-channel if they are dual-attached to the Nexus 5K vPC members?

Thanks in advance,

~zK
