- Cisco Community


Nexus vPC help (GNS3 lab)


05-23-2019 02:44 AM

Hello,

We use Nexus 3Ks as our core switches, and I've been trying to build them in GNS3 because I need to make some changes on the live switches soon and want to test a few things in a lab environment.

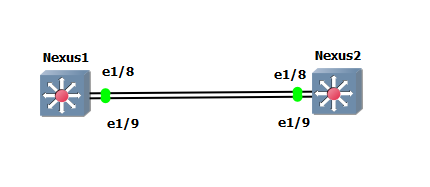

I've put most of the config on the two switches, but I'm hitting issues with the vPC on ports Eth1/8 (keepalive) and Eth1/9 (port channel).

The keepalive seems OK, as the switches can ping each other. However, on the Nexus 2 switch I eventually get BPDU Guard alerts due to loops, and it shuts the ports down; in some cases it reboots the switch (possibly a GNS3 issue).

Can you see anything wrong?

Nexus 1

interface Ethernet1/8

  description vPC KeepAlive

  no switchport

  vrf member vPC-Keepalive

  ip address 172.31.255.253/30

  no shutdown

TEST-LH-LAN-NEXUS-01# sh run int eth1/9

!Command: show running-config interface Ethernet1/9

!Time: Thu May 23 10:28:45 2019

version 7.0(3)I7(3)

interface Ethernet1/9

  description EMG-LH-LAN-NEXUS vPC

  switchport mode trunk

  spanning-tree port type network

  channel-group 4096

TEST-LH-LAN-NEXUS-01#

Nexus 2

interface Ethernet1/8

  description vPC KeepAlive

  no switchport

  vrf member vPC-Keepalive

  ip address 172.31.255.254/30

  no shutdown

interface Ethernet1/9

  description EMG-LH-LAN-NEXUS vPC

  switchport mode trunk

  spanning-tree port type network

  channel-group 4096

From Nexus 1

TEST-LH-LAN-NEXUS-01# sh vpc peer-keepalive

vPC keep-alive status : peer is alive

--Peer is alive for : (159) seconds, (575) msec

--Send status : Success

--Last send at : 2019.05.23 10:32:34 267 ms

--Sent on interface : Eth1/8

--Receive status : Success

--Last receive at : 2019.05.23 10:32:34 3 ms

--Received on interface : Eth1/8

--Last update from peer : (0) seconds, (342) msec

vPC Keep-alive parameters

--Destination : 172.31.255.254

--Keepalive interval : 1000 msec

--Keepalive timeout : 5 seconds

--Keepalive hold timeout : 3 seconds

--Keepalive vrf : vPC-Keepalive

--Keepalive udp port : 3200

--Keepalive tos : 192

From Nexus 2

Test-LH-LAN-NEXUS-02# show vpc peer-keepalive

vPC keep-alive status : peer is alive

--Peer is alive for : (231) seconds, (440) msec

--Send status : Success

--Last send at : 2019.05.23 10:33:46 928 ms

--Sent on interface : Eth1/8

--Receive status : Success

--Last receive at : 2019.05.23 10:33:46 493 ms

--Received on interface : Eth1/8

--Last update from peer : (1) seconds, (0) msec

vPC Keep-alive parameters

--Destination : 172.31.255.253

--Keepalive interval : 1000 msec

--Keepalive timeout : 5 seconds

--Keepalive hold timeout : 3 seconds

--Keepalive vrf : vPC-Keepalive

--Keepalive udp port : 3200

--Keepalive tos : 192

Nexus 1

TEST-LH-LAN-NEXUS-01# sh vpc bri

Legend:

(*) - local vPC is down, forwarding via vPC peer-link

vPC domain id : 990

Peer status : peer adjacency formed ok

vPC keep-alive status : peer is alive

Configuration consistency status : success

Per-vlan consistency status : success

Type-2 consistency status : success

vPC role : primary

Number of vPCs configured : 5

Peer Gateway : Enabled

Dual-active excluded VLANs : 254

Graceful Consistency Check : Enabled

Auto-recovery status : Disabled

Delay-restore status : Timer is off.(timeout = 60s)

Delay-restore SVI status : Timer is off.(timeout = 5s)

Operational Layer3 Peer-router : Disabled

vPC Peer-link status

---------------------------------------------------------------------

id Port Status Active vlans

-- ---- ------ -------------------------------------------------

1 Po4096 up 1,10,20-22,30,40,70-72,80,90,99-100,110,120,130,140,142,150,160,200,202,204,206,208,210,254,300,911,990,998-999

vPC status

----------------------------------------------------------------------------

Id Port Status Consistency Reason Active vlans

-- ------------ ------ ----------- ------ ---------------

10 Po10 down* Not Applicable Consistency Check Not Performed -

11 Po11 down* Not Applicable Consistency Check Not Performed -

14 Po14 down* Not Applicable Consistency Check Not Performed -

20 Po20 down* Not Applicable Consistency Check Not Performed -

21 Po21 down* Not Applicable Consistency Check Not Performed -

Please check "show vpc consistency-parameters vpc <vpc-num>" for the consistency reason of down vpc and for type-2 consistency reasons for any vpc.

TEST-LH-LAN-NEXUS-01#

Nexus 2

Test-LH-LAN-NEXUS-02# sh vpc bri

Legend:

(*) - local vPC is down, forwarding via vPC peer-link

vPC domain id : 990

Peer status : peer link is down

vPC keep-alive status : peer is alive

Configuration consistency status : failed

Per-vlan consistency status : success

Configuration inconsistency reason: Consistency Check Not Performed

Type-2 inconsistency reason : Consistency Check Not Performed

vPC role : none established

Number of vPCs configured : 5

Peer Gateway : Enabled

Dual-active excluded VLANs : 254

Graceful Consistency Check : Disabled (due to peer configuration)

Auto-recovery status : Disabled

Delay-restore status : Timer is off.(timeout = 60s)

Delay-restore SVI status : Timer is off.(timeout = 5s)

Operational Layer3 Peer-router : Disabled

vPC Peer-link status

---------------------------------------------------------------------

id Port Status Active vlans

-- ---- ------ -------------------------------------------------

1 Po4096 up -

vPC status

----------------------------------------------------------------------------

Id Port Status Consistency Reason Active vlans

-- ------------ ------ ----------- ------ ---------------

10 Po10 down Not Applicable Consistency Check Not Performed -

11 Po11 down Not Applicable Consistency Check Not Performed -

14 Po14 down Not Applicable Consistency Check Not Performed -

20 Po20 down Not Applicable Consistency Check Not Performed -

21 Po21 down Not Applicable Consistency Check Not Performed -

Please check "show vpc consistency-parameters vpc <vpc-num>" for the consistency reason of down vpc and for type-2 consistency reasons for any vpc.

Test-LH-LAN-NEXUS-02#

I can ping across:

TEST-LH-LAN-NEXUS-01# ping 172.31.255.254 source-interface eth 1/8

PING 172.31.255.254 (172.31.255.254): 56 data bytes

64 bytes from 172.31.255.254: icmp_seq=0 ttl=254 time=32.194 ms

64 bytes from 172.31.255.254: icmp_seq=1 ttl=254 time=12.565 ms

64 bytes from 172.31.255.254: icmp_seq=2 ttl=254 time=6.27 ms

64 bytes from 172.31.255.254: icmp_seq=3 ttl=254 time=22.06 ms

64 bytes from 172.31.255.254: icmp_seq=4 ttl=254 time=41.795 ms

Eventually, loop issues kick in.

Does the above look OK and healthy? I'm not sure how to test this.

I'm used to stacked switches rather than vPC setups. I think it's just a big trunk?

Thanks


05-23-2019 03:05 AM

Where's the port-channel config? Is it set for vPC at the end?

Have you completed the global vPC config with the domain?

Your config looks OK. We do the heartbeat (keepalive) slightly differently: we use a routed L3 SVI in its own VRF and put the keepalive port into that VLAN as an access port, like below:

interface Vlan3000

description VPC Heartbeat

vrf member heartbeat

ip address x.x.x.x

interface Ethernet1/44

description VPC Heartbeat

switchport access vlan 3000

logging event port link-status

#################

VPC link example

interface port-channel2

description

switchport mode trunk

no lacp suspend-individual

switchport trunk allowed vlan 2,10-11,17-18,20,28,31,33-34,36-39,48,50,64-65,70,72,74,76,78,80,90-96,102-108,226,400,1226,2224,2512,3020,3025-3028

logging event port link-status

logging event port trunk-status

speed 10000

vpc 2


Global config on each switch:

vpc domain 200

role priority 200

system-priority 150

peer-keepalive destination x.x.x.x source x.x.x.x

delay restore 90

The other side would be the reverse, with priority 150.


05-23-2019 04:09 AM

Sorry, I missed some bits:

Nexus 1

vrf context management

vrf context vPC-Keepalive

no system urpf disable

hardware profile portmode 48X10G+breakout6x40g

vpc domain 990

  peer-switch

  role priority 8192

  system-priority 8192

  peer-keepalive destination 172.31.255.254 source 172.31.255.253 vrf vPC-Keepalive

  delay restore 60

  dual-active exclude interface-vlan 254

  peer-gateway

  delay restore interface-vlan 5

  ip arp synchronize

Nexus 2

vrf context management

vrf context vPC-Keepalive

vpc domain 990

  peer-switch

  role priority 16384

  system-priority 8192

  peer-keepalive destination 172.31.255.253 source 172.31.255.254 vrf vPC-Keepalive

  delay restore 60

  dual-active exclude interface-vlan 254

  peer-gateway

  delay restore interface-vlan 5

  ip arp synchronize

interface port-channel4096

description EMG-LH-LAN-NEXUS

switchport mode trunk

spanning-tree port type network

vpc peer-link

I will add the other port channels to the switch configs, but does the vPC number have to match the port-channel number, or is that just best practice?

Thanks
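A minimal sketch of one downstream vPC, assuming the Po10 listed in the `show vpc brief` output above and a hypothetical member port Eth1/10 on each Nexus. To my understanding, the `vpc` number must be identical on both peer switches for the same downstream port channel, but it does not have to equal the port-channel number; keeping the two the same is a common convention that makes the outputs easier to read. Lines starting with ! are annotations, not commands.

```text
! Apply the same config on Nexus 1 and Nexus 2
! Eth1/10 and the description are hypothetical, for illustration only
feature lacp
feature vpc

interface port-channel10
  description Downstream switch (hypothetical)
  switchport mode trunk
  ! vPC number must match on both peers; matching the Po number is convention
  vpc 10

interface Ethernet1/10
  switchport mode trunk
  channel-group 10 mode active
```

The downstream switch then bundles its two uplinks (one to each Nexus) into a single port channel. That is why a vPC pair looks like one big trunk from below, even though, unlike a stack, the two Nexus switches keep separate control planes joined by the peer link and the keepalive.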


05-23-2019 05:45 AM

These two outputs are obviously an issue. Did you try setting the channel group to LACP (channel-group 4096 mode active) on each side? Again, it could be GNS3, but that's the only difference I can see against my working 7Ks: LACP isn't used on the vPC peer-link members.

Peer status : peer link is down

Configuration consistency status : failed
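The LACP suggestion could look roughly like this on each switch, assuming the existing Eth1/9 member and Po4096 peer link from the original post. I believe NX-OS will not convert a member from static mode ("on") to LACP in place, so the sketch removes the channel-group first; that bounces the peer link, so treat it as a lab step. Whether it fixes the GNS3 behaviour is untested. Lines starting with ! are annotations.

```text
! Repeat on Nexus 1 and Nexus 2
feature lacp

interface Ethernet1/9
  ! Remove the static (mode on) membership first
  no channel-group 4096
  ! Re-add the port to the peer-link port channel using LACP
  channel-group 4096 mode active
  no shutdown
```

Afterwards, show port-channel summary should list Po4096 with LACP as its protocol and Eth1/9 flagged (P), and show vpc brief on Nexus 2 should report the peer adjacency as formed.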


05-23-2019 05:47 AM

Try this command too, as the consistency check is saying there are mismatches in the config somewhere:

show vpc consistency-parameters interface po4096

| Symptom | Possible Cause | Solution |
|---|---|---|
| Received a type 1 configuration element mismatch. | The vPC peer ports or membership ports do not have identical configurations. | Use the show vpc consistency-parameters interface command to determine where the configuration mismatch occurs. |
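One possible order of checks, run on both switches, using standard NX-OS show commands. Only the port-channel number comes from this thread; the sequence itself is a suggestion, not an official procedure. Lines starting with ! are annotations.

```text
! Keepalive (heartbeat) between the peers
show vpc peer-keepalive
! Overall vPC state, peer-link status and role
show vpc brief
! Global type 1 parameters, for example STP mode and other global STP settings
show vpc consistency-parameters global
! Parameters of the peer-link port channel
show vpc consistency-parameters interface port-channel 4096
! Port-channel membership and bundling protocol (static vs LACP)
show port-channel summary
! Spanning tree state and ports shut down by BPDU Guard
show spanning-tree summary
show interface status err-disabled
```

Comparing each output side by side between Nexus 1 and Nexus 2 is usually the quickest way to spot which setting differs.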


05-23-2019 12:21 PM


05-23-2019 03:17 AM

Hi!

Could you please send the full config on both switches?

I think you have a problem on the second switch!

/Mohammed


05-23-2019 06:07 AM
