12-16-2015 08:52 PM - last edited on 03-25-2019 04:36 PM by ciscomoderator

Hello Experts,

Please, I need your assistance to understand what "slot time" is and how it leads to the smallest Ethernet frame size of 64 bytes.

I have gone through multiple pages on Google about this, but I am still unclear on this point.

Best Regards!

12-17-2015 12:17 AM

Is my understanding below correct?

Based on the exponential backoff algorithm, if a collision occurs (on a CSMA/CD network), the retransmission is delayed by an amount of time derived from the slot time and the number of retransmission attempts.

Slot time: It is the time quantum, and after a collision, packets are retransmitted at integral multiples of the slot time (after the first collision: 1x ST; after the second collision: 2x ST; after the third collision: 3x ST ... 16x ST). For 10 and 100 Mbps, its value is 512 bit times, or 51.2 and 5.12 microseconds respectively.

Now, the question where I get stuck again: We know a collision can be detected within the first 512 bits of any frame (any collision detected after that is marked as a late collision). What will happen if we send a frame smaller than 64 bytes? Why is there a lower limit of 64 bytes on the frame size?

04-02-2016 09:15 AM

Dear Expert,

Please add your comments if you can.

Best Regards!

04-02-2016 01:47 PM

Hi Ashish,

It is best to turn to the standard for the definition of the slot time first. IEEE 802.3-2012 states in Section 4.2.3.2.3:

[The slot time] describes three important aspects of collision handling:

a) It is an upper bound on the acquisition time of the medium.

b) It is an upper bound on the length of a packet fragment generated by a collision.

c) It is the scheduling quantum for retransmission.

So what they say is that the slot time is used for three intertwined purposes:

- A station that has started transmitting data can assume that the medium is fully owned by it after the slot time passes without experiencing a collision (aspect 'a')

- If a collision occurs, the length of the collision fragment is at most "slot time"-worth of bits (aspect 'b')

- If a frame needs to be retransmitted after a collision occurs, the backoff time is always an integer multiple of the slot time (aspect 'c')

The length of the slot time is determined by the technical nature of the medium and the construction of the network card. It is 512 bit times for 10 and 100 Mbps Ethernet, and 4096 bit times for 1 Gbps Ethernet. Note that the slot time matters only for collision handling. In full duplex, the slot time has no meaning because there are no collisions in full duplex. In addition, 10 Gbps and faster Ethernet variants are defined only as full duplex technologies (they no longer have a half duplex mode of operation), so for these variants the slot time is not defined at all.
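To put these bit-time figures into wall-clock terms, here is a minimal Python sketch. The lookup table and the helper name `slot_time_us` are made up for illustration; only the bit-time values come from the text above.

```python
# Slot time in bit times per nominal data rate (values quoted in the post).
SLOT_BIT_TIMES = {
    10_000_000: 512,       # 10 Mbps
    100_000_000: 512,      # 100 Mbps
    1_000_000_000: 4096,   # 1 Gbps, half duplex only
}


def slot_time_us(bit_rate_bps: int) -> float:
    """Return the slot time in microseconds for a supported data rate."""
    bit_times = SLOT_BIT_TIMES[bit_rate_bps]
    # One bit time is 1 / bit_rate seconds; convert the total to microseconds.
    return bit_times / bit_rate_bps * 1_000_000


if __name__ == "__main__":
    for rate in SLOT_BIT_TIMES:
        print(f"{rate // 1_000_000} Mbps: {slot_time_us(rate):.3f} microseconds")
```

The same number of bit times takes ten times less wall-clock time at 100 Mbps than at 10 Mbps, which is why the slot time is normally quoted in bit times rather than in seconds.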

> Slot time: It is the time quantum, and after a collision, packets are retransmitted at integral multiples of the slot time (after the first collision: 1x ST; after the second collision: 2x ST; after the third collision: 3x ST ... 16x ST). For 10 and 100 Mbps, its value is 512 bit times, or 51.2 and 5.12 microseconds respectively.

This is not entirely true. Before the n-th retransmission attempt, the network card is required to wait for a random number of slot times r, chosen from the range 0 ≤ r < 2^k, where k = min(n, 10). This range grows exponentially with each retransmission until the exponent is capped at 10, so the resulting backoff times are:

For 1st retransmission: Either 0x or 1x slot time

For 2nd retransmission: Either 0x, or 1x, or 2x, or 3x slot time

For 3rd retransmission: Either 0x, or 1x, or 2x, or 3x, or 4x, or 5x, or 6x, or 7x slot time

For 4th retransmission: Either 0x, or 1x, or 2x, ..., or 14x, or 15x slot time

and so on

Remember that the waiting is random. If it were fixed, the colliding hosts would collide again, because each of them would wait for the same amount of time. The interval from which the random waiting time is chosen grows exponentially, but the network card always chooses a random value out of this interval for the particular retransmission attempt.

> Now, the question where I get stuck again: We know a collision can be detected within the first 512 bits of any frame (any collision detected after that is marked as a late collision). What will happen if we send a frame smaller than 64 bytes? Why is there a lower limit of 64 bytes on the frame size?

A valid frame can never be smaller than 64 bytes - that is the trick. The minimum frame size is defined so that transmitting even the shortest valid frame takes long enough for a possible collision to be reliably detected. It is the task of the sending station, in practice the network card or its driver, never to send a frame shorter than the minimum size of 64 bytes. If there is not enough data to send, the payload needs to be padded with arbitrary bytes so that the resulting frame is at least 64 bytes long.

Does this answer your question? Please feel welcome to ask further!

Best regards,

Peter

04-03-2016 01:10 AM

Hi Peter,

Many thanks for your support. It was very informative, and with it the definition and purpose of the slot time are clear to me.

However, could you please comment once more on the point below and on my understanding of it?

Point: The frame size can't be smaller than 64 bytes because the transmission time would then be less than 512 bit times. But the machine should ensure that 512 bit times are free of collisions in order to be sure that it can use the medium. In this case (sending less than 64 bytes), if the machine is not sending back-to-back frames so that a total of 512 bit times can be checked, it will not be able to check whether the slot time is collision-free.

Is this correct?

Best Regards!

04-04-2016 01:17 AM

Hi Ashish,

You are welcome.

The point you would like to validate - let me rephrase it for you.

A frame cannot be smaller than 64 bytes (512 bits) because, given the technical properties of the medium and the devices attached to it, it takes approximately 512 bit times for a signal to be sent from a station at one end of the medium, collide at the most distant point of the network with another signal, and propagate back to the original station. This means that a collision, if it occurs, cannot be reliably detected in less time. Therefore, the duration of any transmission must be at least 512 bit times, hence the requirement on the minimum frame size.
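The argument can be reduced to a single comparison: the sender can only notice a worst-case collision if it is still transmitting when the collision signal comes back. The sketch below assumes the 512 bit-time round trip quoted above; the function name `sender_still_transmitting` is hypothetical.

```python
SLOT_BIT_TIMES = 512  # worst-case round trip, in bit times, for 10/100 Mbps


def sender_still_transmitting(frame_bytes: int,
                              round_trip_bit_times: int = SLOT_BIT_TIMES) -> bool:
    """True if the frame lasts at least as long as the worst-case round trip.

    Sending one bit takes one bit time, so the transmission lasts
    frame_bytes * 8 bit times.
    """
    return frame_bytes * 8 >= round_trip_bit_times


if __name__ == "__main__":
    for size in (32, 63, 64, 1518):
        verdict = "detectable" if sender_still_transmitting(size) else "may be missed"
        print(f"{size:>4} bytes: worst-case collision {verdict}")
```

A 32-byte frame occupies the sender for only 256 bit times, so the sender may already have gone quiet by the time a collision at the far end of the medium reaches it.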

It is true that if a frame were shorter than 64 bytes, its sender could send it in its entirety and believe that it was sent without a collision, while some other station starts transmitting while the last bits of the frame are still travelling along the medium and causes a collision. The original sender would not know that this collision has occurred. This is closely related to what we call a late collision: a collision that occurs after the first 512 bit times of a transmission have passed. Ethernet tries to avoid late collisions at all costs, and they should not occur at all if the minimum frame size is properly maintained by all connected stations. Still, especially in networks with excessive numbers of repeaters or hubs, or with overly long media, the incurred delay can lead to late collisions, but this happens only when the Ethernet standards are violated. In properly installed Ethernet networks, late collisions should never occur.

Please note that 64 bytes is not a universal constant. It is a design value chosen for the particular kind of medium used in Ethernet, and if the maximum length of the medium or the transmission speed were different, the minimum frame size would also be different.

A sending station cannot ensure (or in other words, guarantee) that the first 512 bits of a frame being sent are collision-free. It's just the opposite - you can guarantee that if a collision occurs, it will occur within the first 512 bits of a frame, provided you have a well-behaved Ethernet implementation.

Sending a train of frames (back-to-back frames, as you have called it) would not change the situation. Each frame is handled individually, and you cannot substitute a single frame consisting of 64 bytes with, say, two frames consisting of 32 bytes.

Feel welcome to ask further!

Best regards,

Peter

04-01-2019 05:26 AM

Hello Peter

Have a doubt on this,

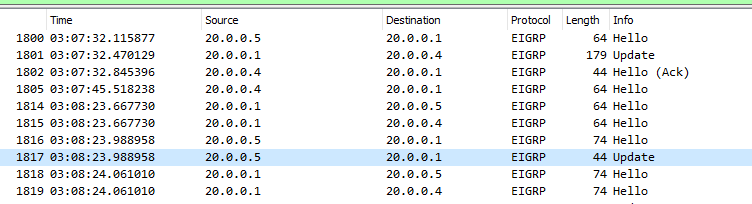

As you told Ethernet interface should not allow a frame with less then 64 bytes Size, but i could see that the EIGRP packets are transmitted with the size of 44 bytes , so how its possible ?

please find the attached photo,

04-01-2019 06:05 AM

Hello Sivam,

Thanks for joining!

> As you said, an Ethernet interface should not allow a frame smaller than 64 bytes, but I can see that the EIGRP packets are transmitted with a size of 44 bytes. How is that possible?

In the screenshot you have posted, Wireshark shows only the size of the IP packet itself. However, if you check the size of the frames that carry those IP packets, Wireshark will most likely tell you that the frame is 60 bytes long (the 4-byte FCS value is not passed to Wireshark; it stays in the NIC).

What really happens is that the NIC driver on the sending host always makes sure that the overall frame is at least 64 bytes long. If the payload of the outgoing frame is smaller than 46 bytes, the NIC driver will insert nonsense bytes (usually all zeros) right after the useful payload to "stuff" the frame just enough to make it 64 bytes long including all headers and FCS.
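The arithmetic behind the 60-byte and 64-byte figures can be sketched as follows. This is an illustration only: it assumes an untagged frame with a 14-byte header (no VLAN tag), uses 44 zero bytes as a stand-in for the packet from the screenshot, and the helper name `pad_payload` is made up.

```python
ETH_HEADER_LEN = 14    # destination MAC (6) + source MAC (6) + EtherType (2)
MIN_PAYLOAD_LEN = 46   # smallest payload that yields a 64-byte frame
FCS_LEN = 4            # frame check sequence appended by the sending NIC


def pad_payload(payload: bytes) -> bytes:
    """Pad a short payload with zero bytes up to the 46-byte minimum."""
    if len(payload) >= MIN_PAYLOAD_LEN:
        return payload
    return payload + bytes(MIN_PAYLOAD_LEN - len(payload))


if __name__ == "__main__":
    ip_packet = bytes(44)                 # stand-in for the 44-byte packet
    padded = pad_payload(ip_packet)
    captured_len = ETH_HEADER_LEN + len(padded)   # what a capture without FCS shows
    on_wire_len = captured_len + FCS_LEN          # what is actually transmitted
    print(f"padding bytes added: {len(padded) - len(ip_packet)}")
    print(f"frame length without FCS: {captured_len}")
    print(f"frame length on the wire: {on_wire_len}")
```

With a 44-byte packet only 2 bytes of padding are needed, which gives 60 bytes in a capture that omits the FCS and 64 bytes on the wire.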

Check it out and please let me know if the findings matched!

Best regards,

Peter

04-02-2019 08:46 AM - edited 04-02-2019 08:47 AM

Hello @Peter Paluch

Thanks for the reply.

I have another basic doubt:

If the NIC doesn't add an FCS at the end of the frame, how can the receiver at the other end tell that the frame is error-free?

As I understand it, the receiver runs the CRC over the received frame, which includes the sender's FCS bits too.

Is that right?

04-03-2019 01:35 AM

Hello Sivam,

> If the NIC doesn't add an FCS at the end of the frame, how can the receiver at the other end tell that the frame is error-free?

This must be a misunderstanding. A sending NIC always adds an FCS at the end of the frame when sending it out, and the receiving NIC always verifies the FCS. It is just that when Wireshark reads out received frames from the NIC, it reads them without the FCS because NICs keep the received FCS to themselves and do not pass it to the driver and the upper layers. The upper layers do not need the FCS because the NIC verifies the FCS itself. It may be possible to instruct the NIC to pass the FCS to the driver so that you can see it in Wireshark, but this is highly NIC-and-driver specific and may not be universally available.
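To make the send-side and receive-side roles concrete, here is a rough software sketch of what the NIC does in hardware. It relies on two assumptions that should be checked before depending on them: that Python's `zlib.crc32` computes the same CRC-32 as the Ethernet FCS, and that the four FCS bytes go on the wire least significant byte first. The function names are hypothetical.

```python
import struct
import zlib


def append_fcs(frame_without_fcs: bytes) -> bytes:
    """Sender side: compute the CRC-32 over the frame and append it as the FCS."""
    fcs = zlib.crc32(frame_without_fcs) & 0xFFFFFFFF
    return frame_without_fcs + struct.pack("<I", fcs)  # assumed byte order


def fcs_is_valid(frame_with_fcs: bytes) -> bool:
    """Receiver side: recompute the CRC-32 and compare it with the received FCS."""
    if len(frame_with_fcs) < 4:
        return False
    body, received_fcs = frame_with_fcs[:-4], frame_with_fcs[-4:]
    expected_fcs = struct.pack("<I", zlib.crc32(body) & 0xFFFFFFFF)
    return expected_fcs == received_fcs


if __name__ == "__main__":
    frame = append_fcs(bytes(60))            # 60-byte frame of zeros as a stand-in
    damaged = bytes([frame[0] ^ 0x01]) + frame[1:]   # flip one bit in transit
    print("intact frame accepted:", fcs_is_valid(frame))
    print("damaged frame accepted:", fcs_is_valid(damaged))
```

A receiving NIC that finds a mismatch drops the frame, which is why the upper layers and Wireshark normally never need to see the FCS.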

You may be interested in reading this thread regarding seeing the FCS in Wireshark:

https://ask.wireshark.org/question/2876/how-to-see-the-fcs-in-ethernet-frames/

Best regards,

Peter

04-03-2019 10:53 AM

Thanks, @Peter Paluch, for the clarification.
