Boot from iSCSI w/MPIO & iSCSI Initators in Windows

10-11-2013 01:41 AM

We recently started a new FlexPod deployment. In this environment we boot from iSCSI. Booting from iSCSI works properly, even with two vNICs and iSCSI overlaying vNICs. But I notice random behavior with the iSCSI Initiator in Windows which I can't get rid of. Allow me to explain our scenario.

Hardware:

- Cisco UCS 6248UP Fabric Interconnects (firmware 2.1(3a))

- Cisco UCS 5108 Blade Server Chassis

- Cisco UCS 2208XP I/O Modules

- Cisco UCS B200 M3 Blade Servers

- VIC 1240 with Port Expander

- NetApp FAS2240-2 Storage Controllers

- 2x10Gb Ethernet interfaces

NetApp:

The NetApp Storage Controllers are configured in 7-mode. So essentially we have two aggregates, which can be seen as two iSCSI targets. Our LUNs (boot and additional) are divided between the two aggregates. For example, the boot LUN 0 and LUN 1 are on the first aggregate, and LUN 2 is on the second aggregate.

Service Profile:

I have created several Service Profiles (from an initial template). Each profile has several NICs, including the following:

- iSCSI_vEth0 (with an iSCSI overlaying vNIC)

- iSCSI_vEth1 (with an iSCSI overlaying vNIC)

- vEth0

- vEth1

- ...

These vNICs are statically placed in vCon1, with the iSCSI vNICs at the top. We don't want to use Fabric Failover; instead we want to use these two NICs in combination with MPIO (Multi-Path I/O).

Now, when a server boots, it successfully connects to LUN 0 with both overlaying vNICs. When Windows Server 2012 (R2) is installed, it only has a connection to the aggregate where LUN 0 is hosted. When you start the iSCSI Initiator you should see two connections, which you can't edit or change because they are created by the iSCSI Boot ROM. Now, here comes the problem.

When the OS is installed, some servers already have two iSCSI connections: two vNICs to one iSCSI target. You only have to enable MPIO and you are good to go (for that aggregate). But randomly, some servers have only one iSCSI connection, and you cannot add the second connection manually because Windows reports an error that it is already in use or hidden in the OS. The result is that we cannot connect the iSCSI targets properly. I have tried everything I can think of, but we can't get rid of this issue. Something is not right.

Any suggestions? Has anybody else experienced this as well?

10-15-2013 08:58 AM

We do not have a Windows iSCSI boot configuration published and validated for the FlexPod with Windows platform. All published and validated FlexPod with Windows hosts boot using FC or FCoE, and all of those topologies include FC or FCoE connectivity to the storage array in addition to 10GbE Ethernet.

A single path to the LUN should be used when installing Windows. This is due to the lack of MPIO capability in the Windows installer. After Windows is installed, the MPIO feature can be added and configured to claim the LUN. Only then should redundant LUN paths be added.

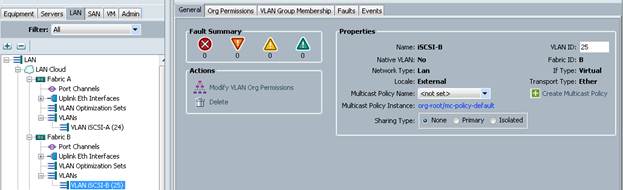

Microsoft does not support manually managing the iSCSI connections to the boot LUN. They need to be configured and managed through the service profile and LUN masking on the storage array. During install, you should have 3 VLANs configured. I typically use the VLANs iSCSI-A, iSCSI-B, and iSCSI-Null. iSCSI-A is for the initiator that connects through fabric A, iSCSI-B is for the initiator that connects through fabric B, and iSCSI-Null is a VLAN that goes nowhere.

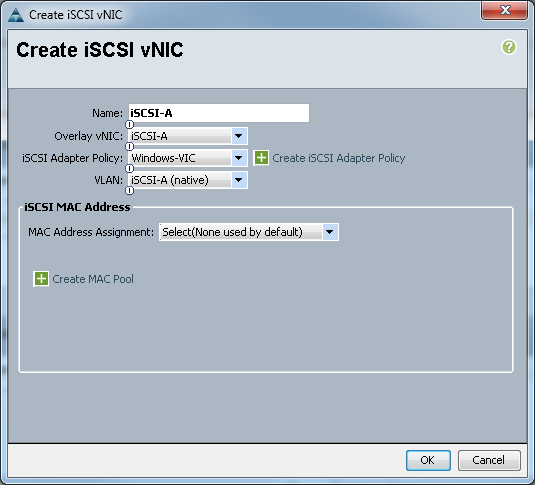

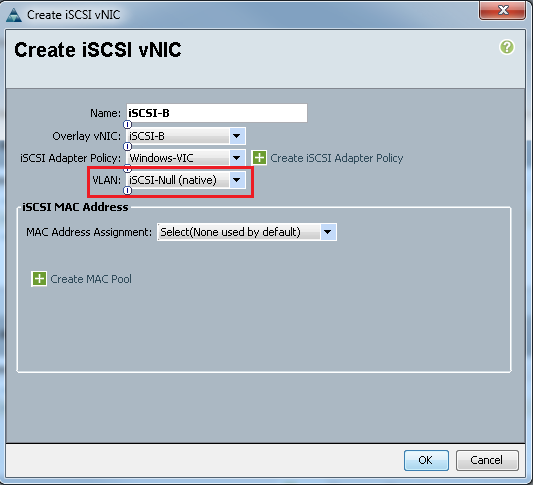

During Windows install, the first iSCSI initiator uses the VLAN iSCSI-A and the second iSCSI initiator uses VLAN iSCSI-Null. This method enforces a single path to the LUN, because you don’t have zoning to help you with path management.
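The VLAN trick above can be sketched as a small model. This is only an illustration of the idea, not a UCS API: the initiator names and the helper functions are hypothetical, and only the three VLAN names come from the post.

```python
# Hypothetical model of the VLAN plan; none of these names are UCS Manager objects.
ROUTED_VLANS = {"iSCSI-A", "iSCSI-B"}  # VLANs that actually reach the storage array
NULL_VLAN = "iSCSI-Null"               # VLAN that goes nowhere


def active_paths(vlan_by_initiator):
    """Return the initiators whose assigned VLAN can reach the target."""
    return sorted(
        name for name, vlan in vlan_by_initiator.items() if vlan in ROUTED_VLANS
    )


def safe_for_windows_setup(vlan_by_initiator):
    """Windows setup has no MPIO, so exactly one path should be reachable."""
    return len(active_paths(vlan_by_initiator)) == 1


# During installation: the second initiator is parked on the null VLAN.
install_phase = {"initiator-1": "iSCSI-A", "initiator-2": NULL_VLAN}

# After MPIO has claimed the LUN: the second initiator moves to iSCSI-B.
post_mpio_phase = {"initiator-1": "iSCSI-A", "initiator-2": "iSCSI-B"}

print(active_paths(install_phase), safe_for_windows_setup(install_phase))
print(active_paths(post_mpio_phase), safe_for_windows_setup(post_mpio_phase))
```

The point of the null VLAN is that the second iSCSI vNIC stays defined in the service profile the whole time; only its VLAN changes once MPIO is in place.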

Create iSCSI vNIC iSCSI-A

Create iSCSI vNIC iSCSI-B

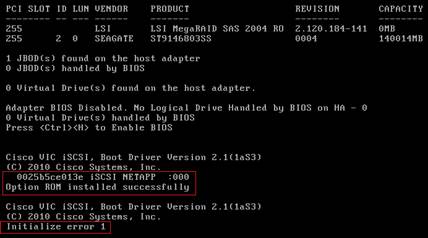

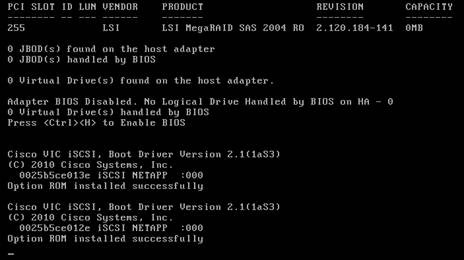

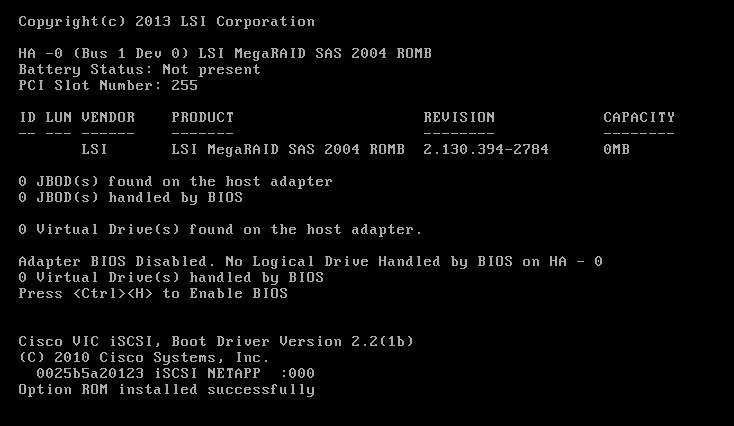

The following screen shows successful path initialization for the first iSCSI initiator. The second initiator shows an initialization error because the path through this initiator is intentionally blocked using VLAN iSCSI-Null. This configuration creates a single path to the LUN for the Windows installation.

After Windows is installed, configure the MPIO VID/PID to claim the LUN paths. This can be done by manually entering the VID/PID or by using the Discover Multi-Paths tab. Select "Add support for iSCSI devices" and click Add. The system will need to reboot.

At this point you can shut down Windows and change the second iSCSI initiator to use the VLAN iSCSI-B instead of the VLAN iSCSI-Null. After making this change and booting Windows, you will see that the VIC option ROM is successfully installed for both iSCSI initiators.
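If you enter the VID/PID manually, the spacing matters. As far as I know, the MPIO hardware ID is the SCSI vendor ID padded to 8 characters followed by the product ID padded to 16 characters. The sketch below builds that string; the `NETAPP` / `LUN` values are an assumption about what a 7-mode LUN reports, so check them against what the Discover Multi-Paths tab shows on your system. The function name is hypothetical.

```python
def mpio_hardware_id(vendor: str, product: str) -> str:
    """Build a 24-character hardware ID: vendor (8) + product (16), space-padded."""
    if len(vendor) > 8:
        raise ValueError("vendor ID must be at most 8 characters")
    if len(product) > 16:
        raise ValueError("product ID must be at most 16 characters")
    return vendor.ljust(8) + product.ljust(16)


# Assumed values for a NetApp 7-mode LUN; verify on your own array.
netapp_id = mpio_hardware_id("NETAPP", "LUN")

# repr() makes the trailing spaces visible.
print(repr(netapp_id), len(netapp_id))
```

The Discover Multi-Paths tab avoids this padding question entirely, which is one reason to prefer it over typing the string by hand.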

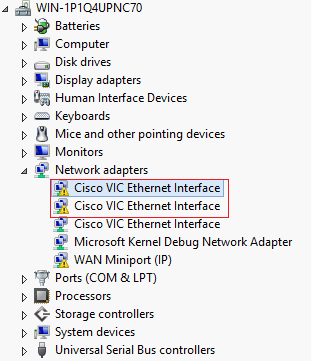

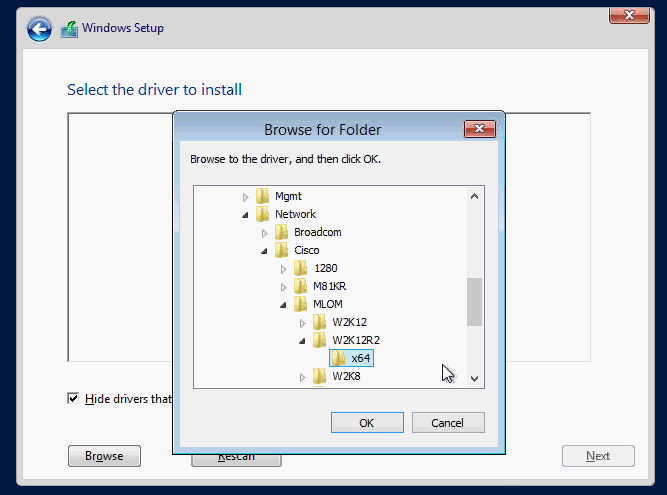

Use Device Manager to install the VIC driver for the remaining vNICs (not required for Windows Server 2012 R2).

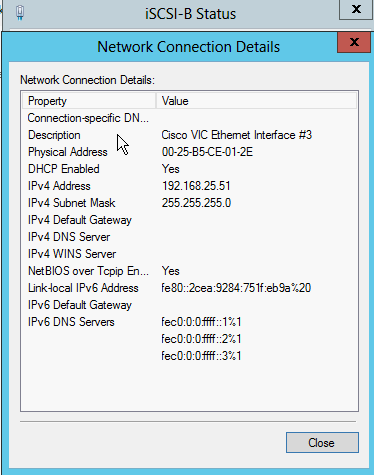

Verify that the iSCSI-B NIC has the IP address assigned by the service profile.

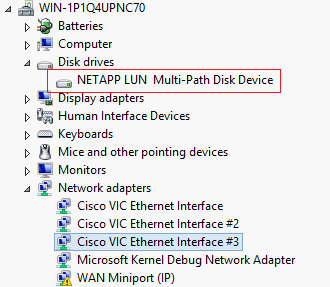

Verify that the boot LUN disk drive device is a Multi Path device.

The information I have provided here assumes that you have the LUN masking configured correctly on the storage array and that the initiators are properly configured to connect to the target and access the LUNs.

Hope this helps,

Mike Mankovsky

10-15-2013 12:01 PM

Hi Mike,

This is exactly how I have configured it. This method works. Windows will inherit the settings defined in the Service Profile. But occasionally I had an issue where Windows did not get these settings right (e.g. only Fabric A and not B), and the missing path didn't show up as a connection in the iSCSI Initiator. But once you try to manually add the connection, you get a message that it is already in use.

I have now removed all Service Profiles, re-created them from scratch, and haven't had any issue since. Fingers crossed.

Thanks,

Boudewijn

11-01-2013 01:51 PM

Today I opened a case with Cisco. Together with a Cisco engineer I have looked into this problem. I have configured iSCSI correctly, and I was able to reproduce the problem. As far as I can tell, this issue only occurs when you manually (statically) configure the IP addresses of the iSCSI vNICs that are used by the iSCSI overlaying vNICs. If you use an iSCSI IP pool from UCS, the problem does not seem to occur, although I need to verify this over a longer period.

11-15-2013 10:21 AM

Update. After testing we have noticed that using an iSCSI-IP-Pool within UCS makes it much more stable. But, the problem still occurs. Cisco TAC Support was able to reproduce our issue and is currently investigating it.

01-20-2014 05:52 AM

Hello Michael,

Regarding the NIC and WAN Miniport showing a warning with "!", this is documented as bug ID CSCuc20216 (link: https://tools.cisco.com/bugsearch/bug/CSCuc20216/?referring_site=ss). After following the workaround stated in the bug report, I was able to solve this issue. There was not even a need to install the NIC driver after the OS installation.

Thanks

Raj

02-05-2014 09:49 AM

Hi Michael

I'm in the process of setting up the exact same environment (W2k12R2 iSCSI Boot and exact same kit list) and was wondering if you could shed some light on the actual Windows installation phase. I'm able to establish the initial iSCSI initialization successfully (using 1 path for now) as you can see from below:

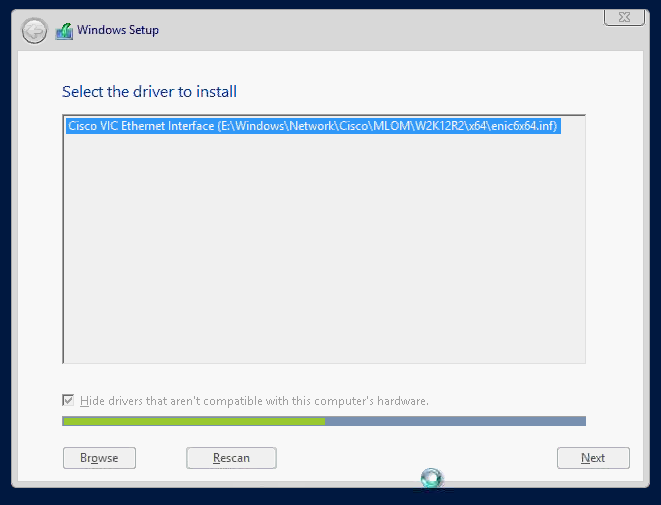

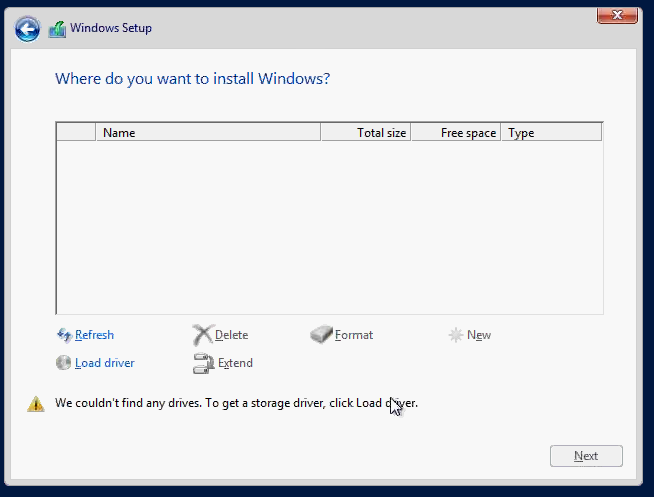

However, I'm unable to view the LUN within the Windows installation phase. I've loaded the Cisco Ethernet drivers from /Network/MLOM/enic6x64, as you can see, for my VIC 1240 with Port Expander.

Although I've installed the enic6x64 driver, the next screen shown below suggests that Windows is still unable to view the NetApp LUN. Am I installing the wrong drivers for iSCSI boot, or is there something fundamentally missing from this setup? I've also noticed from your screenshots that you had created an iSCSI adapter policy (windows-vic). How different is this from the default policy?

Kind Regards,

Osama Masfary

02-05-2014 06:09 PM

Hi Osama,

I am familiar with that issue.

You have to remove the driver CD-ROM (e.g. the ISO) and re-insert the Windows setup DVD. Then hit 'Refresh'. Only then will your disks be recognized. If that doesn't work either and you use NetApp, make sure you have configured a LUN ID in your iGroup settings. But I think the DVD and refresh will probably work.

02-06-2014 04:35 AM

Hi Boudewijn,

Thanks for the response. I've tried removing the drivers ISO, re-inserting the Windows Setup DVD, and then hitting "Refresh", but it still hasn't displayed the LUNs. The LUN ID in the iGroup is set to 0. Could you please confirm the UCS B-Series drivers package that you used for "Load Driver" within the Windows installation? According to NetApp's IMT, the latest supported setup should consist of the following:

Cisco UCS 1240 Virtual Interface Card and Port Expander CNA

Driver: 2.3.0.4 (NIC) (Async) <<< this refers to 2.1(3) drivers package / the 2.1(3a) package is 2.3.0.10

Firmware: 2.1(3)

Protocol: iSCSI

Spec: Dual-port 10gE PCI-Express CNA, Cisco UCS System Firmware 2.1(3)
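A side note for anyone comparing these driver versions in a script: 2.3.0.10 is newer than 2.3.0.4, but a plain string comparison gets that backwards. A minimal sketch, assuming the versions are purely numeric dotted strings (the helper name is hypothetical):

```python
def parse_version(text):
    """Turn a dotted version such as '2.3.0.10' into a tuple of integers."""
    return tuple(int(part) for part in text.split("."))


imt_listed = parse_version("2.3.0.4")      # driver listed with the 2.1(3) package
bundle_2_1_3a = parse_version("2.3.0.10")  # driver in the 2.1(3a) package

# Numeric comparison orders the versions correctly.
print(bundle_2_1_3a > imt_listed)

# String comparison does not, because "1" sorts before "4".
print("2.3.0.10" > "2.3.0.4")
```

Whether the newer driver is acceptable for a given firmware is a support-matrix question; the code only settles which version number is higher.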

Kind Regards,

Osama Masfary

08-19-2014 12:21 PM

Hi Osama, did you resolve this install issue?

I'm having the same problem (it must be a driver issue, as CentOS 6.5 installs fine?)

12-10-2014 11:21 AM

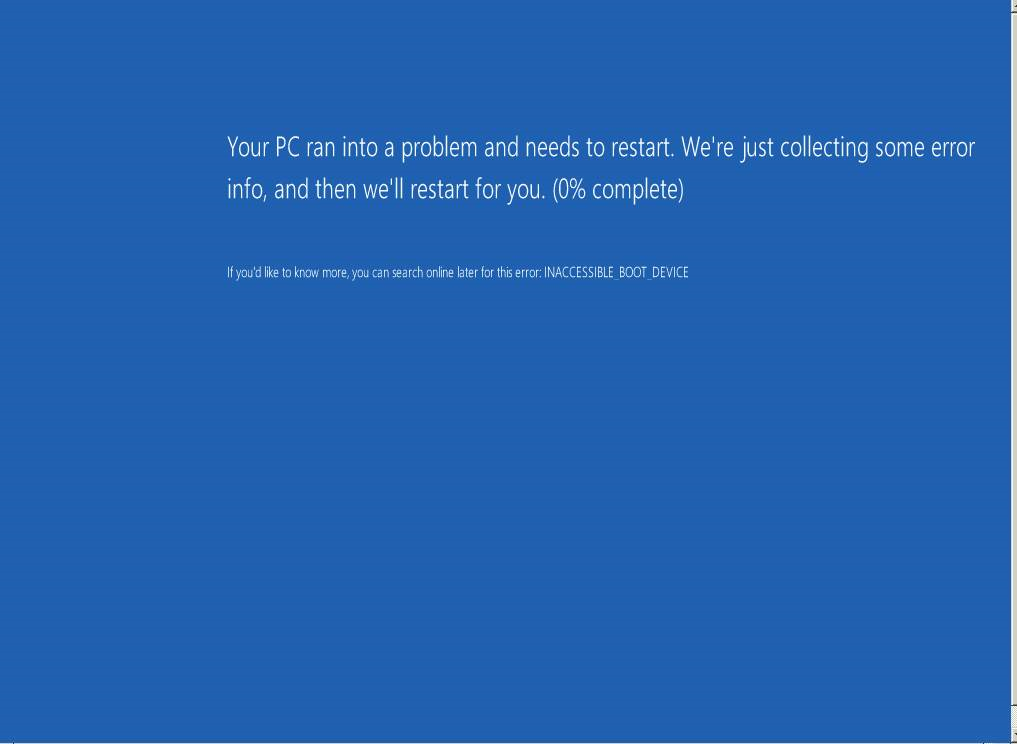

I am having a strange problem when installing to a Nimble SAN instead of NetApp. The installation succeeds (after loading the Cisco network driver during the boot process), but when the server reboots, Windows starts to load, then stops, and I get the error INACCESSIBLE_BOOT_DEVICE. When I boot from the installation media and try to repair, it doesn't even recognize the boot device. I can get this working with ESXi, but not with Windows.

12-17-2014 03:02 PM

Hi Michael

Have you managed to resolve this issue you're having? I'm having a similar problem. I start the build of a new 2012 R2 Datacenter image to the blade via an MDT task sequence. The task of partitioning and deploying the WIM file to the disk completes successfully.

On the first reboot after applying the image, the Windows boot loader starts and runs for about 2 minutes, and I then get exactly the same error message shown in your screenshot above, i.e. "inaccessible_boot_device".

Let me know if you've had any further progress in resolving the problem.

12-17-2014 03:26 PM

Hi Gareth,

If you are installing on a SAN LUN, you need to make sure that ONLY one path is active during the installation. Once the OS installation is complete, enable MPIO in Windows and then bring up the second SAN path. This is a Windows requirement for a successful install.

Refer to the Installing on a SAN LUN section of UCS install:

Per the install:

" Configure a LUN or RAID volume on your SAN, then connect to the SAN and verify that one (and only one) path exists from the SAN HBA to the LUN."

Hope this helps!

Thanks

12-17-2014 03:46 PM

Hi MTurano

I have confirmed that only one path exists to the SAN. We use NetApp storage connected to the FI via an Appliance Port.

The blade is a B200-M2 with an M81KR. I have actually set the B-side adapter to DHCP and can confirm that when the server boots, the BIOS does not connect on the second path, as I don't have an IP assigned to the B side.

I can confirm that when I do the installation manually, i.e. mount the Server 2012 R2 ISO and load the driver from the "ucs-bxxx-drivers.2.2.3.iso" ISO, I can see the volume, and the manual install works. We have an automated deployment, though, to get a consistent setup, so we boot with an MDT ISO which includes the driver for the VIC/Palo card. The task sequence then cleans and partitions the disk and applies a .wim file to it, which completes successfully.

When the blade reboots after the .wim is applied, it starts the Windows boot loader and then displays the blue screen. I have also attempted using a different .wim file that I've injected the drivers into, and I still get the same error.

If you have any other suggestions let me know.

Kind regards

Gareth

12-17-2014 07:53 PM

If your image has the updates from KB2919355 slipstreamed in, that might be your problem. It appears to cause similar behavior, in that things install fine and then you get the blue screen.

http://support.microsoft.com/kb/2919355

Also see the referenced KB2966870.

Thanks
