
%VOICE_IEC-3-GW: SIP: Internal Error (PRACK wait timeout) & (1xx wait timeout)


07-24-2018 04:00 AM - edited 03-17-2019 01:14 PM

Hi folks,

I have a situation where the logs are being spammed with two particular errors:

Jul 24 17:57:57.290 PHT: %VOICE_IEC-3-GW: SIP: Internal Error (PRACK wait timeout): IEC=1.1.129.7.62.0 on callID 18043 GUID=D21758D48E5E11E8BFA4811988141A24

Jul 24 17:57:59.214 PHT: %VOICE_IEC-3-GW: SIP: Internal Error (1xx wait timeout): IEC=1.1.129.7.59.0 on callID 18050 GUID=E0A0CB1E8E5E11E8B755D64093392022
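For anyone who wants to tally these messages from a saved log, here is a minimal Python sketch. It assumes the syslog layout matches the two entries above and that the six dotted IEC values are, in order, version, entity, category, subsystem, error and diagnostic. The function names are made up for illustration, and the numeric codes are deliberately left undecoded.

```python
import re
from collections import Counter

# Assumed order of the six dotted IEC values (not decoded any further here).
IEC_FIELDS = ("version", "entity", "category", "subsystem", "error", "diagnostic")

# Matches the %VOICE_IEC-3-GW layout seen in this thread; GUID is optional.
IEC_LINE = re.compile(
    r"%VOICE_IEC-3-GW: (?P<source>\w+): Internal Error \((?P<reason>[^)]+)\): "
    r"IEC=(?P<iec>\d+(?:\.\d+){5}) on callID (?P<call_id>\d+)"
    r"(?: GUID=(?P<guid>[0-9A-Fa-f]+))?"
)


def parse_iec_events(text):
    """Return one dict per IEC syslog entry found anywhere in `text`."""
    events = []
    for match in IEC_LINE.finditer(text):
        event = match.groupdict()
        values = [int(v) for v in event["iec"].split(".")]
        event["fields"] = dict(zip(IEC_FIELDS, values))
        events.append(event)
    return events


def summarize(events):
    """Count events per (reason, IEC) pair."""
    return Counter((e["reason"], e["iec"]) for e in events)


# The two entries quoted in the post, pasted on a single line as in the log dump.
SAMPLE = (
    "Jul 24 17:57:57.290 PHT: %VOICE_IEC-3-GW: SIP: Internal Error "
    "(PRACK wait timeout): IEC=1.1.129.7.62.0 on callID 18043 "
    "GUID=D21758D48E5E11E8BFA4811988141A24 "
    "Jul 24 17:57:59.214 PHT: %VOICE_IEC-3-GW: SIP: Internal Error "
    "(1xx wait timeout): IEC=1.1.129.7.59.0 on callID 18050 "
    "GUID=E0A0CB1E8E5E11E8B755D64093392022"
)

if __name__ == "__main__":
    for (reason, iec), count in summarize(parse_iec_events(SAMPLE)).most_common():
        print(f"{count:5d}  {reason}  IEC={iec}")
```

Grouping by reason and IEC makes it easier to see whether the two timeouts rise and fall together, which would point at a shared cause rather than two separate faults.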

Also this:

SIP: (29214) Group (a= group line) attribute, level 65535 instance 1 not found.

The errors are seen heavily on both MGW & CUBE - but I can see calls arriving at CUBE when debugging.

I'm unclear whether these error messages result from connectivity issues between the CUBE <> Sonus, connectivity issues between the CUBE <> MGW, or something else.

I don't believe the problem is between MGW <> SIP upstream provider, as we have TONS of other calls coming and going without this issue.

Our CUBE routers are 2911s running:

Cisco IOS Software, C2900 Software (C2900-UNIVERSALK9-M), Version 15.3(3)M5, RELEASE SOFTWARE (fc3) on one

Cisco IOS Software, C2900 Software (C2900-UNIVERSALK9-M), Version 15.4(3)M1, RELEASE SOFTWARE (fc1) on the other.

Both are problematic.

We've been having intermittent issues with calls going in or out of this router (well, actually it's happening to two routers).

I couldn't, for the life of me, find any reference to other people's issues with this - hoping someone can shed light and point us in a general direction.

We have "resolved" the issue by reconfiguring the physical topology - so the call failure issue seems to be resolved but we still see these logs and I'm not feeling confident.

Background - when this router was originally set up, they used a single Gig interface for both the inside and outside of the SIP trunk. They also tried to set up a port channel to connect it to two different ports on a stack switch. They couldn't get this to work, so they fell back to a single port - but they didn't remove the port channel config.

Both inside and outside were still on a single port (not an issue, I believe) with subinterfaces in 2 different VLANs (one for "inside" and one for "outside").

The "reconfigure" was to get two separate ports patched to the switch and remove any sign of port channel config.

I don't have access to the switch to further investigate (not our switch and we don't manage it) - but a bounce of it was out of the question due to services impacted. As such, I bounced both my routers and this "solved" the issue for about 5 minutes before going back to total failure.

I suspect the switch hit some bug after long-term use of the bizarre port channel config left behind - but will never know.

I was hoping someone knows of scenarios where these errors generally appear.

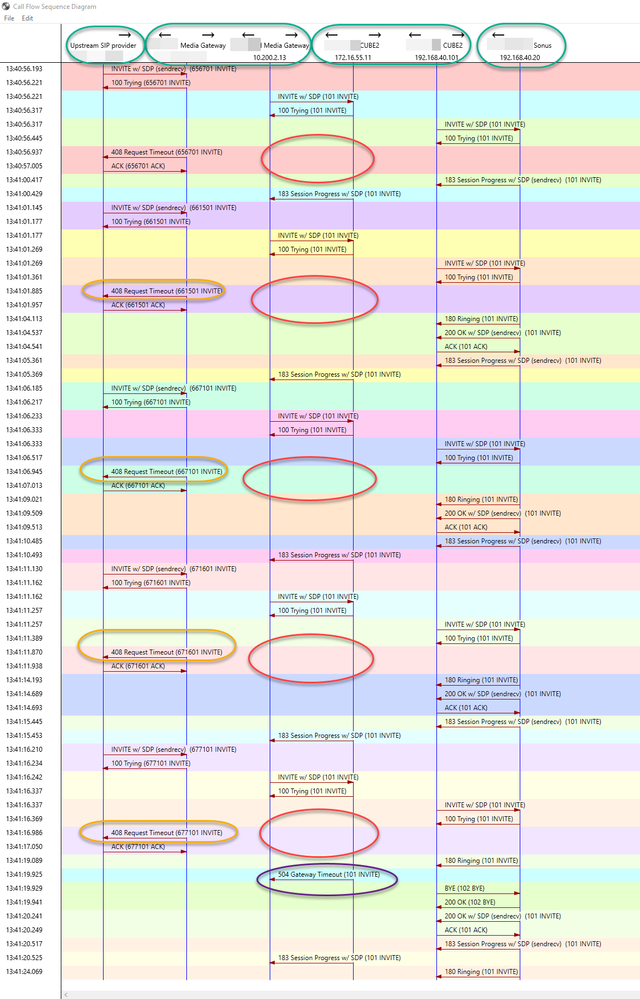

This is the TranslatorX diagram of the end-to-end SIP debug. It makes me suspect a connectivity issue between the Media Gateway and the CUBE (CUBE2 in the diagram), as MGW is sending a 408 but I see nothing coming back from CUBE2 after it gets the INVITE from Sonus. Note that none of the debugs I had were from the upstream provider or Sonus - I only have access to MGW, CUBE1 and CUBE2.

End system behind Sonus is Lync.

However, when using a different SIP provider that goes direct to the Sonus, no CUBE involved, the issue is not present.

Really hoping someone can give me another avenue of thought on this issue.

- Labels: Other IP Telephony






07-24-2018 05:27 AM

Hello

As you suspect, this may be related to a connectivity issue. The challenge is capturing logs to investigate it further.

If you can reproduce this easily, here is what you can do.

During off hours, configure the following:

service sequence-numbers

service timestamps debug datetime localtime msec

logging buffered 10000000 debug

no logging console

no logging monitor

default logging rate-limit

default logging queue-limit

Then enable the following debugs:

debug ccsip info

debug ccsip transport

debug ccsip messages

Reproduce the issue, then capture the logs:

terminal length 0

show logging

Attach the logs here and include the call details.
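Once the buffer has been saved to a file, a rough Python sketch like the one below can flag INVITEs that never got any response, which is the pattern both timeout errors suggest. It assumes each SIP message in the capture is introduced by a line reading `Sent:` or `Received:`, followed by the start line and headers up to a blank line. The function names and the sample text (documentation addresses, invented Call-IDs) are made up for illustration and are not taken from the routers in this thread.

```python
import re

DIRECTION = re.compile(r"^(Sent|Received):$")
STATUS = re.compile(r"^SIP/2\.0 (\d{3})")
REQUEST = re.compile(r"^([A-Z]+) \S+ SIP/2\.0")
CALL_ID = re.compile(r"^Call-ID:\s*(\S+)", re.IGNORECASE)


def sip_messages(log_text):
    """Yield (direction, method_or_status, call_id) for each SIP message."""
    lines = [line.strip() for line in log_text.splitlines()]
    i = 0
    while i < len(lines):
        marker = DIRECTION.match(lines[i])
        if not marker or i + 1 >= len(lines):
            i += 1
            continue
        start = lines[i + 1]
        status, request = STATUS.match(start), REQUEST.match(start)
        token = status.group(1) if status else request.group(1) if request else None
        call_id = None
        j = i + 2
        while j < len(lines) and lines[j]:  # headers end at the first blank line
            found = CALL_ID.match(lines[j])
            if found:
                call_id = found.group(1)
            j += 1
        if token and call_id:
            yield marker.group(1), token, call_id
        i = j


def invites_without_reply(log_text):
    """Return Call-IDs where an INVITE was sent but no response was received."""
    sent, answered = set(), set()
    for direction, token, call_id in sip_messages(log_text):
        if direction == "Sent" and token == "INVITE":
            sent.add(call_id)
        elif direction == "Received" and token.isdigit():
            answered.add(call_id)
    return sorted(sent - answered)


# Invented sample capture, for illustration only.
SAMPLE_DEBUG = """\
Sent:
INVITE sip:1000@192.0.2.10:5060 SIP/2.0
Call-ID: call-a@192.0.2.1
CSeq: 101 INVITE

Received:
SIP/2.0 100 Trying
Call-ID: call-a@192.0.2.1
CSeq: 101 INVITE

Sent:
INVITE sip:2000@192.0.2.10:5060 SIP/2.0
Call-ID: call-b@192.0.2.1
CSeq: 101 INVITE
"""

if __name__ == "__main__":
    print(invites_without_reply(SAMPLE_DEBUG))
```

A Call-ID that shows up here on one device but has a matching received INVITE on the next hop suggests the responses, not the requests, are being lost on the way back.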


07-24-2018 09:29 AM

Hi, thanks for your reply.

Currently, the issue is vapour and the damned thing is working.

There's zero load on things right now due to some emergency workarounds put in place (good for testing) - but the issue is not present for the numbers that I've left behind. They were a problem previously. Gah!

When the problem returns, I'll do this...and throw in a reload in 10 for good measure. I can very quickly redirect traffic from the router if I lose connection during debugs. Will post here if/when I get any.

If the issue stays resolved and never comes back - I'll keep this thread updated.


07-24-2018 10:27 AM

I've seen the 101 payload type the telco is sending you in their setup message cause issues. If you can get them to send you 100 instead, that might help. Adding voice-class sip asymmetric payload dtmf to your dial peer might also help.
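To check whether the two call legs really disagree on this, a small Python sketch can pull the DTMF payload type out of each SDP body. It assumes the "101 payload" above refers to the RTP payload type advertised for telephone-event in an `a=rtpmap` line. The function names and both SDP samples are invented for illustration.

```python
import re

# a=rtpmap:<payload type> telephone-event/<clock rate>
RTPMAP_DTMF = re.compile(
    r"^a=rtpmap:(\d+) telephone-event/\d+", re.MULTILINE | re.IGNORECASE
)


def dtmf_payload_types(sdp):
    """Return the payload types an SDP body maps to telephone-event."""
    return [int(pt) for pt in RTPMAP_DTMF.findall(sdp)]


def dtmf_payload_mismatch(sdp_a, sdp_b):
    """True when the two SDP bodies advertise different DTMF payload types."""
    return set(dtmf_payload_types(sdp_a)) != set(dtmf_payload_types(sdp_b))


# Invented SDP bodies: one leg offers 101, the other 100.
PROVIDER_SDP = (
    "v=0\r\n"
    "m=audio 16384 RTP/AVP 0 101\r\n"
    "a=rtpmap:0 PCMU/8000\r\n"
    "a=rtpmap:101 telephone-event/8000\r\n"
    "a=fmtp:101 0-15\r\n"
)
INSIDE_SDP = (
    "v=0\r\n"
    "m=audio 20000 RTP/AVP 0 100\r\n"
    "a=rtpmap:0 PCMU/8000\r\n"
    "a=rtpmap:100 telephone-event/8000\r\n"
    "a=fmtp:100 0-15\r\n"
)

if __name__ == "__main__":
    print(dtmf_payload_types(PROVIDER_SDP), dtmf_payload_types(INSIDE_SDP))
    print(dtmf_payload_mismatch(PROVIDER_SDP, INSIDE_SDP))
```

Running this against the SDP from the inbound and outbound INVITEs of one failing call shows quickly whether the payload type theory is worth pursuing.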


07-30-2018 12:29 AM

Thank you all for your replies.

I still haven't found the actual cause of this issue - but I'm starting to believe it was hardware on the site (firewall or switch).

The routers have been relocated to a new office running on different equipment (different internet connection, firewall, and routing/switching infrastructure) and now, apart from a terrible internet link (link is smaller and through a different provider), the bizarre connection issues we've been having have vapourised. Kinda got "lucky" that things fell apart < 1 week from an office relocation.

If things rear their ugly heads again, I'll run some of the suggestions and post back the results.


07-30-2018 12:30 AM

Oh, and one other thing... a Lync box in a load balanced cluster turned out to have a bung certificate on it which was causing untold TLS issues that seemed to be partially to blame. Hard to tell as all that infrastructure sits outside of our control.


12-03-2021 09:05 AM

Did you fix the issue?

I have the same problem too.


12-05-2021 08:12 PM

We don't know why it was resolved - but strongly believe it was hardware on the site (firewall or switch).

The routers were relocated to a new office running on different equipment (different internet connection, firewall, and routing/switching infrastructure - but with the same CUBEs) and now, apart from a terrible internet link (link is smaller and through a different provider), the bizarre connection issues we've been having have vapourised. Kinda got "lucky" that things fell apart < 1 week from an office relocation.

So yeah, things to look at are switch, firewall, network connectivity.
