Cisco 3850 16.12.3a POE issues

09-01-2020 08:10 AM

Good morning,

Is anyone else running 16.12.3a IOS on 3850 switches?

Here is an issue we are facing. I cannot find any documentation of a bug in this code, and it is still the recommended release to move to...

Issue: POE stops functioning on random ports but works on others. POE will not work for Avaya phones, cameras, Cisco phones, or Cisco APs (3602, 3702, 3802).

Workaround: Reboot the switch, downgrade, or find a port that will provide POE.

We upgraded and tested on several stacks for a month or two with no issues before deploying to approximately 30 stacks of 3850s. After the mass deployment we began to see POE issues on switches, which seem to be triggered when removing or adding a POE device. Once the condition has been triggered, it will not go away until the switch is rebooted or downgraded. Logs will state "Controller port error, interface x/x/x, power given, but machine power good wait timer timed out."
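For anyone who wants to check whether a stack has already hit this condition, here is a minimal sketch that counts the error per interface in collected syslog lines. It assumes the message contains the wording quoted above; the exact text, capitalization, and interface naming on a real switch may differ, and the sample lines below are made up for illustration.

```python
import re
from collections import Counter

# Pattern based on the wording quoted in the post. The real syslog text
# (mnemonic, capitalization, interface naming) may differ: treat as an assumption.
POE_ERROR = re.compile(
    r"Controller port error, interface\s+(?P<intf>[A-Za-z]*\d+/\d+/\d+)",
    re.IGNORECASE,
)


def count_poe_errors(lines):
    """Return a Counter mapping interface name -> number of matching log lines."""
    hits = Counter()
    for line in lines:
        match = POE_ERROR.search(line)
        if match:
            hits[match.group("intf")] += 1
    return hits


# Hypothetical sample lines, invented for illustration only.
sample_log = [
    "Controller port error, Interface Gi1/0/12: Power given, but State Machine Power Good wait timer timed out",
    "Controller port error, Interface Gi1/0/12: Power given, but State Machine Power Good wait timer timed out",
    "Controller port error, Interface Gi2/0/3: Power given, but State Machine Power Good wait timer timed out",
    "Interface Gi1/0/5, changed state to up",
]

if __name__ == "__main__":
    # List the affected interfaces, most frequent first.
    for intf, count in count_poe_errors(sample_log).most_common():
        print(f"{intf}: {count}")
```

Feeding it the output of a syslog server export rather than the on-box buffer is probably the safer choice, since the local log may have wrapped by the time someone notices a dead port.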

I have found similar issues or bugs in older codes, have we regressed?

Labels: Catalyst 3000

12-04-2021 09:18 PM - edited 12-06-2021 04:47 PM

@oscar.garcia88 wrote:

Uptime for this control processor is 15 minutes

System returned to ROM by Power Failure or Unknown at 11:08:20 HGO Thu Dec 2 2021

I am already responding to your thread (LINK)

12-06-2021 09:04 AM

Actually, I reloaded the switch; the restart was not caused by a power failure. The issue was spontaneous.

03-28-2022 03:51 AM

Anyone know if the POE issue is resolved in 16.12.7 or if 16.9.8 would be the best way to go here?

Thanks.

03-28-2022 04:11 AM - edited 03-28-2022 04:16 AM

@mattw wrote:

Anyone know if the POE issue is resolved in 16.12.7 or if 16.9.8 would be the best way to go here?

Thanks.

Stay away from 16.12.X if the switch is in a stack.

03-28-2022 05:25 AM

Why?

I know the code has had a lot of issues, but it seems to be fairly stable now after 16.12.5b. I run this code on half of my switches and have only had oddities on a couple of stacks for which I could not determine an RCA.

Oddities: a stack stopped processing data on random ports on one switch in a stack of 4 (reloading that member resolved it). A random switch crashed in a stack of 3. We had POE ports go bad, and a reboot did not resolve it, so we replaced the switch.

I'm tempted to roll out 16.12.7 though. I didn't run the 16.9.x train long enough on 3850s, or on enough of them, to really gauge whether it's a solid code or not; however, the 16.12.x train has a longer life than 16.9.x.

03-28-2022 06:06 AM

We have 43 stacks of Catalyst 3850s on code 16.12.5b and another 51 standalone 3850s on this code. We have been running this code for around 10 months now. I would say that it is relatively stable and has the largest number of security fixes, so I would recommend that everyone move off the 16.9.x code because of its security vulnerabilities. I don't recall any PoE issue being reported since we upgraded.

03-28-2022 04:36 PM - edited 03-28-2022 05:14 PM

@AdamF1 wrote:

Why?

I am glad someone asked.

Over the last 2 years, I have built up a very long list of TAC cases regarding 3850 and 9300 switches with 16.12.4 and later.

The most troublesome version is 16.12.4. If the switches are standalone there are no issues, even with 16.12.4.

But once they are stacked, each CPU and memory of each stack member needs to be monitored daily.

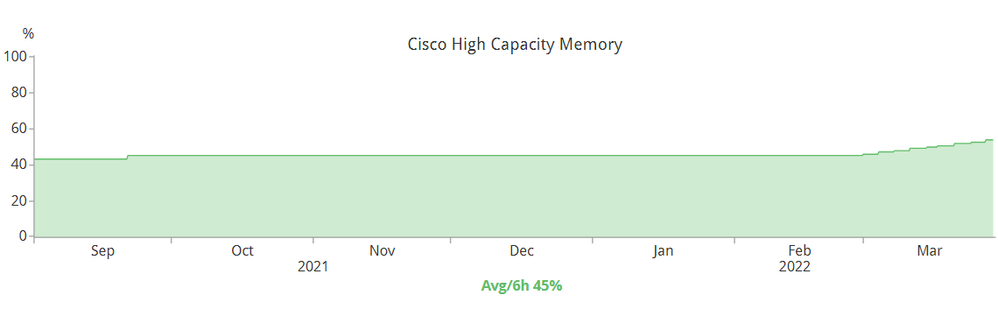

Let me provide a picture:

The picture above is the memory utilization of a switch member of a stack (210 days). Remember, "a switch member of a stack" and not the average memory utilization of the entire stack. Totally different stuff here.

The stack has been running 16.12.5b for the last 31 weeks. The stack has two SFP+ optics, a plethora of PoE phones, APs, CCTV, etc. Most importantly, the stack is Dot1X.

The "average" memory utilization for a switch member of a stack should be <30%. Anything higher than that means trouble.

From the time this stack was turned on, there have been no network changes: no additional ports patched (locked up) and, more importantly, no power reboot.

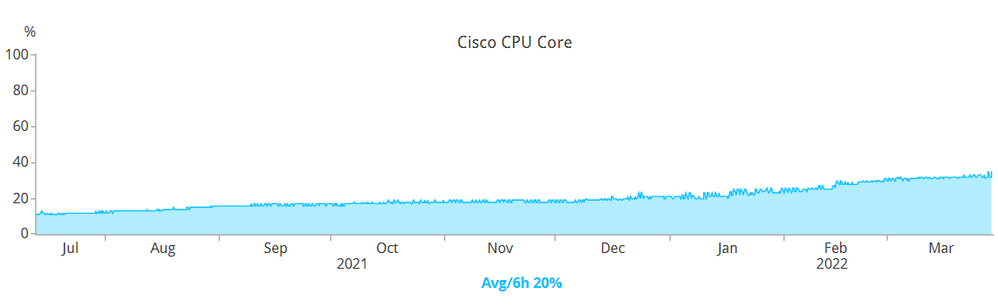

Ok, how about the CPU utilization of a switch stack member?

NOTE: A 3850 has FOUR (4) CPUs.

Have a look at the picture below:

This is a single core from a switch member of a stack. (I am now monitoring each CPU from each stack member.) This is a stack on 16.12.5b with an uptime of 38 weeks.

Same as before: Dot1X, PoE, SFP+ optics.

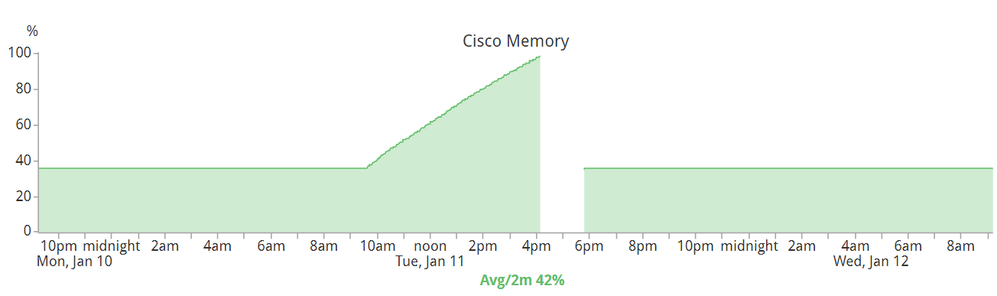

Still not impressed? How about the next one?

This is what a single 3850 CPU memory is going to look like when the stack-mgr goes ballistics. The cause of this issue was a lose stacking cable and the cable was fixes several hours before the spike. Notice the speed on how this CPU brought the entire stack down to it's knees? Take note: A single CPU (of four in a switch) "hot spun" and, after a few hours later, had to cold-reboot the entire stack.

NOTE: I have been "beta testing" Cisco's IOS/IOS-XE codes for the last 10 years and I have never been so busy since IOS-XE was released.

To minimize "runaways" like the one above, I/we perform daily CPU and memory monitoring. If we cannot upgrade the firmware of the switches, we cold-reboot them. A cold reboot, in our opinion, is far better than the "reload" command because it completely clears out any stupid residual junk. Back in December 2021, I had a stack master "kick out" all the stack members because of "version mismatch". The stack had an uptime of several weeks and had just recently been upgraded to 16.12.6. After several hours of troubleshooting (including replacing all switch members), one team member got so "frustrated" (trying to keep this response "PG") that he cold-rebooted the entire stack, and that fixed the problem.
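To make the daily check concrete, here is a rough sketch of the per-member memory test described above. It assumes the utilization percentages have already been collected (by SNMP, CLI scraping, or whatever the monitoring tool does), applies the 30% rule of thumb from this post, and uses made-up sample numbers; the function and variable names are hypothetical.

```python
from statistics import mean

# Rule of thumb quoted in this post: per-member average memory should stay below 30%.
MEMORY_THRESHOLD_PCT = 30.0


def flag_members(samples, threshold=MEMORY_THRESHOLD_PCT):
    """Flag stack members whose average memory utilization exceeds the threshold.

    samples maps a stack member number to a list of memory-utilization
    percentages, oldest first. Returns a list of (member, average, growth)
    tuples, where growth is the last sample minus the first.
    """
    flagged = []
    for member in sorted(samples):
        values = samples[member]
        if not values:
            continue  # no data collected for this member
        average = mean(values)
        growth = values[-1] - values[0]
        if average > threshold:
            flagged.append((member, round(average, 1), round(growth, 1)))
    return flagged


# Hypothetical daily samples for a three-member stack (illustration only).
daily_samples = {
    1: [24.0, 24.5, 25.0, 25.0],
    2: [28.0, 33.0, 39.0, 46.0],
    3: [22.0, 22.0, 22.5, 22.0],
}

if __name__ == "__main__":
    for member, average, growth in flag_members(daily_samples):
        print(f"member {member}: average {average}%, growth {growth} points")
```

The point of keeping the data keyed by member rather than by stack is the one made earlier in this post: a stack-wide average can look healthy while one member is quietly climbing.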

Sorry for such a long response but I hope this helps (someone).

03-28-2022 05:04 PM

Side question Leo-

What software are you using to monitor the CPU/Memory of the individual switches in a stack? Also what is your polling rate to see that spike before a crash?

I almost wonder if part of the issues have to do with different manufacturing dates of the 3850's? The only change I was aware of is where they recessed the mode button some years later after the initial 3850 release.

03-28-2022 05:19 PM

03-28-2022 05:26 PM

@AdamF1 wrote:

I almost wonder if part of the issues have to do with different manufacturing dates of the 3850's? The only change I was aware of is where they recessed the mode button some years later after the initial 3850 release.

I have to be fair here. My previous response is very doom-n-gloom-the-end-is-at-hand. Yeah, too much for someone to stomach sometimes.

Here's the bright side: I have about 15 stacks of 3850s with an uptime of >4 years. No crashes, not a single traceback seen. All optics are up/up. CPU and memory are as flat as a chessboard. Those stacks are running 3.6.7.

And in my humble opinion, IOS-XE trains 3.6.X and 3.7.X are THE most stable OS for the 3650/3850. Hands down. None of our 3850 with 16.X.X can last 1 year without a cold-reboot/reload.

If it were not for Dot1X, I would be downgrading all our 3850 to 3.6.X.

03-28-2022 05:37 PM

You are good; we have all experienced different issues in the different trains, so it's always good to hear first hand from others.

I will say I have one site that runs multiple stacks on 16.9.x train on 3850 MGIGs and it seems we have one or 2 switches reload once a year with no indicator as to why.

3.6.8 has been a very stable code for us as well and has run many years on many stacks without issues for what we use them for.

At this point I don't really have a lot of faith in any of the Cisco switch codes. Not all have the luxury of upgrading/downgrading these once they are in place due to 24/7 critical operations. These switches take well over 20 minutes to finish booting up and close to 30 once you calculate all the POE devices coming back online.

03-28-2022 05:54 PM - edited 03-28-2022 07:10 PM

@AdamF1 wrote:

These switches take well over 20 minutes to finish booting up and close to 30 once you calculate all the POE devices coming back online.

Ooooooooooooooooooo ... I gotta perfect solution for this: PERPETUAL POE!

We've been using this feature on the 3850 since it was introduced in 3.7.1.

The downside to this is the switch needs to be rebooted ONCE before the switch "remembers".

@AdamF1 wrote:

I will say I have one site that runs multiple stacks on 16.9.x train on 3850 MGIGs and it seems we have one or 2 switches reload once a year with no indicator as to why.

I could be mistaken, but I do recall there are about 9 Cisco Bug IDs about routers and switches on 16.X.X crashing without leaving any crashinfo or coredump files.

@AdamF1 wrote:

At this point I don't really have a lot of faith in any of the Cisco switch codes.

Well, depending on which part of the world you are in, there is something far worse than the quality (or lack thereof) of the codes: when Cisco TAC support starts playing SLA/KPI games.

03-29-2022 01:33 AM

Thank you for the very comprehensive response @Leo Laohoo!

So it seems that if we want to stay on 16.12, we need to cold reboot any stacks that are exhibiting issues and this should solve it, until the issues come back again.

03-29-2022 01:57 AM

@mattw wrote:

So it seems that if we want to stay on 16.12, we need to cold reboot any stacks that are exhibiting issues and this should solve it, until the issues come back again.

Cold reboot switches every two to three months regardless of issue(s) or not. Just reboot them.

Oh, I forgot one vital thing: port flapping. (If a port goes down and then up, NON-STOP, that falls under the definition of "port flapping".) Keep a sharp eye out for port flapping. IOS-XE, as a whole, does not like port flapping. It will crash the switch regardless of firmware version. We have "caught" several cases of "ghost" port flapping. Logs would show the ports going down and up, so we investigate and send someone to track down the cable. When that person is in front of the switch, he goes "nothing is plugged into that port". We shut/no shut the port and it is still flapping. Plug a PoE phone in and the phone will not boot up (because the PoE/LLDP process has crashed). Solution? Yup, reboot the bl**dy stack.
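As a rough illustration of how to keep that eye out, the sketch below counts link state changes per interface in syslog lines and reports the busy ones. It assumes the standard `%LINK-3-UPDOWN` message format; the threshold of 6 transitions is an arbitrary, hypothetical value, there is no time window, and the sample lines are generated for the example.

```python
import re
from collections import Counter

# Standard IOS/IOS-XE link state message; the interface name runs up to the comma.
LINK_EVENT = re.compile(
    r"%LINK-3-UPDOWN: Interface (?P<intf>[^,\s]+), changed state to (?P<state>up|down)"
)


def find_flapping(lines, min_transitions=6):
    """Return {interface: transition count} for ports at or above the threshold.

    min_transitions is a hypothetical cut-off; tune it to your environment.
    """
    transitions = Counter()
    for line in lines:
        match = LINK_EVENT.search(line)
        if match:
            transitions[match.group("intf")] += 1
    return {intf: n for intf, n in transitions.items() if n >= min_transitions}


def make_sample(interface, pairs):
    """Build made-up down/up message pairs for one interface (illustration only)."""
    lines = []
    for _ in range(pairs):
        for state in ("down", "up"):
            lines.append(
                f"%LINK-3-UPDOWN: Interface {interface}, changed state to {state}"
            )
    return lines


sample_log = make_sample("GigabitEthernet1/0/7", 4) + make_sample("GigabitEthernet1/0/9", 1)

if __name__ == "__main__":
    for intf, count in find_flapping(sample_log).items():
        print(f"{intf}: {count} state changes")
```

A port that shows up here with nothing patched into it would be a candidate for the "ghost" flapping described above.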

I really do not know how 16.12.X was able to get past "quality control". Utterly amazing.

06-24-2022 04:45 AM

Hi Cisco Experts,

So, is there a final, settled code for the PoE issues faced by 3700 or 3800 APs, Cisco IP Phones, etc.?

Has anyone got a confirmed answer on which IOS code fixes the issue?

We have 200-odd 3850 stacks. Because of the pandemic we did not notice this issue, but now that we are back on campus we are having to reboot switches left, right and centre.

Please reply if anyone has a confirmed answer.

Both bug IDs - https://bst.cisco.com/bugsearch/bug/CSCvv54912

and https://bst.cisco.com/bugsearch/bug/CSCvv50628

list the fixed releases as 16.12.5, 5a, 5b, 12.7, etc.

Do we know for a fact which is the stable and trustworthy release?

Thanking in advance.
