- Re: Troubleshooting - HTTP/HTTPS not accessible on the network


04-29-2018 10:47 PM - edited 03-08-2019 02:50 PM

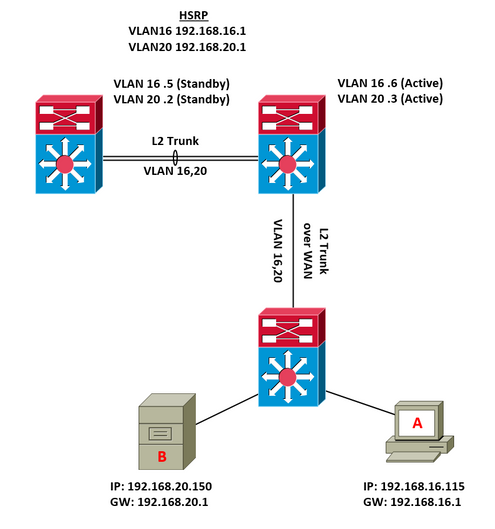

PC A is trying to access the web server on Server B. The WAN is Metro Ethernet with 1 ms latency.

- PING from A to B OK

- TRACEROUTE from A to B OK

- SSH from A to B OK

- HTTP/HTTPS from A to B NO RESPONSE

In the Wireshark capture, Server B tries to reach PC A after exhausting retries. Is there any obvious issue with the network?


Labels: Other Switching


05-01-2018 10:29 PM

Hi joe_chris!

I am writing from my phone, so I can't open the pcap file right now, but I will as soon as I get to work.

As I asked earlier, have you also run this test: when you are on the server itself, can you open a web browser and access the web server? After that, please post the Wireshark capture.

Can you please send the output of "show interfaces" for the interfaces that the PC and the server are connected to?

Zanthra, I don't agree with you about the MTU size. It is fine and doesn't need to be decreased. I would say the link between the sites is as good as it can be!

Thanks

/Mohammed


05-01-2018 11:15 PM

Hi Mohammed,

Thanks for checking the PCAP files.

I cannot run a web browser on the server itself. However please find the PCAP file captured from the server interface, i.e. from the server perspective so you can crosscheck with previous PCAP files. I have included both HTTPS and SSH for comparison.

Will get back to you on 'show interface'.


05-01-2018 11:17 PM


05-01-2018 11:09 PM

Hi Zanthra,

Thanks for the insight. I will test with a lower MTU size to see if it makes any difference.


05-02-2018 12:57 AM

Hello,

On a side note, the problem might just as well be the L2 WAN link. How is it configured? Can you post the output of 'show interfaces x' for the two interfaces that connect the sites?


05-02-2018 01:08 AM

Hi Georg,

Basically, the port is connected to the CPE switch supplied by the provider. There is one CPE switch at each end. The switch carries different L2 networks from Site A to Site B, e.g. port 1 is L2 network 1, port 2 is L2 network 2, etc.

Note that I've removed the actual interface number.

Site A

GigabitEthernetx/y is up, line protocol is up (connected)

Hardware is Gigabit Ethernet Port, address is 2894.0fc6.3bd9 (bia 2894.0fc6.3bd9)

Description:

MTU 1500 bytes, BW 1000000 Kbit, DLY 10 usec,

reliability 255/255, txload 3/255, rxload 1/255

Encapsulation ARPA, loopback not set

Keepalive set (10 sec)

Full-duplex, 1000Mb/s, link type is auto, media type is 10/100/1000-TX

input flow-control is on, output flow-control is on

Auto-MDIX on (operational: on)

ARP type: ARPA, ARP Timeout 04:00:00

Last input 00:00:04, output never, output hang never

Last clearing of "show interface" counters never

Input queue: 0/2000/0/0 (size/max/drops/flushes); Total output drops: 0

Queueing strategy: fifo

Output queue: 0/40 (size/max)

5 minute input rate 840000 bits/sec, 1190 packets/sec

5 minute output rate 12688000 bits/sec, 1662 packets/sec

25014879428 packets input, 6840670406116 bytes, 0 no buffer

Received 202383839 broadcasts (57384203 multicasts)

0 runts, 0 giants, 0 throttles

0 input errors, 0 CRC, 0 frame, 0 overrun, 0 ignored

0 input packets with dribble condition detected

38918159560 packets output, 45904476975531 bytes, 0 underruns

0 output errors, 0 collisions, 0 interface resets

0 babbles, 0 late collision, 0 deferred

0 lost carrier, 0 no carrier

0 output buffer failures, 0 output buffers swapped out

Site B

GigabitEthernety/z is up, line protocol is up (connected)

Hardware is Gigabit Ethernet Port, address is 2894.0fc6.3cc8 (bia 2894.0fc6.3cc8)

Description:

MTU 1500 bytes, BW 1000000 Kbit, DLY 10 usec,

reliability 255/255, txload 1/255, rxload 3/255

Encapsulation ARPA, loopback not set

Keepalive set (10 sec)

Full-duplex, 1000Mb/s, link type is auto, media type is 10/100/1000-TX

input flow-control is on, output flow-control is on

Auto-MDIX on (operational: on)

ARP type: ARPA, ARP Timeout 04:00:00

Last input 00:00:00, output never, output hang never

Last clearing of "show interface" counters never

Input queue: 0/2000/0/0 (size/max/drops/flushes); Total output drops: 0

Queueing strategy: fifo

Output queue: 0/40 (size/max)

5 minute input rate 11990000 bits/sec, 1611 packets/sec

5 minute output rate 862000 bits/sec, 1198 packets/sec

32406759005 packets input, 39711848191204 bytes, 0 no buffer

Received 887560244 broadcasts (438536781 multicasts)

0 runts, 0 giants, 0 throttles

0 input errors, 0 CRC, 0 frame, 0 overrun, 0 ignored

0 input packets with dribble condition detected

20891977179 packets output, 5250509294585 bytes, 0 underruns

0 output errors, 0 collisions, 0 interface resets

0 babbles, 0 late collision, 0 deferred

0 lost carrier, 0 no carrier

0 output buffer failures, 0 output buffers swapped out


05-02-2018 01:21 AM

Hello,

No errors on the interfaces, so the line actually looks good.

I think it has been mentioned before in this thread that MTU could be a problem. Try a simple ping test from your Site A clients to your Site B clients with the DF bit set, and try different packet sizes until you get a result like the second one below. Note that -l sets the payload size, so the largest size that still gets a reply, plus 28 bytes for the IP and ICMP headers, is the maximum MTU your link supports:

ping -f -l 1400 8.8.8.8

Pinging 8.8.8.8 with 1400 bytes of data:

Reply from 8.8.8.8: bytes=1400 time=10ms TTL=58

Reply from 8.8.8.8: bytes=1400 time=11ms TTL=58

Reply from 8.8.8.8: bytes=1400 time=16ms TTL=58

Reply from 8.8.8.8: bytes=1400 time=14ms TTL=58

Ping statistics for 8.8.8.8:

Packets: Sent = 4, Received = 4, Lost = 0 (0% loss),

Approximate round trip times in milli-seconds:

Minimum = 10ms, Maximum = 16ms, Average = 12ms

ping -f -l 1500 8.8.8.8

Pinging 8.8.8.8 with 1500 bytes of data:

Packet needs to be fragmented but DF set.

Packet needs to be fragmented but DF set.

Packet needs to be fragmented but DF set.

Packet needs to be fragmented but DF set.

Ping statistics for 8.8.8.8:

Packets: Sent = 4, Received = 0, Lost = 4 (100% loss),
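The trial-and-error search described above can be automated as a binary search over the payload size. The following is only a sketch: `largest_df_payload`, `path_mtu_from_payload`, and the `probe` callback are hypothetical names, and `probe` is assumed to return True when a ping with the DF bit set and the given payload size gets a reply (for example, by wrapping the `ping -f -l <size>` command shown above). The 28 bytes added at the end assume a 20-byte IPv4 header without options plus the 8-byte ICMP header.

```python
from typing import Callable

IPV4_HEADER = 20  # bytes, assuming no IP options
ICMP_HEADER = 8   # bytes, ICMP echo header


def largest_df_payload(probe: Callable[[int], bool],
                       low: int = 0, high: int = 1500) -> int:
    """Binary-search the largest payload size for which probe(size) is True.

    Assumes probe is monotonic: if one size passes, every smaller size passes.
    Returns -1 if no size in [low, high] passes.
    """
    best = -1
    while low <= high:
        mid = (low + high) // 2
        if probe(mid):
            best = mid       # mid fits, try something larger
            low = mid + 1
        else:
            high = mid - 1   # mid is too large, try something smaller
    return best


def path_mtu_from_payload(payload: int) -> int:
    """Convert an ICMP payload size into the IP packet size (the MTU)."""
    return payload + IPV4_HEADER + ICMP_HEADER


if __name__ == "__main__":
    # Simulated link for illustration only: passes IP packets up to 1496 bytes.
    def fake_probe(size: int) -> bool:
        return size + IPV4_HEADER + ICMP_HEADER <= 1496

    payload = largest_df_payload(fake_probe)
    print(payload, path_mtu_from_payload(payload))
```

In real use, `fake_probe` would be replaced by a function that actually sends the ping. On a pure L2 path an oversized frame may simply be dropped without any ICMP error, so a timeout should be treated as a failed probe as well.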


05-02-2018 02:00 AM


05-02-2018 03:03 AM - edited 05-02-2018 04:36 AM

Hi joe_chris!

Something is happening at the point where the server certificate is being checked. I don't know if this is related to the MTU size. You do have some kind of problem with TCP Dup ACKs, as I told you before. The server and the PC are not communicating well; on the server we see a lot of TCP retransmissions without any response.

Have you tested with the PC and the server in the same VLAN? Can you take a Wireshark capture and post it? It looks like some packets are being lost somewhere, but I don't know where.

The server is sending 1460 bytes of TCP data plus 54 bytes of headers (Ethernet 14, IP 20, TCP 20) = 1514 bytes, which is a normal full-size frame:

See the pictures:

/Mohammed


05-02-2018 08:37 AM - edited 05-02-2018 09:30 AM

The duplicate ACK is related to TCP Fast Retransmit. When the client receives the 80-byte "Server Hello Done" segment before the segment that precedes it in sequence, it sends a duplicate ACK to signal that the earlier segment may have been lost.

Something is silently eating full-sized packets on the path or blocking the ICMP error messages, because if the server received an ICMP message saying its packets were too large, it would reduce its packet size for that path on its own.

Joe_chris, can you contact the WAN provider and check with them what the maximum frame size is? A VLAN tag adds 4 bytes to an already 1514-byte frame, bringing it to 1518 bytes (or 1522 bytes if the 4-byte FCS is counted), which could be larger than they allow.
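The frame-size arithmetic above can be checked with a few lines of Python. This is a sketch only: `frame_size` is a hypothetical helper, and it assumes IPv4 and TCP headers of 20 bytes each (no options) and a single 802.1Q tag.

```python
ETH_HEADER = 14   # destination MAC + source MAC + EtherType
VLAN_TAG = 4      # one 802.1Q tag
FCS = 4           # frame check sequence (usually not shown in captures)
IPV4_HEADER = 20  # assuming no IP options
TCP_HEADER = 20   # assuming no TCP options


def frame_size(tcp_payload: int, tagged: bool = False,
               with_fcs: bool = False) -> int:
    """Return the Ethernet frame size in bytes for a given TCP payload."""
    size = tcp_payload + TCP_HEADER + IPV4_HEADER + ETH_HEADER
    if tagged:
        size += VLAN_TAG
    if with_fcs:
        size += FCS
    return size


if __name__ == "__main__":
    # 1460 bytes is the TCP payload size seen in the capture.
    for tagged in (False, True):
        for with_fcs in (False, True):
            size = frame_size(1460, tagged, with_fcs)
            print(f"tagged={tagged} fcs={with_fcs}: {size} bytes")
```

Whether the provider counts the FCS in its frame-size limit is not known from this thread, which is why both variants are shown.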


05-04-2018 01:36 AM

Hi Zanthra,

While waiting for the WAN provider to get back, here is the output with this tool.

https://www.elifulkerson.com/projects/mturoute.php

See if that helps.


05-04-2018 01:40 AM

Hi Mohammed,

I did test the PC and the server on the same VLAN. There was no issue at all.

Thanks.


05-04-2018 01:38 AM


05-04-2018 03:44 PM - edited 05-04-2018 03:49 PM

If the MTU is 1496, that means your WAN link will carry normal frames with a 1500-byte payload as long as they don't have the extra 4-byte VLAN tag. Here are a few possible solutions.

1) Make sure all hosts including router interfaces on all VLANs that cross the WAN link are configured with the 1496 byte MTU.

2) Remove the VLAN tagging and use a different port on the CPE for each VLAN.

3) Reorganize the network so that the VLANs don't cross the WAN link, and instead have hosts at site 2 use a local router to route traffic to site 1 as needed.
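For option 1, the effect of a 1496-byte MTU can be worked out as below. This is a rough sketch with hypothetical helper names; it assumes IPv4 and TCP headers of 20 bytes each without options, a single 802.1Q tag, and frame sizes counted without the FCS.

```python
def tcp_mss(ip_mtu: int) -> int:
    """Largest TCP payload that fits in one IP packet of size ip_mtu."""
    return ip_mtu - 20 - 20  # minus IPv4 header, minus TCP header


def tagged_frame_size(ip_mtu: int) -> int:
    """Size of a full 802.1Q-tagged Ethernet frame, without the FCS."""
    return ip_mtu + 14 + 4  # plus Ethernet header, plus VLAN tag


if __name__ == "__main__":
    for mtu in (1500, 1496):
        print(f"MTU {mtu}: MSS {tcp_mss(mtu)}, "
              f"tagged frame {tagged_frame_size(mtu)} bytes")
```

With the MTU lowered to 1496, a full-size tagged frame comes out at the same size as a standard untagged frame, which is why the hosts on every VLAN that crosses the link would need the change.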


05-15-2018 11:49 PM - edited 05-15-2018 11:50 PM

Hi Zanthra,

We solved the issue by increasing the WAN link MTU to 1600.

Thanks everyone for your help!!
