IOS XRv 5.1.1 LDP Interop with Junos


02-25-2014 01:45 PM

Hi

I have configured a demo lab:

JunoS---------XRv5.1.1---------Ixia

I have set up IS-IS and LDP on all devices.

IS-IS is working fine:

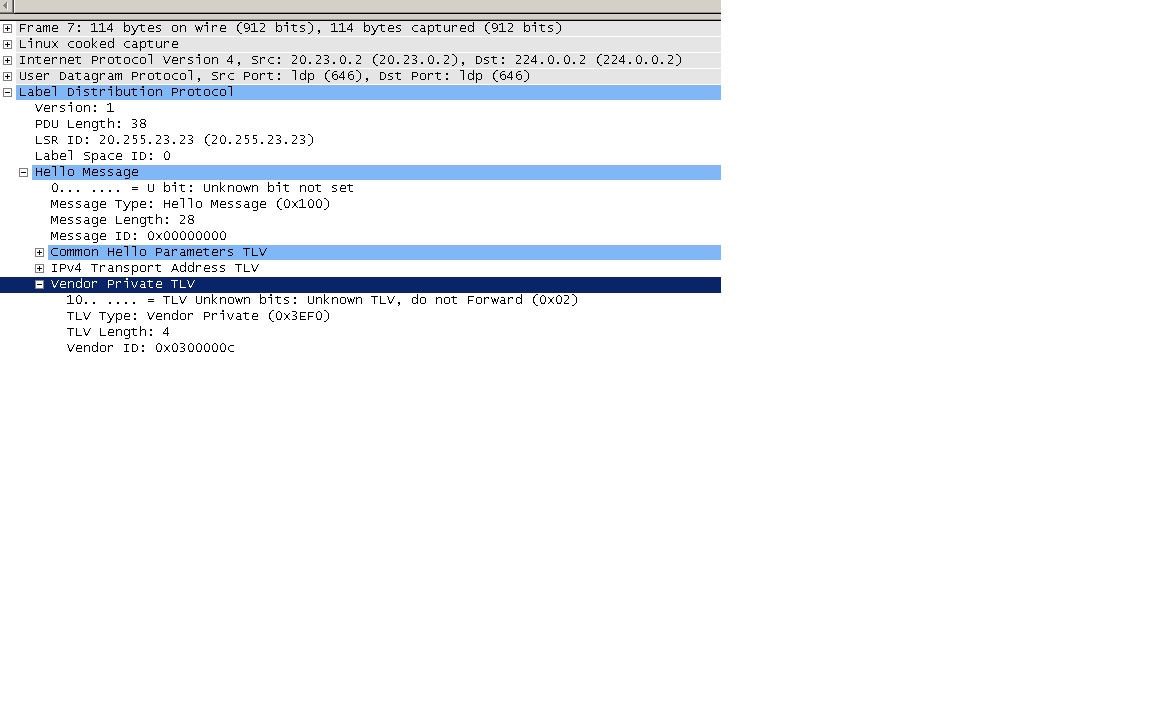

However, I am not able to bring LDP up. I took a packet capture and I see that Cisco is sending a vendor-private TLV in both the Hello and the Initialization message. Ixia does not care, so its session comes up, but Juniper seems not to like it and terminates the session.

I have tried to find a way to turn that off, with no luck!

I would appreciate any informative comments.
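To make the vendor-private TLV question concrete, here is a minimal Python sketch (not taken from any vendor tool) that decodes the 4-byte LDP TLV header: U bit, F bit, 14-bit type and 16-bit length. It assumes the RFC 5036 reservation of TLV types 0x3E00-0x3EFF for vendor-private use. The sample header bytes are hand-built to match the 0x3eff TLV (U: 1, F: 0, len 12) that shows up in the Junos trace later in this thread.

```python
import struct

# TLV types 0x3E00-0x3EFF are reserved for vendor-private TLVs (RFC 5036).
VENDOR_PRIVATE_TLV = range(0x3E00, 0x3F00)


def decode_tlv_header(data: bytes) -> dict:
    """Decode an LDP TLV header: U bit, F bit, 14-bit type, 16-bit length."""
    first, length = struct.unpack("!HH", data[:4])
    tlv_type = first & 0x3FFF  # low 14 bits carry the type
    return {
        "u_bit": (first >> 15) & 1,  # unknown-TLV handling bit
        "f_bit": (first >> 14) & 1,  # forward-unknown-TLV bit
        "type": tlv_type,
        "length": length,
        "vendor_private": tlv_type in VENDOR_PRIVATE_TLV,
    }


# Hand-built header for the 0x3eff TLV: U=1 sets bit 15, so 0x8000 | 0x3EFF = 0xBEFF.
header = bytes([0xBE, 0xFF, 0x00, 0x0C])
print(decode_tlv_header(header))
```

Because the U bit is set on this TLV, a receiver that does not recognize it is expected to skip it rather than reject the message.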


Accepted Solutions


06-11-2014 02:53 PM

Juniper developer analyzed the issue:

When IOS XRv initiates the LDP session, right after the three-way TCP handshake it sends this:

|Jun 9 09:28:50.021586 LDP rcvd TCP PDU 172.16.20.20 -> 172.16.2.2 (none)

|Jun 9 09:28:50.021599 ver 1, pkt len 67, PDU len 63, ID 172.16.20.20:0

|Jun 9 09:28:50.021608 Msg Initialization (0x200), len 53, ID 1094

|Jun 9 09:28:50.021616 TLV SesParms (0x500), U: 0, F: 0, len 14

|Jun 9 09:28:50.021625 Ver 1, holdtime 180, flags <> (0x0)

|Jun 9 09:28:50.021634 vect_lim 0, max_pdu 1000, id

|Jun 9 09:28:50.021650 TLV P2MPCap (0x508), U: 1, F: 0, len 1

|Jun 9 09:28:50.021658 Capability Flags 0x80

|Jun 9 09:28:50.021667 TLV Unknown (0x509), U: 1, F: 0, len 1

|Jun 9 09:28:50.021674 TLV Unknown (0x50b), U: 1, F: 0, len 1

|Jun 9 09:28:50.021682 TLV Unknown (0x3eff), U: 1, F: 0, len 12

It's the max_pdu 1000 that makes Juniper send the notification (Juniper expects 1200 or higher).
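The SesParms (0x500) TLV in that trace has a 14-byte value, and the max_pdu field sits inside it. A minimal Python sketch of decoding that value, assuming the RFC 5036 Common Session Parameters layout. The sample bytes are hand-built to match the trace (Ver 1, holdtime 180, flags 0x0, vect_lim 0, max_pdu 1000); the receiver LDP ID is a hypothetical 172.16.2.2:0 because the trace cuts it off.

```python
import ipaddress
import struct

# Common Session Parameters value (after the 4-byte TLV header), network byte order:
# version(2) keepalive(2) flags(1: A=0x80, D=0x40) pvlim(1) max_pdu(2)
# receiver LSR ID(4) receiver label space(2) -> 14 bytes total
SESSION_PARAMS = struct.Struct("!HHBBH4sH")


def decode_session_params(value: bytes) -> dict:
    """Decode the 14-byte value of a Common Session Parameters TLV (0x0500)."""
    version, keepalive, flags, pvlim, max_pdu, lsr_id, label_space = SESSION_PARAMS.unpack(value)
    return {
        "version": version,
        "keepalive": keepalive,
        "a_bit": (flags >> 7) & 1,
        "d_bit": (flags >> 6) & 1,
        "pvlim": pvlim,
        "max_pdu": max_pdu,  # raw on-the-wire proposal
        "receiver_id": f"{ipaddress.IPv4Address(lsr_id)}:{label_space}",
    }


# Hypothetical receiver ID; the other fields mirror the Junos trace.
sample = SESSION_PARAMS.pack(1, 180, 0, 0, 1000, ipaddress.IPv4Address("172.16.2.2").packed, 0)
print(decode_session_params(sample))
```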


02-26-2014 08:43 AM

Hi,

Can you collect the output of show mpls ldp trace and show mpls ldp neighbor?

Let's see whether that vendor-private TLV is causing the issue.

Thanks,

Chander


02-26-2014 10:03 AM

I did look through the trace, but it was not very informative; my best guess was the vendor-private TLV in the Hello:

RP/0/0/CPU0:XRv1#show mpls ldp trace | utility tail -20

Wed Feb 26 17:58:39.142 UTC

Feb 26 17:58:29.773 mpls/ldp/misc 0/0/CPU0 t1 [MISC]:3199: TCP: vaccept_batch: afi=1, fd=59, 1 accepts

Feb 26 17:58:29.773 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:3076: VRF(0x60000000): ldp_tcp_xport_incall: Call from 22.255.1.1 with Adj Grp(22.255.1.1) with NULL tcb

Feb 26 17:58:30.783 mpls/ldp/misc 0/0/CPU0 t1 [MISC]:3199: TCP: vaccept_batch: afi=1, fd=59, 1 accepts

Feb 26 17:58:30.783 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:3076: VRF(0x60000000): ldp_tcp_xport_incall: Call from 22.255.1.1 with Adj Grp(22.255.1.1) with NULL tcb

Feb 26 17:58:31.793 mpls/ldp/misc 0/0/CPU0 t1 [MISC]:3199: TCP: vaccept_batch: afi=1, fd=59, 1 accepts

Feb 26 17:58:31.793 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:3076: VRF(0x60000000): ldp_tcp_xport_incall: Call from 22.255.1.1 with Adj Grp(22.255.1.1) with NULL tcb

Feb 26 17:58:32.793 mpls/ldp/misc 0/0/CPU0 t1 [MISC]:3199: TCP: vaccept_batch: afi=1, fd=59, 1 accepts

Feb 26 17:58:32.793 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:3076: VRF(0x60000000): ldp_tcp_xport_incall: Call from 22.255.1.1 with Adj Grp(22.255.1.1) with NULL tcb

Feb 26 17:58:33.803 mpls/ldp/misc 0/0/CPU0 t1 [MISC]:3199: TCP: vaccept_batch: afi=1, fd=59, 1 accepts

Feb 26 17:58:33.803 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:3076: VRF(0x60000000): ldp_tcp_xport_incall: Call from 22.255.1.1 with Adj Grp(22.255.1.1) with NULL tcb

Feb 26 17:58:34.803 mpls/ldp/misc 0/0/CPU0 t1 [MISC]:3199: TCP: vaccept_batch: afi=1, fd=59, 1 accepts

Feb 26 17:58:34.803 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:3076: VRF(0x60000000): ldp_tcp_xport_incall: Call from 22.255.1.1 with Adj Grp(22.255.1.1) with NULL tcb

Feb 26 17:58:35.813 mpls/ldp/misc 0/0/CPU0 t1 [MISC]:3199: TCP: vaccept_batch: afi=1, fd=59, 1 accepts

Feb 26 17:58:35.813 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:3076: VRF(0x60000000): ldp_tcp_xport_incall: Call from 22.255.1.1 with Adj Grp(22.255.1.1) with NULL tcb

Feb 26 17:58:36.813 mpls/ldp/misc 0/0/CPU0 t1 [MISC]:3199: TCP: vaccept_batch: afi=1, fd=59, 1 accepts

Feb 26 17:58:36.813 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:3076: VRF(0x60000000): ldp_tcp_xport_incall: Call from 22.255.1.1 with Adj Grp(22.255.1.1) with NULL tcb

Feb 26 17:58:37.823 mpls/ldp/misc 0/0/CPU0 t1 [MISC]:3199: TCP: vaccept_batch: afi=1, fd=59, 1 accepts

Feb 26 17:58:37.823 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:3076: VRF(0x60000000): ldp_tcp_xport_incall: Call from 22.255.1.1 with Adj Grp(22.255.1.1) with NULL tcb

Feb 26 17:58:38.822 mpls/ldp/misc 0/0/CPU0 t1 [MISC]:3199: TCP: vaccept_batch: afi=1, fd=59, 1 accepts

Feb 26 17:58:38.822 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:3076: VRF(0x60000000): ldp_tcp_xport_incall: Call from 22.255.1.1 with Adj Grp(22.255.1.1) with NULL tcb

RP/0/0/CPU0:XRv1#sh mpls ldp neighbor

Wed Feb 26 17:58:43.472 UTC

RP/0/0/CPU0:XRv1#


02-26-2014 11:56 PM

OK, with a friend's help I found that neither Juniper nor the vendor-private TLV is the problem, and that the one sending the RST is Cisco:

Feb 27 07:31:10.093 mpls/ldp/str 0/0/CPU0 t1 [PEER]:3587: Peer(22.255.1.1:0): Unsupported/Unknown TLV (type 0x503, U/F 1/0) rcvd in INIT msg

Feb 27 07:31:10.093 mpls/ldp/cap 0/0/CPU0 t1 [CAP]:1110: Cap(0x508): Tx to peer 22.255.1.1:0

Feb 27 07:31:10.093 mpls/ldp/cap 0/0/CPU0 t1 [CAP]:1110: Cap(0x509): Tx to peer 22.255.1.1:0

Feb 27 07:31:10.093 mpls/ldp/cap 0/0/CPU0 t1 [CAP]:1110: Cap(0x50b): Tx to peer 22.255.1.1:0

Feb 27 07:31:10.093 mpls/ldp/cap 0/0/CPU0 t1 [CAP]:1110: Cap(0x3eff): Tx to peer 22.255.1.1:0

Feb 27 07:31:10.093 mpls/ldp/misc 0/0/CPU0 t1 [MISC]:3199: TCP: vaccept_batch: afi=1, fd=59, 1 accepts

Feb 27 07:31:10.093 mpls/ldp/misc 0/0/CPU0 t1 [MISC]:2381: ldp_tcp_xport_incall succeeded: peer 22.255.1.1:0, state: 2 fd=110

Feb 27 07:31:10.093 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:1482: ldp_tcp_xport_disconnect: state: 1 fd: 110

Feb 27 07:31:10.103 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:3301: TCB(22.255.1.1): fd -1 state 1, adj_grp 0 invalid tcp conn

Feb 27 07:31:10.103 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:1482: ldp_tcp_xport_disconnect: state: 1 fd: 4294967295

Notice that, according to the RFC, when the U bit is set to 1 the unknown TLV should be ignored and the rest of the message processed as if the TLV did not exist:

http://tools.ietf.org/html/rfc5036#section-3.6.1.1

U-bit

Unknown TLV bit. Upon receipt of an unknown TLV, if U is clear (=0), a notification MUST be returned to the message originator and the entire message MUST be ignored; if U is set (=1), the unknown TLV is silently ignored and the rest of the message is processed as if the unknown TLV did not exist.
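The U-bit rule quoted above can be applied mechanically to the XR trace entry earlier in this post. Below is a minimal Python sketch; the regex is written against the trace lines pasted in this thread and is an assumption, not a documented Cisco log format. As far as I know, TLV 0x0503 is the FT Session TLV used by LDP graceful restart, which fits the follow-up test of disabling GR.

```python
import re
from typing import Optional

# Assumed pattern, matching entries like:
# "Unsupported/Unknown TLV (type 0x503, U/F 1/0) rcvd in INIT msg"
UNKNOWN_TLV_RE = re.compile(r"Unknown TLV \(type (0x[0-9a-fA-F]+), U/F ([01])/([01])\)")


def unknown_tlv_action(trace_line: str) -> Optional[str]:
    """Apply the RFC 5036 U-bit rule to an unknown TLV reported in a trace line."""
    match = UNKNOWN_TLV_RE.search(trace_line)
    if match is None:
        return None  # the line does not report an unknown TLV
    tlv_type = int(match.group(1), 16)
    u_bit = int(match.group(2))
    if u_bit == 1:
        # U set: silently skip this TLV and keep processing the message.
        return f"ignore TLV 0x{tlv_type:04x}, keep processing message"
    # U clear: notify the originator and ignore the entire message.
    return f"notify originator, drop message (TLV 0x{tlv_type:04x})"


line = ("Feb 27 07:31:10.093 mpls/ldp/str 0/0/CPU0 t1 [PEER]:3587: Peer(22.255.1.1:0): "
        "Unsupported/Unknown TLV (type 0x503, U/F 1/0) rcvd in INIT msg")
print(unknown_tlv_action(line))  # ignore TLV 0x0503, keep processing message
```

With U/F 1/0 the TLV should simply be skipped, so this entry on its own does not explain the disconnect that follows it.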


02-27-2014 12:10 AM

As a follow-up, I disabled graceful restart (GR) on Juniper, and XRv still won't allow the session to come up:

Feb 27 08:07:16.184 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:3076: VRF(0x60000000): ldp_tcp_xport_incall: Call from 22.255.1.1 with Adj Grp(22.255.1.1) with NULL tcb

Feb 27 08:07:17.194 mpls/ldp/cap 0/0/CPU0 t1 [CAP]:1110: Cap(0x508): Tx to peer 22.255.1.1:0

Feb 27 08:07:17.194 mpls/ldp/cap 0/0/CPU0 t1 [CAP]:1110: Cap(0x509): Tx to peer 22.255.1.1:0

Feb 27 08:07:17.194 mpls/ldp/cap 0/0/CPU0 t1 [CAP]:1110: Cap(0x50b): Tx to peer 22.255.1.1:0

Feb 27 08:07:17.194 mpls/ldp/cap 0/0/CPU0 t1 [CAP]:1110: Cap(0x3eff): Tx to peer 22.255.1.1:0

Feb 27 08:07:17.194 mpls/ldp/misc 0/0/CPU0 t1 [MISC]:3199: TCP: vaccept_batch: afi=1, fd=59, 1 accepts

Feb 27 08:07:17.194 mpls/ldp/misc 0/0/CPU0 t1 [MISC]:2381: ldp_tcp_xport_incall succeeded: peer 22.255.1.1:0, state: 2 fd=110

Feb 27 08:07:17.194 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:1482: ldp_tcp_xport_disconnect: state: 1 fd: 110

Feb 27 08:07:17.194 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:3301: TCB(22.255.1.1): fd -1 state 1, adj_grp 0 invalid tcp conn

Feb 27 08:07:17.194 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:1482: ldp_tcp_xport_disconnect: state: 1 fd: 4294967295

So the plot thickens.


06-07-2014 01:39 PM

I am having exactly the same issue.

Does anybody know how to work around the issue?

Any help is appreciated.

Thanks!


06-07-2014 01:39 PM

hey guys,

The Unsupported/Unknown TLV entry is just a notification that we saw such a TLV; it is not disconnecting the session because of it.

I am a bit concerned about this part:

Feb 27 08:07:16.184 mpls/ldp/err 0/0/CPU0 t1 [ERR][MISC]:3076: VRF(0x60000000): ldp_tcp_xport_incall: Call from 22.255.1.1 with Adj Grp(22.255.1.1) with NULL tcb

The socket appears to be invalid on multiple message sends (even after this INIT), and I think that is caused by something else.

It seems that we don't have a TCP control block (TCB) for this address.

Could it be that the MPLS LDP configuration uses a different router ID, i.e. that it is configured to point to an interface instead of an address?

Can you show us the output of show run mpls ldp?

Regards

xander


06-11-2014 02:53 PM

If the reason, as you say, is that Juniper sends the notification because of that value, then Juniper is wrong. 4096 is only the default value; the receiving LSR must calculate the session value from the smaller of the two proposals, and this is not optional:

http://tools.ietf.org/html/rfc5036:

Max PDU Length

...

The receiving LSR MUST calculate the maximum PDU length for the session by using the smaller of its and its peer's proposals for Max PDU Length.
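The negotiation rule quoted above is just a minimum over the two proposals. A minimal Python sketch, assuming the RFC 5036 convention that a proposal of 255 or less means the 4096-octet default. The rejects_peer helper is hypothetical: it models the pre-fix Junos behaviour described in this thread, and the 1200 floor is the figure reported here, not a documented Junos constant.

```python
DEFAULT_MAX_PDU = 4096  # RFC 5036: a proposal of 255 or less means 4096 octets


def effective_proposal(max_pdu: int) -> int:
    """Translate an on-the-wire Max PDU Length proposal into octets."""
    return DEFAULT_MAX_PDU if max_pdu <= 255 else max_pdu


def negotiated_max_pdu(local: int, peer: int) -> int:
    """Session Max PDU Length: the smaller of the two proposals."""
    return min(effective_proposal(local), effective_proposal(peer))


def rejects_peer(peer: int, floor: int) -> bool:
    """Hypothetical model of a non-compliant check that refuses small proposals."""
    return effective_proposal(peer) < floor


# Junos proposes 4096, IOS XRv proposes 1000 (values from this thread).
print(negotiated_max_pdu(4096, 1000))  # 1000
# 1200 is the threshold reported in this thread for Junos before the fix.
print(rejects_peer(1000, floor=1200))  # True
```

A compliant receiver would simply run the session with 1000-octet PDUs instead of refusing the peer.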


06-22-2014 12:08 PM

The Juniper developer is working on a fix for issue (1). It looks like in the past (at least with traditional, non-virtual IOS XR), IOS XR never set the Max PDU value that low. Whenever there is a public release with the fix (allowing these low Max PDU values), I will let you know.


07-08-2014 10:01 PM

Hello Amonge,

I am having the same issue. Did you get an answer from Cisco or Juniper? If you have already resolved it, please let me know how.

Thanks,


09-10-2014 03:38 PM

Hello,

Junos has been changed to accept a Max PDU value of 1000.

This fix (via PR/1007096) will be available in 14.1R3 and 14.2R1, currently planned for early October.

Hope this helps.

Thanks,

Ato


09-10-2014 12:44 PM

Let me rephrase my description above, cases (1) and (2).

The TCP session is initiated by the router with the higher LDP transport address (compared as unsigned integers; RFC 5036 calls this the active role). This router is also the one that sends the first LDP Initialization message. So just by changing the addresses, I can determine which side initiates the TCP session and sends the first LDP Initialization message.
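The role selection described above can be sketched in a few lines of Python. This assumes, as the Junos trace in this thread suggests, that 172.16.20.20 is the XRv transport address and 172.16.2.2 the Junos one; the function name is illustrative only.

```python
import ipaddress


def active_transport_address(addr_a: str, addr_b: str) -> str:
    """Return the transport address that plays the LDP active role.

    RFC 5036 compares the two transport addresses as unsigned integers;
    the higher one opens the TCP connection and sends the first
    Initialization message.
    """
    a = ipaddress.IPv4Address(addr_a)
    b = ipaddress.IPv4Address(addr_b)
    return str(max(a, b))  # IPv4Address objects compare by integer value


print(active_transport_address("172.16.2.2", "172.16.20.20"))  # 172.16.20.20
```

This agrees with the trace, where 172.16.20.20 (XRv) sent the Initialization message to 172.16.2.2.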

(1) When IOS XRv initiates the LDP session, right after the three-way TCP handshake it sends the Initialization message quoted above, with max_pdu 1000. The Juniper developer is looking into this on the Juniper side.

(2) When Junos initiates the LDP session, right after the three-way TCP handshake it sends an Initialization message with max_pdu 4096, and IOS XRv sends a TCP RST back.

Further analysis shows that the trigger for (1) and (2) is actually the same: interoperability issues in Max PDU negotiation.

Thanks!

Ato


09-10-2014 12:44 PM

Hi Ato,

This issue is also being addressed on our side. I opened a bug ID for it, and a fix will be available soon.

https://tools.cisco.com/bugsearch/bug/CSCuq77238

Regards

Harold Ritter, CCIE #4168 (EI, SP)


09-10-2014 03:43 PM

Sounds great, thanks Harold!
