Finesse 12.5 performance improvements - part 1. c10k

- Finesse Failover as a driving force for performance

- Nature of the challenge

- C10k Scale

- Asynchronous request processing

- Finesse Tomcat

- Changes introduced to Finesse web server

- Front Apache Tomcat with Nginx web server

- Nginx performs SSL termination and provides HTTP/2 connectivity to clients.

- Cache static files at Nginx

- Cache possible REST API responses at Nginx

- Enable browser cache of static resources

- Caching of gadget requests

- Summary of Improvements

The browser-based Finesse Agent Desktop 12.5, which ships with the Cisco Unified CCE / CCX contact center solutions, has made massive strides in the performance of its underlying infrastructure, improving its throughput and reliability by an order of magnitude compared to Finesse 11.x and earlier.

This is part one of a series of posts from the engineering team that aims to describe the changes made in the 12.5 release and the resulting improvements.

Finesse Failover as a driving force for performance

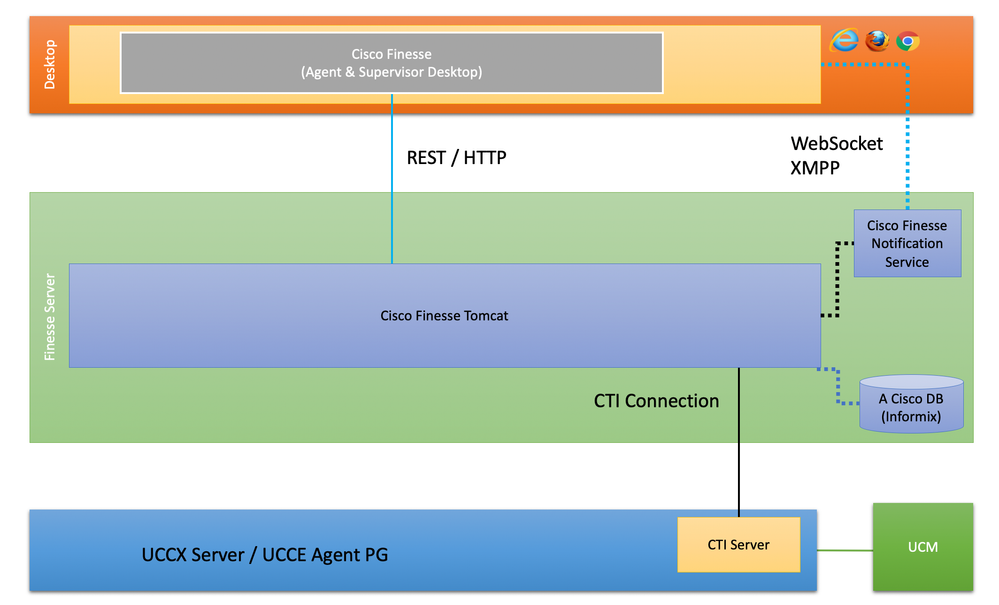

Faster failover is one of the major improvements added in Finesse 12.5. Failover is the process by which the desktop reloads from the alternate Finesse server when it senses a problem with the server it is currently connected to.

Finesse failover involves two major scenarios:

- CTI Failover

A break in the CTI connection shown above causes the Finesse server to go out of service while it connects to the alternate side and re-initializes its in-memory state. The desktop keeps querying both sides (preferring the current side) and ultimately reconnects to whichever Finesse server comes into service. If the Finesse server it is connected to does not come back into service, the desktop has to be reloaded from the alternate Finesse server.

- Desktop Failover

When the browser running the Finesse desktop faces a disruption to the REST / HTTP connection or the XMPP connection shown above, the desktop reloads from an active side and re-initializes its display state.
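The "query both sides, preferring the current side" behaviour described for CTI failover can be sketched roughly as below. This is only an illustration under stated assumptions, not the actual Finesse desktop code: the function name `pick_side`, the `in_service` probe, and the `max_polls` limit are all hypothetical, and a real client would wait between polls.

```python
from typing import Callable, Optional


def pick_side(current: str,
              alternate: str,
              in_service: Callable[[str], bool],
              max_polls: int) -> Optional[str]:
    """Return the first side found in service, or None if neither responds.

    `in_service` is a hypothetical probe (for example, a status request)
    that reports whether the given server is currently in service.
    """
    for _ in range(max_polls):
        # The current side is always checked first, so it wins
        # whenever both sides are in service at the same time.
        for side in (current, alternate):
            if in_service(side):
                return side
    return None


def needs_reload(current: str, chosen: Optional[str]) -> bool:
    """The desktop only has to reload when it ends up on the other side."""
    return chosen is not None and chosen != current
```

With a probe backed by a simple status table, `pick_side("finesse-a", "finesse-b", status.get, 3)` returns `"finesse-a"` whenever that side is in service, and falls back to `"finesse-b"` only when it is not.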

Nature of the challenge

A desktop reload requires all the clients to reload the complete desktop and its resources from the alternate server within a very short window.

This operation is akin to a complete browser relaunch for the desktop and, for a single client, produces the same load as a login operation.

While shift changes and the consequent user logins typically happen over a period of a few minutes, a browser failover generates the entire load at the Finesse server in a much shorter period, comprising static HTTP resource loads and REST API calls from all the clients involved.

This made bringing thousands of clients back into service simultaneously, in the shortest possible time, an exacting challenge.

This requirement therefore became the source of a major list of optimisations made to the Finesse desktop.

C10k Scale

One of the critical challenges faced by a web server is scale. In the early days of the Common Gateway Interface (CGI) web, HTTP servers served each request by spawning a new process, an approach no doubt influenced by the Unix process-spawning philosophy. Once scale requirements grew, this was deemed too inefficient; thread-based request handling replaced it and has been more or less in force for a decade or two.

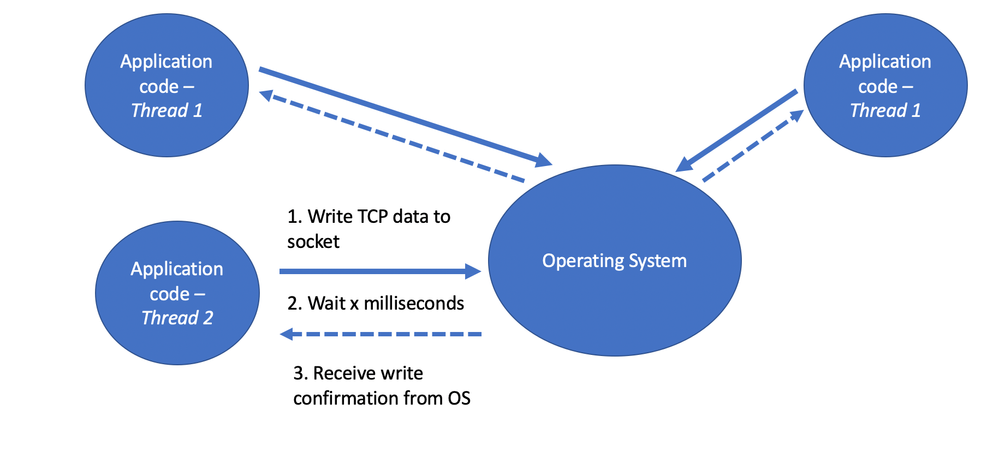

However, this architecture became inadequate beyond a certain scale. The term c10k refers to the challenge of scaling a single web server to handle simultaneous connections from 10,000 clients. Spawning a separate thread for each request becomes untenable at this scale because of the inherent inefficiency of context switching between thread stacks and synchronising between them.

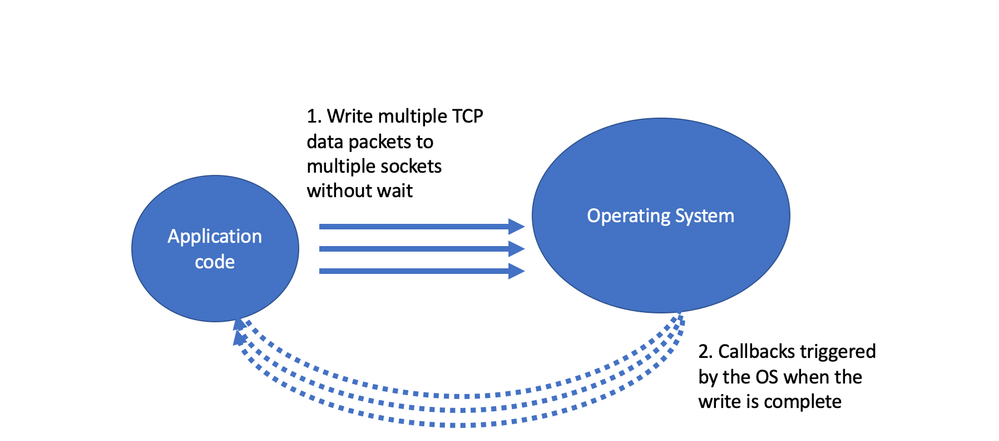

Asynchronous request processing (which was definitely not pioneered by the web industry) was the next family of architectures that web servers adopted to overcome this challenge.

Asynchronous request processing

Asynchronous request processing refers to a style of application code that removes the need for extra threads by using only non-blocking API calls, which never wait. The results of those calls are delivered to the application code through callbacks, to be processed later.

This allows a single thread to run without being blocked and to cater to multiple requests at the same time.
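As a rough illustration of the idea, the sketch below uses Python's `asyncio` to serve many simulated requests from one thread. It is an assumption-laden toy, not how Nginx or Finesse is implemented: `handle_request`, `serve`, and `run_demo` are made-up names, `asyncio.sleep` stands in for non-blocking I/O, and coroutines are used in place of explicit callbacks (the event loop schedules them in a similar way).

```python
import asyncio
import threading
from typing import List, Set, Tuple


async def handle_request(request_id: int, thread_ids: Set[int]) -> str:
    # Record which OS thread is running this handler.
    thread_ids.add(threading.get_ident())
    # Non-blocking wait standing in for I/O such as a backend call.
    # While this handler waits, the event loop runs other handlers.
    await asyncio.sleep(0.1)
    return f"response-{request_id}"


async def serve(n_requests: int) -> Tuple[List[str], Set[int]]:
    thread_ids: Set[int] = set()
    # Start all handlers concurrently; gather returns results in order.
    responses = await asyncio.gather(
        *(handle_request(i, thread_ids) for i in range(n_requests))
    )
    return list(responses), thread_ids


def run_demo(n_requests: int = 1000) -> Tuple[List[str], Set[int]]:
    # A single event loop on the calling thread serves every request.
    return asyncio.run(serve(n_requests))
```

Calling `run_demo(1000)` returns 1000 responses while `thread_ids` contains a single entry, because every handler ran on the same thread; the waits overlap instead of each one occupying a thread of its own.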

Finesse Tomcat

Finesse uses Apache Tomcat as its underlying web server. The Tomcat HTTP connector with the fastest throughput (APR) uses a synchronous style of request processing, and improving on this became a requirement to meet the rate of requests generated during a failover. Although Tomcat does have a non-blocking connector, it handles only the TCP connection establishment phase asynchronously and does not seem able to scale static resource processing in an async manner.

Therefore, Finesse opted to use Nginx since it has an asynchronous / event-based architecture, which was a departure from the thread-based request processing used by Apache Tomcat. Nginx was one of the pioneers of this new web server architecture and has now become one of the leading web servers in the industry.

Changes introduced to Finesse web server

These are the changes we introduced to address the Tomcat scaling issues:

- Front Apache Tomcat with Nginx web server

Apache Tomcat was reconfigured to listen only on localhost over HTTP, and the Nginx server was configured to expose the ports required by the clients.

In tests involving 3000 clients firing all of the login-time requests within a 1-minute window, the Nginx server completed the load successfully, whereas the Apache Tomcat server tried to cope by increasing its thread count and finally ground to a halt because the server could not deal with the number of threads created (around 5000 threads, a state from which the test never recovered).

This failure is essentially the crux of the problem faced by threaded synchronous architectures.

- Nginx performs SSL termination and provides HTTP/2 connectivity to clients.

Once Apache Tomcat was made local, it no longer needed to process SSL requests. SSL termination was taken over by Nginx, which decoupled client-facing protocol support from the underlying Tomcat server.

Deploying Nginx as a proxy to Tomcat thus enabled HTTP/2 support, which would otherwise have required a Tomcat upgrade. If you are not familiar with the benefits of HTTP/2, here is why everyone should be using HTTP/2.

- Cache static files at Nginx

Nginx's cache flexibility allowed Finesse to hand the responsibility for serving static HTML resources over to the Nginx server. Once this is done, the REST API requests that drive the core functionality of Finesse are the only requests that the Finesse Tomcat needs to handle.

Relieving Tomcat of the large number of static resource requests reduces the load on the system (far fewer threads and lower CPU consumption) and provides better throughput to the REST request layer.

- Cache possible REST API responses at Nginx

As part of the effort to reduce the number of requests that reach Tomcat, responses to the API calls that retrieve configuration were cached at Nginx for a very brief period of time. This ensured that once a single client had fetched this data, requests from all other agents of the same team retrieving the same data would not hit Apache Tomcat again.

This reduced the load on the server by a factor of 20 or 30, depending on the team size, and reduced the impact of desktop initialisations to a large extent. Since this data is cached only to cater to logins, it is cached for only a few seconds in a memory-backed file system, which removes any disk impact from the load.

- Enable browser cache of static resources

Browsers can cache static files and do not request the full files again if the appropriate cache-control headers are present. Nginx supports cache-control header configuration, which makes it easy to control this behavior. We pre-load all the static files from the secondary site, and unless the browser cache is cleared, these resources are never pulled again.

This reduces the number of requests hitting the Finesse web server, and those that do hit the server are served by the Nginx cache.

- Caching of gadget requests

Finesse gadget functionality is structured as multiple iFrames created within the desktop's page area, each loading a specific gadget's HTML. Once the Nginx-based HTTP response cache was available, caching semi-constructed gadget HTML for XML gadgets became possible, and all the stock gadgets were moved to XML-based gadgets.

Gadget HTML creation by the Shindig web server was expensive due to the parsing and caching it performs to modify the script and CSS includes so that scripts correctly refer to the Finesse server. Performing this repeatedly for all agents is a gross waste of the server's capability. The result is now cached efficiently, with the aid of some clever scripts at the desktop that fill in the few remaining dynamic parts of the response.

Offloading this processing removed another significant load. If each desktop contains 5 gadgets, that is a saving of 20,000 VERY expensive requests.

Please note, though, that this optimisation is available only for static gadget URIs. To make use of it, all stock gadgets that ship with the solution have been converted to use XML-based URIs.

CCE deployments performing upgrades need to run a CLI command to upgrade their LiveData gadget URIs after CUIC is upgraded.
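The short-lived REST response cache described in the list above can be illustrated with a small sketch. It is a toy model under stated assumptions, not the Nginx configuration Finesse ships: the class `ShortLivedCache`, the helper `simulate_team_login`, the 5-second lifetime, and the URI `/team/42/config` are all hypothetical, and a Python dictionary stands in for the proxy cache.

```python
import time
from typing import Callable, Dict, Tuple


class ShortLivedCache:
    """Caches one response per key for ttl_seconds, then refetches."""

    def __init__(self,
                 fetch: Callable[[str], str],
                 ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch          # stands in for a request to Tomcat
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so expiry can be tested
        self._entries: Dict[str, Tuple[float, str]] = {}
        self.backend_hits = 0        # how many requests reached the backend

    def get(self, key: str) -> str:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]          # fresh entry: served from the cache
        # Missing or expired entry: go to the backend and store the result.
        self.backend_hits += 1
        value = self._fetch(key)
        self._entries[key] = (now, value)
        return value


def simulate_team_login(team_size: int) -> int:
    """Every agent of one team requests the same configuration URI."""
    cache = ShortLivedCache(fetch=lambda uri: f"config for {uri}",
                            ttl_seconds=5.0)
    for _ in range(team_size):
        cache.get("/team/42/config")  # hypothetical URI
    return cache.backend_hits
```

In this model a team of 30 agents logging in within the cache lifetime produces a single backend request instead of 30, which is the kind of team-size-dependent reduction described above.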

Summary of Improvements

- Connection handling is vastly improved, i.e. c10k compliance, HTTP/2, and SSL termination. The HTTP request handling infrastructure is vastly improved.

- Reduction in CPU consumption from

  - SSL offloading

  - reduced request processing at Tomcat

- Reduction in the number of HTTP calls due to

  - efficient static resource caching

  - REST response caching

  - gadget HTML response caching

- Resiliency

Sudden increases in load, which would break the old Tomcat server, can now be handled effectively thanks to the improvements in connection handling. Finesse is primarily a web application, and the improved availability of its HTTP processing improves its resiliency during peak periods and under load.

- Security

Since Nginx acts as a physical layer of separation, much like a reverse proxy, between Tomcat and the HTTP request termination point, it provides an additional level of security by shielding Tomcat and enforcing limits before a request is evaluated by Tomcat.

Application code is tightly coupled with the Apache Tomcat server due to its use of the Tomcat-provided JEE interfaces. However, no such restriction applies to the external proxy.

This allows the product to react quickly, since the client-facing web server layer becomes easily upgradeable, which is a big plus from a security perspective. Additional security checks can also be enabled at Nginx if desired, using security modules such as ModSecurity.

Overall, this change has provided significant gains from all the above perspectives and enables new opportunities due to the flexibility it provides to the application stack.
